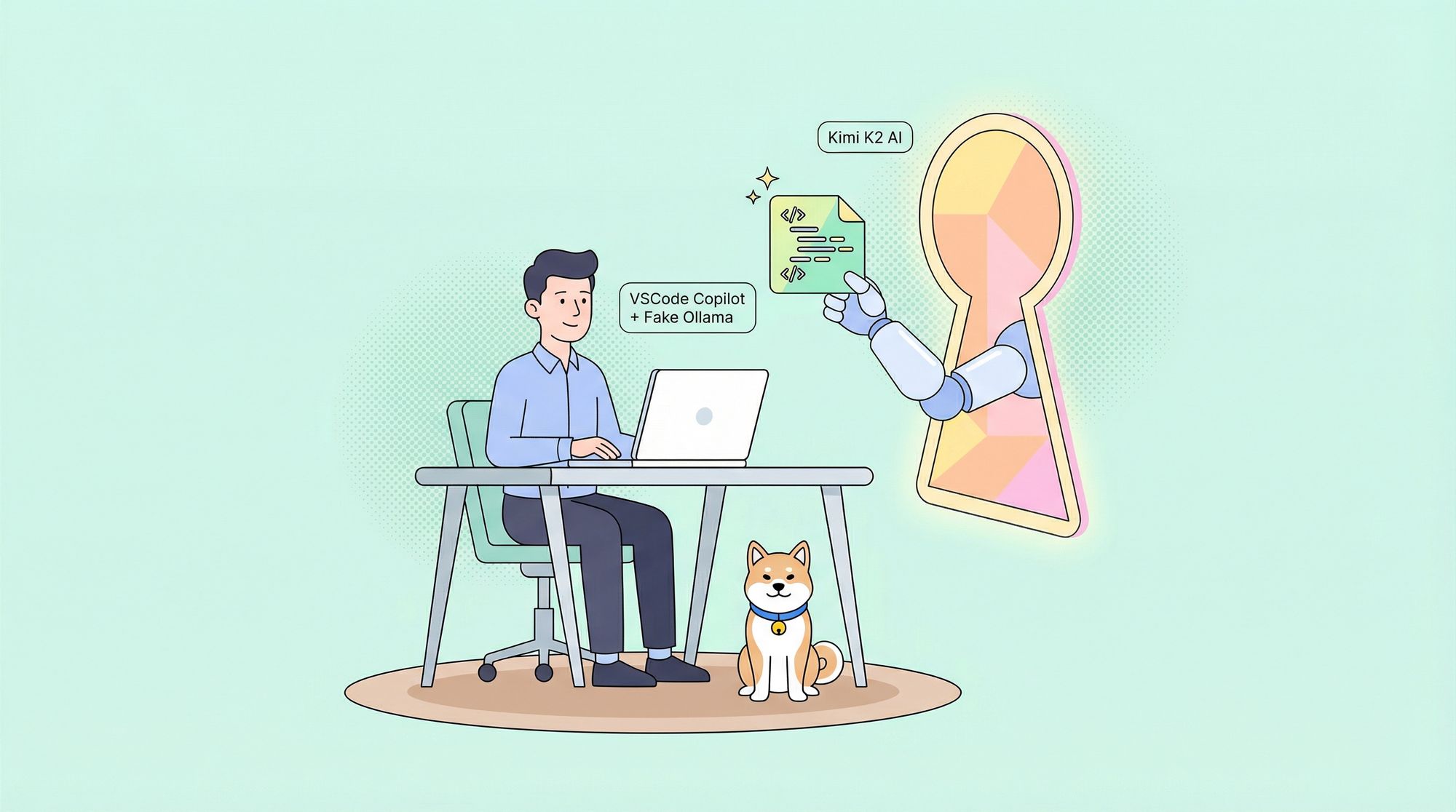

AI coding assistants have become essential for fast, high-quality software development. GitHub Copilot is a leader in this space for Visual Studio Code users, but what if Copilot Chat could run on one of the world’s most advanced language models, Kimi K2 from Moonshot AI? This guide shows API developers and engineering teams how to connect Kimi K2 to VSCode Copilot using a small proxy tool: Fake Ollama.

By following this tutorial, you’ll:

- Use Kimi K2, a one-trillion-parameter model, as the model behind Copilot Chat.

- Enable custom, enterprise-grade AI in your coding workflow.

- Future-proof your setup for local or cloud-based LLMs.

💡 Need robust API testing and documentation? Apidog generates beautiful API documentation, empowers collaborative teams with an all-in-one platform, and is a cost-effective Postman alternative!

Why Connect Kimi K2 to VSCode Copilot?

Meet Kimi K2: Moonshot AI’s Flagship Model

Kimi K2 is Moonshot AI’s flagship large language model. It uses a Mixture-of-Experts (MoE) architecture with one trillion total parameters, of which 32 billion are activated for each token.
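Only 32 billion of the one trillion parameters (32 / 1,000 = 3.2%) do work for any given token, because a router sends each token to a small number of experts. The toy sketch below shows generic top-k routing in plain Python. It is not Kimi K2's actual router; the function names, the expert count, and `k` are illustrative assumptions.

```python
import math

def top_k_route(scores, k):
    """Pick the k highest-scoring experts and renormalize their softmax weights.

    scores: router logits for one token, one number per expert.
    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    # Numerically stable softmax over all experts.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep only the k most probable experts; the others are never evaluated.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in chosen)
    return [(i, probs[i] / kept) for i in chosen]

def moe_layer(x, experts, scores, k=2):
    """Weighted sum of the outputs of only the selected experts for input x."""
    return sum(w * experts[i](x) for i, w in top_k_route(scores, k))
```

With, say, eight experts and `k=2`, only two expert functions run per token; scaling the same idea up is how an MoE model keeps most of its weights idle on each step.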

Kimi K2 excels in:

- Coding: Top-tier results on LiveCodeBench and SWE-bench.

- Reasoning: Strong logical and analytical power for complex problem-solving.

- Long-context understanding: Handles up to 128,000 tokens—ideal for large codebases, API schemas, and technical docs.
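To get a rough feel for whether a set of source files fits in a 128,000-token window, you can estimate tokens from character counts. The sketch below assumes about 4 characters per token, a common rule of thumb that is only approximate (code often tokenizes differently, and Kimi K2's tokenizer is not modeled here). The `reserve_for_reply` budget and the helper names are hypothetical.

```python
from pathlib import Path

CONTEXT_WINDOW = 128_000  # Kimi K2's context length as stated above
CHARS_PER_TOKEN = 4       # rough heuristic, not the model's real tokenizer

def estimate_tokens(text: str) -> int:
    """Crude token estimate: character count divided by an assumed average, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division

def fits_in_context(paths, reserve_for_reply=8_000):
    """Return (estimated_tokens, fits) for the combined contents of the given files."""
    total = sum(
        estimate_tokens(Path(p).read_text(encoding="utf-8", errors="replace"))
        for p in paths
    )
    return total, total + reserve_for_reply <= CONTEXT_WINDOW
```

For example, a 400-character file estimates to 100 tokens, while 500,000 characters (about 125,000 tokens) would not leave room for an 8,000-token reply.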

Model options:

- Kimi-K2-Base: For researchers and advanced customization.

- Kimi-K2-Instruct: Fine-tuned for chat, code, and agentic tasks—perfect as a Copilot engine.

In this guide, we’ll use the Instruct model through an API.

What Is VSCode Copilot (and Why Use Custom Models)?

VSCode Copilot, built by GitHub in partnership with OpenAI, accelerates development with code suggestions, explanations, and refactoring help. Recent updates to Copilot Chat let you bring your own model, including any model served through the Ollama API, which opens the door to custom choices like Kimi K2.

What Is Fake Ollama (and Why Does It Matter)?

Fake Ollama is an open-source server that mimics the Ollama API. Many dev tools (including VSCode Copilot) natively support Ollama endpoints. With Fake Ollama, you can:

- Point Copilot to a local server.

- Translate those requests to an OpenAI-compatible LLM API, including Kimi K2 via OpenRouter.

- Experiment with local models or advanced APIs, all from VSCode.

This flexibility lets your team control which model powers AI coding assistance—crucial for enterprises with security or performance requirements.
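The core idea of a proxy like this is a format translation: accept a request shaped like Ollama's `/api/chat`, forward it as an OpenAI-style chat completion, and convert the answer back. The sketch below is a hypothetical illustration of that mapping for non-streaming calls only; it is not Fake Ollama's source code, and a real proxy must also handle streaming chunks and extra response fields such as `created_at`.

```python
def ollama_chat_to_openai(body: dict, upstream_model: str) -> dict:
    """Map an Ollama /api/chat request body to an OpenAI-style /chat/completions body."""
    payload = {
        "model": upstream_model,  # e.g. the MODEL_NAME the proxy is configured with
        "messages": [
            {"role": m["role"], "content": m["content"]} for m in body.get("messages", [])
        ],
        "stream": bool(body.get("stream", True)),  # Ollama streams unless told otherwise
    }
    # Ollama nests sampling settings under "options"; copy a few common ones across.
    options = body.get("options", {})
    for key in ("temperature", "top_p"):
        if key in options:
            payload[key] = options[key]
    return payload

def openai_to_ollama_chat(resp: dict, model: str) -> dict:
    """Map a non-streaming OpenAI chat completion back to Ollama's /api/chat shape."""
    choice = resp["choices"][0]
    return {
        "model": model,
        "message": {"role": "assistant", "content": choice["message"]["content"]},
        "done": True,
        "done_reason": choice.get("finish_reason", "stop"),
    }
```

Options with no OpenAI equivalent (such as Ollama's `num_ctx`) are simply dropped in this sketch.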

Prerequisites

Before you begin, make sure you have:

- Visual Studio Code (latest version recommended)

- VSCode Copilot extension (active subscription)

- Python (3.8+)

- Git (for cloning repositories)

- Kimi K2 API key (from Moonshot AI or OpenRouter—details below)

Step-by-Step: Integrate Kimi K2 with VSCode Copilot

1. Get Your Kimi K2 API Key

You can access Kimi K2 through:

- Moonshot AI Platform: Sign up on Moonshot’s site for direct API access.

- OpenRouter: Highly recommended for its unified API for multiple LLMs, including Kimi K2. Switching models is easy—ideal for dev teams iterating fast.

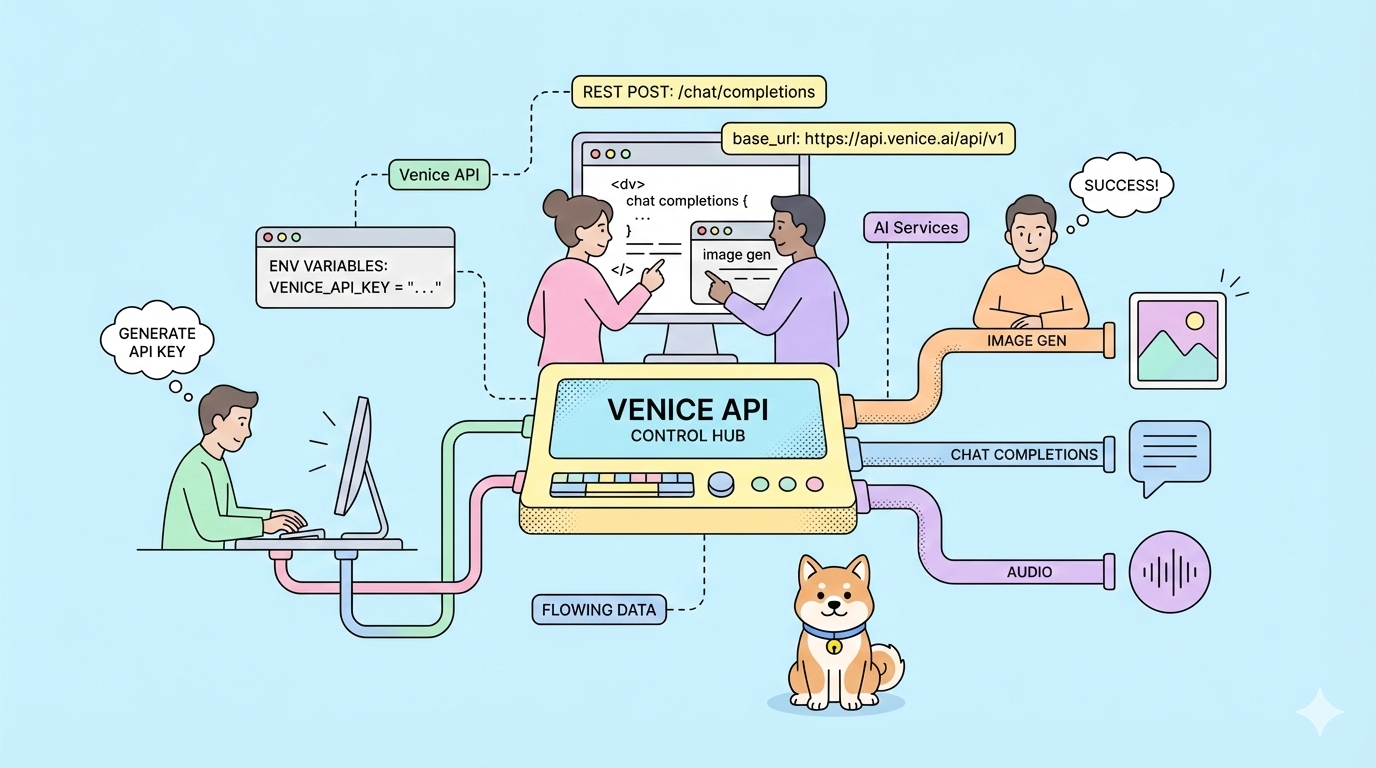

Example: Using OpenRouter with the OpenAI Python SDK

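```python
import os

from openai import OpenAI

# OpenRouter exposes an OpenAI-compatible API, so the standard OpenAI SDK works
# once base_url points at OpenRouter. The key is read from an environment
# variable here (OPENROUTER_API_KEY is just a conventional name) to avoid
# hard-coding it.
client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key=os.environ.get("OPENROUTER_API_KEY", "YOUR_OPENROUTER_API_KEY"),
)

response = client.chat.completions.create(
    model="moonshotai/kimi-k2",  # OpenRouter's model ID for Kimi K2
    messages=[
        {"role": "user", "content": "Write a simple Python function to calculate the factorial of a number."},
    ],
)

print(response.choices[0].message.content)
```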

Keep your API key ready for configuration in later steps.

2. Clone and Set Up Fake Ollama

In your terminal:

```bash
git clone https://github.com/spoonnotfound/fake-ollama.git
cd fake-ollama
pip install -r requirements.txt
```

3. Configure Fake Ollama for Kimi K2

Create a .env file in the fake-ollama directory with these lines (replace with your actual API key):

```
OPENAI_API_BASE=https://openrouter.ai/api/v1
OPENAI_API_KEY=YOUR_OPENROUTER_API_KEY
MODEL_NAME=moonshotai/kimi-k2
```

This setup ensures Fake Ollama forwards requests to OpenRouter, authenticates your API key, and targets the correct model.
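A typo or a leftover placeholder in `.env` is an easy way to get confusing errors later. The sketch below is a hypothetical pre-flight check using only the standard library: it parses simple `KEY=VALUE` lines and flags missing or placeholder values for the three settings above. Fake Ollama's own loading logic may differ from this simplified parser.

```python
from pathlib import Path

REQUIRED_KEYS = ("OPENAI_API_BASE", "OPENAI_API_KEY", "MODEL_NAME")

def load_env(path=".env") -> dict:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    env = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)  # split on the first "=" only
        env[key.strip()] = value.strip().strip('"').strip("'")
    return env

def check_env(env: dict) -> list:
    """Return a list of problems; an empty list means the settings look usable."""
    problems = [f"missing {k}" for k in REQUIRED_KEYS if not env.get(k)]
    if env.get("OPENAI_API_KEY") == "YOUR_OPENROUTER_API_KEY":
        problems.append("OPENAI_API_KEY is still the placeholder")
    base = env.get("OPENAI_API_BASE", "")
    if base and not base.startswith(("http://", "https://")):
        problems.append("OPENAI_API_BASE should be an http(s) URL")
    return problems
```

Running `print(check_env(load_env()))` from the `fake-ollama` directory should print `[]` once the real key is in place.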

4. Run the Fake Ollama Server

Start the server:

```bash
python main.py
```

You should see confirmation that Fake Ollama is running (default: http://localhost:11434). This is the endpoint Copilot will use.
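Before switching VS Code over, it can help to confirm that the proxy answers the way Ollama does. The sketch below uses only the Python standard library and assumes Fake Ollama implements Ollama's native `GET /api/tags` (list models) and `POST /api/chat` endpoints; check the project's README if either returns an error. The function names are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434"  # Fake Ollama's default address from the step above

def list_models(base_url=BASE_URL, timeout=5):
    """Return model names from Ollama's GET /api/tags endpoint."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        data = json.load(resp)
    return [m["name"] for m in data.get("models", [])]

def chat_once(prompt, model, base_url=BASE_URL, timeout=60):
    """Send one non-streaming Ollama /api/chat request and return the reply text."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON object instead of a chunk stream
    }).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]
```

With the server running, `list_models()` should include your configured model, and `chat_once("Say hello in one word.", "moonshotai/kimi-k2")` should return a reply from Kimi K2. A connection error usually means the server isn't running or is listening on a different port.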

5. Point VSCode Copilot to Fake Ollama

- Open VSCode and go to the Copilot Chat view.

- In the chat input, type `/` and choose Select a Model.

- Click Manage Models...

- Choose Ollama as the AI provider.

- Enter the server URL: `http://localhost:11434`.

- Select the model: `moonshotai/kimi-k2` (or your configured model).

Copilot Chat now uses Kimi K2 whenever it is selected in the model picker. Start a chat and try it on coding, reasoning, and long-context tasks.

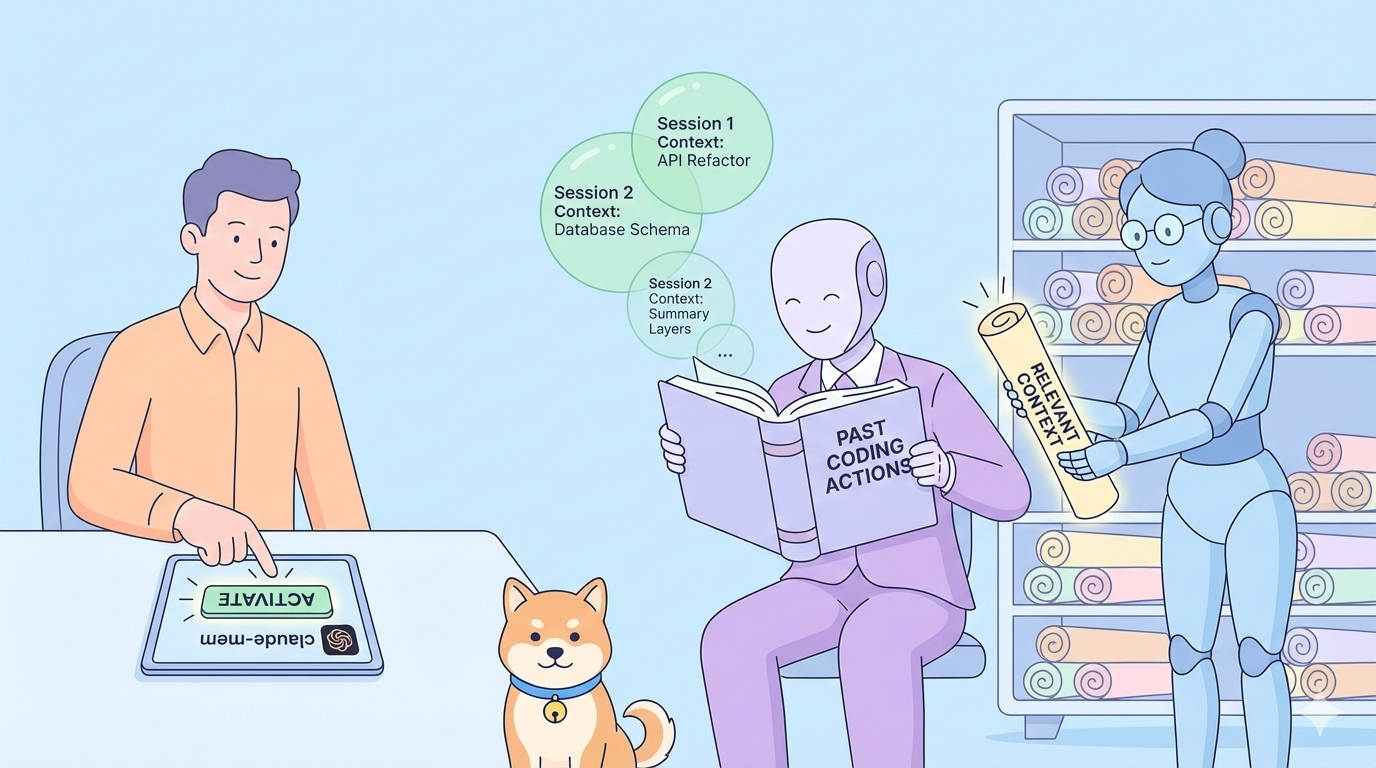

Advanced: Running Local LLMs via Fake Ollama

Fake Ollama isn’t limited to API-based models. You can use it as a bridge to locally hosted LLMs for full data control:

- vLLM: High-throughput open-source LLM inference and serving engine.

- llama.cpp: Lightweight C/C++ inference for LLaMA-family and many other models, well suited to CPUs and resource-limited environments.

- ktransformers: Supports recent models like Kimi K2, enabling quantized local runs for enterprises with strict privacy needs.

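To use one of these, set OPENAI_API_BASE in Fake Ollama’s .env to your local server’s OpenAI-compatible endpoint instead of OpenRouter, and set MODEL_NAME to the model name that server exposes. This flexibility is valuable for organizations with sensitive data or custom hardware.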
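Switching from OpenRouter to a local backend comes down to rewriting the same three `.env` settings. The hypothetical helper below generates that file using the usual default addresses of vLLM's OpenAI-compatible server (`vllm serve`, port 8000) and llama.cpp's `llama-server` (port 8080). ktransformers is left out because its address depends on how you launch it; add your own entry. `MODEL_NAME` must match the name your local server reports, and the placeholder API key assumes the server was started without key checking.

```python
from pathlib import Path

# Usual default OpenAI-compatible base URLs; adjust if you changed host or port.
LOCAL_BACKENDS = {
    "vllm": "http://localhost:8000/v1",       # `vllm serve` default port
    "llama.cpp": "http://localhost:8080/v1",  # `llama-server` default port
}

def render_env(backend: str, model_name: str, api_key: str = "local-placeholder") -> str:
    """Return .env text pointing Fake Ollama's OPENAI_API_BASE at a local backend."""
    base = LOCAL_BACKENDS[backend]  # raises KeyError for unknown backends
    return (
        f"OPENAI_API_BASE={base}\n"
        f"OPENAI_API_KEY={api_key}\n"  # only checked if the server was started with a key
        f"MODEL_NAME={model_name}\n"
    )

def write_env(text: str, directory: str = ".") -> Path:
    """Write the .env file into the given directory (e.g. the fake-ollama checkout)."""
    path = Path(directory) / ".env"
    path.write_text(text, encoding="utf-8")
    return path
```

After rewriting `.env`, restart `python main.py` so the new settings take effect.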

Why API Developers and Teams Choose Apidog

A powerful API workflow is key to leveraging modern AI tools and integrating with advanced models like Kimi K2. Apidog provides:

- Beautiful, auto-generated API documentation for internal or public sharing.

- All-in-one collaboration: design, test, and maintain APIs as a team.

- An affordable Postman replacement, trusted by engineering teams worldwide.

Conclusion

Integrating Kimi K2 with VSCode Copilot via Fake Ollama gives API engineers and backend teams a flexible, cutting-edge AI coding assistant tailored to your needs. Whether you’re leveraging cloud APIs for the latest LLMs or running models locally for privacy, this setup keeps your workflow fast, adaptable, and future-ready.

For enhanced API testing, seamless documentation, and collaborative API management, Apidog is the platform of choice for modern developer teams.

Happy coding!