Want an integrated, All-in-One platform for your Developer Team to work together with maximum productivity?

Apidog delivers all your demands, and replaces Postman at a much more affordable price!

Unveiling Kimi-K2-Base: The Foundation for Open Agentic Intelligence

Moonshot AI has released a new open-source model that promises not just to answer questions but to actively perform tasks. This is Kimi K2, a state-of-the-art Mixture-of-Experts (MoE) model that pushes the boundaries of what open-source AI can achieve. At the heart of this release lies its foundational pillar: Kimi-K2-Base. It is a pre-trained foundation model aimed at researchers, developers, and builders who want full control over fine-tuning and custom solutions. With one trillion total parameters, of which 32 billion are activated per token, Kimi-K2-Base provides the raw material for the next generation of autonomous AI systems.

The Technical Architecture of Kimi-K2-Base

To understand the power of Kimi-K2-Base, one must first look under the hood at its architecture and the innovations that made its creation possible. It is a Mixture-of-Experts (MoE) model, a design that allows for massive scale without incurring proportionally massive computational costs during inference. While the model has a total of 1 trillion parameters, each token activates only 32 billion of them (about 3.2%), striking a balance between immense capacity and practical efficiency.

The model’s specifications, detailed by Moonshot AI, are formidable. It features 61 layers, including one dense layer, an attention hidden dimension of 7168, and a 128K-token context length, enabling it to process large amounts of information in a single pass. The MoE architecture is composed of 384 distinct "experts," with a router selecting 8 of them for each token, alongside a single shared expert that is always active. This dynamic routing lets different experts specialize in different kinds of input while keeping the per-token compute fixed.
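The routing described above can be sketched in a few lines of Python. This is a toy illustration, not Moonshot AI's implementation: the expert count (384) and the top-k value (8) come from the specification above, while the softmax gating over the selected experts, the scalar toy experts, and the names `route_token` and `moe_forward` are assumptions made for the example.

```python
import math
import random

NUM_EXPERTS = 384  # routed experts, from the published spec
TOP_K = 8          # experts selected per token, from the published spec


def route_token(router_logits, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts.

    Assumption: weights are a softmax over the selected logits. The real
    gating function may differ.
    """
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:top_k]
    peak = max(router_logits[i] for i in top)  # subtract for numerical stability
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, router_logits, experts, shared_expert):
    """Combine the always-on shared expert with the weighted routed experts."""
    out = shared_expert(x)
    for idx, weight in route_token(router_logits):
        out += weight * experts[idx](x)
    return out


# Toy scalar "experts": each one just scales its input differently.
experts = [lambda x, s=i: x * (1.0 + s / NUM_EXPERTS) for i in range(NUM_EXPERTS)]
shared_expert = lambda x: 0.5 * x

rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # hypothetical router scores
routed = route_token(logits)
output = moe_forward(2.0, logits, experts, shared_expert)
print(f"{len(routed)} of {NUM_EXPERTS} routed experts ran "
      f"({len(routed) / NUM_EXPERTS:.1%}); output = {output:.3f}")
```

Only 8 of the 384 routed experts run for a given token, roughly 2.1% of them, which is why the active parameter count stays far below the total.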

However, the true secret sauce behind Kimi-K2-Base is the MuonClip optimizer. Scaling language models to this magnitude presents enormous challenges, chief among them training instability. As models grow, they often suffer from "exploding attention logits," a problem where the query-key scores inside the attention mechanism grow without bound and derail training. While the previously developed Muon optimizer was more token-efficient than the standard AdamW, it was also more prone to this instability. To solve this, Moonshot AI developed MuonClip, a technique that keeps training stable at trillion-parameter scale.

MuonClip works by directly rescaling the weight matrices of the query and key projections after each optimizer update. This technique, called qk-clip, controls the scale of the attention logits at their source: because each logit is a dot product of a query and a key, shrinking the two projections shrinks the logits with them. It proved effective enough that Moonshot AI was able to pre-train Kimi-K2-Base on 15.5 trillion tokens of data with zero training spikes. This is not just a technical achievement; it is the core enabler that makes a stable, trillion-parameter open-source model like Kimi-K2-Base a reality.
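To make the idea concrete, here is a minimal pure-Python sketch of a qk-clip style rescaling step. It is an illustration under stated assumptions rather than Moonshot AI's code: the rule "scale so the largest logit does not exceed a threshold", the `threshold` value, the even split `alpha=0.5` between the two matrices, and the helper names are all hypothetical, and the usual 1/sqrt(d) attention scaling is omitted for brevity.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def max_attention_logit(W_q, W_k, inputs):
    """Largest query-key dot product over all token pairs in `inputs`."""
    queries = [matvec(W_q, x) for x in inputs]
    keys = [matvec(W_k, x) for x in inputs]
    return max(sum(a * b for a, b in zip(q, k)) for q in queries for k in keys)


def qk_clip(W_q, W_k, inputs, threshold, alpha=0.5):
    """Rescale W_q and W_k so the largest logit is at most `threshold`.

    Assumed rule: eta = min(threshold / max_logit, 1). W_q is scaled by
    eta**alpha and W_k by eta**(1 - alpha), so every logit is scaled by eta.
    """
    max_logit = max_attention_logit(W_q, W_k, inputs)
    if max_logit <= threshold:
        return W_q, W_k, 1.0  # logits already in range: leave weights alone
    eta = threshold / max_logit
    q_scale, k_scale = eta ** alpha, eta ** (1.0 - alpha)
    new_q = [[w * q_scale for w in row] for row in W_q]
    new_k = [[w * k_scale for w in row] for row in W_k]
    return new_q, new_k, eta


# Toy 2x2 projections and two token vectors (hypothetical values).
W_q = [[3.0, 0.0], [0.0, 3.0]]
W_k = [[2.0, 0.0], [0.0, 2.0]]
tokens = [[1.0, 2.0], [2.0, 1.0]]

before = max_attention_logit(W_q, W_k, tokens)
W_q_clipped, W_k_clipped, eta = qk_clip(W_q, W_k, tokens, threshold=10.0)
after = max_attention_logit(W_q_clipped, W_k_clipped, tokens)
print(f"max logit before = {before:.1f}, eta = {eta:.3f}, after = {after:.1f}")
```

Because the fix is applied to the weights themselves rather than to the logits at run time, the model that comes out of training needs no special handling at inference.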

The Agentic Promise of Kimi-K2-Base

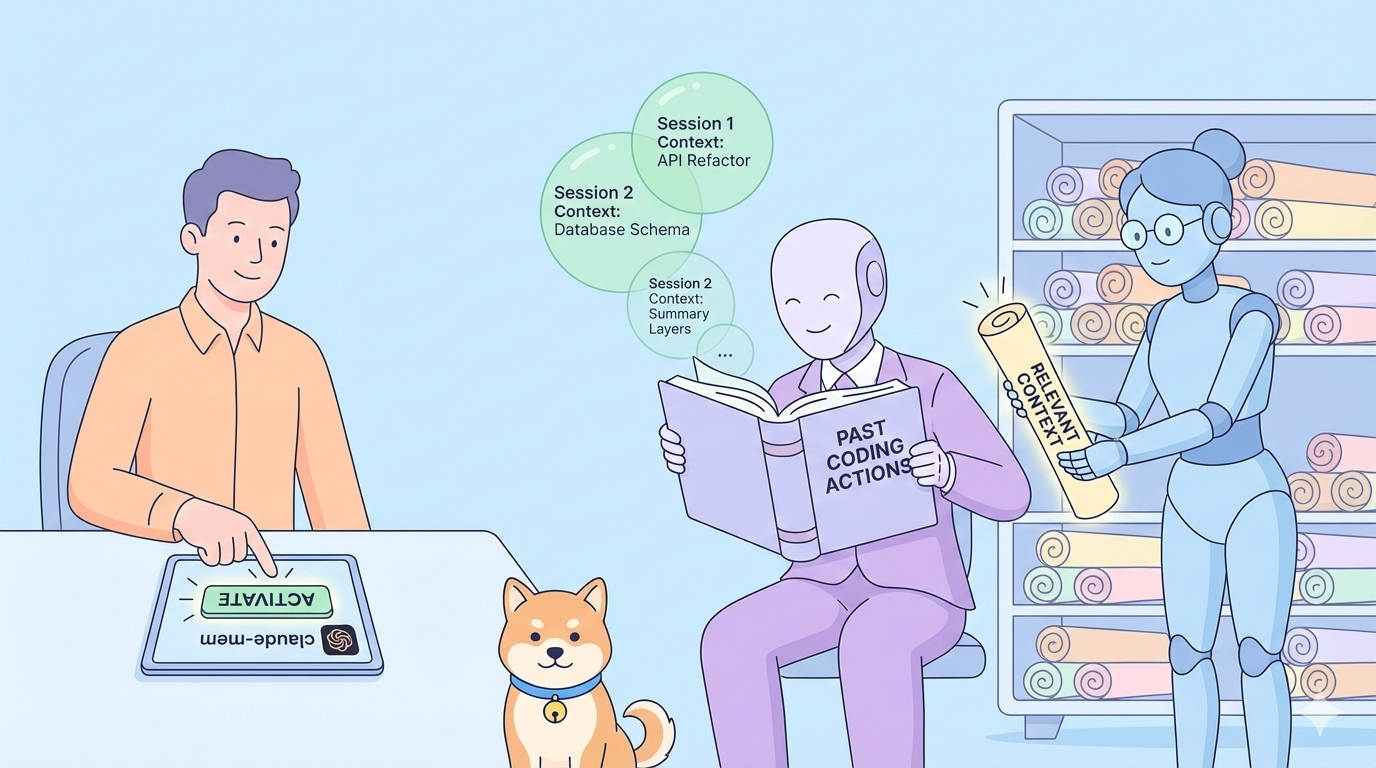

Moonshot AI has positioned Kimi K2 not as a simple chatbot, but as a platform for "Open Agentic Intelligence." An agentic model is one that does not just passively provide information but actively takes steps to accomplish a goal. It can use tools, execute code, and orchestrate complex workflows. Kimi-K2-Base is the foundation on which this capability is built.

This agentic prowess is built on two pillars. The first is Large-Scale Agentic Data Synthesis. A model needs vast amounts of high-quality examples to learn how to use tools effectively. Moonshot AI developed a pipeline that simulates real-world scenarios involving hundreds of domains and thousands of tools. In these simulations, AI agents are given tasks and sets of tools, and their interactions are recorded. An LLM judge then evaluates these interactions against a rubric, filtering out all but the highest-quality examples to be used as training data. This rigorous, scalable process is how the Kimi K2 family acquires its understanding of tool use.
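The judge-and-filter step can be pictured with a small sketch. Everything here is hypothetical and chosen for illustration: the `Trajectory` record, the two-item rubric, the `min_score` cutoff, and above all `toy_judge`, which is a rule-based stand-in for what the article describes as an LLM judge.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Trajectory:
    """One recorded agent interaction (hypothetical schema)."""
    task: str
    tool_calls: List[str] = field(default_factory=list)
    final_answer: str = ""


def filter_trajectories(trajectories: List[Trajectory],
                        judge: Callable[[Trajectory], float],
                        min_score: float) -> List[Trajectory]:
    """Keep only trajectories the judge scores at or above min_score."""
    return [t for t in trajectories if judge(t) >= min_score]


def toy_judge(t: Trajectory) -> float:
    """Stand-in for an LLM judge: a two-item rubric worth 0.5 points each."""
    score = 0.0
    if t.tool_calls:             # rubric item 1: the agent actually used a tool
        score += 0.5
    if t.final_answer.strip():   # rubric item 2: the agent produced an answer
        score += 0.5
    return score


candidates = [
    Trajectory("convert 3 km to miles", ["unit_converter(3, 'km', 'mi')"], "1.86 miles"),
    Trajectory("convert 3 km to miles", [], "about 2 miles"),
    Trajectory("convert 3 km to miles", ["unit_converter(3, 'km', 'mi')"], ""),
]
kept = filter_trajectories(candidates, toy_judge, min_score=1.0)
print(len(kept), "of", len(candidates), "trajectories kept")
```

In a real pipeline the judge would be a model call and the rubric far richer, but the shape stays the same: generate many trajectories, score each one, and keep only the top slice as training data.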

The second pillar is General Reinforcement Learning (RL). Learning from interaction is critical for surpassing the limitations of static datasets. The key challenge lies in applying RL to tasks where success is not easily verifiable, such as writing a comprehensive report, as opposed to verifiable tasks like solving a math problem. Moonshot AI’s system uses a self-judging mechanism where the model acts as its own critic, providing scalable feedback for these non-verifiable tasks. This critic is, in turn, continuously improved using data from tasks with verifiable rewards, ensuring its judgments remain accurate and aligned with desired outcomes.
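A rough sketch of how such a reward setup might be wired together follows. It is a simplification under assumptions, not Moonshot AI's system: `reward`, `critic_agreement`, the 0.5 cutoff, and the deliberately naive `toy_critic` are hypothetical names and values. The point is only to show the two paths (a checker where one exists, the critic otherwise) and how verifiable tasks can be used to measure the critic.

```python
from typing import Callable, List, Optional, Tuple

Verifier = Callable[[str], bool]       # exact checker for a verifiable task
Critic = Callable[[str, str], float]   # model-as-judge score in [0, 1]


def reward(prompt: str, response: str, critic: Critic,
           verifier: Optional[Verifier] = None) -> float:
    """Use the verifier when the task has one; otherwise fall back to the critic."""
    if verifier is not None:
        return 1.0 if verifier(response) else 0.0
    return critic(prompt, response)


def critic_agreement(samples: List[Tuple[str, str, Verifier]],
                     critic: Critic, cutoff: float = 0.5) -> float:
    """Fraction of verifiable samples where the critic agrees with the verifier."""
    hits = 0
    for prompt, response, verifier in samples:
        critic_says_good = critic(prompt, response) >= cutoff
        if critic_says_good == verifier(response):
            hits += 1
    return hits / len(samples)


def toy_critic(prompt: str, response: str) -> float:
    """Naive stand-in critic: likes any non-empty response."""
    return 0.9 if response.strip() else 0.0


is_four = lambda r: r.strip() == "4"
is_nine = lambda r: r.strip() == "9"
verifiable = [
    ("2 + 2 = ?", "4", is_four),
    ("2 + 2 = ?", "5", is_four),   # wrong answer the naive critic still likes
    ("3 * 3 = ?", "9", is_nine),
]

agreement = critic_agreement(verifiable, toy_critic)
report_reward = reward("Write a short report", "Draft text", toy_critic)
print(f"critic agreement on verifiable tasks: {agreement:.2f}")
print(f"reward for a non-verifiable task: {report_reward}")
```

Here the agreement score falls short of 1.0 because the toy critic approves a wrong answer; disagreements of that kind are the signal one could use to keep improving the critic.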

Kimi-K2-Base is the potent, unrefined foundation beneath this training pipeline, ready for developers to harness for their own specific agentic applications.

The Exceptional Performance Benchmarks of Kimi-K2-Base

A foundational model is only as good as its performance, and Kimi-K2-Base delivers strong results across a wide array of industry-standard benchmarks. When compared to other leading open-source base models like DeepSeek-V3-Base, Qwen2.5-72B, and Llama 4 Maverick, Kimi-K2-Base consistently demonstrates superior or highly competitive performance, making it a powerful starting point for custom AI projects.

In general reasoning and knowledge tasks, the model excels. On the widely used MMLU benchmark, it achieves a score of 87.8, outperforming its peers. This trend continues across more challenging variants like MMLU-Pro (69.2) and specialized knowledge tests like GPQA-Diamond and SuperGPQA, showcasing its broad understanding.

Its capabilities in coding and mathematics are particularly noteworthy. On the MATH benchmark, it scores 70.2, and on GSM8K, it reaches 92.1, indicating a strong grasp of logical and mathematical reasoning. For developers, its performance on coding benchmarks is a significant draw. It achieves 80.3 on EvalPlus, substantially higher than the compared models, and a strong 26.3 Pass@1 on the challenging LiveCodeBench v6. These results confirm that Kimi-K2-Base is not just a generalist but also a highly capable model for specialized technical domains.
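For readers unfamiliar with the Pass@1 metric, the sketch below shows the unbiased pass@k estimator commonly used for code benchmarks. The article does not say how LiveCodeBench v6 was sampled for this model, so the per-problem counts in `results` are invented purely to exercise the function.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n samples per problem, c of which passed the tests.

    This is the probability that at least one of k samples drawn without
    replacement from the n is correct. For k = 1 it reduces to c / n.
    """
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k: int = 1) -> float:
    """Average pass@k over problems, as a percentage. `results` holds (n, c) pairs."""
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical run: 4 problems, 10 samples each, with 10, 5, 0, and 0 passes.
results = [(10, 10), (10, 5), (10, 0), (10, 0)]
score = benchmark_pass_at_k(results, k=1)
print(f"Pass@1 = {score:.1f}")
```

Read this way, a Pass@1 of 26.3 means that a single attempt solves a problem a little over a quarter of the time on average.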

Building with Kimi-K2-Base: Use Cases and Applications

While its sibling, Kimi-K2-Instruct, is a drop-in solution for chatbots, the true power of Kimi-K2-Base lies in its potential for customization. It is a blank canvas for developers and researchers to build upon. The primary use case is custom fine-tuning. Organizations can adapt the model to their specific needs by training it on proprietary data from specialized fields like medicine, law, or finance, creating a bespoke expert AI.

Moreover, Kimi-K2-Base is the ideal starting point for building sophisticated, custom agentic systems from the ground up. Developers can control the entire post-training process, implementing their own reinforcement learning pipelines to craft agents tailored for specific complex workflows. Imagine an agent that can not only write code but also manage version control, run tests, and deploy applications, all learned on top of the powerful foundation provided by the base model.

The "Salary Data Analysis" example provided by Moonshot AI perfectly illustrates the type of complex, multi-step agentic tasks the Kimi K2 family is built for. In the demonstration, the model receives a high-level request to analyze a dataset. It then autonomously performs a sixteen-step process: it uses an IPython tool to load and filter the data, generates multiple advanced visualizations like violin and box plots, runs statistical tests like ANOVA and t-tests, intelligently handles errors when a required library is missing, and culminates in generating a complete, interactive HTML webpage report. This ability to plan, execute, self-correct, and deliver a polished final product is rooted in the capabilities pre-trained into Kimi-K2-Base.

The Future of Kimi-K2-Base: Deployment and What's Next

Getting started with Kimi-K2-Base is straightforward. The model is available on Hugging Face with a permissive Modified MIT License, encouraging both academic and commercial use. Its checkpoints are provided in the efficient block-fp8 format and are optimized to run on popular inference engines like vLLM, SGLang, and TensorRT-LLM.
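Before choosing hardware, a back-of-the-envelope estimate of the weight footprint is useful. The calculation below assumes exactly one byte per parameter for FP8 and ignores quantization scale factors, the KV cache, and activations; the 80 GB accelerator size is a hypothetical choice, not something stated by Moonshot AI.

```python
import math

TOTAL_PARAMS = 1_000_000_000_000  # one trillion, from the article
BYTES_PER_PARAM_FP8 = 1           # assumption: every weight stored in 8 bits


def weight_memory_gb(params: int, bytes_per_param: float) -> float:
    """Memory needed for the weights alone, in gigabytes (10**9 bytes)."""
    return params * bytes_per_param / 1e9


def min_gpus_for_weights(memory_gb: float, gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights."""
    return math.ceil(memory_gb / gpu_memory_gb)


weights_gb = weight_memory_gb(TOTAL_PARAMS, BYTES_PER_PARAM_FP8)
gpus = min_gpus_for_weights(weights_gb, gpu_memory_gb=80)  # hypothetical 80 GB GPUs
print(f"weights: ~{weights_gb:.0f} GB, at least {gpus} x 80 GB GPUs")
```

This is a lower bound only. Although just 32 billion parameters are active per token, all experts must stay resident in memory, and a real deployment also needs room for the KV cache and activations.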

Moonshot AI has acknowledged some limitations, such as occasional long-winded outputs on hard reasoning tasks, and is actively working to address them. The roadmap is clear: build on this foundation by incorporating more advanced capabilities such as "thinking" (long-form reasoning and reflection) and multi-modal visual understanding.

In conclusion, Kimi-K2-Base represents more than just a powerful new model. It is a strategic move to democratize the development of highly capable, autonomous AI agents. By open-sourcing a foundation of this scale and quality, Moonshot AI has equipped the global community of builders with the tools to innovate and create the next wave of agentic intelligence. It is a strong, stable, and exceptionally capable starting point, and the world is waiting to see what will be built upon it.
