In today's interconnected world, APIs act as the invisible bridges between applications, enabling the smooth flow of data and functionality. However, a malfunctioning API can disrupt user experiences and cripple entire systems. To safeguard against such issues, meticulous testing is crucial.


This guide covers what you need to know to write effective manual test cases for API testing. By following these steps, you can examine your APIs systematically and confirm that they behave as specified and perform the way your users expect.

Definition of Test Cases

Before going further, let's recap what a test case is.

An API test case is a documented description of a specific scenario designed to evaluate the functionality, behavior, or non-functional characteristics (e.g., performance, security) of an API endpoint.

Important Characteristics of Test Cases

1. Clarity and Conciseness:

Clear Objective: Each test case should have a well-defined objective that specifies what aspect of the API it's testing (e.g., verify user login functionality, validate data format in response).

Concise Instructions: Execution steps should be clear, concise, and easy to follow for any tester. Avoid ambiguity and ensure each step contributes to the test objective.

2. Data-Driven Approach:

Defined Inputs: Specify the data (payloads, parameters) to be used in the test request. This may include:

- Valid Data: Test cases with valid data confirm the API processes information correctly under normal conditions.

- Invalid Data: These cases include data that intentionally violates API specifications to check for proper error handling.

- Edge Cases & Boundary Values: Test data that pushes the limits of expected values (e.g., very large numbers, empty strings) helps identify potential issues.
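The input categories above can be sketched in Python. This is a minimal example assuming a hypothetical `quantity` field documented as accepting integers from 1 to 100, and a required string field with a maximum length; the helper names are illustrative, not part of any tool.

```python
from typing import Dict, List

def integer_field_cases(minimum: int, maximum: int) -> Dict[str, List[int]]:
    """Candidate inputs for an integer field whose allowed range is [minimum, maximum]."""
    return {
        # A typical value well inside the range
        "valid": [(minimum + maximum) // 2],
        # Values on and just inside each boundary; all should be accepted
        "boundary": [minimum, minimum + 1, maximum - 1, maximum],
        # Values just outside the range; the API should reject these with an error
        "invalid": [minimum - 1, maximum + 1],
    }

def string_field_cases(max_length: int) -> Dict[str, List[str]]:
    """Candidate inputs for a required (assumed) string field limited to max_length characters."""
    return {
        "valid": ["a" * max(1, max_length // 2)],
        # Shortest and longest acceptable values
        "boundary": ["a", "a" * max_length],
        # Empty and over-long strings probe validation and error handling
        "invalid": ["", "a" * (max_length + 1)],
    }

# Hypothetical "quantity" parameter documented as accepting 1 to 100
for category, values in integer_field_cases(1, 100).items():
    print(category, values)
```

Each category then becomes one or more test cases: valid and boundary values should succeed, while invalid values should produce the documented error response.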

Expected Outputs: Define the anticipated response from the API in detail. This includes:

- Status Code: The HTTP status code expected in the response (e.g., 200 for success, 401 for unauthorized access).

- Response Format: Specify the format of the response data (JSON, XML, etc.).

- Response Content: Detail the specific data elements and their expected values within the response body.
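To make the "Response Format" check concrete, here is a minimal Python sketch using only the standard library. It assumes the API signals its format through the `Content-Type` header; `parse_body` is a hypothetical helper that rejects a mismatched header and otherwise parses the body, so a malformed body fails the check too.

```python
import json
import xml.etree.ElementTree as ET
from typing import Any

def parse_body(content_type: str, body: str, expected_format: str) -> Any:
    """Verify the response format and return the parsed body.

    Raises ValueError if the Content-Type header does not match the expected
    format; the JSON and XML parsers raise their own errors on malformed bodies.
    """
    # Ignore parameters such as "; charset=utf-8"
    media_type = content_type.split(";")[0].strip().lower()
    if expected_format == "json":
        if media_type != "application/json":
            raise ValueError(f"expected application/json, got {media_type!r}")
        return json.loads(body)
    if expected_format == "xml":
        if media_type not in ("application/xml", "text/xml"):
            raise ValueError(f"expected an XML media type, got {media_type!r}")
        return ET.fromstring(body)
    raise ValueError(f"unsupported format: {expected_format!r}")

user = parse_body("application/json; charset=utf-8", '{"id": 42, "name": "Ada"}', "json")
print(user["id"])  # → 42
```

Once the body parses, the "Response Content" expectations can be checked against the resulting dict or XML element.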

3. Pass/Fail Criteria:

Clear Conditions: Define unambiguous criteria to determine whether a test case has passed or failed. This could involve:

- Matching Expected Responses: Compare the actual response with the defined expected outputs to check for discrepancies.

- Verifying Specific Behavior: Confirm that the API triggers the intended behavior based on the test request (e.g., successful user creation, error message for invalid login).
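Pass/fail evaluation can be reduced to collecting discrepancies between the expected and actual response. A minimal sketch, assuming a JSON body already parsed into a dict; `Expected` and `evaluate` are illustrative names, and the "create user" case is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

@dataclass
class Expected:
    status_code: int
    # Fields that must appear in the JSON body with exactly these values
    content: Dict[str, Any] = field(default_factory=dict)

def evaluate(expected: Expected, status_code: int, body: Dict[str, Any]) -> List[str]:
    """Return a list of discrepancies; an empty list means the test case passed."""
    problems = []
    if status_code != expected.status_code:
        problems.append(f"status: expected {expected.status_code}, got {status_code}")
    for key, want in expected.content.items():
        if key not in body:
            problems.append(f"missing field {key!r}")
        elif body[key] != want:
            problems.append(f"{key!r}: expected {want!r}, got {body[key]!r}")
    return problems

# Hypothetical "create user" case: expect 201 Created and the submitted name echoed back
expected = Expected(status_code=201, content={"name": "Ada"})
issues = evaluate(expected, 201, {"id": 7, "name": "Ada"})
print("PASS" if not issues else "FAIL", issues)  # → PASS []
```

Reporting every discrepancy, rather than stopping at the first, gives the tester a complete picture when a case fails.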

4. Reusability and Maintainability:

- Modular Design: Structure test cases to be modular and reusable across different scenarios. This reduces redundancy and simplifies maintenance.

- Parameterization: Consider using parameters for data inputs and expected outputs, allowing for easy adaptation to different test cases with similar structures.

- Clear Documentation: Document each test case clearly, including the objective, pre-conditions (if needed), execution steps, expected results, pass/fail criteria, and any relevant notes.
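Modular design, parameterization, and documentation fit together naturally when each test case is a structured record. The sketch below assumes a hypothetical `/auth/login` endpoint and illustrative status codes (200, 401, 400); the `ApiTestCase` fields simply mirror the documentation checklist above.

```python
from dataclasses import dataclass, field, replace
from typing import Any, Dict, List

@dataclass(frozen=True)
class ApiTestCase:
    """One documented test case; fields mirror the documentation checklist."""
    case_id: str
    objective: str
    method: str
    path: str
    payload: Dict[str, Any]
    expected_status: int
    preconditions: List[str] = field(default_factory=list)
    notes: str = ""

def parameterize(template: ApiTestCase, variants: List[Dict[str, Any]]) -> List[ApiTestCase]:
    """Derive one test case per variant, overriding payload fields and expected status."""
    cases = []
    for i, variant in enumerate(variants, start=1):
        cases.append(replace(
            template,
            case_id=f"{template.case_id}-{i}",
            objective=f"{template.objective} ({variant['label']})",
            # Merge: variant values override the template's payload fields
            payload={**template.payload, **variant["payload"]},
            expected_status=variant["expected_status"],
        ))
    return cases

# Hypothetical login endpoint and credentials, for illustration only
login = ApiTestCase(
    case_id="LOGIN",
    objective="Verify user login",
    method="POST",
    path="/auth/login",
    payload={"username": "ada", "password": "correct-horse"},
    expected_status=200,
    preconditions=["User 'ada' exists"],
)
cases = parameterize(login, [
    {"label": "valid credentials", "payload": {}, "expected_status": 200},
    {"label": "wrong password", "payload": {"password": "nope"}, "expected_status": 401},
    {"label": "empty username", "payload": {"username": ""}, "expected_status": 400},
])
for case in cases:
    print(case.case_id, case.objective, case.expected_status)
```

The template holds everything the variants share, so a change to the endpoint or pre-conditions is made once rather than in every case.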

5. Comprehensiveness:

- Variety of Scenarios: Aim to cover a wide range of scenarios, including positive and negative test cases, to maximize test coverage of the API.

- Error Handling: Include test cases that verify the API's behavior under error conditions (e.g., network failures, invalid authentication details).

- Non-Functional Testing: Incorporate test cases to assess non-functional aspects like performance (response times) and security (authorization checks).
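For the performance side of non-functional testing, a simple check is to time the request and compare it against a budget. This sketch takes the median of several runs because a single timing is noisy; the 500 ms budget and the `fake_request` stand-in are assumptions, so substitute your API's real call and agreed threshold.

```python
import statistics
import time
from typing import Callable, Tuple

def latency_within_budget(
    send_request: Callable[[], object], budget_ms: float, runs: int = 5
) -> Tuple[bool, float]:
    """Call send_request `runs` times; pass if the median latency is within budget_ms."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        send_request()
        samples.append((time.perf_counter() - start) * 1000)  # milliseconds
    median_ms = statistics.median(samples)
    return median_ms <= budget_ms, median_ms

def fake_request() -> None:
    """Stand-in for a real API call: sleeps for roughly 50 ms."""
    time.sleep(0.05)

passed, median_ms = latency_within_budget(fake_request, budget_ms=500)
print(f"{'PASS' if passed else 'FAIL'}: median {median_ms:.0f} ms")
```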

Additional Considerations:

- Severity: Categorize test cases based on the potential impact of a failure (high, medium, low) to prioritize testing efforts.

- Traceability: Link test cases to specific API requirements or user stories for improved test management.
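Severity and traceability are both easy to keep as data alongside the test cases. In this sketch, the test case IDs, severities, and requirement IDs are all hypothetical; it orders cases so high-severity ones run first and lists requirements that no test case covers yet.

```python
from collections import defaultdict
from typing import Dict, List, Set

SEVERITY_ORDER = {"high": 0, "medium": 1, "low": 2}

# Hypothetical test cases, each linked to the requirement(s) it verifies
test_cases = [
    {"id": "TC-3", "severity": "low", "requirements": ["REQ-12"]},
    {"id": "TC-1", "severity": "high", "requirements": ["REQ-10", "REQ-11"]},
    {"id": "TC-2", "severity": "medium", "requirements": ["REQ-10"]},
]
requirements = ["REQ-10", "REQ-11", "REQ-12", "REQ-13"]

def execution_order(cases: List[dict]) -> List[str]:
    """Order test case IDs so high-severity cases run first."""
    return [c["id"] for c in sorted(cases, key=lambda c: SEVERITY_ORDER[c["severity"]])]

def trace(cases: List[dict]) -> Dict[str, Set[str]]:
    """Map each requirement to the IDs of the test cases that cover it."""
    matrix: Dict[str, Set[str]] = defaultdict(set)
    for c in cases:
        for req in c["requirements"]:
            matrix[req].add(c["id"])
    return matrix

coverage = trace(test_cases)
print(execution_order(test_cases))  # → ['TC-1', 'TC-2', 'TC-3']
print([r for r in requirements if r not in coverage])  # → ['REQ-13']
```

An uncovered requirement, such as `REQ-13` here, signals a gap in the test suite before testing even starts.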

What are Manual API Test Cases?

A manual API test case has the same structure as any other API test case: a documented procedure designed to validate the functionality, behavior, and characteristics of an API under specific conditions. The difference is that a human tester executes it. It serves as a blueprint for verifying an API endpoint's behavior without the use of automated testing tools.

Key Elements of Manual API Test Cases

Test Objective

A clear statement specifying the purpose of the test case and what aspect of the API it's evaluating (e.g., validate user authentication process, verify data format in response).

Test Data (Inputs)

Defines the specific data (payloads, parameters) to be sent in the API request. This may include:

- Valid Data: Tests with valid data confirm the API processes information correctly under normal conditions.

- Invalid Data: These cases include data that intentionally violates API specifications to check for proper error handling.

- Edge Cases & Boundary Values: Test data that pushes the limits of expected values (e.g., very large numbers, empty strings) helps identify potential issues.

Execution Steps

A sequential list of actions the tester needs to perform to execute the test. This includes:

- Sending the API request (specifying method, URL, headers, body)

- Handling authentication (e.g., using tokens, basic authentication)

- Managing responses (parsing data, extracting relevant information)
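The three execution steps can be sketched with Python's standard `urllib.request` module, which is handy when a tester wants to script a single request by hand. The endpoint URL and token are hypothetical placeholders, and the helpers assume the API speaks JSON. One detail worth knowing: `urlopen` raises `HTTPError` for 4xx and 5xx responses, so the sketch catches it to keep the status code and body that negative test cases need to inspect.

```python
import base64
import json
import urllib.error
import urllib.request
from typing import Any, Dict, Optional, Tuple

def build_request(
    method: str,
    url: str,
    body: Optional[Dict[str, Any]] = None,
    token: Optional[str] = None,
    basic: Optional[Tuple[str, str]] = None,
) -> urllib.request.Request:
    """Step 1 and 2: assemble the request with an optional JSON body and bearer or basic auth."""
    headers = {"Accept": "application/json"}
    data = None
    if body is not None:
        data = json.dumps(body).encode("utf-8")
        headers["Content-Type"] = "application/json"
    if token is not None:
        headers["Authorization"] = f"Bearer {token}"
    elif basic is not None:
        user, password = basic
        credentials = f"{user}:{password}".encode("utf-8")
        headers["Authorization"] = "Basic " + base64.b64encode(credentials).decode("ascii")
    return urllib.request.Request(url, data=data, headers=headers, method=method)

def send(req: urllib.request.Request) -> Tuple[int, Any]:
    """Step 3: send the request and return (status code, parsed JSON body or None)."""
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            status, raw = resp.status, resp.read()
    except urllib.error.HTTPError as err:
        # urlopen raises on 4xx/5xx; negative tests still need that status and body
        status, raw = err.code, err.read()
    # Assumes a JSON body; a non-JSON error page would raise here
    return status, (json.loads(raw) if raw else None)

# Hypothetical endpoint and token; nothing is sent until you call send(req)
req = build_request("POST", "https://api.example.com/users", body={"name": "Ada"}, token="abc123")
print(req.get_method(), req.full_url, req.get_header("Authorization"))
```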

Expected Results (Outputs)

Details the anticipated response from the API that may include:

- Status Code: The HTTP status code expected in the response (e.g., 200 for success, 401 for unauthorized access).

- Response Format: Specify the format of the response data (JSON, XML, etc.).

- Response Content: Detail the specific data elements and their expected values within the response body.

Pass/Fail Criteria

Defines the conditions that determine whether the test case has passed or failed. This could involve:

- Matching Expected Responses: Compare the actual response with the defined expected outputs to check for discrepancies.

- Verifying Specific Behavior: Confirm that the API triggers the intended behavior based on the test request (e.g., successful user creation, error message for invalid login).

Optional Elements:

- Pre-conditions: Any specific setup required before executing the test (e.g., creating test data, setting up environment variables).

- Post-conditions: Any actions needed after the test execution (e.g., cleaning up test data).

- Severity: Categorization of the test case based on the potential impact of a failure (high, medium, low) to prioritize testing efforts.

- Traceability: Linking the test case to specific API requirements or user stories for improved test management.

Apidog - Personalize your API Test Cases to Perfection

To check that your API handles not only random data but also data structured like real-world input, it helps to use an API tool.

With Apidog, you can build, test, mock, and document APIs. Instead of finding a separate tool for each stage of the API lifecycle, you can rely on Apidog's features every step of the way.

Creating Your Own API with Apidog

Besides customizing test cases, Apidog also lets users design and create their own APIs.

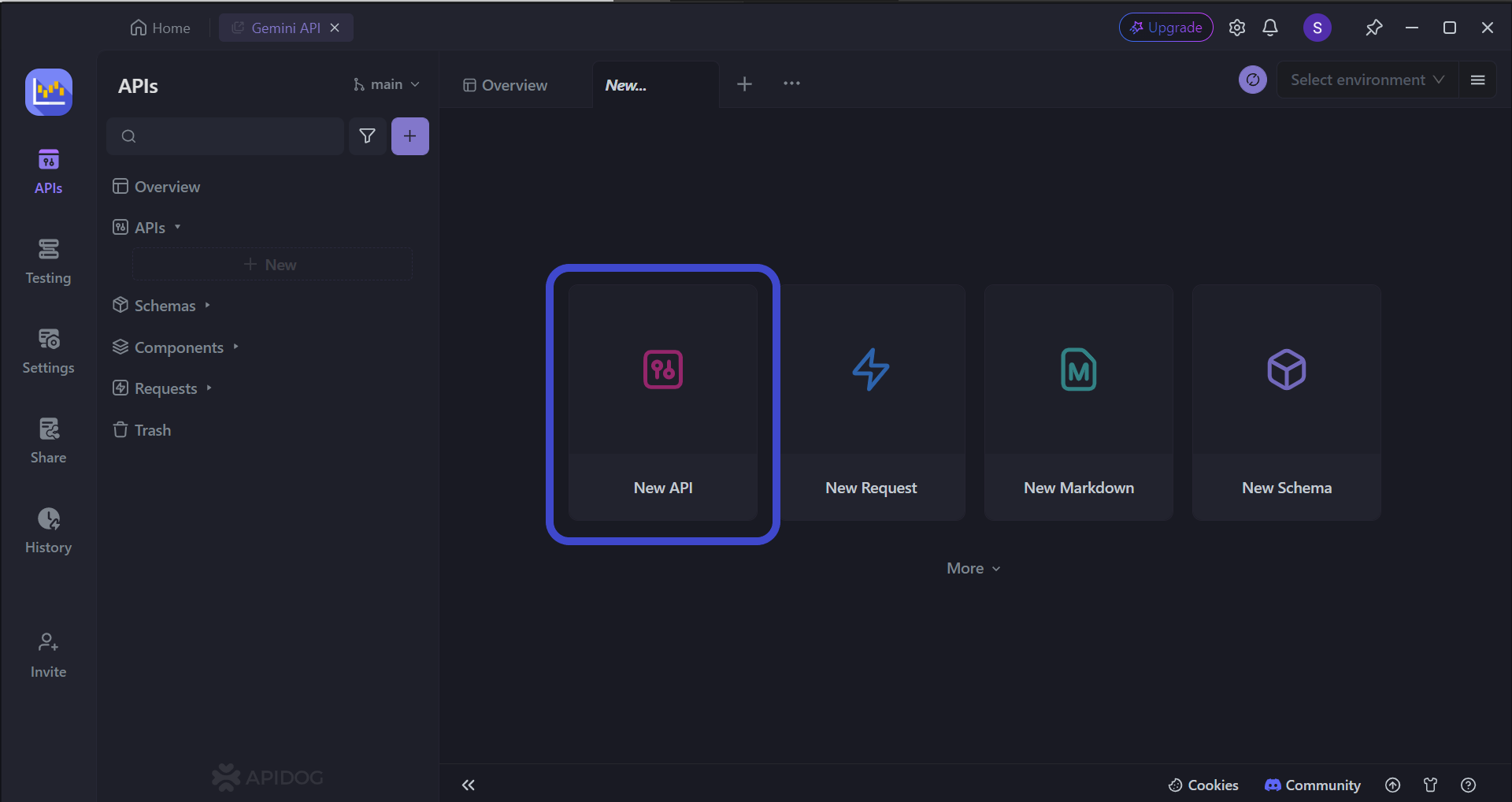

To begin, press the New API button, as shown in the image above.

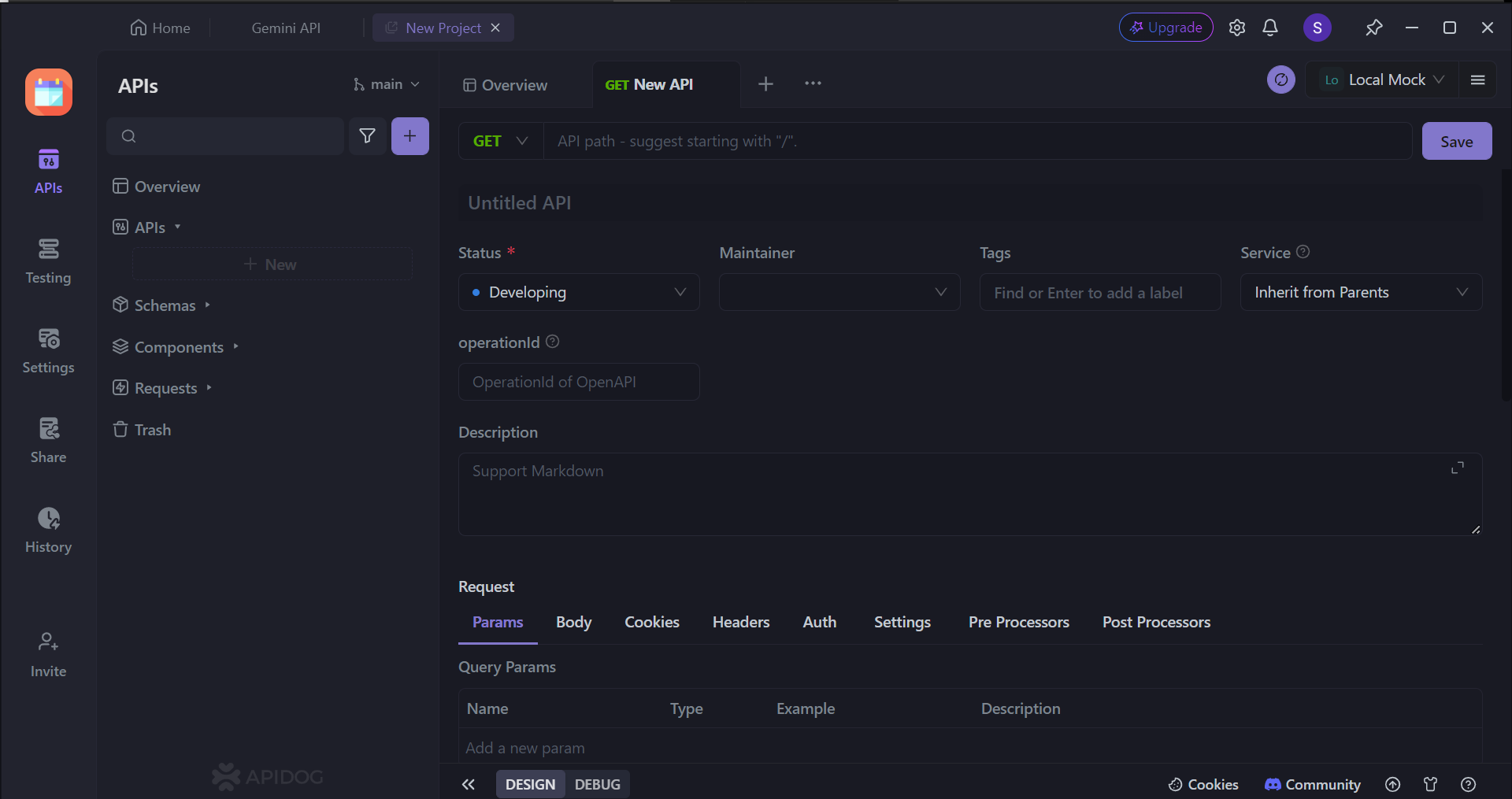

Next, you can set many of the API's characteristics. On this page, you can:

- Set the HTTP method (GET, POST, PUT, or DELETE)

- Set the API URL (or API endpoint) for client-server interaction

- Include one or more parameters to be passed in the API URL

- Provide a description of what functionality the API aims to provide.

If this is your first time creating an API, it may help to read up on best practices for designing REST APIs (or APIs in general), since REST APIs are among the most popular today.

Setting Values for Your APIs' Test Cases with Apidog

With Apidog, you can decide what type of values are sent in each request. This lets you check that your API can process various types of data, whether random or realistic.

First, open an API that you have created or imported.

Next, locate the DEBUG button and click it. A different page should open.

After pressing DEBUG, you should see the Insert Dynamic Value button. Click it to proceed.

A pop-up menu then appears, prompting you to choose the type of value to send to your API each time you test it. This is particularly useful for API functional testing.
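Apidog configures dynamic values through its UI, so the snippet below is not how Apidog works internally. It is only a minimal Python sketch of the same idea: generating fresh, realistic-looking values on every run. The name list, the `example.com`/`example.org` domains, and the age range are assumptions chosen for illustration.

```python
import random
import string
from typing import Dict

FIRST_NAMES = ["Ada", "Grace", "Alan", "Linus", "Margaret"]
DOMAINS = ["example.com", "example.org"]  # reserved domains, safe for test data

def dynamic_user(rng: random.Random) -> Dict[str, object]:
    """Generate a fresh, realistic-looking user payload for one test run."""
    name = rng.choice(FIRST_NAMES)
    suffix = "".join(rng.choices(string.digits, k=4))
    return {
        "name": name,
        "email": f"{name.lower()}{suffix}@{rng.choice(DOMAINS)}",
        "age": rng.randint(18, 90),
    }

# Seeding makes a run reproducible when you need to replay a failure
rng = random.Random(2024)
for _ in range(3):
    print(dynamic_user(rng))
```

Varying the data on each run helps surface bugs that a single hard-coded payload would never trigger, while the seed keeps any failing run repeatable.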

Conclusion

Crafting effective manual test cases is an essential skill for API testing success. By following the outlined steps and incorporating the key characteristics, you can create a comprehensive suite of test cases that meticulously examine your APIs.

This not only helps confirm that they work as intended but also lets you find and fix potential issues before they affect your users. Remember, well-defined manual test cases provide a solid foundation for robust API testing and protect the user experiences your applications depend on.