The GPT-4.1 API, developed by OpenAI, represents a significant advancement in artificial intelligence, offering developers robust capabilities for building applications that excel in coding, instruction following, and handling long-context tasks. Whether you're creating intelligent chatbots, automating workflows, or generating dynamic content, this API provides the tools to integrate cutting-edge AI into your projects.

What Is the GPT-4.1 API?

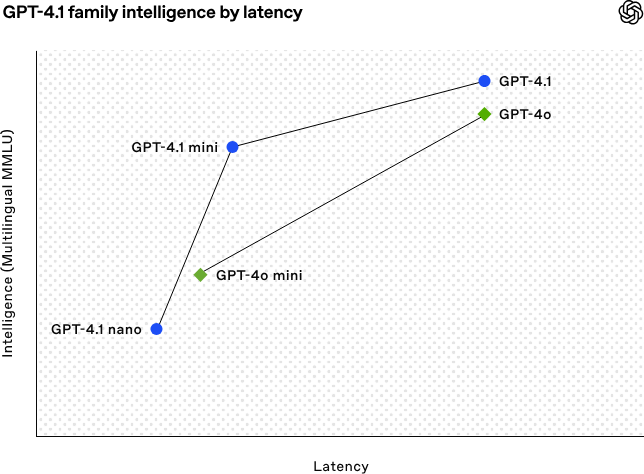

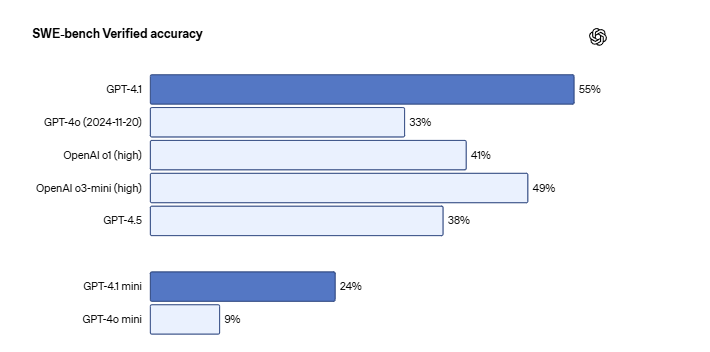

The GPT-4.1 API is an API-only interface provided by OpenAI, designed to deliver enhanced performance over its predecessors. It supports a context window of up to 1 million tokens, enabling it to process and generate extensive text, code, and structured data. According to OpenAI, GPT-4.1 excels in real-world utility, particularly for developers building applications that require precise instruction adherence and complex reasoning.

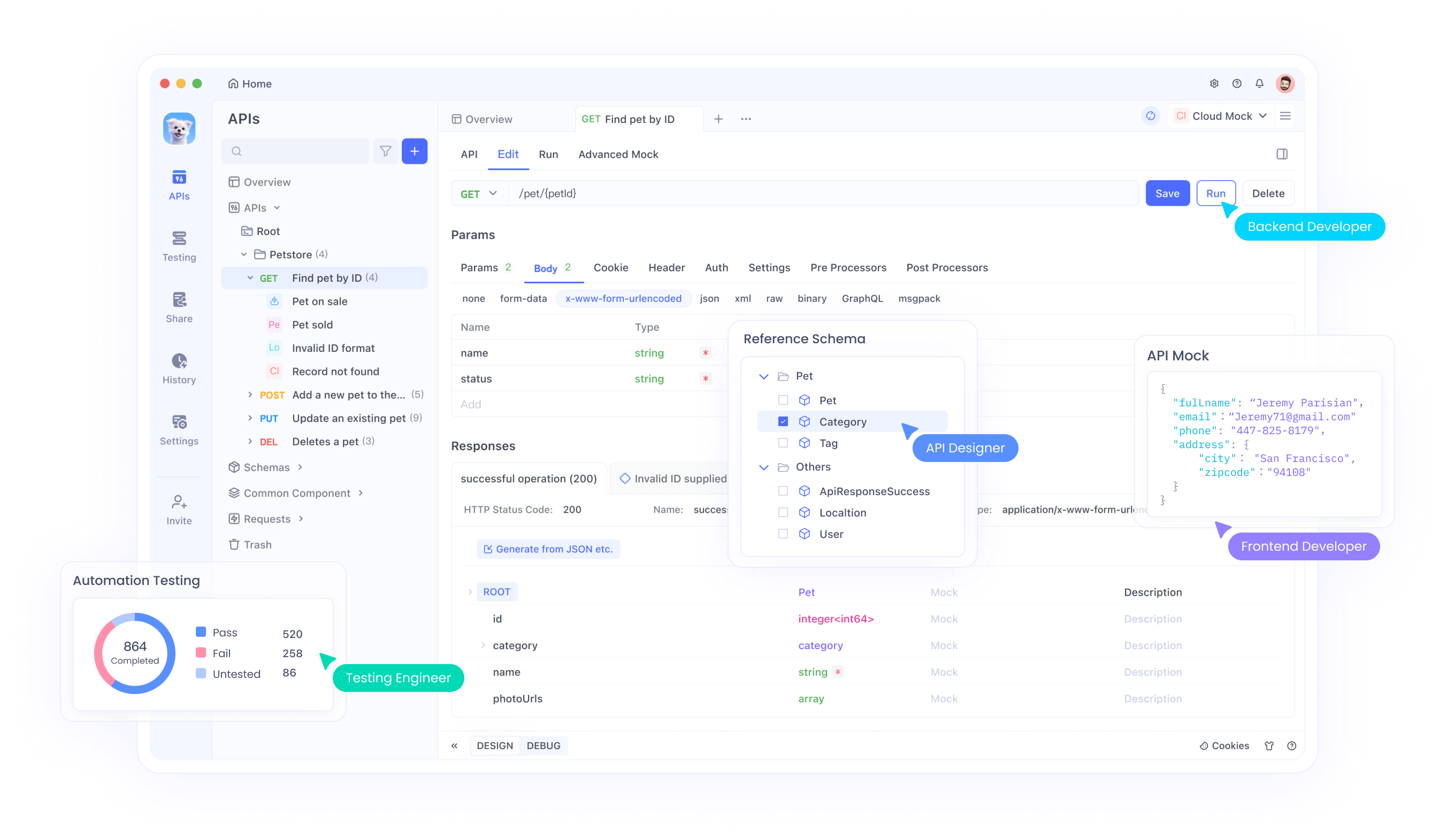

Moreover, the API is available in multiple variants, including GPT-4.1-mini and GPT-4.1-nano, catering to different use cases based on resource constraints and performance needs.

Prerequisites for Using the GPT-4.1 API

Before diving into the technical setup, ensure you have the following:

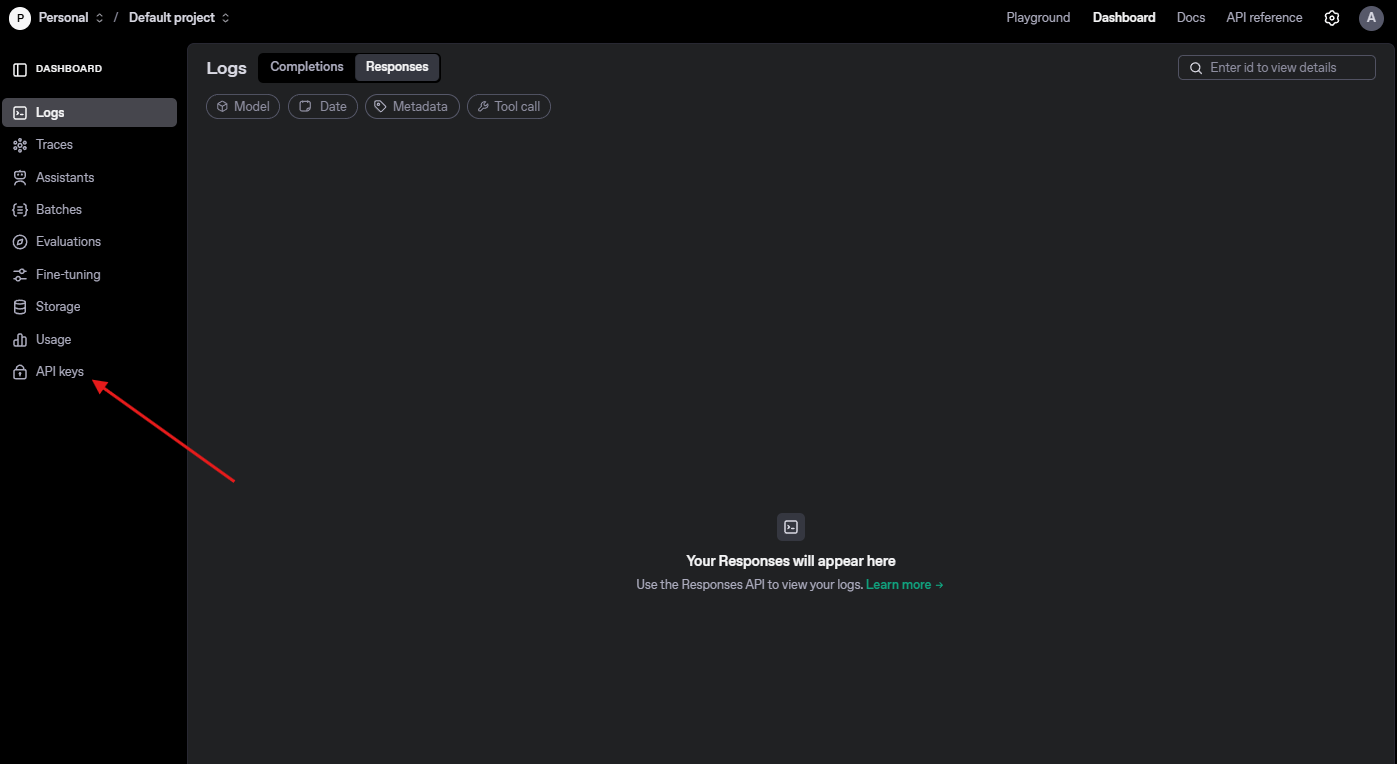

OpenAI Account: Sign up at OpenAI’s website to access the API dashboard.

API Key: Generate a unique API key from the OpenAI platform to authenticate your requests.

Programming Environment: Set up a development environment with a language like Python or Node.js, or a command-line tool like cURL, for making HTTP requests.

Apidog Installation: Install Apidog to test and manage your API calls efficiently.

Basic API Knowledge: Understand HTTP methods (POST, GET) and JSON formatting for structuring requests.

With these in place, let’s proceed to setting up your environment for the GPT-4.1 API.

Setting Up Your Environment for the GPT-4.1 API

To interact with the GPT-4.1 API, configure your development environment as follows:

Step 1: Install the OpenAI SDK

For Python developers, the OpenAI SDK simplifies API interactions. Install it using pip:

pip install openai

Step 2: Secure Your API Key

Store your API key securely, preferably in an environment variable, to avoid hardcoding it in your scripts. For example, in a Unix-based system:

export OPENAI_API_KEY='your-api-key-here'

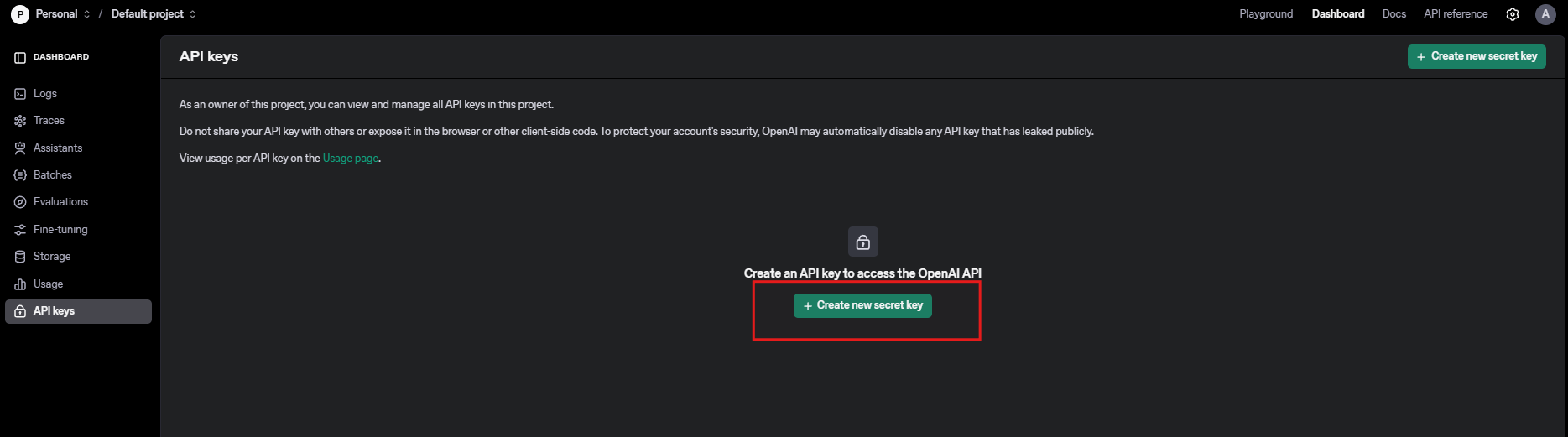
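A script can also check for the key at startup, so a missing variable fails with a clear message instead of a later authentication error. The following is a minimal sketch; the helper name load_api_key is hypothetical and not part of the OpenAI SDK.

```python
import os


def load_api_key(env=None):
    """Return the API key from the environment, or raise a clear error.

    `env` defaults to os.environ; passing a plain dict makes the helper
    easy to exercise in tests. (Hypothetical helper, not an SDK function.)
    """
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set; export it before running.")
    return key
```

Calling load_api_key() once near the top of a script keeps the key out of source control while still failing fast when the variable is missing.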

Step 3: Verify Apidog Installation

Download and install Apidog from the Apidog website. Create a new project in Apidog to manage your GPT-4.1 API requests. Apidog’s interface lets you test endpoints, validate responses, and monitor performance without writing extensive code.

Step 4: Test Connectivity

Run a simple script to confirm your setup:

import os

from openai import OpenAI

# SDK versions 1.0 and later use a client object; openai.ChatCompletion was removed.
client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

response = client.chat.completions.create(
    model="gpt-4.1",
    messages=[{"role": "user", "content": "Hello, GPT-4.1!"}],
)

print(response.choices[0].message.content)

If successful, you’ll see a response from the API. Now, let’s explore how to structure API requests.

Structuring GPT-4.1 API Requests

The GPT-4.1 API uses a chat-based architecture, requiring JSON-formatted requests. Here’s a breakdown of the key components:

- Model: Specify "gpt-4.1", "gpt-4.1-mini", or "gpt-4.1-nano" based on your needs.

- Messages: An array of message objects defining the conversation context. Each message includes:

  - Role: "system", "user", or "assistant".

  - Content: The text or instructions for the model.

- Parameters: Optional settings like temperature (controls randomness) and max_tokens (limits response length).

For example, to generate a code snippet:

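from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

response = client.chat.completions.create(
    model="gpt-4.1",
    messages=[
        {"role": "system", "content": "You are a coding assistant."},
        {"role": "user", "content": "Write a Python function to calculate factorial."},
    ],
    temperature=0.7,  # moderate randomness
    max_tokens=200,   # cap on the length of the reply
)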

This request instructs GPT-4.1 to act as a coding assistant and generate a factorial function. Apidog can help you test such requests by allowing you to input JSON payloads and inspect responses in real time.
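When testing in a tool that takes raw JSON rather than SDK calls, it can help to build the payload as a plain dictionary first. The sketch below uses only the standard library; build_payload is a hypothetical helper, and restricting roles to the three listed above is a simplification made for this example.

```python
import json

# Roles named in this guide; treating the set as exhaustive is a simplification.
ALLOWED_ROLES = {"system", "user", "assistant"}


def build_payload(model, messages, temperature=None, max_tokens=None):
    """Assemble a chat request body; optional settings are omitted when None."""
    for message in messages:
        if message.get("role") not in ALLOWED_ROLES:
            raise ValueError(f"unexpected role: {message.get('role')!r}")
        if not isinstance(message.get("content"), str):
            raise ValueError("each message needs a string 'content'")
    payload = {"model": model, "messages": list(messages)}
    if temperature is not None:
        payload["temperature"] = temperature
    if max_tokens is not None:
        payload["max_tokens"] = max_tokens
    return payload


payload = build_payload(
    "gpt-4.1",
    [
        {"role": "system", "content": "You are a coding assistant."},
        {"role": "user", "content": "Write a Python function to calculate factorial."},
    ],
    temperature=0.7,
    max_tokens=200,
)
print(json.dumps(payload, indent=2))  # paste the output into a request body
```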

Next, I’ll cover how to handle API responses effectively.

Handling GPT-4.1 API Responses

The GPT-4.1 API returns a JSON object containing the model’s output. Key fields include:

- choices: An array of response options, typically containing one item unless multiple completions are requested.

- message.content: The generated text or code.

- usage: Token consumption details for input and output.

Here’s how to parse a response:

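from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

response = client.chat.completions.create(
    model="gpt-4.1",
    messages=[{"role": "user", "content": "Explain quantum computing in simple terms."}],
)

# The first choice holds the reply; usage reports token counts for billing.
content = response.choices[0].message.content
tokens_used = response.usage.total_tokens

print(f"Response: {content}")
print(f"Tokens Used: {tokens_used}")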

To optimize costs, monitor token usage, as pricing depends on input and output tokens. According to OpenAI’s pricing page, GPT-4.1 is competitively priced for its capabilities, but costs can accumulate for high-volume applications.
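As a rough illustration of the arithmetic, the sketch below turns the usage counts into a cost estimate. The prompt_tokens and completion_tokens fields come from the response's usage object; the per-million-token prices passed in are placeholders, not OpenAI's actual rates, so substitute the current figures from the pricing page.

```python
def estimate_cost(usage, input_price_per_million, output_price_per_million):
    """Estimate the cost of one request in the same currency as the prices.

    `usage` is a dict with 'prompt_tokens' and 'completion_tokens'.
    Prices are expressed per one million tokens (placeholder values here).
    """
    input_cost = usage["prompt_tokens"] * input_price_per_million
    output_cost = usage["completion_tokens"] * output_price_per_million
    return (input_cost + output_cost) / 1_000_000


# Illustrative numbers only: 1,000 input tokens and 500 output tokens.
example_usage = {"prompt_tokens": 1000, "completion_tokens": 500}
cost = estimate_cost(example_usage, input_price_per_million=2.0,
                     output_price_per_million=8.0)
print(f"Estimated cost: {cost:.4f}")
```

Summing this value across requests gives a running total that can be compared against a budget.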

Apidog simplifies response handling by visualizing JSON structures and highlighting errors, ensuring you catch issues early. Let’s now examine practical use cases for the GPT-4.1 API.

Practical Use Cases for the GPT-4.1 API

The versatility of the GPT-4.1 API makes it suitable for various applications. Here are three examples:

1. Code Generation

Instruct GPT-4.1 to write scripts, debug code, or generate boilerplate. For instance:

{
  "model": "gpt-4.1",
  "messages": [
    {"role": "user", "content": "Create a REST API endpoint in Flask to fetch user data."}
  ]
}

Test this request in Apidog to verify the generated code’s syntax and functionality.

2. Content Creation

Generate blog posts, product descriptions, or social media content. For example:

from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

response = client.chat.completions.create(
    model="gpt-4.1",
    messages=[
        {"role": "system", "content": "You are a content writer."},
        {"role": "user", "content": "Write a 100-word product description for a smartwatch."},
    ],
)

3. Data Analysis

Use GPT-4.1 to summarize datasets or generate insights. For instance, upload a CSV summary via Apidog and request:

{
  "model": "gpt-4.1",
  "messages": [
    {"role": "user", "content": "Analyze this sales data: [CSV content]."}
  ]
}

These use cases highlight GPT-4.1’s flexibility. However, optimizing performance requires fine-tuning parameters, which I’ll discuss next.

Optimizing GPT-4.1 API Performance

To maximize the GPT-4.1 API’s efficiency, adjust the following parameters:

- Temperature: Accepts values from 0.0 to 2.0. Lower values make output more focused and repeatable; higher values make it more varied. For technical tasks, use 0.3–0.7.

- Max Tokens: Limit output length to reduce costs and improve response time.

- Top-p Sampling: Control response diversity. A value of 0.9 balances coherence and variety.

For example:

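from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

response = client.chat.completions.create(
    model="gpt-4.1",
    messages=[{"role": "user", "content": "Summarize a 500-word article."}],
    temperature=0.5,  # lower randomness for a factual summary
    max_tokens=100,   # cap on the length of the reply
    top_p=0.9,        # nucleus sampling threshold
)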

Additionally, batch requests when possible to minimize API calls. Apidog’s automation features can schedule and execute batch tests, ensuring consistent performance.

Now, let’s address common challenges and how to troubleshoot them.

Troubleshooting Common GPT-4.1 API Issues

Despite its robustness, you may encounter issues with the GPT-4.1 API. Here are solutions to frequent problems:

1. Authentication Errors

- Issue: Invalid API key (401 Unauthorized).

- Solution: Verify your key in the OpenAI dashboard and ensure it’s correctly set in your environment.

2. Rate Limits

- Issue: Exceeding request limits (429 Too Many Requests).

- Solution: Check OpenAI’s rate limit documentation and implement exponential backoff in your code.

3. Unexpected Outputs

- Issue: Irrelevant or incomplete responses.

- Solution: Refine your prompts with clear instructions and adjust parameters like temperature.
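The exponential backoff suggested for rate limits above can be sketched as follows. This is a minimal illustration, not a definitive implementation: RateLimited is a hypothetical stand-in for whatever error your client raises on a 429 response, and the retry count and delays are arbitrary defaults.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical stand-in for an HTTP 429 error raised by your client."""


def call_with_backoff(call, max_retries=5, base_delay=1.0, max_delay=30.0,
                      sleep=time.sleep):
    """Run call(), retrying on RateLimited with exponentially growing waits."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimited:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # The delay doubles each attempt (1s, 2s, 4s, ...), capped at max_delay.
            delay = min(base_delay * (2 ** attempt), max_delay)
            # Up to 10% random jitter spreads out retries from concurrent clients.
            sleep(delay + random.uniform(0, delay * 0.1))
```

With the official Python SDK (v1.x), the exception to catch would be openai.RateLimitError rather than the placeholder class used here. The sleep parameter is injectable so the retry logic can be tested without real waiting.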

Apidog’s error tracking helps identify issues by logging request-response pairs, making debugging straightforward. For example, if a response is truncated, the logged response shows a finish_reason of "length", which indicates that the output hit the token limit.
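Truncation can also be checked in code. In the Chat Completions response, each choice carries a finish_reason field, and the value "length" means the output stopped at the token limit. The sketch below works on the raw JSON response as a dictionary; is_truncated is a hypothetical helper, and the sample response is made up for illustration.

```python
def is_truncated(response):
    """Return True if any choice stopped because it hit the token limit."""
    return any(
        choice.get("finish_reason") == "length"
        for choice in response.get("choices", [])
    )


# Made-up response body, trimmed to the fields this check reads.
sample_response = {
    "choices": [
        {
            "message": {"role": "assistant", "content": "Quantum computing is"},
            "finish_reason": "length",
        }
    ],
    "usage": {"prompt_tokens": 12, "completion_tokens": 5, "total_tokens": 17},
}

if is_truncated(sample_response):
    print("Reply was cut off; consider raising max_tokens or shortening the prompt.")
```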

Next, I’ll explain how Apidog enhances GPT-4.1 API integration.

Leveraging Apidog for GPT-4.1 API Integration

Apidog is a powerful tool for managing API workflows, offering features tailored to the GPT-4.1 API:

- Request Builder: Create and send JSON requests without coding.

- Response Validation: Verify outputs against expected formats.

- Performance Monitoring: Track response times and token usage.

- Collaboration: Share API projects with team members for streamlined development.

To use Apidog:

- Open Apidog and create a new project.

- Add the GPT-4.1 API endpoint: https://api.openai.com/v1/chat/completions.

- Input your API key in the Authorization header, in the form Bearer YOUR_API_KEY.

- Build a request, such as:

{
  "model": "gpt-4.1",
  "messages": [{"role": "user", "content": "Test request"}]
}

- Send the request and analyze the response in Apidog’s interface.
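For comparison, the same request can be assembled in code. The sketch below uses only Python's standard library to build the POST request with the endpoint, Authorization header, and JSON body from the steps above; build_http_request is a hypothetical helper, and the request is constructed but not sent.

```python
import json
import urllib.request

ENDPOINT = "https://api.openai.com/v1/chat/completions"


def build_http_request(api_key, payload):
    """Build (but do not send) the POST request described in the steps above."""
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # key goes after "Bearer "
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_http_request(
    "your-api-key-here",  # placeholder; load the real key from the environment
    {"model": "gpt-4.1", "messages": [{"role": "user", "content": "Test request"}]},
)
print(request.get_method(), request.full_url)
# To send it: urllib.request.urlopen(request), then json.load() the response.
```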

By integrating Apidog, you reduce manual errors and accelerate development. Let’s now consider best practices for secure and efficient API usage.

Best Practices for Using the GPT-4.1 API

To ensure optimal results, follow these guidelines:

- Secure API Keys: Store keys in environment variables or a vault solution.

- Monitor Costs: Track token usage to stay within budget, referencing OpenAI’s pricing details.

- Use Specific Prompts: Craft detailed instructions to minimize irrelevant outputs.

- Test Iteratively: Use Apidog to run small-scale tests before deploying at scale.

- Stay Updated: Follow OpenAI’s blog for API updates and new features.

These practices enhance reliability and cost-efficiency. Finally, let’s summarize the key takeaways.

Conclusion

The GPT-4.1 API empowers developers to build sophisticated AI-driven applications with unparalleled capabilities in coding, content generation, and data analysis. By setting up your environment, structuring requests, and leveraging tools like Apidog, you can integrate this API seamlessly into your projects. Furthermore, optimizing parameters and troubleshooting issues ensures consistent performance, while best practices safeguard security and efficiency.

Start exploring the GPT-4.1 API today, and download Apidog for free to streamline your workflow. With these tools, you’re well-equipped to harness the power of advanced AI in your applications.