Hey there, fellow developer and AI enthusiast! Have you ever found yourself staring at a blank code editor, the idea in your head feeling just out of reach? Or maybe you've been tinkering with one AI model, wondering if you could combine its strengths with another to create something truly powerful. Well, you're in the right place.

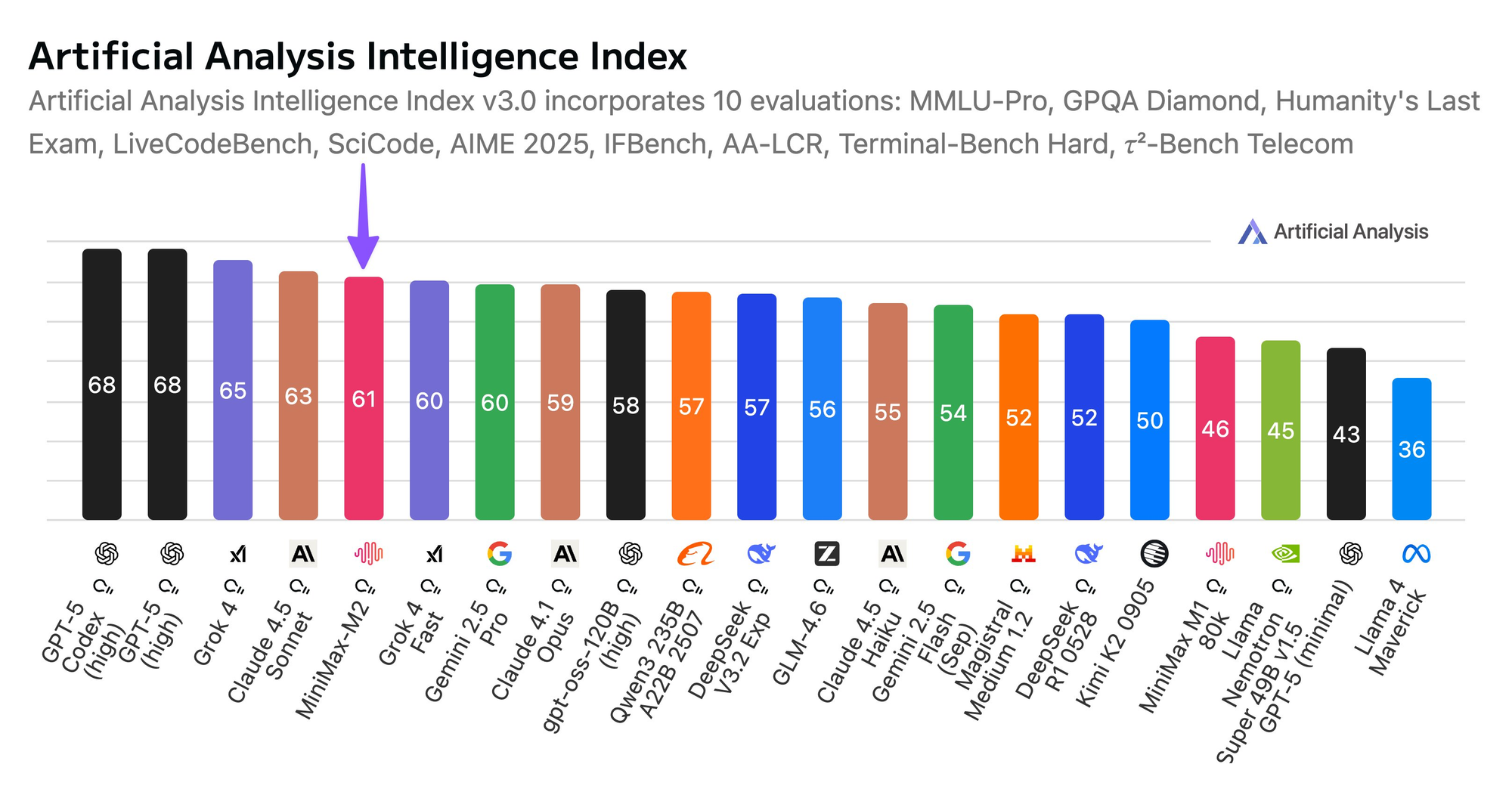

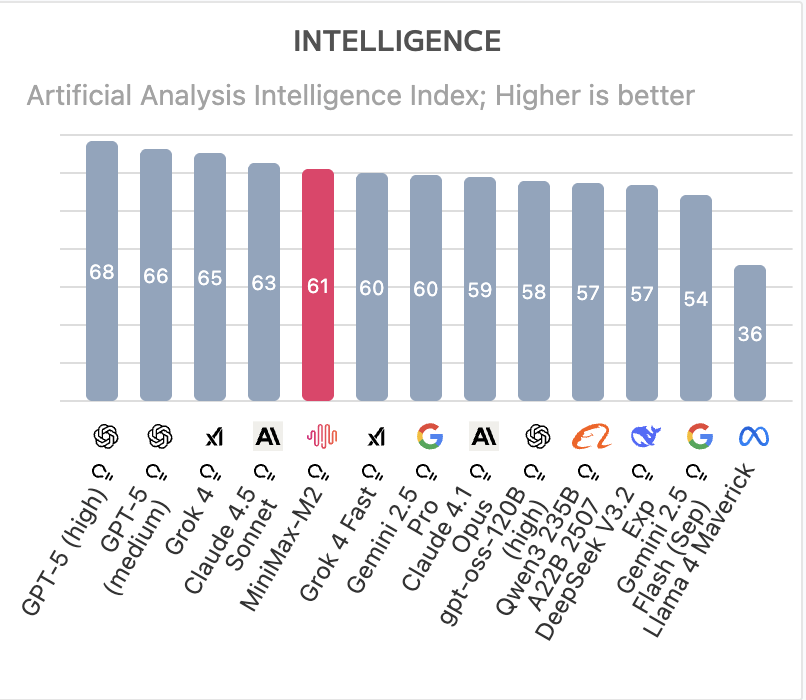

Today, we're diving deep into one of the most exciting developer workflows out there: using Minimax M2 alongside Claude to write, refine, and understand code. Think of it as assembling your own AI dream team. Claude, with its razor-sharp reasoning and vast context window, can be your strategic architect. Meanwhile, Minimax M2, a powerhouse in its own right, can act as a specialized engineer, generating code with impressive precision.


So, grab your favorite beverage, and let's embark on this journey to supercharge your coding process!

Setting the Stage: Why Combine Minimax M2 and Claude?

First things first, you might be wondering, "Why go through the trouble of using two AI models? Isn't one enough?" It's a fair question! The answer lies in the concept of specialization and collaboration.

Understanding Our AI Power Duo

Claude (from Anthropic) is often praised for its deep reasoning capabilities, its ability to grasp complex, nuanced instructions, and its massive context window. You can give it a sprawling, multi-file codebase and ask for a detailed refactor, and it will maintain a coherent understanding of the entire project. It's a brilliant strategist and architect.

Minimax M2, on the other hand, is a multimodal LLM from a leading Chinese AI company. It's exceptionally strong at code generation and following specific, structured prompts. It can take a well-defined task and produce clean, functional, and efficient code.

The Synergistic Workflow

So, how do they work together? Imagine this flow:

- High-Level Planning with Claude: You describe your software idea in plain English to Claude. "I want a Python web app that fetches the latest tech news, summarizes the articles, and sends me a daily digest email." Claude can then break this down into a development plan: backend with FastAPI, a web scraper, an integration with a summarization API, and an email-sending service.

- Component Generation with Minimax M2: You take one of those components (let's say, "create a FastAPI endpoint that accepts an email address and returns a success message") and feed that precise instruction to Minimax M2 via its API. It will quickly generate the Python code for that endpoint.

- Review and Integration with Claude: Finally, you bring the generated code back to Claude. You can ask, "Claude, here's the FastAPI code from Minimax M2. Review it for best practices, check for security issues, and integrate it into our existing main.py file."

This back-and-forth leverages the unique strengths of each model, ultimately leading to a higher-quality, more robust final product than you might get from relying on a single model alone. It's all about creating a powerful, iterative conversational loop between you and your AI assistants.
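The plan, generate, review loop above can be sketched in a few lines of Python. This is a minimal illustration, not a production orchestrator. The three model callables (planner, coder, reviewer) are hypothetical stand-ins that you would wire to the Claude and Minimax APIs. The "one component per line" plan format is an assumption about how you prompt the planner.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Any function that takes a prompt and returns text can act as a "model" here.
# In a real setup these would wrap the Claude and Minimax HTTP APIs.
ModelFn = Callable[[str], str]


@dataclass
class PipelineResult:
    plan: List[str]
    drafts: List[str] = field(default_factory=list)
    reviews: List[str] = field(default_factory=list)


def run_pipeline(idea: str, planner: ModelFn, coder: ModelFn, reviewer: ModelFn) -> PipelineResult:
    """Plan with one model, draft each component with another, then review each draft."""
    # Step 1 (Claude's role): ask for one component per line, then strip bullet markers.
    raw_plan = planner(f"Break this idea into components, one per line:\n{idea}")
    components = [line.strip().lstrip("-* ").strip() for line in raw_plan.splitlines() if line.strip()]

    result = PipelineResult(plan=components)
    for component in components:
        # Step 2 (Minimax M2's role): a narrow, precise instruction per component.
        draft = coder(f"Write Python code for this component only:\n{component}")
        result.drafts.append(draft)
        # Step 3 (Claude's role again): review the draft before integrating it.
        result.reviews.append(reviewer(f"Review this code for best practices and security issues:\n{draft}"))
    return result
```

Because the models are plain callables, you can test the control flow offline with fake lambdas, then swap in real API wrappers. You can also reassign which model plays which role without touching the loop.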

Understanding the Basics: What Are Minimax M2 and Claude?

What Is Minimax M2 and Why Should You Care?

Minimax is a leading Chinese AI company known for its large multimodal models. The M2 series represents their second-generation foundation models, optimized for tasks like natural language understanding, code generation, and even multimodal reasoning (think: image + text).

Unlike general-purpose models, Minimax M2 is fine-tuned for Chinese-language contexts but also supports English and other languages with impressive fluency. It shines in:

- Generating high-quality code snippets

- Explaining complex logic in simple terms

- Translating technical documentation

- Assisting with cloud infrastructure scripting (great if you’re into cybersecurity or cloud computing)

But here’s the catch: while Minimax M2 is powerful, it’s not specifically designed as a “code-first” model. That’s where Claude Code comes in.

Enter Claude Code: Anthropic’s Developer-Centric AI

Strictly speaking, “Claude Code” is Anthropic’s official agentic coding tool that runs in your terminal, built on Claude models. In this article, we also use the name more loosely for Claude’s code understanding and generation capabilities, which you can reach directly through the Messages API with models like Claude 3.5 Sonnet.

Claude Code excels at:

- Reading and explaining entire codebases

- Writing secure, efficient, and well-documented functions

- Refactoring legacy code

- Detecting potential bugs or security vulnerabilities

It’s trained on a massive corpus of open-source code and technical documentation, making it one of the most developer-friendly LLMs available today.

So why not just use Claude alone?

Great question. The answer lies in complementarity.

Minimax M2 might give you better results for region-specific logic, multilingual support, or domain-specific tasks (e.g., generating cloud security policies compliant with Chinese regulations). Meanwhile, Claude provides robust, general-purpose coding intelligence with strong reasoning and fewer hallucinations.

By combining both, you create a hybrid AI assistant that leverages the best of East and West, specialized and general, secure and scalable.

When Does It Make Sense to Combine Minimax M2 and Claude Code?

Not every project needs two LLMs. In fact, over-engineering can slow you down. So let’s be strategic.

Use both when:

✅ You’re building a global application that serves users in China and internationally

✅ Your code requires deep localization (e.g., generating AWS + Alibaba Cloud deployment scripts)

✅ You need redundant validation, e.g., have Minimax draft a function, then ask Claude to review it for security flaws

✅ You’re experimenting with AI agent architectures where different models handle different subtasks

Stick to one when:

❌ You’re working on a simple CRUD app with no localization needs

❌ Your team only uses English and public cloud providers (AWS/GCP/Azure)

❌ You’re on a tight latency or budget constraint (two API calls = double cost & delay)

Now, assuming you do want to integrate both, how do you actually do it?

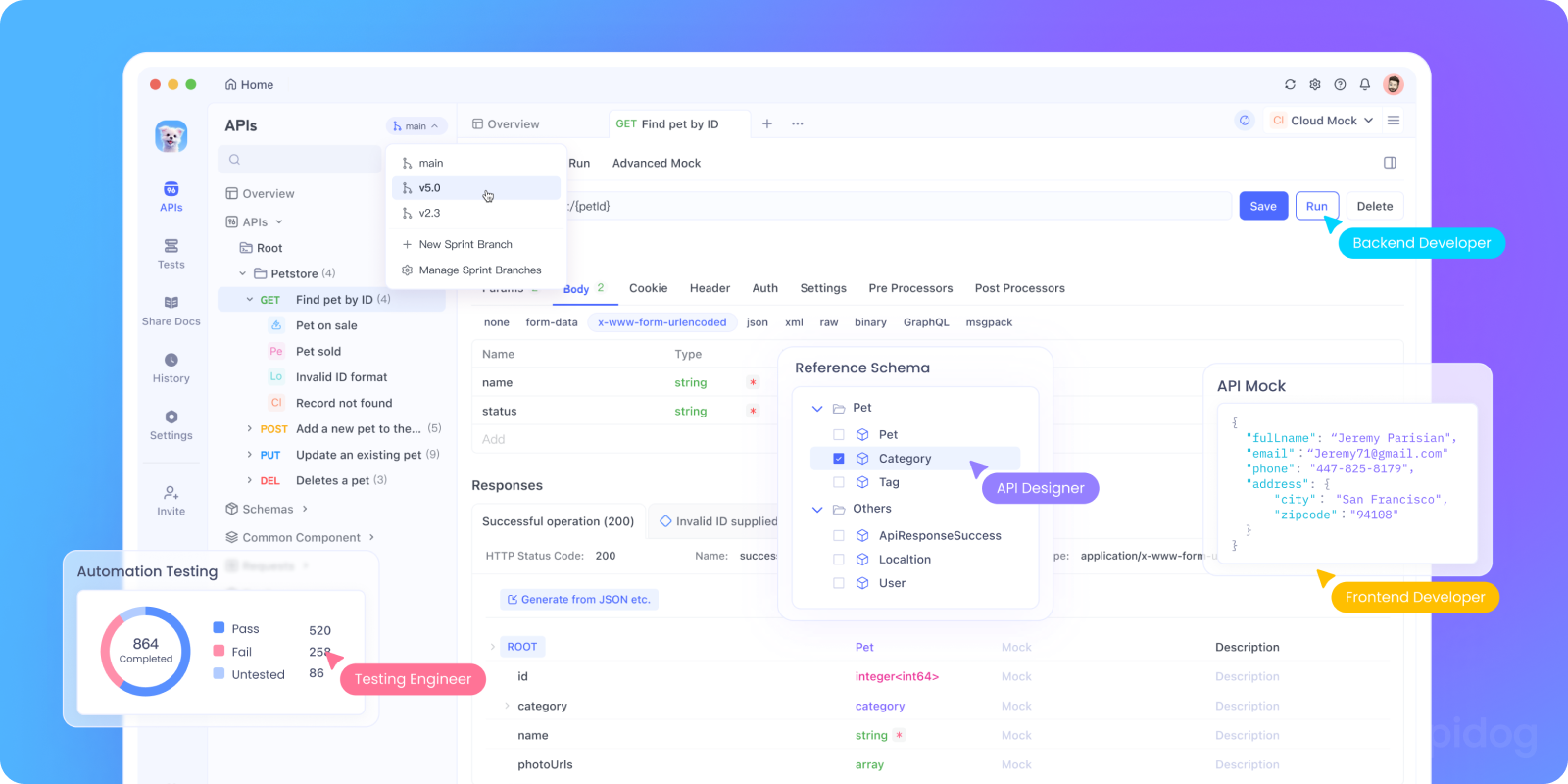

Diving into the APIs: A Hands-On Exploration with Apidog

Now for the fun part! Let's get familiar with the APIs we'll be using. Instead of just writing code, let's first use Apidog to interact with them directly. This helps us understand the request and response structure without any coding overhead.

Testing the Minimax M2 API in Apidog

First, let's fire up Apidog and create a new request.

- Set the Request Method and URL: Choose POST and enter the Minimax M2 API endpoint: https://api.minimax.chat/v1/text/chat/completions_pro.

- Configure the Headers: In the "Headers" tab, add the following:
  - Content-Type: application/json
  - Authorization: Bearer YOUR_MINIMAX_API_KEY

- Craft the Request Body: Switch to the "Body" tab and select "raw" and "JSON." Here's a basic structure to get a code generation response:

```json
{
  "model": "abab6.5-chat",
  "messages": [
    {
      "role": "user",
      "content": "Write a Python function to calculate the factorial of a number using recursion."
    }
  ],
  "temperature": 0.7
}
```

Let's break down these parameters:

- model: The example uses abab6.5-chat. Check your MiniMax console for the model ID that corresponds to M2 and substitute it here.
- messages: An array where we define the conversation. We start with a user role and our prompt.
- temperature: This controls the randomness of the output. A value of 0.7 provides a good balance between creativity and determinism.

- Hit Send! Click the "Send" button in Apidog. You should see a response from the Minimax API on the right-hand side, neatly formatted, containing the generated Python code.

Wasn't that easy? Apidog instantly shows you the status code, the response time, and the full JSON body. You can easily tweak your request and resend it without messing with terminal commands.
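Once the request works in Apidog, you can reproduce the same call in Python. The sketch below uses only the standard library (urllib.request). It copies the endpoint, headers, and body fields from the walkthrough above. The endpoint path and model ID are taken as-is from this article, so confirm them against MiniMax's current API documentation. The helper names are our own.

```python
import json
import urllib.request

# Endpoint copied from the Apidog walkthrough above; verify it against MiniMax's docs.
MINIMAX_URL = "https://api.minimax.chat/v1/text/chat/completions_pro"


def build_minimax_request(api_key: str, prompt: str, model: str = "abab6.5-chat",
                          temperature: float = 0.7) -> urllib.request.Request:
    """Build (without sending) the same POST request we made in Apidog."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        MINIMAX_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_json(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Read the key from an environment variable rather than hard-coding it, for example send_json(build_minimax_request(os.environ["MINIMAX_API_KEY"], "Write a Python function ...")). Inspect the returned dictionary the same way you inspected the response pane in Apidog before deciding which field to extract.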

Testing the Anthropic Claude API in Apidog

Now, let's do the same for Claude. The process is nearly identical.

- New Request: Create a new request in Apidog.

- Method and URL: POST to https://api.anthropic.com/v1/messages.

- Headers:
  - Content-Type: application/json
  - x-api-key: YOUR_ANTHROPIC_API_KEY
  - anthropic-version: 2023-06-01

- Request Body:

```json
{
  "model": "claude-3-sonnet-20240229",
  "max_tokens": 1000,
  "messages": [
    {
      "role": "user",
      "content": "Explain the concept of recursion in programming as if you were talking to a beginner."
    }
  ]
}
```

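- model: We're using claude-3-sonnet-20240229, a good balance of intelligence and speed for this task. Anthropic retires older model snapshots over time, so if this ID is rejected, substitute a current one from Anthropic's model list.
- max_tokens: The maximum number of tokens Claude may generate in its response. This field is required by the Messages API.
- messages: The same conversational structure as before.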

Click "Send" again, and voilà! You'll get Claude's clear, well-reasoned explanation in the response pane. By using Apidog, you've just interacted with two different, powerful AI APIs in under a minute, confirming everything works before writing a single line of integration code.
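The Claude request translates to Python just as directly. This sketch again sticks to urllib.request, and the headers and body fields mirror the Apidog request above. The extract_text helper assumes the Messages API response shape, in which content is a list of blocks and text blocks carry a text field. Pass in whichever model ID you confirmed in Apidog. The function names are our own.

```python
import json
import urllib.request

ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"


def build_claude_request(api_key: str, prompt: str, model: str,
                         max_tokens: int = 1000) -> urllib.request.Request:
    """Build (without sending) the Messages API request from the Apidog walkthrough."""
    body = {
        "model": model,
        "max_tokens": max_tokens,  # required by the Messages API
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        ANTHROPIC_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
        },
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Concatenate the text blocks of a decoded Messages API response."""
    return "".join(
        block.get("text", "")
        for block in response.get("content", [])
        if block.get("type") == "text"
    )
```

To send it, pass the request to urllib.request.urlopen, decode the body with json.loads, and hand the resulting dictionary to extract_text.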

Real-World Use Case: Secure Cloud Deployment Script Generator

Imagine you’re a cloud security engineer (sound familiar?). You need to generate deployment scripts for clients using AWS, Azure, and Alibaba Cloud.

With Minimax + Claude:

- Minimax generates region-specific Terraform or CloudFormation templates

- Claude audits them for:
  - Over-permissive IAM policies
  - Missing encryption settings
  - Publicly exposed storage buckets

You then package this into a CLI tool or internal web app, all tested and documented in Apidog.
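Some of the checks Claude performs here are simple enough to run locally first, which leaves Claude's review free to focus on subtler issues. The sketch below is a deliberately narrow pre-filter that assumes AWS-style IAM policy JSON (Statement, Effect, Action, Resource). It flags only Allow statements with wildcard actions or wildcard resources. It ignores NotAction, conditions, and everything else, so it complements the model review rather than replacing it. The function name is hypothetical.

```python
from typing import Any, Dict, List


def _as_list(value: Any) -> List[Any]:
    """IAM fields may hold a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_wildcard_grants(policy: Dict[str, Any]) -> List[str]:
    """Flag Allow statements that grant wildcard actions or wildcard resources."""
    findings: List[str] = []
    for i, stmt in enumerate(_as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements cannot over-grant
        actions = [str(a) for a in _as_list(stmt.get("Action"))]
        resources = [str(r) for r in _as_list(stmt.get("Resource"))]
        wild_actions = [a for a in actions if a == "*" or a.endswith(":*")]
        if wild_actions:
            findings.append(f"Statement {i}: wildcard action(s) {wild_actions}")
        if "*" in resources:
            findings.append(f"Statement {i}: wildcard resource")
    return findings
```

Run it over the policies in each generated template and paste any findings into the Claude audit prompt as a starting point.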

Leveling Up: Advanced Techniques and Best Practices

You've got the basic flow down! Now, let's talk about how to make this workflow truly robust and effective.

Mastering the Art of Prompt Engineering

The quality of your output is directly tied to the quality of your input. Here are some tips:

- Be Specific and Provide Context: Don't just say "write a function." Specify the input parameters, the expected output, any edge cases to consider, and the coding style you prefer (e.g., "use type hints").

- Use System Prompts: The system parameter in the Claude API is incredibly powerful. You can set a persistent persona for Claude, like "You are a senior backend engineer at a FAANG company," which will influence its responses throughout the conversation.

- Iterative Refinement: Your first prompt might not yield perfect results. Treat it as a conversation. If Minimax's code is missing something, don't start over. Send a follow-up message: "That's good, but now please add a check to ensure the password isn't a common word."
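Both tips show up in the shape of the request payload. In the Claude Messages API, the persona goes in the top-level system field rather than in a message. Iterative refinement simply means appending the model's previous reply and your follow-up to the same messages list instead of starting a new one. The helper names below are our own. The payload is shown as a plain dictionary ready to be JSON-encoded, and the same role/content message list is what the Minimax request in this article uses.

```python
from typing import Dict, List

Message = Dict[str, str]


def add_turn(history: List[Message], assistant_reply: str, follow_up: str) -> List[Message]:
    """Return a new history with the model's reply and our follow-up appended."""
    return history + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": follow_up},
    ]


def claude_payload(history: List[Message], system: str, model: str,
                   max_tokens: int = 1000) -> dict:
    # The persona lives in the top-level "system" field, not in a "system" message.
    return {"model": model, "max_tokens": max_tokens, "system": system, "messages": history}


history = [{"role": "user", "content": "Write a Python function that validates a new password."}]
history = add_turn(
    history,
    assistant_reply="def validate_password(pw: str) -> bool: ...",  # the model's first draft
    follow_up="That's good, but now please add a check to ensure the password isn't a common word.",
)
payload = claude_payload(
    history,
    system="You are a senior backend engineer at a FAANG company.",
    model="claude-3-sonnet-20240229",
)
```

Keeping the full history means the model sees its own earlier draft, so the follow-up can be short and targeted.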

Handling Complex, Multi-file Projects

How do you manage a larger project? The strategy is similar but requires more organization.

- Project Blueprint with Claude: Start by giving Claude a high-level overview. "I'm building a Flask web application with user authentication, a SQLite database, and a React frontend." Ask Claude to generate a project structure and a requirements.txt file.

- Generate Files Sequentially with Minimax: Then, go file by file. "Now, using the blueprint, write the app.py file for the Flask backend. It should include the following routes: /login, /register, and /dashboard." You can feed it the content of other related files for context.

- Continuous Integration with Claude: After generating a few files, paste them all into Claude's context window and ask, "Review these files for consistency. Do the imports align? Is the data flow between the frontend and backend logical?"
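For the consistency review, it helps to paste every file with a clear label so Claude can refer to files by name. A minimal sketch follows, assuming the files are small enough to fit in one prompt. The delimiter format and function names are our own choices, not anything either API requires.

```python
from pathlib import Path
from typing import Dict, Iterable

REVIEW_QUESTION = (
    "Review these files for consistency. Do the imports align? "
    "Is the data flow between the frontend and backend logical?"
)


def read_project_files(paths: Iterable[str]) -> Dict[str, str]:
    """Load each file's text, keyed by its path."""
    return {path: Path(path).read_text(encoding="utf-8") for path in paths}


def build_consistency_prompt(files: Dict[str, str]) -> str:
    """Label every file so the reviewer can cite it by name, then ask the question."""
    sections = [
        f"=== FILE: {name} ===\n{source.rstrip()}"
        for name, source in sorted(files.items())
    ]
    return "\n\n".join(sections) + "\n\n" + REVIEW_QUESTION
```

For example, build_consistency_prompt(read_project_files(["app.py", "models.py", "requirements.txt"])) produces a single prompt you can send as the user message. Sorting by path keeps the order stable between review rounds.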

Error Handling and Debugging with the AI Team

Inevitably, you'll encounter errors. Your AI team can help here too.

- Get the Error: When your code fails, copy the full traceback.

- Diagnose with Claude: Paste the traceback and the relevant code to Claude. "Claude, I'm getting this error when running my Flask app. What does it mean, and how can I fix it?" Claude is excellent at explaining errors in plain English.

- Generate the Fix with Minimax: Once you understand the problem, you can ask Minimax to write the corrected code. "The error was a null reference. Please rewrite the get_user_profile function to handle the case where a user is not found in the database."
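The first two steps are easy to script: capture the full traceback programmatically and bundle it with the code that failed. In the sketch below, get_user_profile and its in-memory USERS table are hypothetical stand-ins for a database lookup. They reproduce the "user not found" failure described above. The fixed version shows the kind of change you would ask Minimax for.

```python
import traceback
from typing import Optional

# Hypothetical stand-in for a database table.
USERS = {1: {"name": "Ada"}}


def get_user_profile(user_id: int) -> str:
    user = USERS.get(user_id)  # None when the user does not exist
    return user["name"]        # TypeError: 'NoneType' object is not subscriptable


def get_user_profile_fixed(user_id: int) -> Optional[str]:
    """Handle the missing-user case explicitly instead of crashing."""
    user = USERS.get(user_id)
    if user is None:
        return None  # a Flask route could turn this into a 404 response
    return user["name"]


def diagnosis_prompt(exc: BaseException, code: str) -> str:
    """Format the full traceback plus the relevant code into a question for Claude."""
    tb = "".join(traceback.format_exception(type(exc), exc, exc.__traceback__))
    return (
        "I'm getting this error when running my Flask app. "
        "What does it mean, and how can I fix it?\n\n"
        f"Traceback:\n{tb}\nRelevant code:\n{code}"
    )
```

In practice you would call diagnosis_prompt inside an except block, as in try: get_user_profile(42) except TypeError as exc: prompt = diagnosis_prompt(exc, source_of_the_function).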

Prompt Engineering Tips for Minimax + Claude

To get the best results, tailor your prompts to each model’s strengths.

For Minimax M2:

- Use clear, directive language

- Specify language and framework explicitly

- Include context about your cloud environment (e.g., “Assume IAM roles are already configured”)

Example:

“Generate a Python Flask route that accepts a file upload and stores it in Alibaba Cloud OSS. Use oss2 SDK. Do not include secret keys.”

For Claude Code:

- Ask for structured feedback (e.g., “List vulnerabilities in bullet points”)

- Request alternative implementations

- Specify compliance standards (e.g., “Check against OWASP Top 10”)

Example:

“Review this code for compliance with NIST SP 800-53 security controls. Focus on authentication, logging, and data integrity.”
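If you send these kinds of prompts often, small templates keep them consistent. The sketch below simply encodes the tips above as string builders. The field labels and function names are hypothetical, and neither model requires this exact layout.

```python
from typing import List, Optional


def minimax_prompt(task: str, language: str, framework: str,
                   environment: Optional[str] = None) -> str:
    """Directive prompt: explicit language, framework, and environment context."""
    lines = [f"Language: {language}", f"Framework/SDK: {framework}"]
    if environment:
        lines.append(f"Environment: {environment}")
    lines += [f"Task: {task}", "Do not include secret keys."]
    return "\n".join(lines)


def claude_review_prompt(code: str, standard: str, focus: List[str]) -> str:
    """Review prompt: named standard, focus areas, and structured bullet-point output."""
    return (
        f"Review this code for compliance with {standard}. "
        f"Focus on {', '.join(focus)}. "
        "List vulnerabilities as bullet points and suggest an alternative "
        "implementation for each.\n\n" + code
    )
```

For example, minimax_prompt("Generate a Flask route that accepts a file upload and stores it in Alibaba Cloud OSS.", "Python", "Flask, oss2 SDK", "Assume IAM roles are already configured.") reproduces the Minimax example above as a structured prompt.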

Beyond the Code: Other Powerful Use Cases

This Minimax M2 with Claude Code synergy isn't just for writing new applications from scratch. Here are a few other ways you can leverage this combo:

- Code Translation: Use Claude to understand the logic of a complex Perl script, and then use Minimax to translate it into modern Python.

- Documentation Generation: Feed a complex function from your codebase to Minimax and ask it to write docstrings. Then, give a whole module to Claude and ask it to write a comprehensive README.md file.

- Test Case Generation: This is a killer feature. Give your function code to Minimax and prompt it: "Generate comprehensive unit tests for this function using Python's pytest framework. Cover edge cases and invalid inputs."

- Performance Optimization: Ask Claude to analyze a slow piece of code and identify bottlenecks. Then, prompt Minimax with: "Rewrite the following function to be more efficient. Focus on algorithmic improvement. The bottleneck is the nested loop."

Conclusion: The Future of Hybrid AI Coding

And there you have it! We've journeyed from understanding the why behind combining Minimax M2 and Claude, all the way to building a functional Python script that orchestrates them into a cohesive, powerful coding partner.

We've seen how to:

- Set up our environment and API keys securely.

- Use Apidog to interact with and understand the APIs visually before writing any code (a huge time-saver).

- Build Python clients for both AI services.

- Create a practical, conversational workflow where these models play to their strengths.

- Apply advanced techniques like prompt engineering and multi-file project management.

The key takeaway is that the future of development is not about AI replacing developers; it's about developers who use AI replacing those who don't. By learning to effectively orchestrate these powerful tools, you're not just writing code faster; you're solving more complex problems, learning best practices on the fly, and elevating the entire quality of your work.

So, what will you build with your new AI dream team? The possibilities are truly endless. Happy coding!