Are you eager to harness the power of the new Llama 3.2 API? If so, you’re in the right place! This guide will walk you through everything you need to know about using the Llama 3.2 API effectively. But before we dive in, let me give you a quick tip: download Apidog for free! This powerful API development tool will make working with Llama 3.2 even easier. Now, let’s get started!

What is Llama 3.2?

Llama 3.2 is an advanced language model designed to assist developers in creating applications that require natural language understanding and generation. It offers enhanced capabilities over previous versions, making it an excellent choice for anyone looking to implement AI-driven features in their projects.

Why Use the Llama 3.2 API?

Using the Llama 3.2 API provides several advantages. First and foremost, it allows you to integrate powerful language processing features without needing to build your own model from scratch. You can generate text, understand context, and even carry on conversations—all with just a few API calls. Additionally, its user-friendly documentation and community support make it accessible for developers of all skill levels.

Getting Started with Llama 3.2 API

Before you can start using the Llama 3.2 API, you’ll need to set up a few things. Here’s a step-by-step guide:

Step 1: Sign Up and Get Your API Key

First, you’ll need to sign up for access to the Llama 3.2 API. You can do so in a matter of seconds from Llama’s API page.

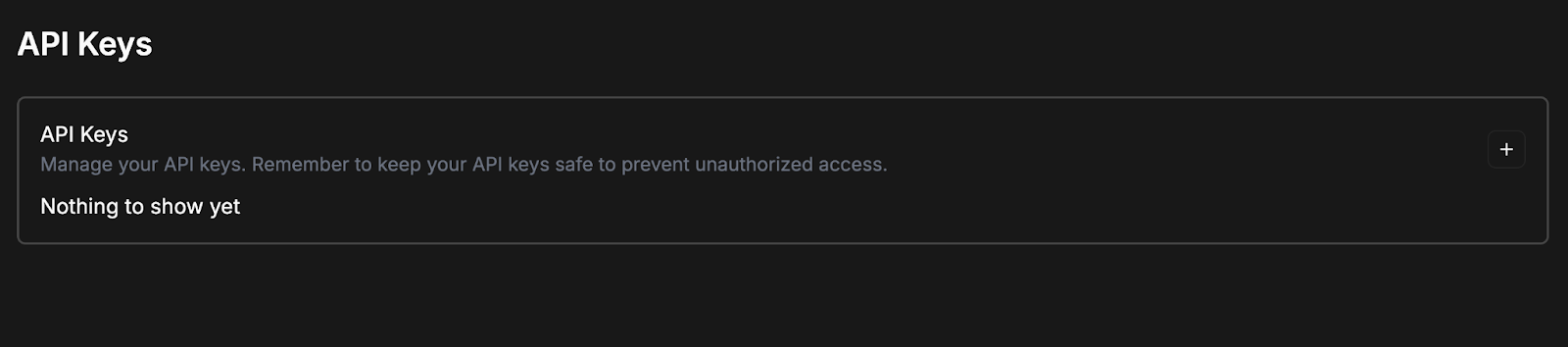

As soon as you’re logged in, you should see a screen that prompts you to create an API token.

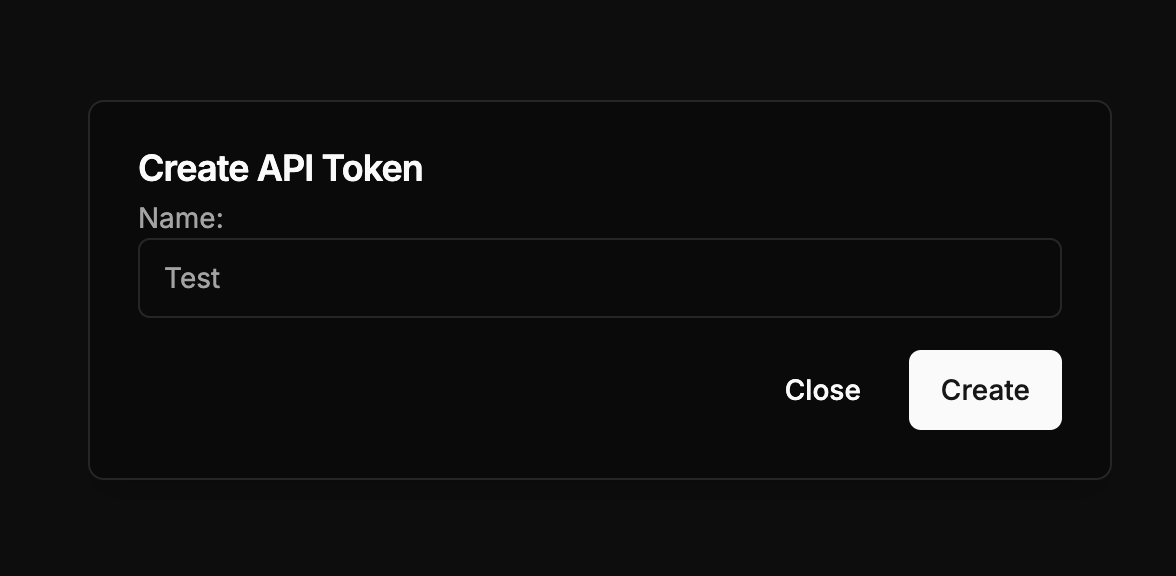

Go ahead and click on the + button, give your API token a name, and then click “Create.”

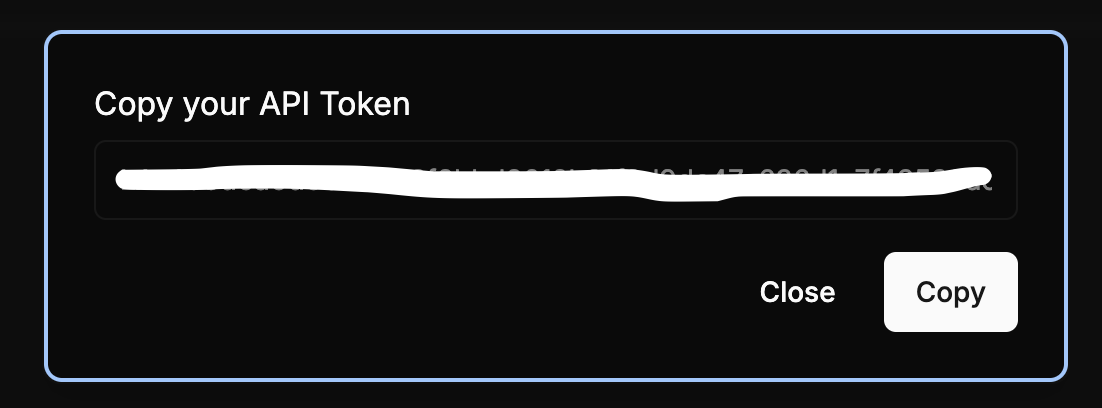

Your API token should now be auto-generated. You should copy and store it in a secure place to prevent unauthorized access.

Once the API token is created, you can copy it, rename it, or delete it. You can also create additional tokens by following the steps outlined above.
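One common way to keep the token out of your source code is to read it from an environment variable. Here is a minimal sketch of that idea. The variable name `LLAMA_API_TOKEN` and the helper `load_api_token` are hypothetical names chosen for illustration and are not part of the Llama 3.2 API.

```python
import os


def load_api_token(env_var: str = "LLAMA_API_TOKEN") -> str:
    """Read the API token from an environment variable.

    The variable name is an assumption made for this example.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your API token."
        )
    return token
```

With this in place, you would export the variable in your shell once and call `load_api_token()` wherever the token is needed, so it never ends up in version control.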

Step 2: Install Apidog

As I mentioned earlier, Apidog can be a game-changer for your API development process. Download it for free, and you’ll gain access to a variety of features that streamline API testing and documentation. It’s particularly useful for working with Llama 3.2, as it can help you format requests and analyze responses effortlessly.

Step 3: Understand the API Structure

Familiarize yourself with the API endpoints provided by Llama 3.2. The key endpoints typically include:

- /generate: For generating text based on prompts.

- /analyze: For understanding context and intent.

- /converse: For carrying on conversations in real time.

Understanding these endpoints is vital for making effective API calls.
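If you plan to call these endpoints from code, it can help to keep the paths in one place. The sketch below does that with Python's standard library. The base URL is a placeholder (this guide does not state one), and `ENDPOINTS` and `endpoint_url` are hypothetical names used only for illustration.

```python
from urllib.parse import urljoin

# Placeholder base URL; replace it with the one your provider documents.
BASE_URL = "https://api.example.com/v1/"

# Endpoint paths as listed in this guide.
ENDPOINTS = {
    "generate": "generate",
    "analyze": "analyze",
    "converse": "converse",
}


def endpoint_url(name: str, base_url: str = BASE_URL) -> str:
    """Return the full URL for a named endpoint."""
    if name not in ENDPOINTS:
        raise ValueError(f"Unknown endpoint: {name!r}")
    return urljoin(base_url, ENDPOINTS[name])
```

Centralizing the paths this way means a change of base URL or API version only has to be made once.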

Making Your First API Call

Now that you’re set up, it’s time to make your first API call. Here’s how to do it step-by-step.

Step 1: Prepare Your Environment

Ensure you have a suitable environment for making HTTP requests. You can use tools like Postman, curl, or even your programming language’s HTTP library.

Step 2: Create Your Request

Here’s a sample request for the /generate endpoint. Using Apidog can simplify this process:

POST /generate

{

"api_key": "your_api_key_here",

"prompt": "Once upon a time in a distant land",

"max_tokens": 100

}
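If you prefer to send this request from code, here is a rough Python sketch that uses only the standard library. The URL is a placeholder, since this guide does not give the API's host name, and the function names `build_generate_request` and `send` are hypothetical. The payload fields mirror the sample request above.

```python
import json
import urllib.request


def build_generate_request(api_key, prompt, max_tokens=100,
                           url="https://api.example.com/generate"):
    """Build (but do not send) a POST request for the /generate endpoint.

    The default URL is a placeholder, not the real API host.
    """
    payload = {"api_key": api_key, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Accept": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping the request construction separate from the network call makes it easy to inspect or log the request before anything is sent.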

Step 3: Send the Request

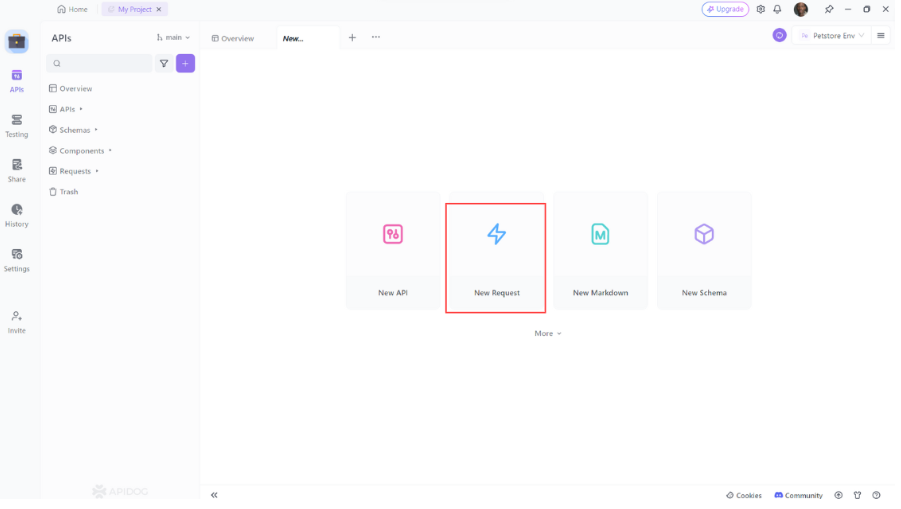

Once you’ve prepared your request, send it. If you’re using Apidog, you can simply follow these steps:

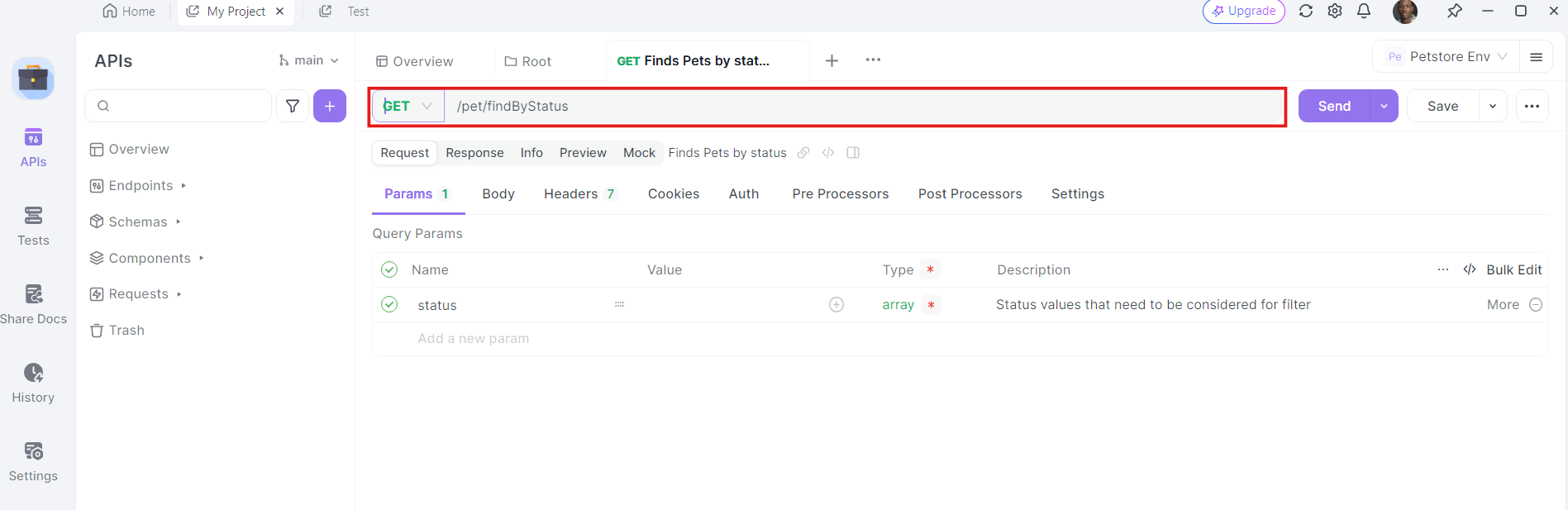

1. Open Apidog: Launch Apidog and create a new request.

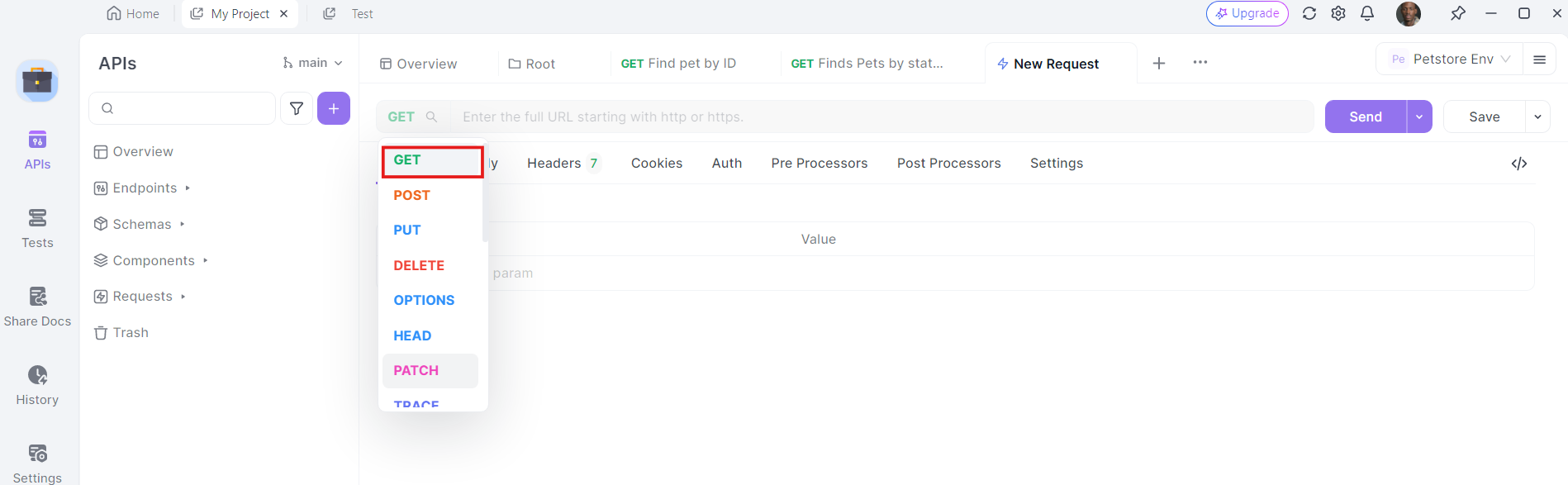

2. Select the HTTP Method: Choose "POST" as the request method, since the /generate request shown above is a POST request.

3. Enter the URL: In the URL field, enter the endpoint you want to send the request to.

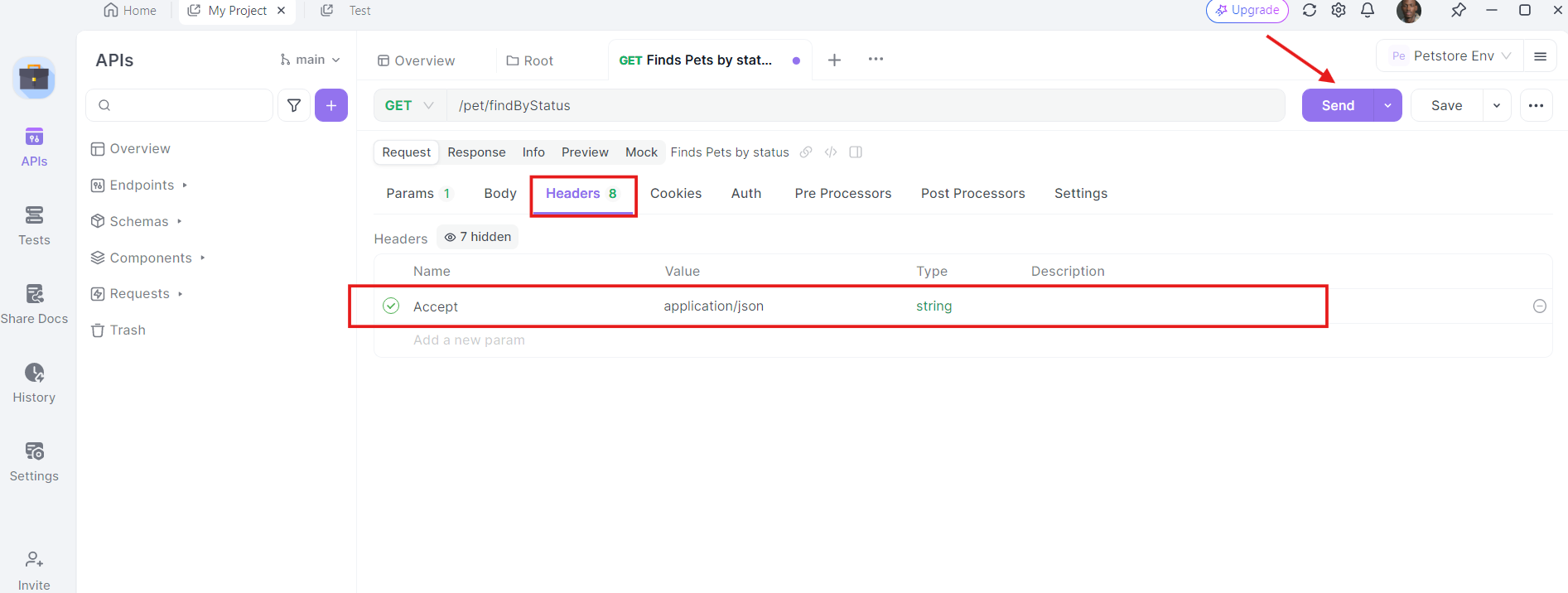

4. Add Headers: Now, it's time to add the necessary headers. Click on the "Headers" tab in Apidog. Here, you can specify any headers required by the API. Common headers include Authorization, Accept, and User-Agent.

For example:

- Authorization: Bearer YOUR_ACCESS_TOKEN

- Accept: application/json

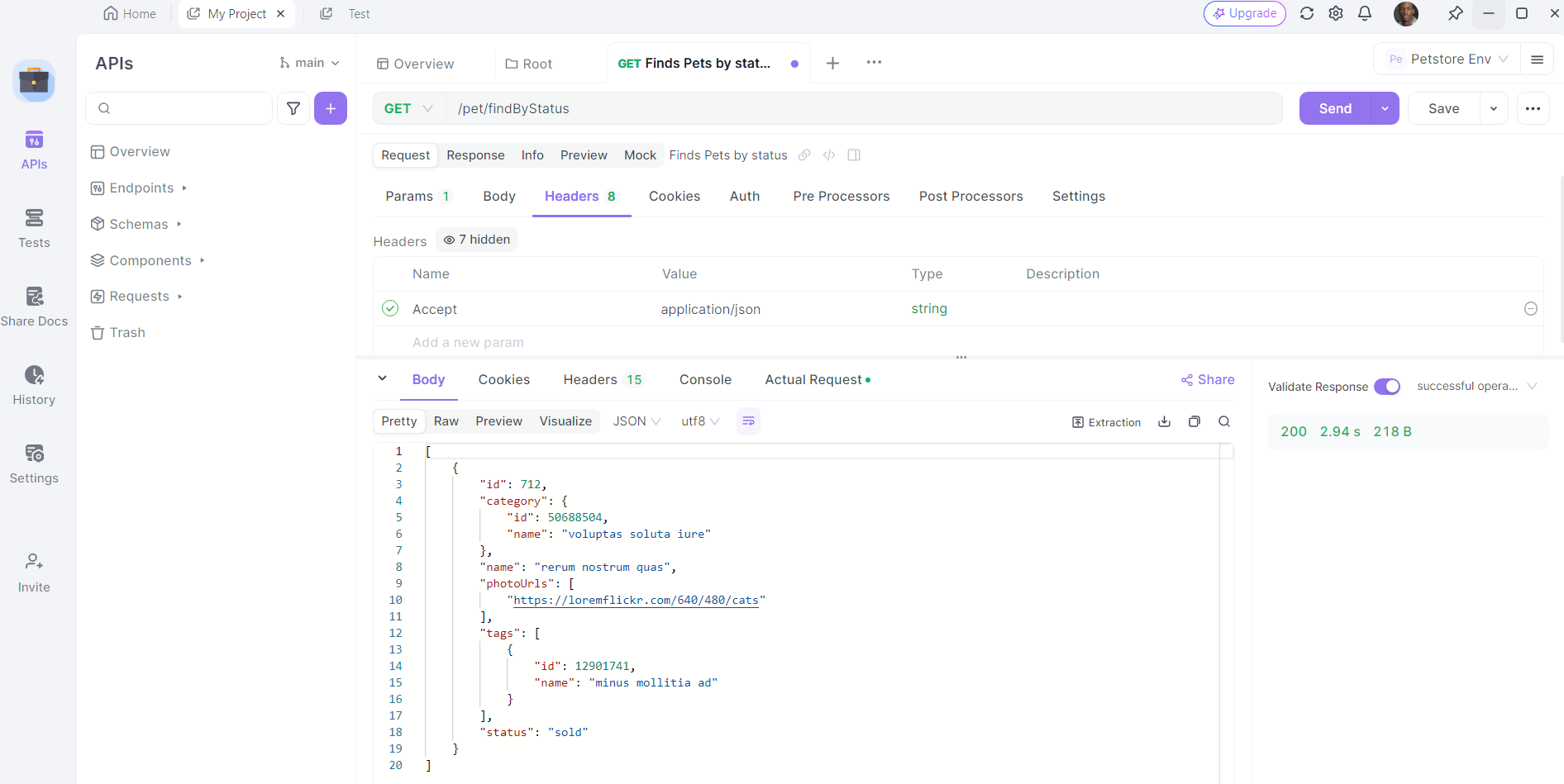

5. Send the Request and Inspect the Response: With the URL, request body, and headers in place, you can now send the API request. Click the "Send" button and Apidog will execute the request. You'll see the response displayed in the response section.
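The same headers can be assembled in code. The helper below is a small sketch with a hypothetical name, `build_headers`. Note that the earlier sample request passes the key in the JSON body, so whether the API expects a bearer token, a body field, or both is something to confirm in your provider's documentation.

```python
def build_headers(token: str) -> dict:
    """Return the example headers from the steps above.

    Assumes bearer-token authentication, which this guide shows only
    as an example.
    """
    if not token:
        raise ValueError("token must not be empty")
    return {
        "Authorization": f"Bearer {token}",
        "Accept": "application/json",
    }
```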

Step 4: Analyze the Response

The response will typically include the generated text along with other relevant metadata. For example:

{

"success": true,

"data": {

"text": "there lived a brave knight who fought against dragons."

}

}

This response indicates that your request was successful and provides the generated text.
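In code, you would usually check the success flag before reading the text. Here is a minimal sketch that assumes the response always has the shape shown above (a `success` flag and a `data.text` field). The function name `extract_text` is hypothetical.

```python
import json


def extract_text(raw_body: str) -> str:
    """Return the generated text from a response shaped like the sample."""
    body = json.loads(raw_body)
    if not body.get("success"):
        # The error format is not documented in this guide, so the whole
        # body is included in the message.
        raise RuntimeError(f"Request was not successful: {body}")
    return body["data"]["text"]


sample = '{"success": true, "data": {"text": "there lived a brave knight who fought against dragons."}}'
print(extract_text(sample))
```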

Advanced Features of Llama 3.2 API

Once you’re comfortable with basic requests, you can explore advanced features.

Dynamic Prompting

One of the coolest features of Llama 3.2 is dynamic prompting. You can craft more nuanced requests by including context or asking specific questions. For example:

{

"api_key": "your_api_key_here",

"prompt": "What are the key benefits of using Llama 3.2 API?",

"max_tokens": 50

}

This approach allows you to tailor the output to your needs.
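To make this repeatable, you could assemble the prompt from separate pieces. The sketch below is one possible layout, not a format the API requires. The labels "Context:" and "Question:" and the function name `build_prompt` are assumptions made for this example.

```python
def build_prompt(question: str, context: str = "", instructions: str = "") -> str:
    """Combine optional instructions and context with a question."""
    parts = []
    if instructions.strip():
        parts.append(instructions.strip())
    if context.strip():
        parts.append("Context:\n" + context.strip())
    parts.append("Question: " + question.strip())
    # Separate the sections with blank lines so they stay readable.
    return "\n\n".join(parts)


payload = {
    "api_key": "your_api_key_here",
    "prompt": build_prompt(
        "What are the key benefits of using Llama 3.2 API?",
        context="The reader is a developer evaluating language model APIs.",
        instructions="Answer in three short bullet points.",
    ),
    "max_tokens": 50,
}
```

Keeping instructions, context, and question separate makes it easier to vary one of them while holding the others fixed.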

Contextual Conversations

With the /converse endpoint, you can create interactive applications that simulate real conversations. This is particularly useful for chatbots or virtual assistants. Here’s how you can implement it:

POST /converse

{

"api_key": "your_api_key_here",

"message": "Hello, what can you do?"

}

The API will respond in a way that feels natural, helping to create engaging user experiences.
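For a chatbot, you will probably want a small wrapper that sends each message and keeps a local transcript. The sketch below assumes the payload shape from the sample request. This guide does not say whether the endpoint remembers earlier turns on the server side, so the history here is only a local record. The class name `Conversation` and the `send` callable are hypothetical.

```python
from typing import Callable, Dict, List


class Conversation:
    """Send messages to a /converse-style endpoint and record the exchange."""

    def __init__(self, api_key: str, send: Callable[[Dict], str]):
        self.api_key = api_key
        # `send` takes the request payload and returns the reply text.
        # It is injected so the transport (HTTP client, mock, ...) can vary.
        self.send = send
        self.history: List[Dict[str, str]] = []

    def say(self, message: str) -> str:
        payload = {"api_key": self.api_key, "message": message}
        reply = self.send(payload)
        self.history.append({"role": "user", "text": message})
        self.history.append({"role": "assistant", "text": reply})
        return reply
```

Passing the transport in as a function also lets you test the conversation flow without touching the network.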

Best Practices for Using Llama 3.2 API

To maximize your success with the Llama 3.2 API, keep these best practices in mind:

1. Optimize Your Prompts

The quality of your output often depends on the clarity and specificity of your prompts. Spend time crafting well-thought-out questions or statements to get the best results.

2. Monitor Usage

Keep an eye on your API usage to avoid unexpected charges. Most providers offer dashboards where you can track your usage metrics.

3. Implement Error Handling

API calls can fail for various reasons, such as network issues or exceeded rate limits. Implement robust error handling in your code to manage these situations gracefully.
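One widely used pattern here is to retry transient failures with exponential backoff. The sketch below assumes you make requests with Python's `urllib` and that the API signals rate limiting with HTTP status 429, which is a common convention but not something this guide confirms. The helper name `call_with_retries` is hypothetical.

```python
import time
import urllib.error


def call_with_retries(func, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call func(), retrying transient failures with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except urllib.error.HTTPError as err:
            # Retry only on rate limiting (429) and server errors (5xx).
            retryable = err.code == 429 or 500 <= err.code < 600
            if not retryable or attempt == max_attempts:
                raise
        except (urllib.error.URLError, TimeoutError):
            # Network-level failure; give up after the last attempt.
            if attempt == max_attempts:
                raise
        # Wait 1x, 2x, 4x, ... the base delay before the next attempt.
        sleep(base_delay * 2 ** (attempt - 1))
```

You would wrap the actual API call in a function or lambda and pass it in. Client errors such as a malformed request are raised immediately, because retrying them would not help.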

4. Use Caching Wisely

If you’re making repetitive requests, consider caching responses to improve performance and reduce API calls. This can save both time and resources.
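Here is a minimal in-memory cache sketch that reuses a stored response whenever the exact same payload is sent again. It assumes that a repeated answer is acceptable for identical requests, which may not hold if you want varied generated text. The class name `ResponseCache` and the `fetch` callable are hypothetical.

```python
import hashlib
import json


class ResponseCache:
    """Cache responses in memory, keyed by the request payload."""

    def __init__(self, fetch):
        # `fetch` performs the real API call for a payload.
        self._fetch = fetch
        self._store = {}

    def get(self, payload: dict):
        # Serialize with sorted keys so equal payloads produce the same key.
        serialized = json.dumps(payload, sort_keys=True).encode("utf-8")
        key = hashlib.sha256(serialized).hexdigest()
        if key not in self._store:
            self._store[key] = self._fetch(payload)
        return self._store[key]
```

This cache lives only as long as the process and never expires entries, so a long-running service would likely need a size limit or a time-to-live on top of it.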

Conclusion

Using the Llama 3.2 API opens up a world of possibilities for developers. With its advanced language processing capabilities, you can create applications that understand and generate human-like text. Remember to download Apidog for free to enhance your API development experience. By following the steps outlined in this guide, you’ll be well on your way to integrating Llama 3.2 into your projects.