Want to chat with 100+ large language models (LLMs) like they’re all OpenAI’s API? Whether you’re building a chatbot, automating tasks, or just geeking out, LiteLLM is your ticket to calling LLMs from OpenAI, Anthropic, Ollama, and more, all using the same OpenAI-style format. I dove into LiteLLM to simplify my API calls, and let me tell you—it’s a lifesaver for keeping code clean and flexible. In this beginner’s guide, I’ll show you how to set up LiteLLM, call a local Ollama model and OpenAI’s GPT-4o, and even stream responses, all based on the official docs. Ready to make your AI projects smoother than a sunny afternoon? Let’s get started!

What is LiteLLM? Your LLM API Superpower

LiteLLM is an open-source Python library and proxy server that lets you call over 100 LLM APIs—like OpenAI, Anthropic, Azure, Hugging Face, and local models via Ollama—using the OpenAI Chat Completions format. It standardizes inputs and outputs, handles API keys, and adds goodies like streaming, fallbacks, and cost tracking, so you don’t need to rewrite code for each provider. With 22.7K+ GitHub stars and adoption by companies like Adobe and Lemonade, LiteLLM is a dev favorite. Whether you’re documenting APIs (like with MkDocs) or building AI apps, LiteLLM simplifies your workflow. Let’s set it up and see it in action!

Setting Up Your Environment for LiteLLM

Before we call LLMs with LiteLLM, let’s prep your system. This is beginner-friendly, with each step explained to keep you on track.

1. Check Prerequisites: You’ll need these tools:

- Python: Version 3.8 or higher. Run

python --versionin your terminal. If it’s missing or too old, grab it from python.org. Python runs LiteLLM’s scripts. - pip: Python’s package manager, included with Python 3.4+. Verify with

pip --version. If absent, downloadget-pip.pyand runpython get-pip.py. - Ollama: For local models. Download from ollama.com and verify with

ollama --version(e.g., 0.1.44). We’ll use it for a local LLM test.

Missing anything? Install it now to keep things smooth.

2. Create a Project Folder: Let’s stay organized:

mkdir litellm-api-test

cd litellm-api-test

This folder will hold your LiteLLM project, and cd gets you ready.

3. Set Up a Virtual Environment: Avoid package conflicts with a Python virtual environment:

python -m venv venv

Activate it:

- Mac/Linux:

source venv/bin/activate - Windows:

venv\Scripts\activate

Seeing (venv) in your terminal means you’re in a clean environment, isolating LiteLLM’s dependencies.

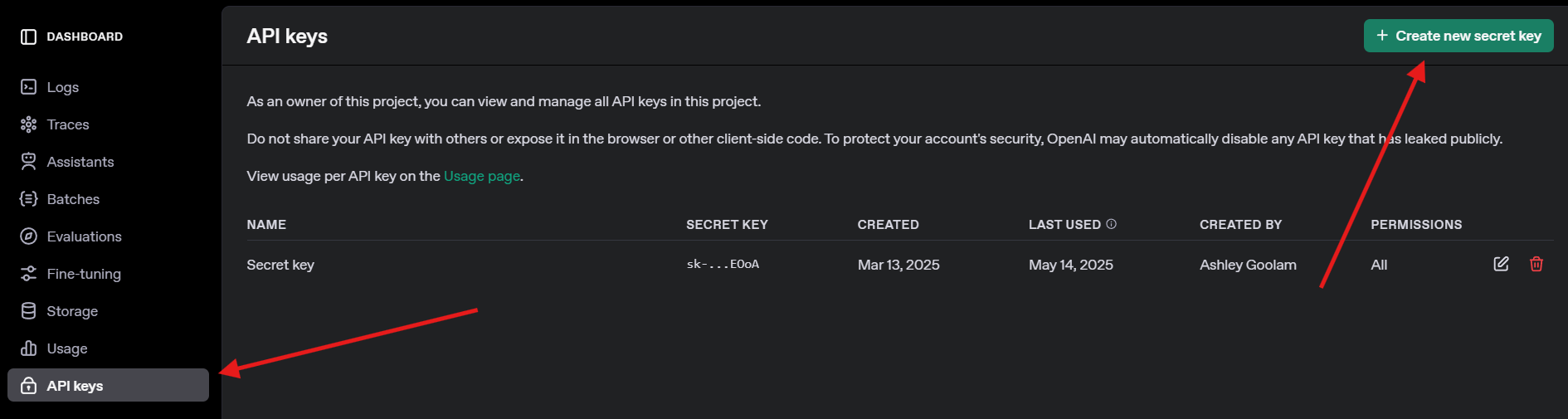

4. Get an OpenAI API Key: For the GPT-4o test, sign up at openai.com, navigate to API keys, and create a key. Save it securely—you’ll need it later.

Installing LiteLLM and Ollama

Now, let’s install LiteLLM and set up Ollama for local models. This is quick and sets the stage for our API calls.

1. Install LiteLLM: In your activated virtual environment, run:

pip install litellm openai

This installs LiteLLM and the OpenAI SDK (required for compatibility). It pulls dependencies like pydantic and httpx.

2. Verify LiteLLM: Check the installation:

python -c "import litellm; print(litellm.__version__)"

Expect a version like 1.40.14 or newer. If it fails, update pip (pip install --upgrade pip).

3. Set Up Ollama: Ensure Ollama is running and pull a lightweight model like Llama 3 (8B):

ollama pull llama3

This downloads ~4.7GB, so grab a snack if your connection’s slow. Verify with ollama list to see llama3:latest. Ollama hosts local models for LiteLLM to call.

Calling LLMs with LiteLLM: OpenAI and Ollama Examples

Let’s get to the fun part—calling LLMs! We’ll create a Python script to call OpenAI’s GPT-4o and a local Llama 3 model via Ollama, both using LiteLLM’s OpenAI-compatible format. We’ll also try streaming for real-time responses.

1. Create a Test Script: In your litellm-api-test folder, create test_llm.py with this code:

from litellm import completion

import os

# Set environment variables

os.environ["OPENAI_API_KEY"] = "your-openai-api-key" # Replace with your key

os.environ["OLLAMA_API_BASE"] = "http://localhost:11434" # Default Ollama endpoint

# Messages for the LLM

messages = [{"content": "Write a short poem about the moon", "role": "user"}]

# Call OpenAI GPT-4o

print("Calling GPT-4o...")

gpt_response = completion(

model="openai/gpt-4o",

messages=messages,

max_tokens=50

)

print("GPT-4o Response:", gpt_response.choices[0].message.content)

# Call Ollama Llama 3

print("\nCalling Ollama Llama 3...")

ollama_response = completion(

model="ollama/llama3",

messages=messages,

max_tokens=50,

api_base="http://localhost:11434"

)

print("Llama 3 Response:", ollama_response.choices[0].message.content)

# Stream Ollama Llama 3 response

print("\nStreaming Ollama Llama 3...")

stream_response = completion(

model="ollama/llama3",

messages=messages,

stream=True,

api_base="http://localhost:11434"

)

print("Streamed Llama 3 Response:")

for chunk in stream_response:

if chunk.choices[0].delta.content:

print(chunk.choices[0].delta.content, end="", flush=True)

print() # Newline after streaming

This script:

- Sets up API keys and Ollama’s endpoint.

- Defines a prompt (“Write a short poem about the moon”).

- Calls GPT-4o and Llama 3 with LiteLLM’s

completionfunction. - Streams Llama 3’s response for real-time output.

2. Replace the API Key: Update os.environ["OPENAI_API_KEY"] with your actual OpenAI key. If you don’t have one, skip the GPT-4o call and focus on Ollama.

3. Ensure Ollama is Running: Start Ollama in a separate terminal:

ollama serve

This runs Ollama at http://localhost:11434. Keep it open for the Llama 3 calls.

4. Run the Script: In your virtual environment, execute:

python test_llm.py

- When I ran this, GPT-4o returned a polished poem like:

>> The moon’s soft glow, a silver dream, lights paths where quiet shadows gleam.

- Llama 3 gave a simpler but charming version, like:

>> Moon so bright in the night sky, glowing soft as clouds float by.

The streamed response printed word-by-word, feeling like the LLM was typing live. If it fails, check that Ollama is running, your OpenAI key is valid, or port 11434 is open. Debug logs are in ~/.litellm/logs.

Adding Observability with LiteLLM Callbacks

Want to track your LLM calls like a pro? LiteLLM supports callbacks to log inputs, outputs, and costs to tools like Langfuse or MLflow. Let’s add a simple callback to log costs.

Update the Script: Modify test_llm.py to include a cost-tracking callback:

from litellm import completion

import os

# Callback function to track cost

def track_cost_callback(kwargs, completion_response, start_time, end_time):

cost = kwargs.get("response_cost", 0)

print(f"Response cost: ${cost:.4f}")

# Set callback

import litellm

litellm.success_callback = [track_cost_callback]

# Rest of the script (same as above)

os.environ["OPENAI_API_KEY"] = "your-openai-api-key"

os.environ["OLLAMA_API_BASE"] = "http://localhost:11434"

messages = [{"role": "user", "content": "Write a short poem about the moon"}]

print("Calling GPT-4o...")

gpt_response = completion(model="openai/gpt-4o", messages=messages, max_tokens=50)

print("GPT-4o Response:", gpt_response.choices[0].message.content)

# ... (Ollama and streaming calls unchanged)

This logs the cost of each call (e.g., “Response cost: $0.0025” for GPT-4o). Ollama calls are free, so their cost is $0.

Run Again: Execute python test_llm.py. You’ll see cost logs alongside responses, helping you monitor expenses for cloud-based LLMs.

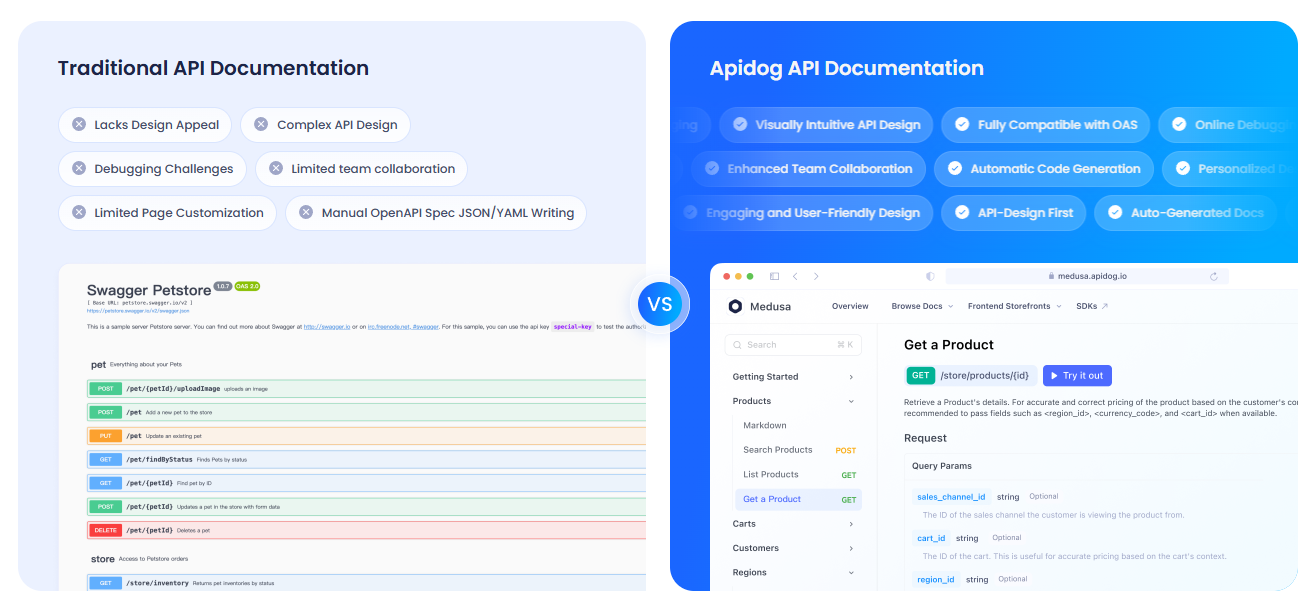

Documenting Your APIs with APIdog

Since you’re working with LLM APIs, you’ll likely want to document them clearly for your team or users. I strongly recommend you check out APIdog. APIdog’s Documentation is a fantastic tool for this! It offers a sleek, interactive platform to design, test, and document APIs, with features like API playgrounds and self-hosting options. Pairing LiteLLM’s API calls with APIdog’s polished docs can take your project to the next level—give it a try!

My Takes on LiteLLM

After playing with LiteLLM, here’s what I love:

- Unified Format: One code structure for OpenAI, Ollama, and beyond—no more API-specific headaches.

- Local Power: Ollama integration lets you run models offline, perfect for privacy or low-budget projects.

- Streaming Fun: Real-time responses make apps feel alive, like chatting with a friend.

- Community Buzz: With 18K+ GitHub stars, LiteLLM is a dev favorite.

Challenges? Setup can be tricky if Ollama or API keys aren’t configured right, but the docs are solid.

Pro Tips for LiteLLM Success

- Debugging: Enable verbose logging with

litellm.set_verbose = Trueto see raw requests and responses. - More Models: Try Anthropic’s Claude or Azure OpenAI by adding their API keys and models (e.g.,

anthropic/claude-3-sonnet-20240229). - Async Calls: Use

litellm.acompletionfor non-blocking calls in FastAPI apps. - Proxy Server: Run LiteLLM as a proxy (

litellm --model gpt-3.5-turbo) for multiple apps to share one endpoint. - Community: Join the LiteLLM Discord or GitHub Discussions for tips and updates.

Wrapping Up: Your LiteLLM Journey Starts Here

You’ve just unlocked the power of LiteLLM to call LLMs like a pro, from OpenAI’s GPT-4o to local Llama 3, all in one clean format! Whether you’re building AI apps or experimenting like a curious coder, LiteLLM makes it easy to switch models, stream responses, and track costs. Try new prompts, add more providers, or set up a proxy server for bigger projects. Share your LiteLLM wins on the LiteLLM GitHub—I’m stoked to see what you create! And don’t forget to check out APIdog for documenting your APIs. Happy coding!