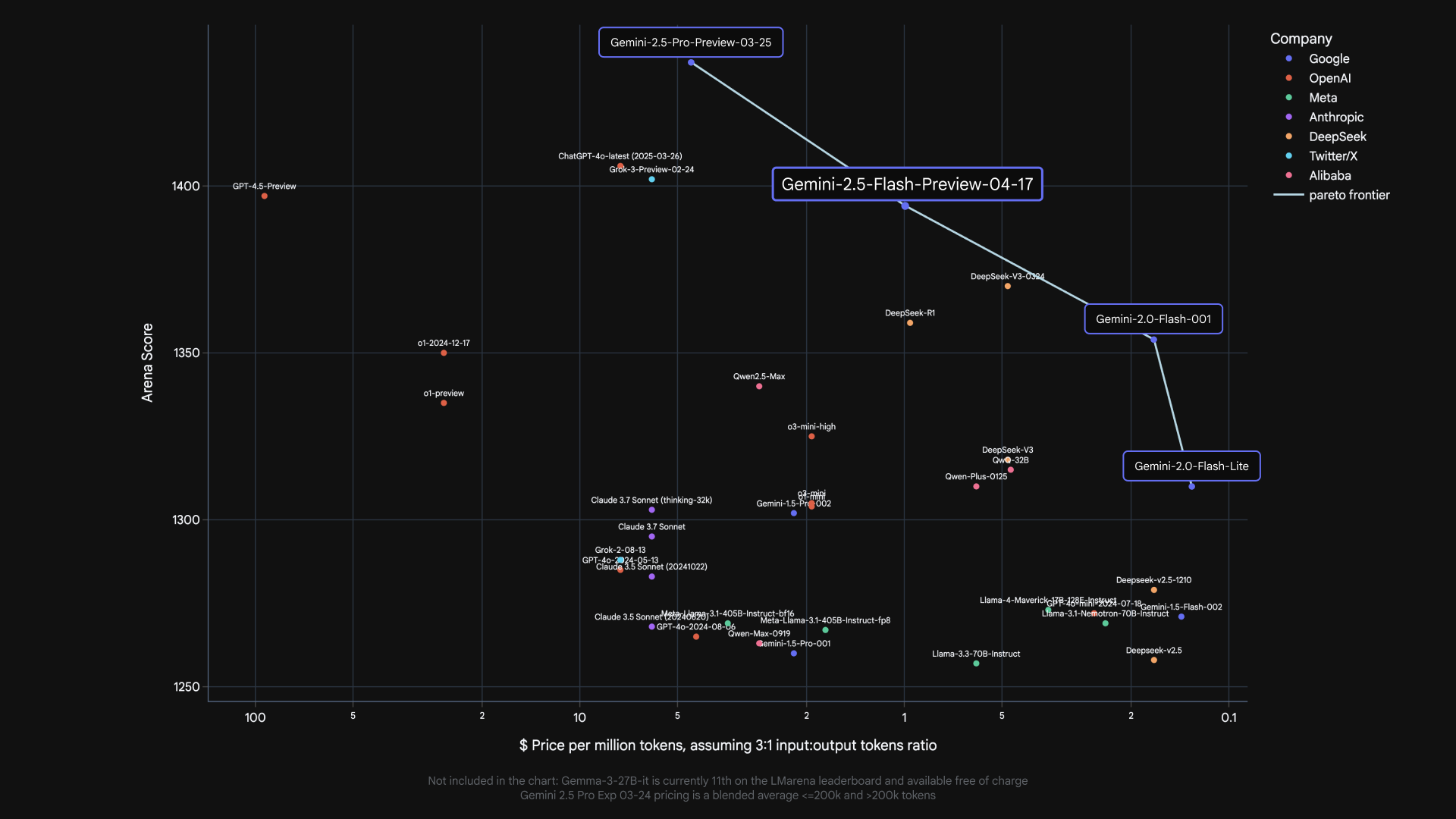

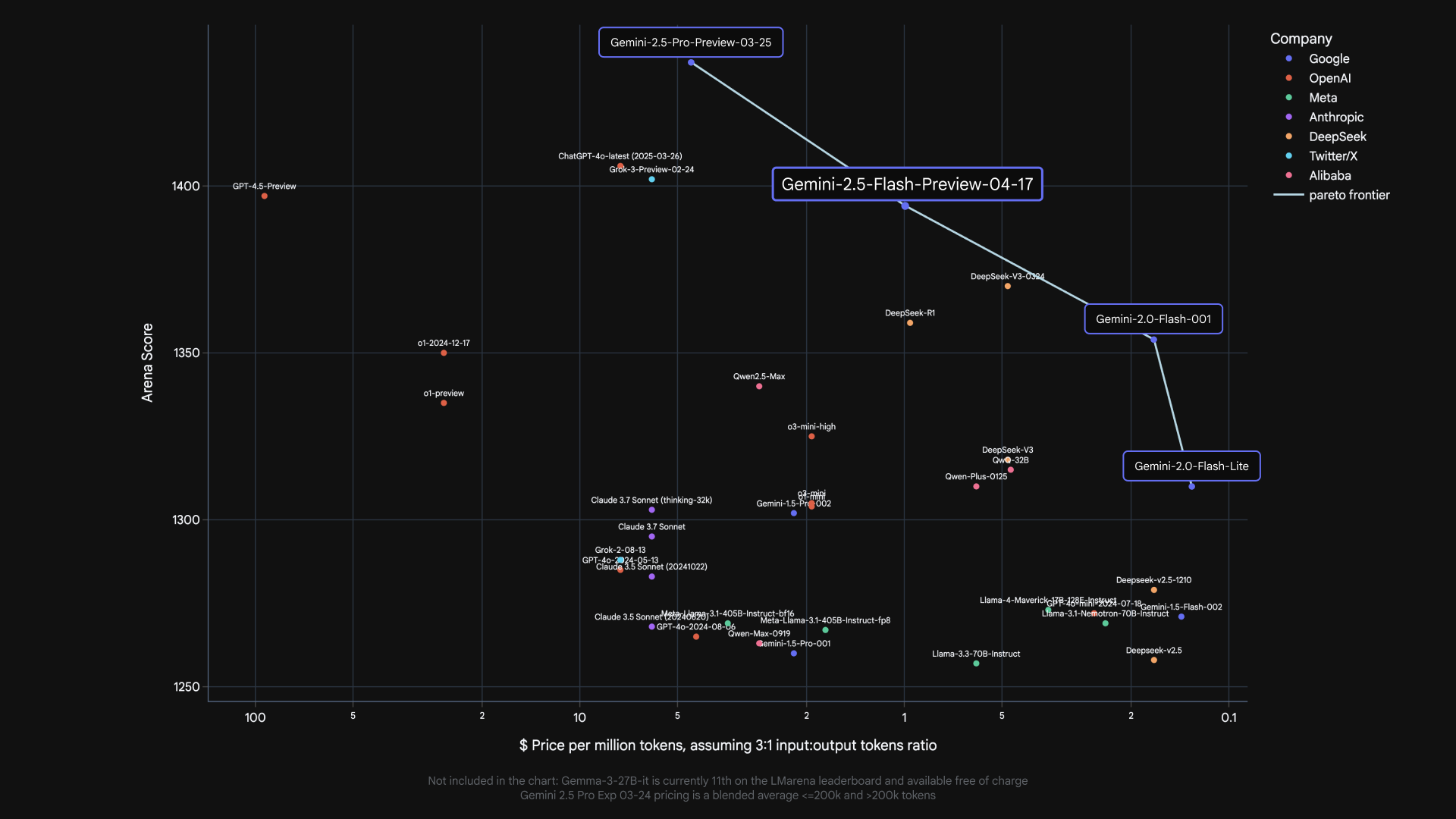

Google's advancements in artificial intelligence continue to accelerate, and the introduction of Gemini 2.5 Flash marks another significant step. This model, available in preview, builds upon the speed and efficiency of its predecessor (2.0 Flash) while integrating powerful new reasoning capabilities. What makes 2.5 Flash particularly compelling for developers is its unique hybrid reasoning system and the introduction of a controllable "thinking budget," allowing fine-tuning of the balance between response quality, latency, and cost.

This article provides a practical guide on how to start using the Google Gemini 2.5 Flash model via its API. We'll cover obtaining the necessary API key, setting up your environment, making API calls with specific configurations for 2.5 Flash, and understanding how students can access advanced Gemini features for free.

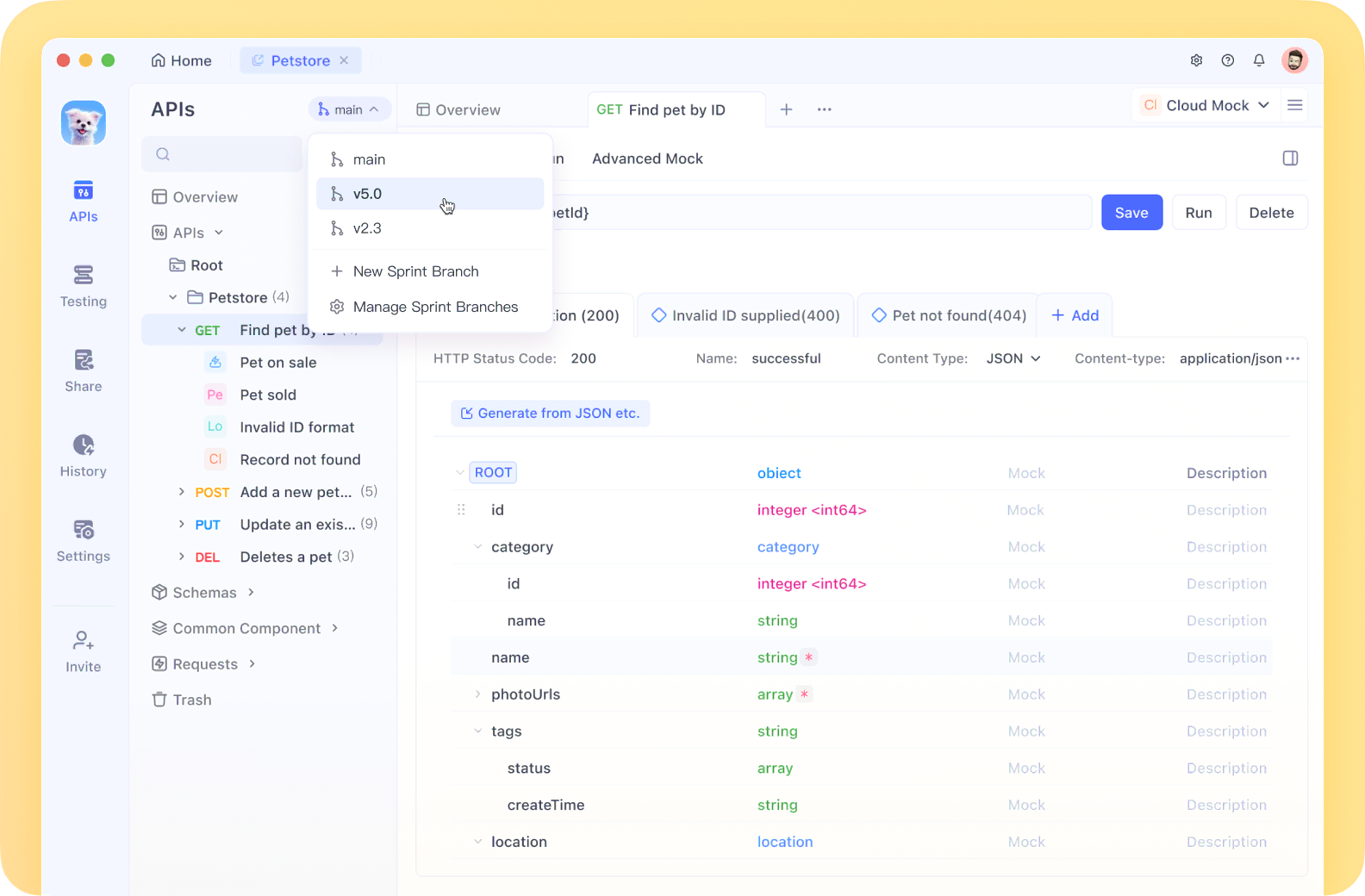

Want an integrated, All-in-One platform for your Developer Team to work together with maximum productivity?

Apidog delivers all your demands, and replaces Postman at a much more affordable price!

Google Gemini 2.5 Flash: the Most Cost-Efficient Thinking Model Yet

Before diving into the API calls, let's briefly recap what makes 2.5 Flash stand out:

Hybrid Reasoning: Unlike models that generate output instantly, 2.5 Flash can perform an internal "thinking" process before responding. This allows it to better understand prompts, break down complex tasks, and plan more accurate, comprehensive answers, especially for multi-step problems.

Controllable Thinking Budget: Developers can set a thinking_budget (in tokens, from 0 to 24,576) via the API. This budget caps the amount of internal reasoning the model performs.

- A budget of

0prioritizes speed and cost, behaving like a faster version of 2.0 Flash. - Higher budgets allow for more reasoning, potentially improving quality on complex tasks but increasing latency and cost.

- The model intelligently uses only the budget needed for the given prompt's complexity.

Cost-Efficiency: It's designed to offer performance comparable to other leading models but at a fraction of the cost, making advanced AI more accessible.

Now, let's get hands-on with the API.

How to Use Google Gemini 2.5 Flash Via API

Step 1: Obtaining Your Gemini API Key

To interact with any Gemini model via the API, you first need an API key. This key authenticates your requests. Here’s how to get one:

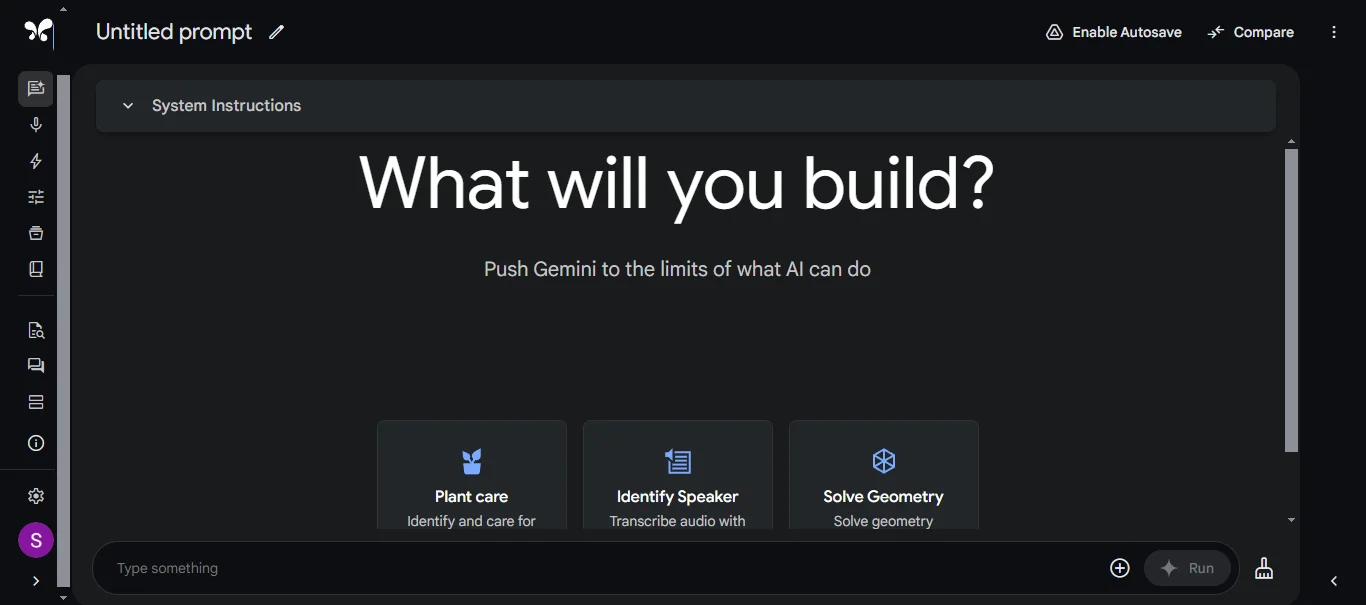

Navigate to Google AI Studio: The primary place to get started and obtain API keys for individual use is Google AI Studio (https://aistudio.google.com/).

Sign In: You'll need to sign in with your Google Account.

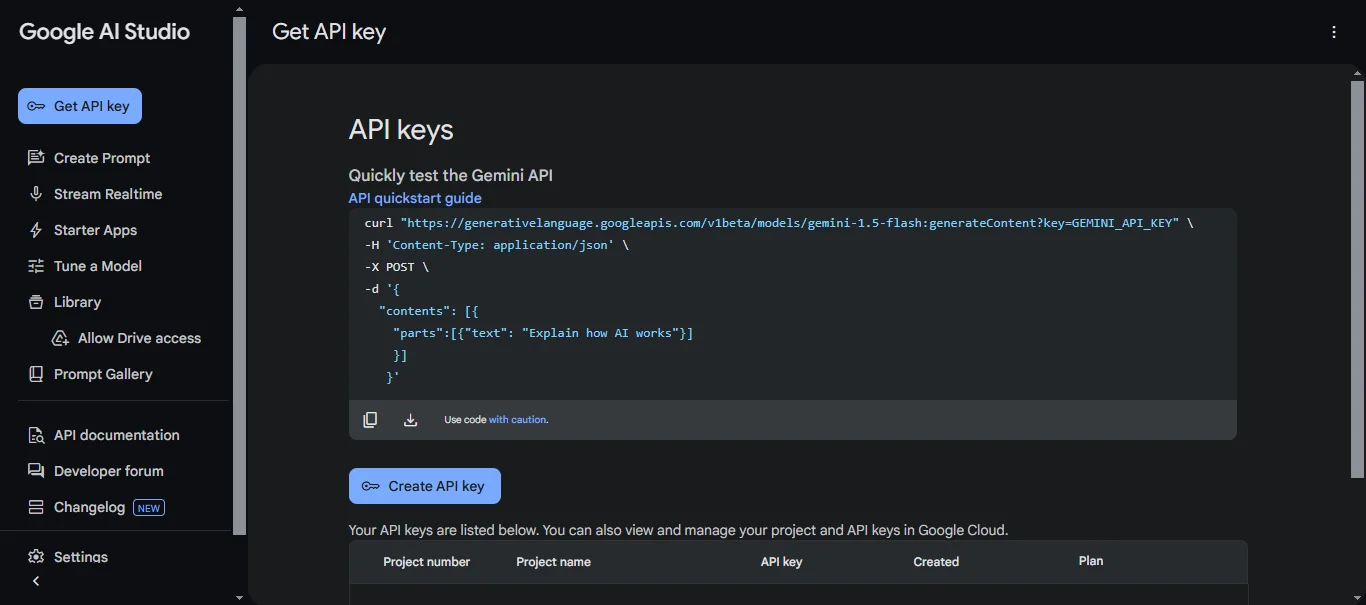

Generate API Key: Once logged in, look for an option like "Get API key" or navigate to the API key management section (the exact location in the UI might evolve). You'll typically find options to create a new API key.

Create Key: Follow the prompts to create a new key. Google AI Studio will generate a unique string of characters – this is your API key.

Secure Your Key: Treat your API key like a password. Do not share it publicly, embed it directly in your code (especially if the code is checked into version control), or expose it in client-side applications. Store it securely, for instance, using environment variables or a dedicated secrets management system.

Note: For enterprise-level usage, deploying models via Google Cloud's Vertex AI platform is often preferred, which involves different authentication mechanisms (like service accounts), but for initial development and smaller projects, an API key from Google AI Studio is the quickest way to start.

Step 2: Setting Up Your Development Environment

With your API key in hand, you need to set up your programming environment. We'll use Python as an example, as Google provides a well-supported client library.

Install the Client Library: Open your terminal or command prompt and install the necessary package using pip:

pip install google-generativeai

Configure Authentication (Securely): The best practice is to make your API key available to your application via an environment variable. How you set an environment variable depends on your operating system:

- Linux/macOS:

export GEMINI_API_KEY="YOUR_API_KEY"

(Replace "YOUR_API_KEY" with the actual key you generated). You might add this line to your shell profile (.bashrc, .zshrc, etc.) for persistence across sessions.

- Windows (Command Prompt):

set GEMINI_API_KEY=YOUR_API_KEY

- Windows (PowerShell):

$env:GEMINI_API_KEY="YOUR_API_KEY"

Then, in your Python code, you can configure the library to automatically pick up the key:

import google.generativeai as genai

import os

# Load the API key from the environment variable

api_key = os.getenv("GEMINI_API_KEY")

if not api_key:

raise ValueError("GEMINI_API_KEY environment variable not set.")

genai.configure(api_key=api_key)

Step 3: Making an API Call to Gemini 2.5 Flash

Now you're ready to make your first API call specifically targeting the Gemini 2.5 Flash model.

Import Libraries and Initialize Model: Start by importing the necessary library (if not already done) and instantiating the GenerativeModel class, specifying the correct model name for the 2.5 Flash preview.

import google.generativeai as genai

import os

# Configure API Key (as shown in Step 2)

api_key = os.getenv("GEMINI_API_KEY")

if not api_key:

raise ValueError("GEMINI_API_KEY environment variable not set.")

genai.configure(api_key=api_key)

# Specify the Gemini 2.5 Flash preview model

# Note: This model name might change after the preview phase.

model_name = "gemini-2.5-flash-preview-04-17"

model = genai.GenerativeModel(model_name=model_name)

Define Your Prompt: Create the text input you want the model to process.

prompt = "Explain the concept of hybrid reasoning in Gemini 2.5 Flash in simple terms."

Configure the Thinking Budget (Crucial for 2.5 Flash): This is where you leverage the unique capabilities of 2.5 Flash. Create a GenerationConfig object and within it, a ThinkingConfig to set the thinking_budget.

# --- Option 1: Prioritize Speed/Cost (Disable Thinking) ---

config_no_thinking = genai.types.GenerationConfig(

thinking_config=genai.types.ThinkingConfig(

thinking_budget=0

)

# You can also add other generation parameters here like temperature, top_p, etc.

# Example: temperature=0.7

)

# --- Option 2: Allow Moderate Reasoning ---

config_moderate_thinking = genai.types.GenerationConfig(

thinking_config=genai.types.ThinkingConfig(

thinking_budget=1024 # Allow up to 1024 tokens for internal thinking

)

)

# --- Option 3: Allow Extensive Reasoning ---

config_high_thinking = genai.types.GenerationConfig(

thinking_config=genai.types.ThinkingConfig(

thinking_budget=8192 # Allow a larger budget for complex tasks

)

)

# --- Option 4: Use Default (Model Decides) ---

# Simply omit the thinking_config or the entire generation_config

# if you want the model's default behavior.

config_default = genai.types.GenerationConfig() # Or just don't pass config later

Generate Content: Call the generate_content method on your model instance, passing the prompt and your chosen configuration.

print(f"--- Generating with NO thinking (budget=0) ---")

try:

response_no_thinking = model.generate_content(

prompt,

generation_config=config_no_thinking

)

print(response_no_thinking.text)

except Exception as e:

print(f"An error occurred: {e}")

print(f"\n--- Generating with MODERATE thinking (budget=1024) ---")

try:

response_moderate_thinking = model.generate_content(

prompt,

generation_config=config_moderate_thinking

)

print(response_moderate_thinking.text)

except Exception as e:

print(f"An error occurred: {e}")

# Example for a more complex prompt potentially benefiting from higher budget:

complex_prompt = """

Write a Python function `evaluate_cells(cells: Dict[str, str]) -> Dict[str, float]`

that computes the values of spreadsheet cells.

Each cell contains:

- A number (e.g., "3")

- Or a formula like "=A1 + B1 * 2" using +, -, *, / and other cells.

Requirements:

- Resolve dependencies between cells.

- Handle operator precedence (*/ before +-).

- Detect cycles and raise ValueError("Cycle detected at <cell>").

- No eval(). Use only built-in libraries.

"""

print(f"\n--- Generating COMPLEX prompt with HIGH thinking (budget=8192) ---")

try:

response_complex = model.generate_content(

complex_prompt,

generation_config=config_high_thinking

)

# For code, you might want to inspect parts or check for specific attributes

print(response_complex.text)

except Exception as e:

print(f"An error occurred: {e}")

Process the Response: The response object contains the generated text (accessible via response.text), along with other potential information like safety ratings or usage metadata (depending on the API version and configuration).

By experimenting with different thinking_budget values for various prompts, you can directly observe its impact on the quality, depth, and latency of the responses, allowing you to optimize for your specific application's needs.

Want an integrated, All-in-One platform for your Developer Team to work together with maximum productivity?

Apidog delivers all your demands, and replaces Postman at a much more affordable price!

How to Use Google Gemini 2.5 Flash for Free

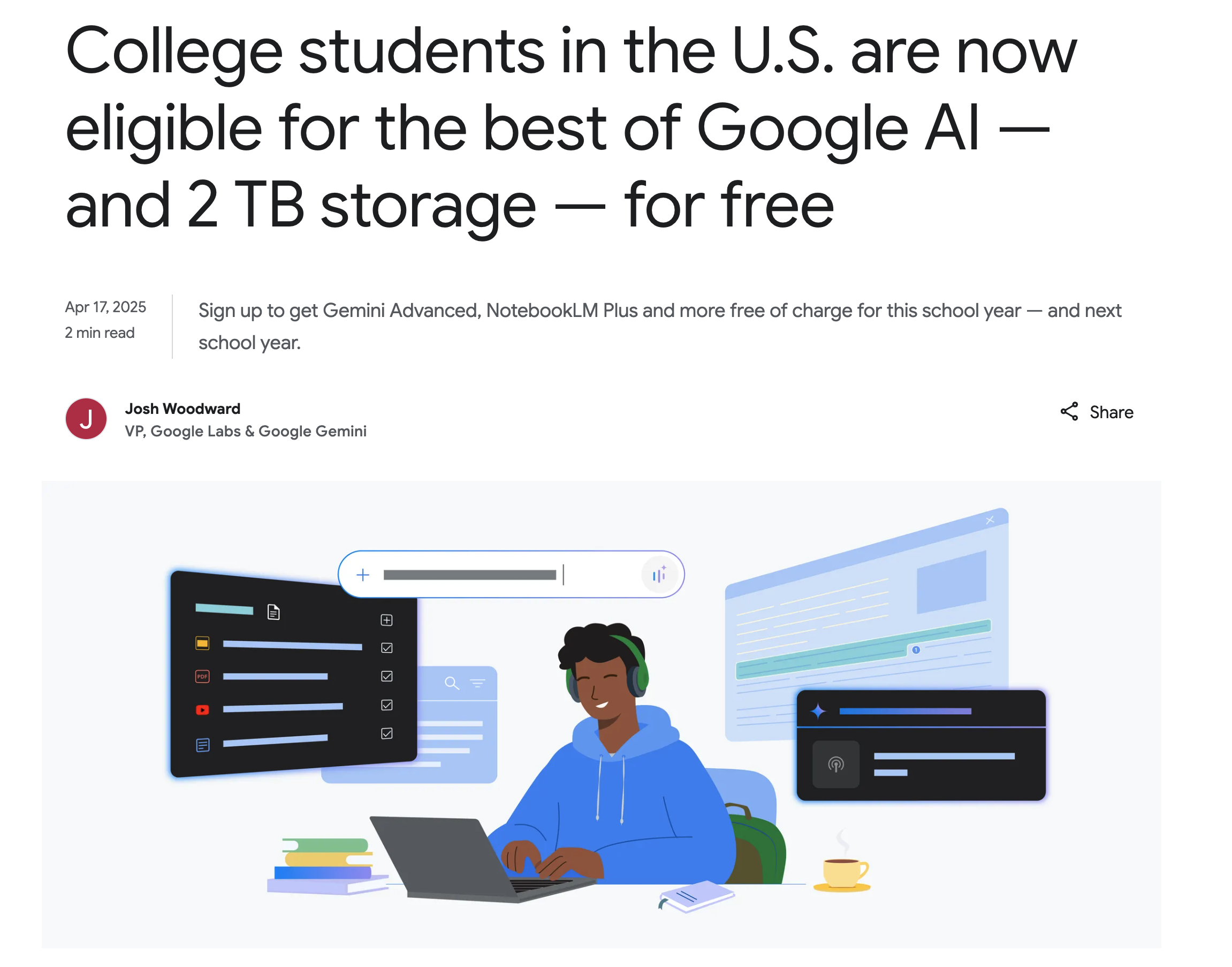

Google has made a significant move to empower students by offering the Google One AI Premium plan for free to eligible college students in the U.S. This plan provides access to a suite of Google's most advanced AI capabilities.

What does the free Google One AI Premium for students include?

- Access to Advanced Gemini Models: Use Google's top-tier models (like Gemini Advanced) within integrated experiences like the Gemini app.

- Gemini in Google Workspace: Get AI assistance directly within Google Docs, Sheets, Slides, and Gmail for writing, analysis, presentation generation, and more.

- NotebookLM Plus: An AI-powered research tool that helps understand and synthesize information from uploaded documents (like course materials), create study aids, and discover new sources.

- Creative Tools: Experiment with features often found in Google Labs, such as Veo 2 for text-to-video generation and Whisk for image mixing.

- Gemini Live & Deep Research: Engage in free-flowing conversations and conduct in-depth research on complex topics within the Gemini interface.

- 2 TB Cloud Storage: Significant storage across Google Drive, Photos, and Gmail.

How does this relate to the Gemini 2.5 Flash API?

It's important to understand the distinction: The free Google One AI Premium plan for students primarily grants access to advanced AI features within Google's own applications and services (Gemini app, Workspace, NotebookLM). These features are powered by advanced models, which could include Gemini 2.5 Flash or similar tiers under the hood.

However, this student offer does not typically translate into free API credits for making direct programmatic calls to the Gemini API (like the generate_content examples shown above). API usage generally follows standard pricing tiers based on token consumption, although free tiers or introductory credits might be available separately through Google AI Studio or Google Cloud.

The value for students lies in leveraging the capabilities of models like 2.5 Flash through user-friendly interfaces integrated into tools they already use for learning and productivity, without needing to pay the Google One subscription fee or manage API billing directly for that usage.

Conclusion

Gemini 2.5 Flash offers a compelling blend of speed, cost-effectiveness, and sophisticated reasoning capabilities, made even more versatile by its controllable thinking budget. Getting started with the API involves obtaining a key from Google AI Studio, setting up your environment with the client library, and making calls using the specific model name and desired thinking_config. By carefully choosing the thinking budget, developers can tailor the model's behavior to their exact needs.

While the free Google One AI Premium plan for students provides access to experiences powered by these advanced models within Google's ecosystem, direct API usage generally remains a separate, billed service. Nonetheless, the availability of 2.5 Flash in preview via the API opens up exciting possibilities for building the next generation of intelligent applications. As the model is still in preview, developers should keep an eye on Google's documentation for updates on model names, features, and general availability.