Developers constantly seek powerful AI models without incurring high costs. Google addresses this need by offering free access to advanced models, including preview versions like Gemini 3 Pro.

Google maintains a generous free tier for the Gemini API via Google AI Studio. This setup allows you to generate an API key and start calling models immediately. Furthermore, as of November 2025, Google provides access to Gemini 3 Pro preview models at no charge, though with rate limits suitable for prototyping, testing, and small-scale applications.

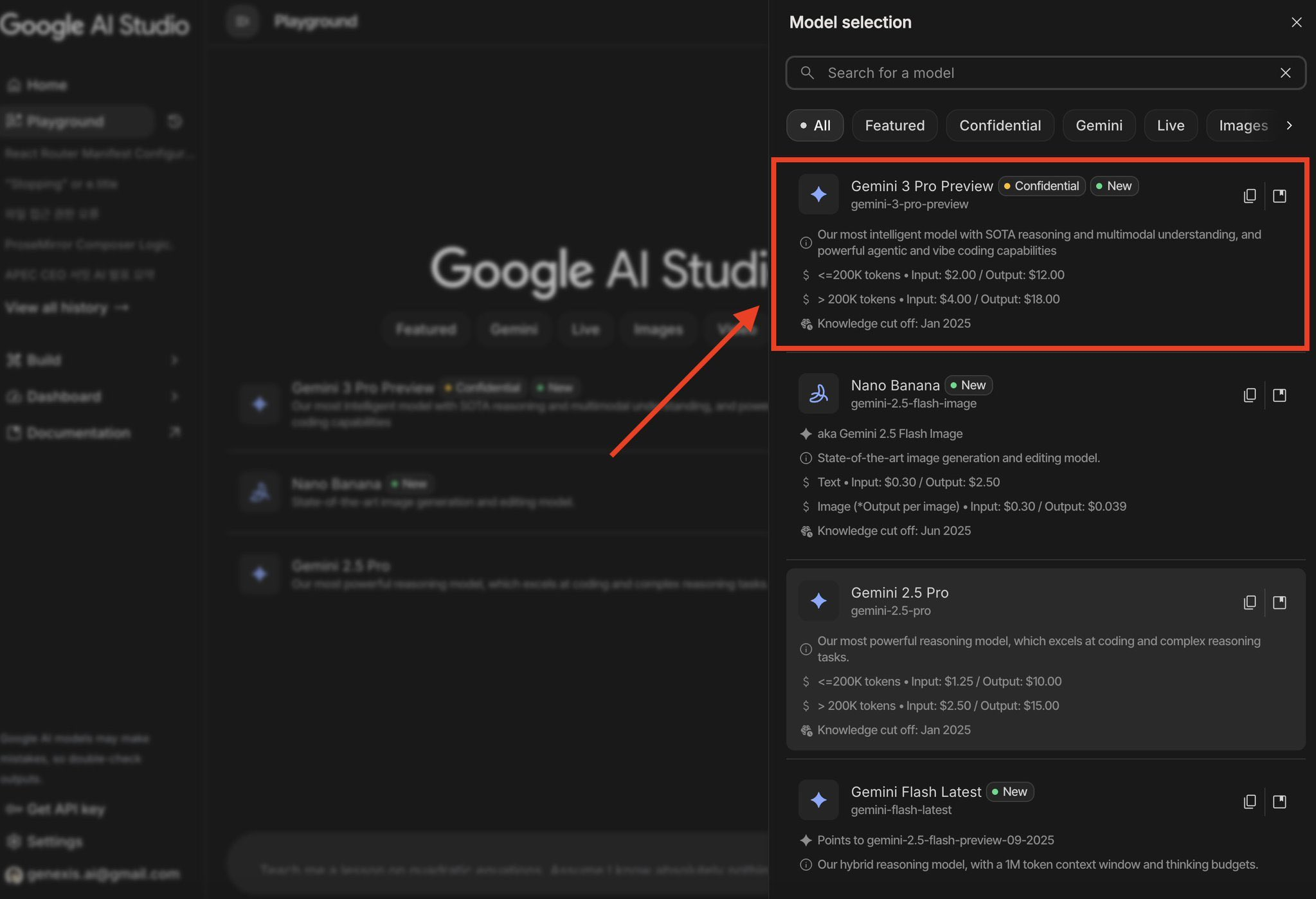

Understanding the Gemini 3 API Pricing

At the time of writing, paid-tier pricing for Gemini 3 Pro is listed in USD per 1 million tokens:

- Prompts up to 200k tokens: $2.00 input, $12.00 output

- Prompts over 200k tokens: $4.00 input, $18.00 output
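To make the two tiers concrete, the sketch below estimates the cost of a single request. It assumes the listed prices are USD per 1 million tokens and that the higher rate applies once the prompt exceeds 200,000 tokens. The function name `estimate_cost_usd` is illustrative and not part of any Google SDK.

```python
# Rough cost estimate for one Gemini 3 Pro request (illustrative sketch).
# Assumptions: prices are USD per 1,000,000 tokens, and the tier is chosen
# by prompt (input) size, with exactly 200,000 tokens billed at the lower rate.

TIER_THRESHOLD_TOKENS = 200_000

# (input price, output price) in USD per 1M tokens, taken from the list above.
STANDARD_RATES = (2.00, 12.00)
LONG_CONTEXT_RATES = (4.00, 18.00)


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for a single request."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if input_tokens > TIER_THRESHOLD_TOKENS:
        in_rate, out_rate = LONG_CONTEXT_RATES
    else:
        in_rate, out_rate = STANDARD_RATES
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


if __name__ == "__main__":
    # 10,000 prompt tokens and 2,000 response tokens at the standard rate.
    print(f"${estimate_cost_usd(10_000, 2_000):.4f}")  # prints $0.0440
```

On the free tier none of this is billed; the estimate only matters once Cloud Billing is enabled.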

Gemini 3.0 has a knowledge cutoff of January 2025.

To use the Gemini 3.0 API for free, it helps to know that Google structures the Gemini API around two primary tiers: free and pay-as-you-go. The free tier works without activating billing, so requests cost nothing until you explicitly enable Cloud Billing for higher quotas.

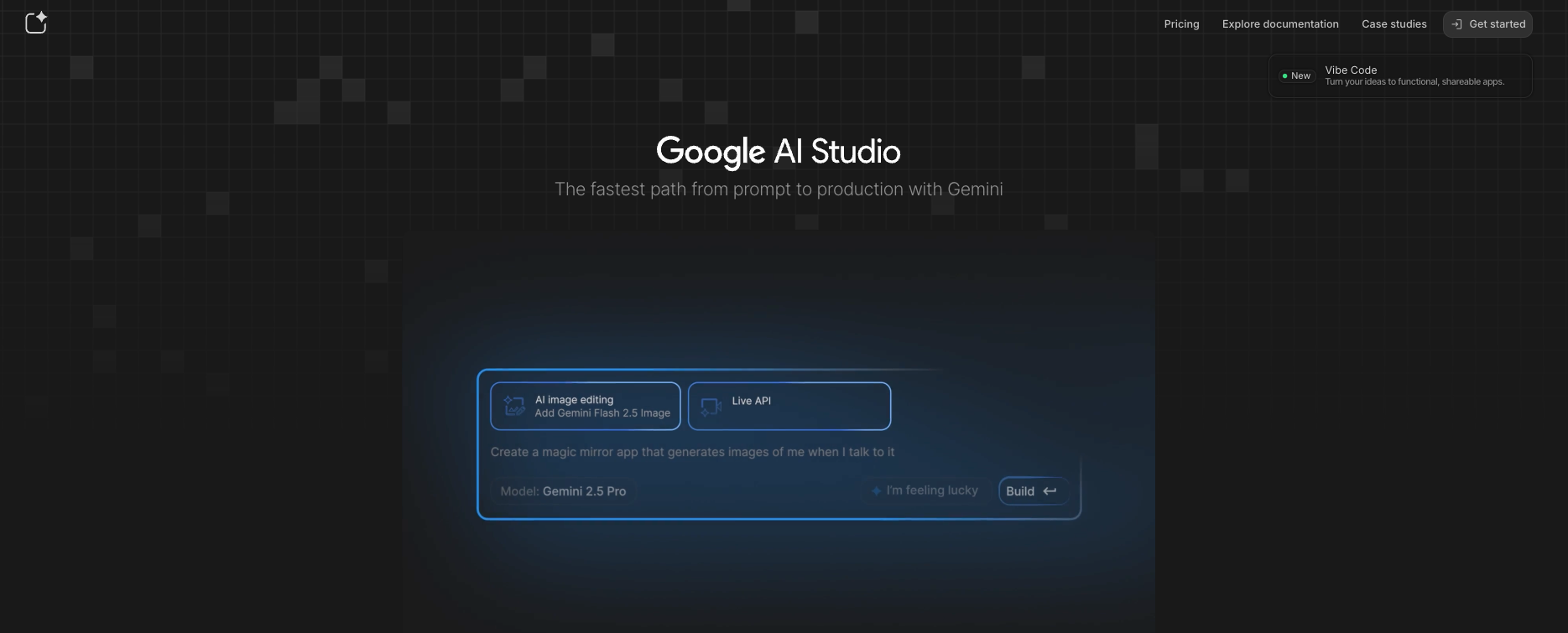

Google AI Studio functions as the primary interface for free access. Here, you prototype prompts, generate API keys, and call models directly. Preview models like Gemini 3 Pro appear automatically in the model selector when available in your region.

Key advantages of the free tier include:

- No credit card requirement

- Access to cutting-edge preview models, including Gemini 3 Pro

- Support for multimodal inputs (text, images, video, audio)

- Function calling, structured outputs, and code execution tools

- Rate limits that reset daily, sufficient for development and small-scale use

However, the free tier enforces quotas to ensure fair usage. Typical limits for Gemini 3 Pro preview models stand at approximately 5-10 requests per minute (RPM), 250,000 tokens per minute (TPM), and 50-100 requests per day (RPD). These values vary by model variant and region, and Google adjusts them periodically.
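One way to stay under a requests-per-minute quota is to throttle on the client side. The following is a minimal sketch of a sliding-window limiter using only the standard library. `RequestsPerMinuteLimiter` is a hypothetical helper, not an SDK class, and the limit of 5 used in the usage note is simply the low end of the approximate range quoted above.

```python
import time
from collections import deque
from typing import Callable, Deque


class RequestsPerMinuteLimiter:
    """Client-side sliding-window limiter (hypothetical helper, not an SDK class)."""

    def __init__(self, max_requests: int, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        if max_requests < 1:
            raise ValueError("max_requests must be at least 1")
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock
        self._sent: Deque[float] = deque()  # timestamps of recent requests

    def seconds_until_allowed(self) -> float:
        """Return 0.0 if a request may be sent now, else the wait in seconds."""
        now = self._clock()
        # Drop timestamps that have aged out of the window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()
        if len(self._sent) < self.max_requests:
            return 0.0
        return self.window_seconds - (now - self._sent[0])

    def acquire(self) -> None:
        """Block until a request slot is free, then record the request."""
        wait = self.seconds_until_allowed()
        while wait > 0:
            time.sleep(wait)
            wait = self.seconds_until_allowed()
        self._sent.append(self._clock())
```

In practice you would create one limiter, for example `RequestsPerMinuteLimiter(max_requests=5)`, and call `acquire()` immediately before each API request. This only smooths out your own traffic; the server-side quota still applies.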

Step-by-Step: Generate Your Gemini 3 API Key

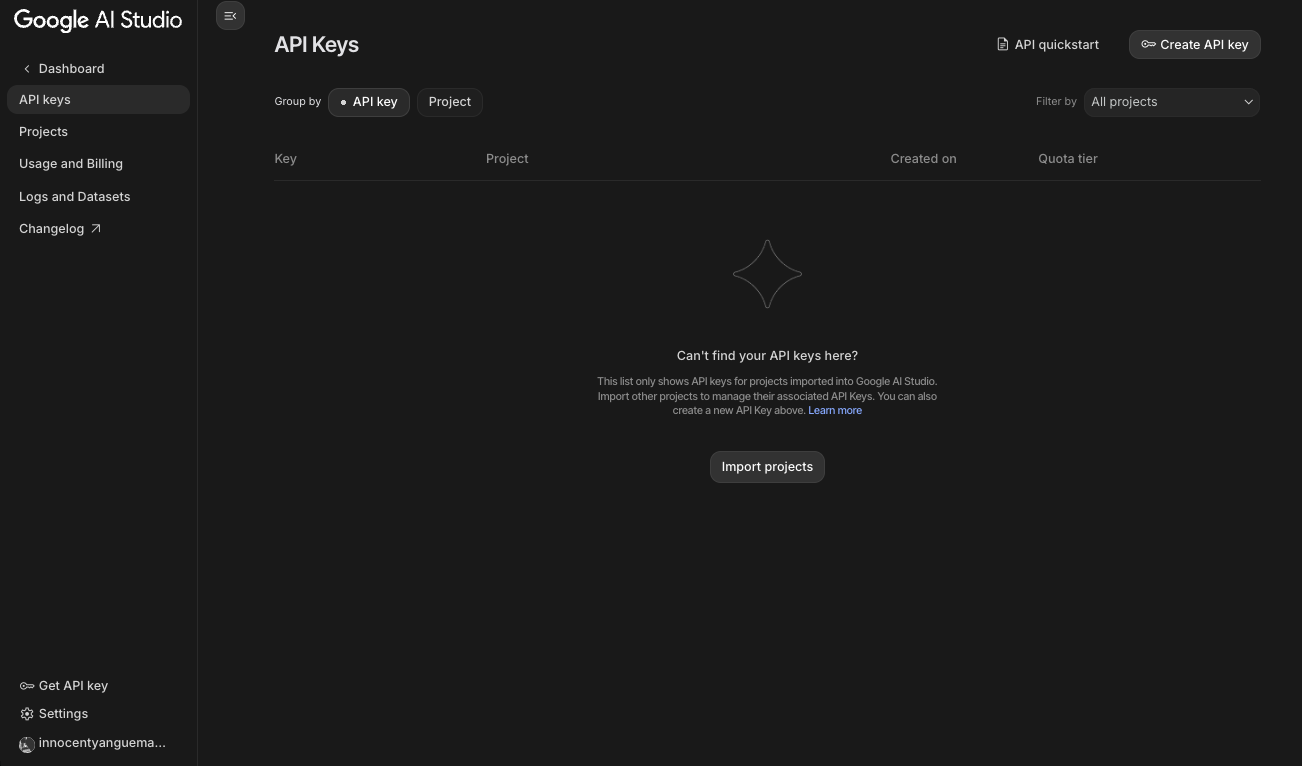

You start by creating an API key in Google AI Studio. Follow these precise steps:

1. Navigate to aistudio.google.com.

2. Sign in with your Google account (personal or Workspace).

3. Click the profile icon in the top-right corner and select "Get API key". Choose "Create API key in new project" (or select an existing one).

4. Google generates the key instantly. Copy it immediately – you cannot view it again.

Moreover, you can create multiple keys and restrict them to specific referrers or IP addresses for security. Always treat the key as sensitive data; never commit it to public repositories.
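A common way to keep the key out of source control is to read it from an environment variable at runtime. The sketch below shows that pattern with the standard library only. The variable name `GEMINI_API_KEY` and the helpers `load_api_key` and `mask_key` are assumptions made for this example.

```python
import os


def load_api_key(env_var: str = "GEMINI_API_KEY") -> str:
    """Return the API key stored in an environment variable.

    The variable name is an assumption for this sketch; use whatever
    name your deployment already defines.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable to your API key.")
    return key


def mask_key(key: str, visible: int = 4) -> str:
    """Mask a key for logging, keeping only the last few characters."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

You would then pass `load_api_key()` wherever the examples in this article show `"YOUR_API_KEY_HERE"`, and log `mask_key(...)` rather than the raw value.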

For direct access to Gemini 3 Pro preview, visit this link provided by Google: https://aistudio.google.com/prompts/new_chat?model=gemini-3-pro-preview. This opens a chat interface pre-configured with the preview model.

Let's Try Out Gemini 3.0 API in Google AI Studio

Installing the Official Gemini SDKs for Programmatic Access

Google provides client libraries in multiple languages. You install them via package managers.

For Python developers:

    pip install -U google-generativeai

Then configure the library and send a prompt:

    import google.generativeai as genai

    # Replace the placeholder with your own key; avoid hard-coding it in shared code.
    genai.configure(api_key="YOUR_API_KEY_HERE")

    # The model ID matches the one in the AI Studio link above.
    model = genai.GenerativeModel("gemini-3-pro-preview")

    response = model.generate_content("Explain quantum entanglement in simple terms.")

    print(response.text)

Node.js users run:

    npm install @google/generative-ai

Then call the model from inside an async function, because CommonJS modules do not allow top-level `await`:

    const { GoogleGenerativeAI } = require("@google/generative-ai");

    const genAI = new GoogleGenerativeAI("YOUR_API_KEY_HERE");
    const model = genAI.getGenerativeModel({ model: "gemini-3-pro-preview" });

    async function main() {
      const result = await model.generateContent("Write a Python function to calculate Fibonacci numbers.");
      console.log(result.response.text());
    }

    main().catch(console.error);

Other supported languages include Go, Java, and Swift. All libraries handle authentication, retries, and streaming automatically.

Sending Your First Request to Gemini 3 API Using curl

You can test directly with curl for quick verification:

    curl "https://generativelanguage.googleapis.com/v1beta/models/gemini-3-pro-preview:generateContent?key=YOUR_API_KEY" \
      -H 'Content-Type: application/json' \
      -d '{
        "contents": [{
          "role": "user",
          "parts": [{ "text": "Describe the key improvements in Gemini 3 over previous versions." }]
        }]
      }'

This endpoint returns a JSON response containing the generated text and usage metadata.
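To show what "generated text and usage metadata" looks like in practice, here is a hedged sketch that pulls both out of a response body. The field names (`candidates`, `content.parts[].text`, `usageMetadata`) reflect the REST response shape as I understand it, and `SAMPLE_BODY` is hand-written for illustration rather than real API output, so verify against a live response before relying on it.

```python
import json
from typing import Any, Dict


def extract_text(response: Dict[str, Any]) -> str:
    """Concatenate the text parts of the first candidate, or return ''."""
    candidates = response.get("candidates") or []
    if not candidates:
        return ""
    parts = candidates[0].get("content", {}).get("parts", [])
    return "".join(part.get("text", "") for part in parts)


def extract_token_usage(response: Dict[str, Any]) -> Dict[str, int]:
    """Return prompt, output, and total token counts (0 when missing)."""
    usage = response.get("usageMetadata", {})
    return {
        "prompt": usage.get("promptTokenCount", 0),
        "output": usage.get("candidatesTokenCount", 0),
        "total": usage.get("totalTokenCount", 0),
    }


# Hand-written sample body for illustration only; not captured from the API.
SAMPLE_BODY = """
{
  "candidates": [{
    "content": {"role": "model", "parts": [{"text": "Hello"}, {"text": ", world"}]},
    "finishReason": "STOP"
  }],
  "usageMetadata": {"promptTokenCount": 12, "candidatesTokenCount": 3, "totalTokenCount": 15}
}
"""

if __name__ == "__main__":
    body = json.loads(SAMPLE_BODY)
    print(extract_text(body))
    print(extract_token_usage(body))
```

Tracking the token counts per request is a simple way to see how close you are to the tokens-per-minute quota.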

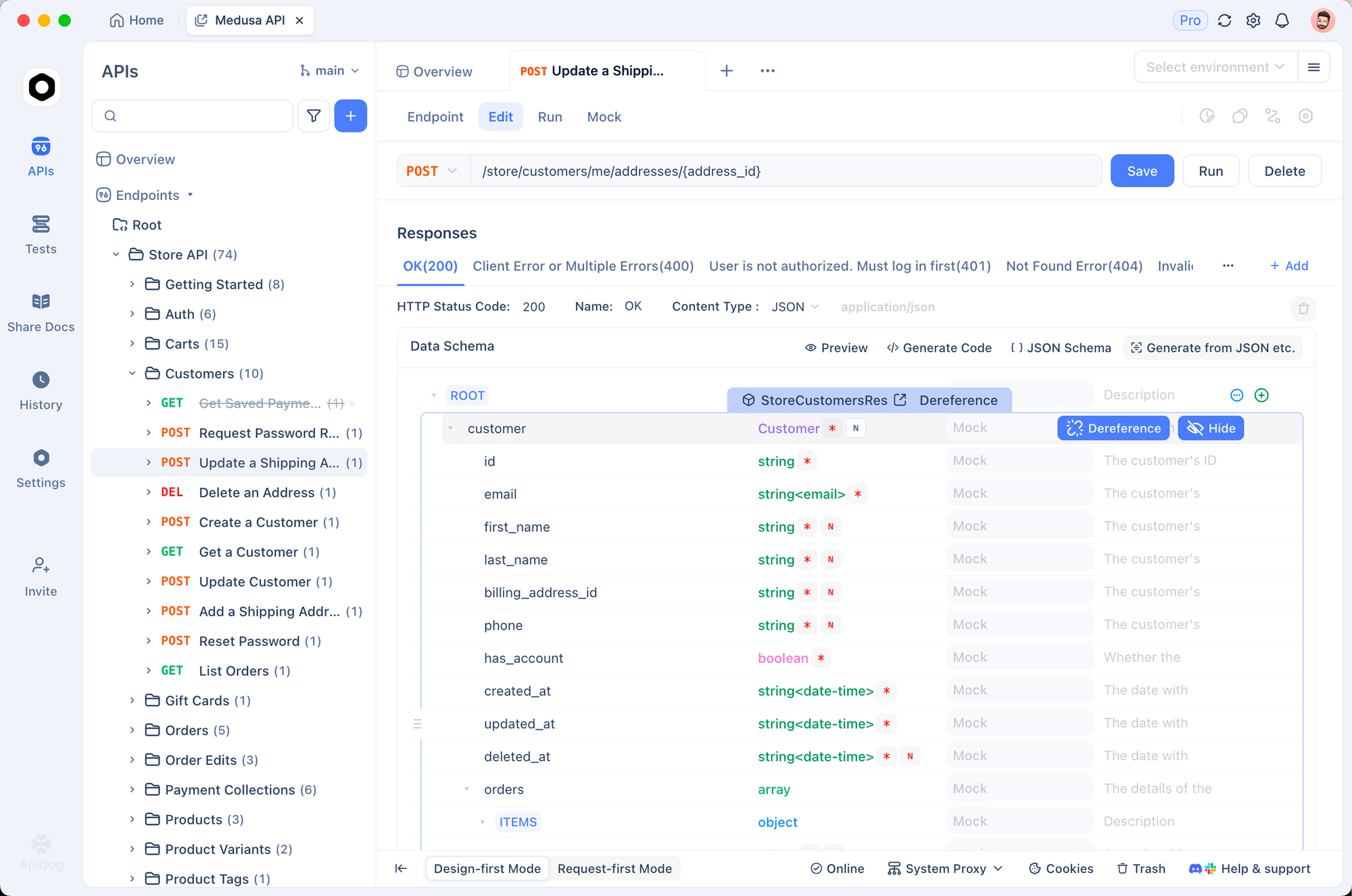

Use Apidog for a Better, More Integrated API Testing Workflow

Raw curl commands work for simple tests, yet production-grade development demands better tools. Apidog excels here as a full-featured API client and documentation platform.

You import the official Gemini OpenAPI specification into Apidog, which automatically generates all endpoints, parameters, and request bodies. Subsequently, you:

- Save your API key as an environment variable

- Create collections for different use cases (text generation, vision, function calling)

- Send multimodal requests by uploading images or videos directly

- View token usage and response metadata in real time

- Generate client code (Python, JS, etc.) from actual requests

- Mock servers for frontend testing without consuming quota

Apidog's interface for testing Gemini 3 API endpoints

Many developers choose Apidog specifically for Gemini workflows because it handles large file uploads, streaming responses, and structured JSON outputs seamlessly – features that Postman struggles with for generative APIs.

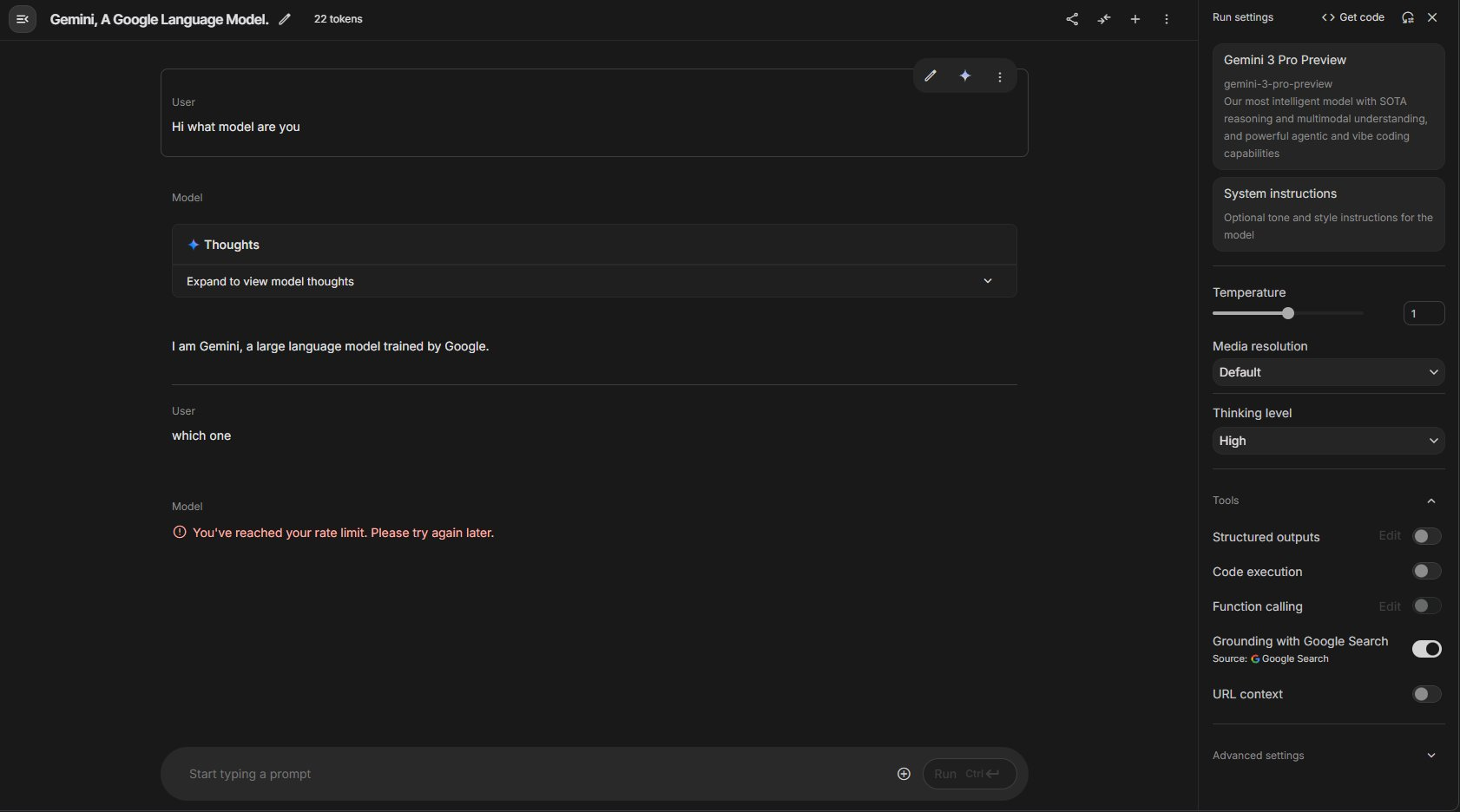

What Are the Gemini 3.0 API Rate Limits?

Google has not published exact rate limits for the Gemini 3.0 API, so the figures quoted earlier are approximate. Users report hitting the limit after only a few chats, with the error "You've reached your rate limit. Please try again later."

Based on the official Google documentation, the Gemini API has a free tier accessible via Google AI Studio that is subject to rate limits to ensure fair use. These limits are primarily measured in Requests Per Minute (RPM), and for some models, also in Requests Per Day (RPD) and Tokens Per Minute (TPM). For example, the standard gemini-1.0-pro model has a free rate limit of 60 RPM, which is generally sufficient for development and testing. These limits are provided at no charge and do not require a billing account to be set up.

Different models within the Gemini family have different rate limits. Exceeding a model's rate limit will result in an HTTP 429 "Resource Exhausted" error. To manage this, developers should implement error handling with an exponential backoff strategy, which involves waiting for progressively longer periods before retrying a request. For applications requiring a higher request volume than the free tier allows, you must enable billing on a Google Cloud project and transition to the pay-as-you-go plan, which offers significantly higher limits (ai.google.dev).
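The exponential backoff strategy described above can be sketched as follows. This version uses only the standard library and adds random jitter, a common refinement. `RateLimitError` is a placeholder defined here for whatever exception your client raises on HTTP 429, and `call_with_backoff` is a hypothetical helper name.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Placeholder for the exception your client raises on HTTP 429."""


def call_with_backoff(func: Callable[[], T], max_attempts: int = 5,
                      base_delay: float = 1.0, max_delay: float = 32.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call func, retrying on RateLimitError with exponentially growing waits."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return func()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of retries; surface the error to the caller
            # Upper bound doubles each attempt: 1s, 2s, 4s, ... capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Jitter spreads retries out so many clients do not retry in lockstep.
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable")
```

To use it, wrap the API call in a zero-argument function that translates the client's 429 error into `RateLimitError`, then pass that function to `call_with_backoff`. The `sleep` parameter exists so the waits can be replaced in tests.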

For higher throughput, some developers create multiple Google accounts (each with its own key) and rotate between them to stay within the free limits.

Vision and Video with Gemini 3.0 API

Gemini 3 processes images and video natively. Example Python code for image analysis:

    import google.generativeai as genai

    genai.configure(api_key="YOUR_API_KEY_HERE")

    # Upload the image first, then reference the uploaded file in the prompt.
    image_file = genai.upload_file(path="diagram.png")

    model = genai.GenerativeModel("gemini-3-pro-preview")

    response = model.generate_content([
        "Explain this architecture diagram in detail:",
        image_file,
    ])

    print(response.text)

Video understanding works similarly – upload an MP4 and ask questions about contents, timestamps, or actions.
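For small images, an alternative to uploading a file is to embed the bytes directly in the request body. The sketch below builds such a payload for the REST endpoint shown earlier, without sending it. It assumes the API accepts an `inline_data` part with `mime_type` and base64-encoded `data` fields, and `build_image_request` is a hypothetical helper name, so check the current API reference before relying on it.

```python
import base64
import json
from typing import Any, Dict


def build_image_request(prompt: str, image_bytes: bytes,
                        mime_type: str = "image/png") -> Dict[str, Any]:
    """Build a generateContent request body with a text part and an inline image.

    Assumption: the REST API accepts an `inline_data` part carrying a MIME
    type and base64-encoded bytes.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "contents": [{
            "role": "user",
            "parts": [
                {"text": prompt},
                {"inline_data": {"mime_type": mime_type, "data": encoded}},
            ],
        }]
    }


if __name__ == "__main__":
    # Stand-in bytes for illustration; in practice read them from a real file.
    payload = build_image_request("Explain this diagram:", b"\x89PNG placeholder")
    print(json.dumps(payload, indent=2))
```

Inline data grows the request by roughly a third because of base64 encoding, so uploading remains the better fit for large images and for video.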

Conclusion

Google enables unprecedented access to frontier-level AI through its free tier. You obtain a key in under two minutes, start calling Gemini 3 Pro preview models immediately, and build sophisticated applications without spending money.

Combine this capability with a robust API client like Apidog, and you gain a complete development environment rivaling paid services. Thousands of developers already prototype agents, RAG systems, and multimodal apps this way.

Take action now: head to Google AI Studio, generate your key, download Apidog, and send your first Gemini 3 request. The barrier to building with state-of-the-art AI has never been lower.