
DeepSeek V3 0324 is a 685B-parameter mixture-of-experts model that is now available completely free through OpenRouter. With a 131,072-token context window (approximately 100,000 words) and zero cost for both input and output tokens, it puts a large, capable model within reach without financial barriers.

This guide walks you through everything you need to know to use the DeepSeek V3 0324 API in your projects, applications, or research.

How Good Is DeepSeek V3 0324?

DeepSeek V3 0324 succeeds the original DeepSeek V3 model with significant improvements. It is built on a mixture-of-experts architecture: specialized neural network components (experts) activate selectively depending on the input, so only part of the model runs for any given token while the full set of experts covers diverse tasks.
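To make the idea of selective activation concrete, here is a toy sketch of top-k expert routing in plain Python. It illustrates the general mixture-of-experts technique only; the expert functions, gate scores, and helper names are made up for the example and say nothing about how DeepSeek's router is actually implemented.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    weight_sum = sum(probs[i] for i in top)
    return {i: probs[i] / weight_sum for i in top}

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs."""
    routing = route_top_k(gate_scores, k)
    return sum(weight * experts[i](x) for i, weight in routing.items())

# Hypothetical experts: each is just a tiny function standing in for a sub-network.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
gate_scores = [0.1, 2.0, 1.5, -1.0]  # made-up router output for one input

routing = route_top_k(gate_scores, k=2)
output = moe_forward(3, experts, gate_scores, k=2)
print(sorted(routing), output)
```

Only two of the four experts run for this input, which is why a model with a very large total parameter count can still be comparatively cheap to evaluate per token.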

Key Capabilities:

- Extended Context Processing: Process and analyze documents up to 131K tokens in length

- Advanced Reasoning: Solve complex problems requiring multi-step logical thinking

- Code Generation: Create, debug, and optimize code across programming languages

- Content Creation: Generate high-quality written content for various purposes

- Research & Analysis: Summarize research papers, analyze data, and extract insights

- Translation & Language Tasks: Process and generate content across multiple languages

Getting Started with OpenRouter

DeepSeek V3 0324 is accessible through OpenRouter, a platform that provides standardized access to multiple AI models through an OpenAI-compatible API interface.

Step 1: Create an OpenRouter Account

Visit OpenRouter's website and sign up for a free account.

Step 2: Generate Your API Key

After creating your account:

- Navigate to the API Keys section in your dashboard

- Click "Create API Key"

- Name your key (optional but recommended for organization)

- Copy and save your API key immediately in a secure location

Important: For security reasons, your API key will only be displayed once. If you lose it, you'll need to generate a new one.
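Because the key is shown only once, one common approach is to keep it out of your source code and read it from an environment variable instead. The sketch below assumes a variable named OPENROUTER_API_KEY; that name and the `load_api_key` helper are choices made for this example, not something OpenRouter requires.

```python
import os

def load_api_key(env_var: str = "OPENROUTER_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    The variable name is an assumption for this example; use whatever
    name fits your deployment.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return key

# Usage (after running `export OPENROUTER_API_KEY=...` in your shell):
# api_key = load_api_key()
```

Failing early with a clear message is usually easier to debug than an authentication error returned by the API later on.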

Step 3: Install Required Libraries

For Python users, install the OpenAI library:

pip install openai

For TypeScript/JavaScript users, install the OpenAI package:

npm install openai

Making Your First DeepSeek V3 0324 API Call

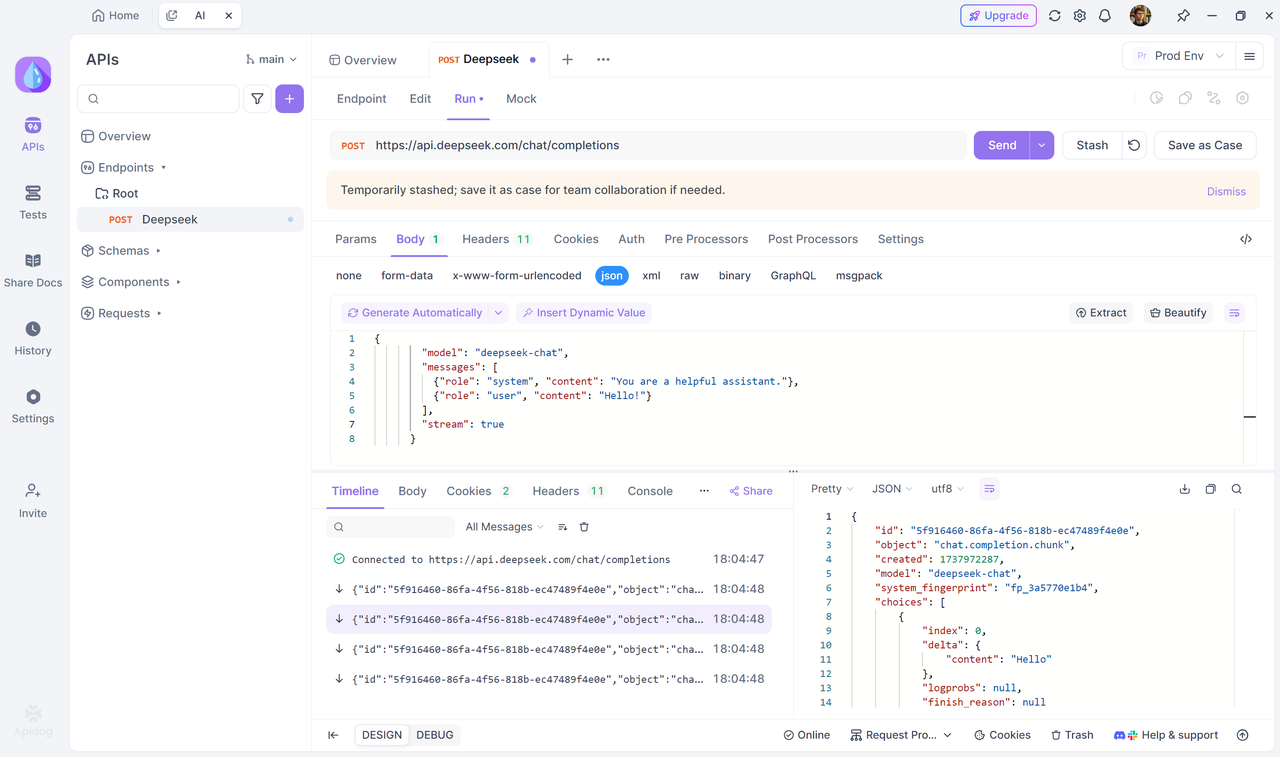

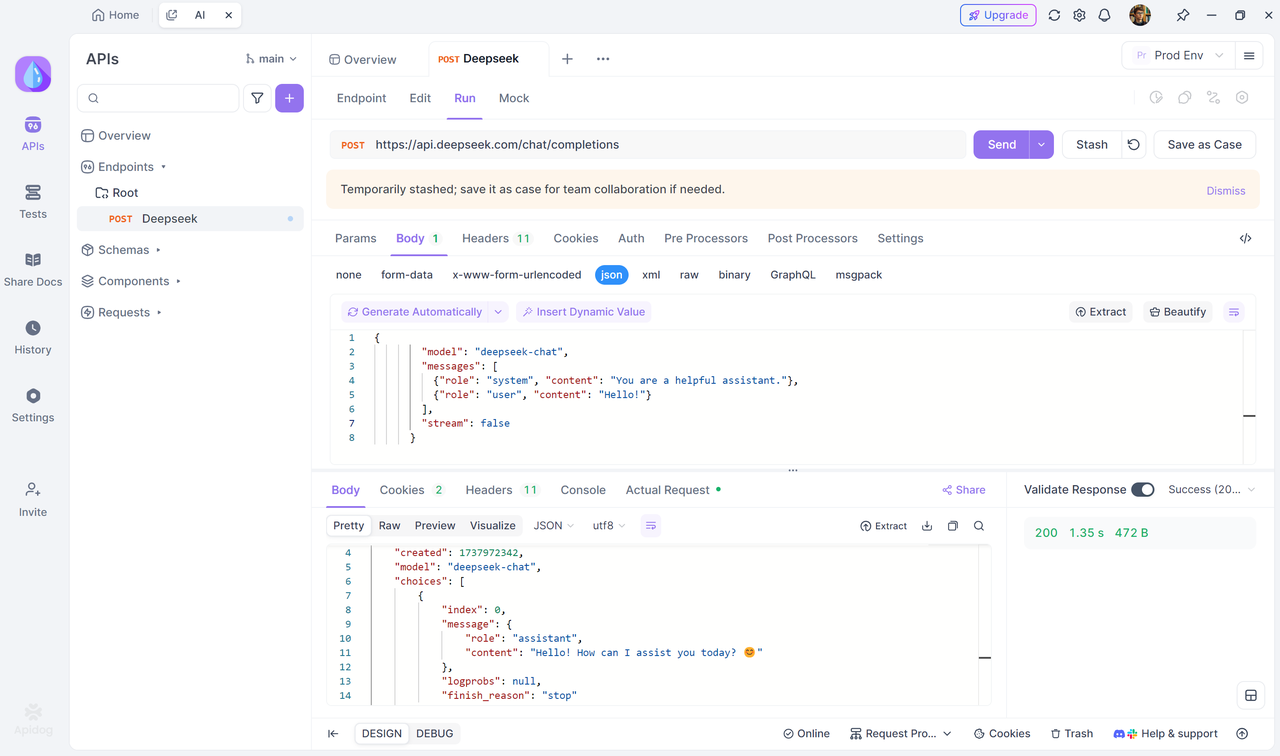

Once you have the API key, you can begin making API calls. The official DeepSeek API documentation is available here: https://api-docs.deepseek.com/. Below, we will use Apidog to test the API and either stream the messages returned by the model or output the entire response at once.

Here's the DeepSeek V3 0324 model page on OpenRouter, which lists the API details you need: https://openrouter.ai/deepseek/deepseek-chat-v3-0324:free/api

If you call the DeepSeek API directly instead of going through OpenRouter, specify model='deepseek-chat'. You can invoke DeepSeek-R1 by specifying model='deepseek-reasoner'.

If you haven't used Apidog yet, we highly recommend it. It is an integrated collaboration platform that combines API documentation, debugging, design, mocking, and automated testing in one place. With Apidog's user-friendly interface, you can easily test and manage your DeepSeek API. Use the out-of-the-box DeepSeek API project in Apidog to get started quickly.

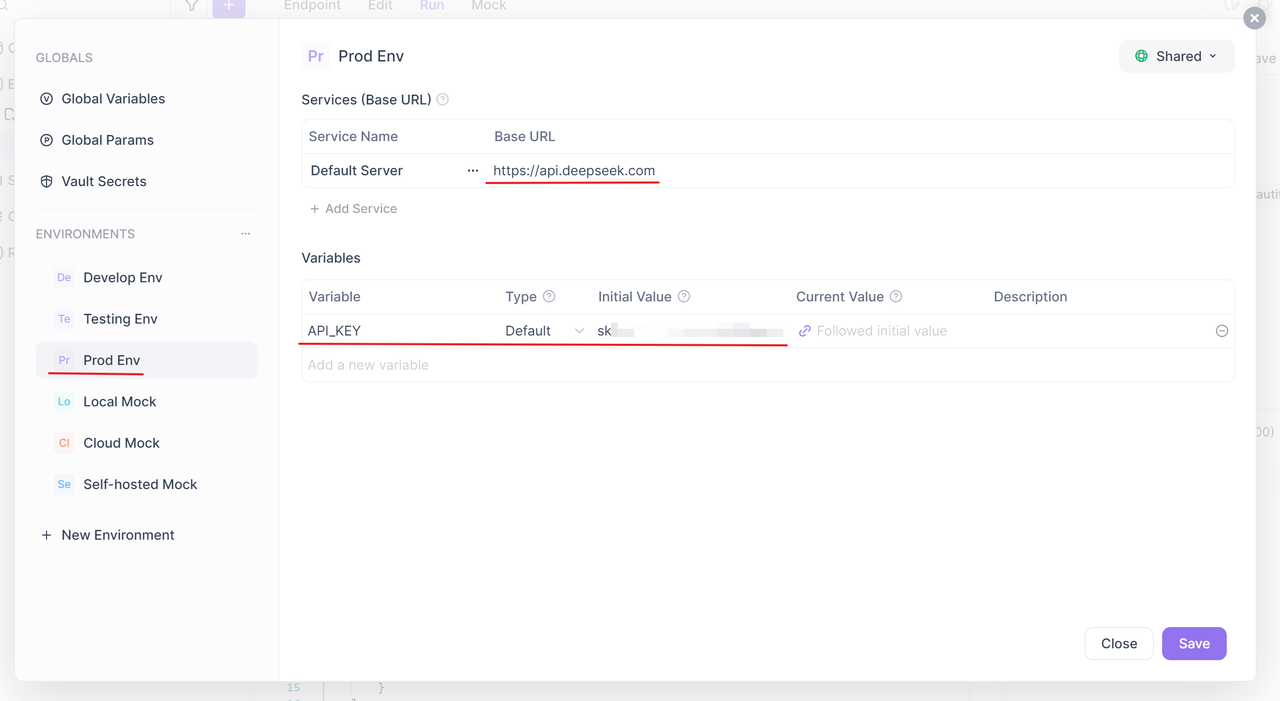

Set Up API Key

Log in to Apidog and create a new HTTP project. Then open the "Environment Management" section in the upper-right corner of the project. Click "Prod Env" and, in the Service Base URL field, enter https://api.deepseek.com. Next, add an environment variable named API_KEY whose value is your DeepSeek API key, and save the changes. To send requests through OpenRouter instead, enter https://openrouter.ai/api/v1 as the base URL and use the OpenRouter API key you created earlier.

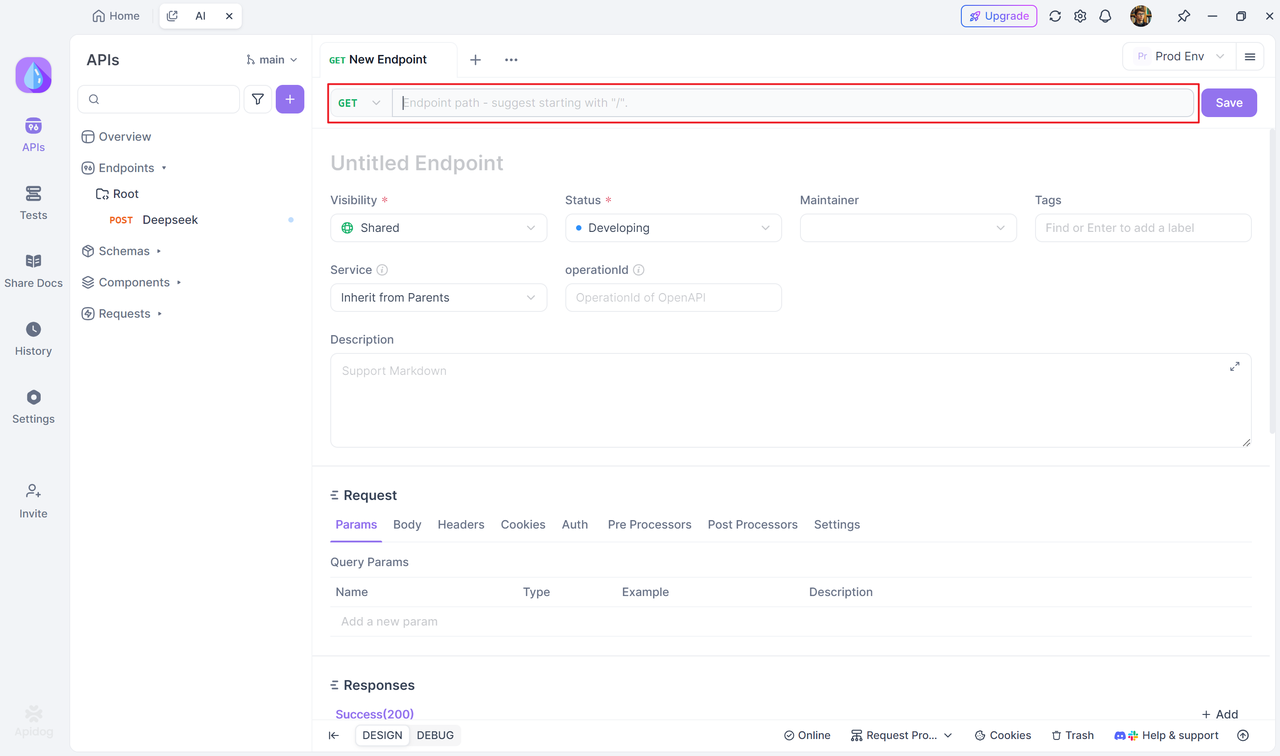

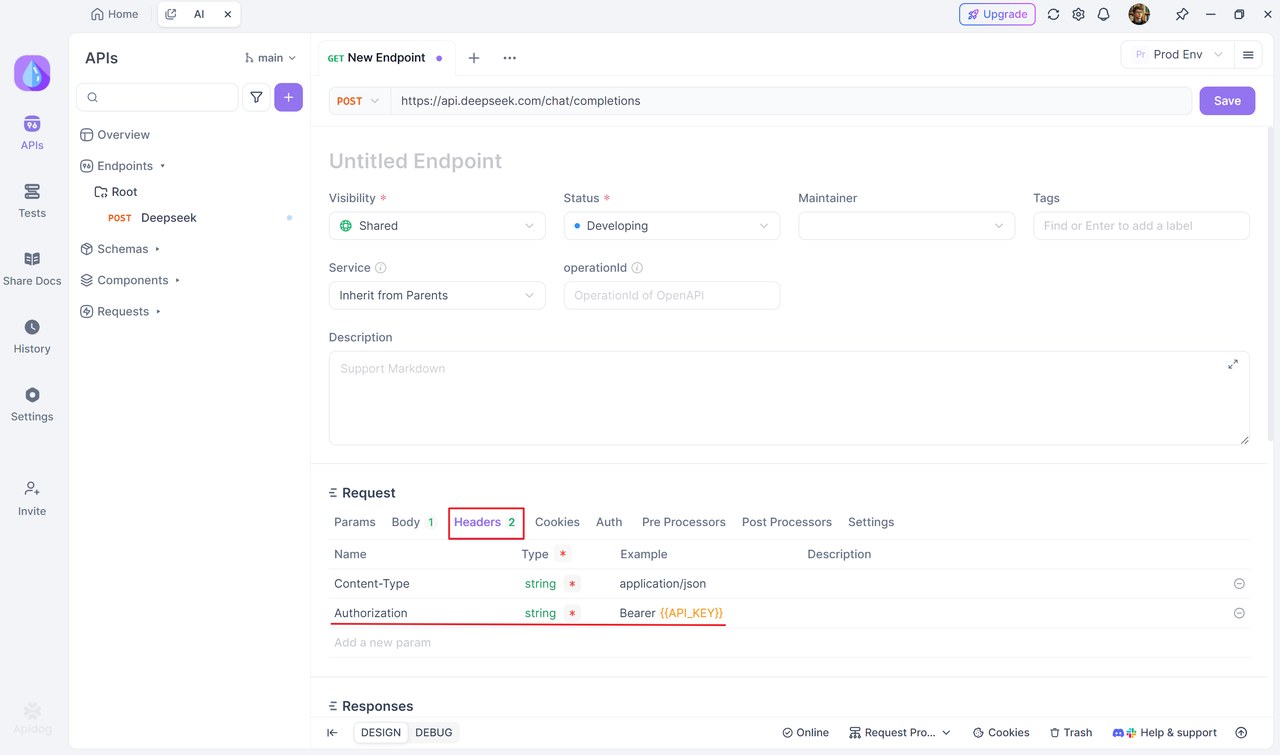

Create a New Endpoint

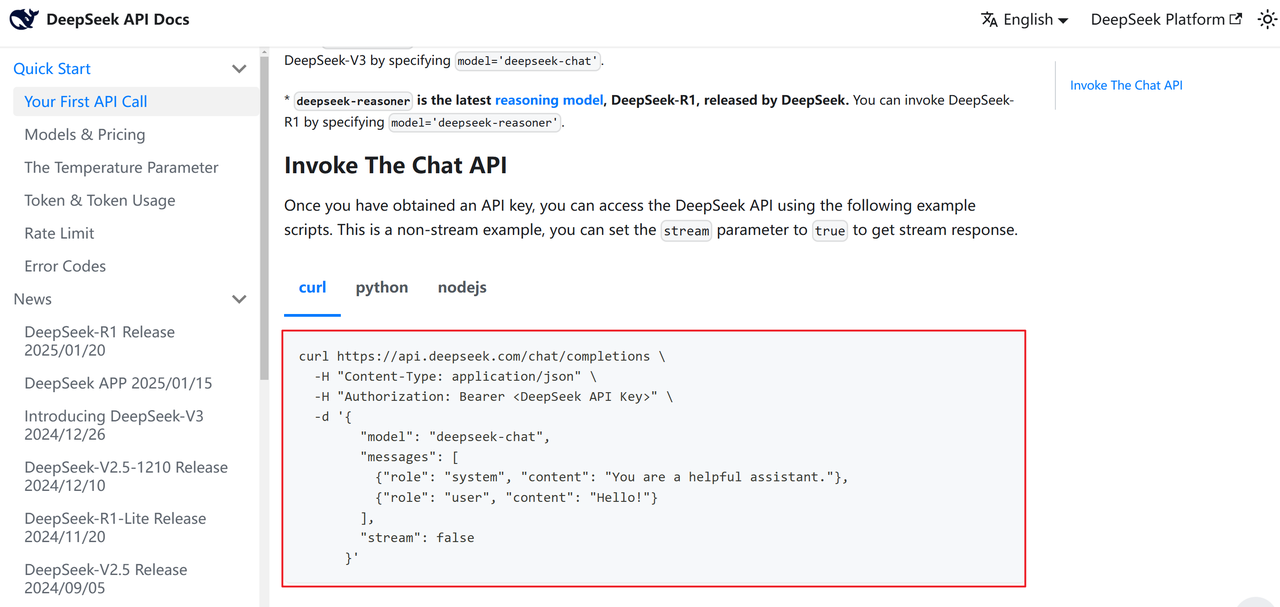

Once the API key is set up, create a new endpoint in your project. Then, copy the cURL command for the conversation API from the DeepSeek API documentation.

Go back to Apidog, and simply paste the cURL directly into the endpoint path by pressing "Ctrl + V." Apidog will automatically parse the cURL.

In the parsed endpoint, click on "Headers" and modify the Authorization parameter. Change its value to Bearer {{API_KEY}}, so that the API request will include the API key stored in the environment variable.

Make a DeepSeek V3 0324 API Call with Python

```python
from openai import OpenAI

client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key="YOUR_OPENROUTER_API_KEY",  # Replace with your actual API key
)

completion = client.chat.completions.create(
    extra_headers={
        "HTTP-Referer": "YOUR_SITE_URL",  # Optional for rankings
        "X-Title": "YOUR_SITE_NAME",  # Optional for rankings
    },
    model="deepseek/deepseek-chat-v3-0324:free",
    messages=[
        {
            "role": "user",
            "content": "What are the key advantages of mixture-of-experts architecture in large language models?",
        }
    ],
)

print(completion.choices[0].message.content)
```
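The call above returns the entire response in one piece. OpenAI-compatible endpoints can also stream the reply as server-sent events when the request sets `stream` to true. The sketch below shows how such a stream can be reassembled, assuming OpenRouter follows the OpenAI-style chunk format (`data:` lines carrying JSON, terminated by `data: [DONE]`). The `iter_stream_text` helper and the sample lines are illustrative, not captured API output.

```python
import json
from typing import Iterable, Iterator

def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield the text fragments contained in OpenAI-style SSE lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and SSE comment lines
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        choices = chunk.get("choices") or []
        if not choices:
            continue
        text = choices[0].get("delta", {}).get("content")
        if text:
            yield text

# Hand-written sample lines in the assumed format, for demonstration only.
sample_lines = [
    ": keep-alive comment",
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "data: [DONE]",
]

print("".join(iter_stream_text(sample_lines)))
```

With the `openai` Python library you would normally not parse these lines yourself: passing `stream=True` to `client.chat.completions.create` returns an iterator of chunks whose `choices[0].delta.content` holds each fragment.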

Make a DeepSeek V3 0324 API Call with TypeScript/JavaScript

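```typescript
import OpenAI from 'openai';

const openai = new OpenAI({
  baseURL: 'https://openrouter.ai/api/v1',
  apiKey: 'YOUR_OPENROUTER_API_KEY', // Replace with your actual API key
  // Optional headers used for OpenRouter rankings. They are set on the client
  // because `headers` is not a valid field of the request body.
  defaultHeaders: {
    'HTTP-Referer': 'YOUR_SITE_URL',
    'X-Title': 'YOUR_SITE_NAME',
  },
});

async function callDeepSeekV3() {
  const completion = await openai.chat.completions.create({
    model: 'deepseek/deepseek-chat-v3-0324:free',
    messages: [
      { role: 'user', content: 'Explain how to implement a binary search algorithm in JavaScript.' },
    ],
  });

  console.log(completion.choices[0].message.content);
}

// Log failures instead of leaving the promise rejection unhandled
callDeepSeekV3().catch(console.error);
```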

Make a DeepSeek V3 0324 API Call with cURL (Command Line)

```shell
curl https://openrouter.ai/api/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer YOUR_OPENROUTER_API_KEY" \
  -H "HTTP-Referer: YOUR_SITE_URL" \
  -H "X-Title: YOUR_SITE_NAME" \
  -d '{
    "model": "deepseek/deepseek-chat-v3-0324:free",
    "messages": [
      {
        "role": "user",
        "content": "Design a database schema for a social media application."
      }
    ]
  }'
```
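If you would rather not install any SDK, the same HTTP request can be assembled with Python's standard library. This is a minimal sketch: the URL and model ID come from the examples in this guide, while the helper names `build_chat_request` and `send_chat_request` are invented for illustration. The optional ranking headers are omitted for brevity.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_ID = "deepseek/deepseek-chat-v3-0324:free"

def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the reply text (needs network and a valid key)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]

request = build_chat_request(
    "Design a database schema for a social media application.",
    "YOUR_OPENROUTER_API_KEY",  # placeholder; replace with your real key
)
print(request.get_method(), request.full_url)
```

Separating request construction from sending makes it easy to inspect the headers and body before anything goes over the network.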

How to Write Effective Prompts with DeepSeek V3 0324

To get the most out of DeepSeek V3 0324, consider these prompt engineering best practices:

Be Specific and Detailed

Provide clear, detailed instructions. Compare these examples:

Vague prompt: "Write about climate change."

Improved prompt: "Write a 500-word explanation of how climate change affects marine ecosystems, focusing on coral reefs and including recent scientific findings from the past 5 years."

Leverage the Context Window

With its 131K token context, you can provide extensive background information, examples, and requirements:

I'll provide a scientific paper about quantum computing, and I'd like you to:

1. Summarize the key findings in 3-5 bullet points

2. Explain the methodology in simple terms

3. Identify potential limitations of the study

4. Suggest areas for future research

Here's the paper:

[paste entire paper text here]
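Before pasting a long document, it can help to estimate whether it will fit. The sketch below derives a words-per-token ratio from the figures quoted in this guide (131,072 tokens for roughly 100,000 words). That ratio is only a rough rule of thumb for English prose, and the 4,096-token output reserve is an arbitrary assumption. A real tokenizer will give different counts.

```python
import math

CONTEXT_WINDOW_TOKENS = 131_072
# Ratio implied by "131,072 tokens is approximately 100,000 words"; an estimate only.
WORDS_PER_TOKEN = 100_000 / CONTEXT_WINDOW_TOKENS

def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count from the whitespace-separated word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)

def fits_in_context(text: str, reserved_for_output: int = 4_096) -> bool:
    """Check whether the text plus a reserve for the reply fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS

paper_text = "word " * 20_000  # stand-in for a pasted paper of about 20,000 words
print(estimate_tokens(paper_text), fits_in_context(paper_text))
```

If the estimate is close to the limit, consider splitting the document or trimming sections that are not relevant to the questions you are asking.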

Structure Complex Requests

For multi-part tasks, use clear formatting:

I need to develop a marketing strategy for a new fitness app.

Target audience:

- Professionals aged 25-40

- Beginner to intermediate fitness levels

- Urban dwellers with limited time

Goals:

1. Increase app downloads by 50% in Q3

2. Improve user retention beyond 30 days

3. Generate positive social media engagement

Please provide:

- 3 core marketing messages aligned with these goals

- Recommended marketing channels with rationale

- Ideas for a launch campaign

- KPIs to track success
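If you send structured requests like this from code, a small helper can keep the formatting consistent. The following is one possible sketch; `build_structured_prompt` is a hypothetical helper written for this example, and it renders every section as a bulleted list for simplicity.

```python
def build_structured_prompt(task: str, sections: dict) -> str:
    """Combine a task statement and labeled bullet lists into one prompt."""
    parts = [task.strip()]
    for heading, items in sections.items():
        bullet_lines = "\n".join(f"- {item}" for item in items)
        parts.append(f"{heading}:\n{bullet_lines}")
    # Blank lines between sections keep the structure easy to read
    return "\n\n".join(parts)

prompt = build_structured_prompt(
    "I need to develop a marketing strategy for a new fitness app.",
    {
        "Target audience": [
            "Professionals aged 25-40",
            "Urban dwellers with limited time",
        ],
        "Please provide": [
            "3 core marketing messages aligned with these goals",
            "KPIs to track success",
        ],
    },
)
print(prompt)
```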

Use System Messages for Personality/Role Setting

When using the chat completions API, you can put a system message first in the messages list to establish the model's role:

```python
messages = [
    {
        "role": "system",
        "content": "You are an expert Python programming tutor who explains concepts clearly with simple examples tailored for beginners.",
    },
    {
        "role": "user",
        "content": "Explain decorators in Python. I'm confused about how they work.",
    },
]
```

Conclusion

DeepSeek V3 0324's free availability represents an exceptional opportunity for developers, researchers, content creators, and businesses to access cutting-edge AI capabilities without financial barriers. By following the setup and usage guidelines outlined in this article, you can quickly begin leveraging this powerful model for a wide range of applications.

As you experiment with the model, you'll likely discover creative ways to apply its capabilities to your specific needs. The combination of its large parameter count, extensive context window, and cost-free access makes DeepSeek V3 0324 a remarkable resource in the AI landscape.

Start exploring today and discover how this free yet powerful AI model can enhance your projects, streamline workflows, and unlock new possibilities in your work.