Google BigQuery is a powerhouse for large-scale data analytics, offering serverless architecture, massive scalability, and a familiar SQL interface. But if you’re building automated data pipelines, integrating analytics into your apps, or managing resources at scale, harnessing BigQuery’s APIs is essential.

This guide walks API developers, backend engineers, and technical leads through using BigQuery APIs—covering API types, environment setup, Python integration, and advanced use cases. You'll find practical code, step-by-step instructions, and ways to boost your workflow efficiency.

💡 Want an API testing suite that also generates beautiful API documentation? Looking for an all-in-one platform for your developer team to work together at maximum productivity? Apidog delivers these and replaces Postman at a much more affordable price.

Why Use BigQuery APIs?

While the Google Cloud Console and bq CLI are great for quick tasks, APIs are the key to:

- Automating frequent or complex data operations

- Integrating analytics into backend services and dashboards

- Building CI/CD pipelines for data workflows

- Managing datasets, jobs, and access at scale

BigQuery API Overview

Core Concepts

- Project: Top-level container for your resources, data, and billing.

- Dataset: Logical container for tables and views, similar to a schema or database.

- Table: Stores structured data, supports nested/repeated fields.

- Job: Represents async tasks (queries, loads, exports, copies) in BigQuery.

API Types

BigQuery offers several programmatic interfaces:

- REST API: Direct, HTTP/JSON-based access to all BigQuery operations. Offers granular control, but requires manual request/response handling and OAuth 2.0 authentication.

- Client Libraries: Idiomatic libraries for Python, Java, Go, Node.js, and more. Handles authentication, retries, and simplifies most tasks. Recommended for most developers.

- Specialized APIs:

- BigQuery Storage Read API: Ultra-fast data export and streaming reads.

- BigQuery Connection API: Query external sources (Cloud SQL, Spanner, GCS) from BigQuery.

- Analytics Hub API: Share datasets across organizations.

- BigLake API: Query data in open formats stored in Google Cloud Storage.

Setting Up: Prerequisites & Authentication

Before making API calls, ensure your environment is ready:

Essentials

- Google Cloud Account: Register at cloud.google.com.

- Project: Create/select a Google Cloud project; note the Project ID.

- Enable APIs: In Cloud Console, enable BigQuery API (and others like Storage Read API as needed).

- Billing: Activate billing for your project.

Authentication

- Application Default Credentials (ADC): The preferred way to authenticate. Libraries pick up credentials automatically from your environment.

- For local dev: Install

gcloudCLI and run:gcloud auth application-default login - For deployed code (Compute Engine, Cloud Functions): ADC uses the resource’s service account.

- Service Accounts: For non-GCP environments or fine-grained permissions, create a service account, grant roles (BigQuery Data Editor, Job User), download the JSON key, and set:

export GOOGLE_APPLICATION_CREDENTIALS=/path/to/key.json

- For local dev: Install

Install Python Client Libraries

We’ll use Python for code examples.

pip install google-cloud-bigquery

pip install google-cloud-bigquery-storage # (Optional, for Storage Read API)

pip install pandas db-dtypes pyarrow # (Optional, for DataFrame integration)

Using BigQuery with Python: Key Operations

1. Initialize the Client

from google.cloud import bigquery

import pandas as pd

client = bigquery.Client()

print("BigQuery client created.")

- If credentials are set up, this works out-of-the-box.

- Optionally specify the project:

client = bigquery.Client(project='your-project-id')

2. Running Queries

Synchronous Query

query = """

SELECT name, SUM(number) as total_people

FROM `bigquery-public-data.usa_names.usa_1910_2013`

WHERE state = 'TX'

GROUP BY name, state

ORDER BY total_people DESC

LIMIT 10

"""

query_job = client.query(query)

rows = query_job.result() # Waits for completion

for row in rows:

print(f"Name: {row.name}, Count: {row['total_people']}")

df = rows.to_dataframe()

print(df.head())

Asynchronous Query

For heavy queries, submit and check status later:

query = """

SELECT corpus, COUNT(word) as distinct_words

FROM `bigquery-public-data.samples.shakespeare`

GROUP BY corpus

ORDER BY distinct_words DESC;

"""

job_config = bigquery.QueryJobConfig(use_legacy_sql=False)

query_job = client.query(query, job_config=job_config)

print(f"Started job: {query_job.job_id}")

# Later...

results = query_job.result()

for row in results:

print(f"Corpus: {row.corpus}, Words: {row.distinct_words}")

Parameterized Query

Always use parameters to guard against SQL injection.

from google.cloud.bigquery import ScalarQueryParameter, QueryJobConfig

state_param = "NY"

prefix_param = "Ma"

min_count_param = 1000

query = """

SELECT name, SUM(number) as total_people

FROM `bigquery-public-data.usa_names.usa_1910_2013`

WHERE state = @state_abbr AND name LIKE @name_prefix

GROUP BY name

HAVING total_people >= @min_count

ORDER BY total_people DESC;

"""

job_config = QueryJobConfig(

query_parameters=[

ScalarQueryParameter("state_abbr", "STRING", state_param),

ScalarQueryParameter("name_prefix", "STRING", f"{prefix_param}%"),

ScalarQueryParameter("min_count", "INT64", min_count_param),

]

)

query_job = client.query(query, job_config=job_config)

rows = query_job.result()

for row in rows:

print(f"{row.name}: {row.total_people}")

3. Managing Datasets

Create, list, get, and delete datasets:

project_id = client.project

dataset_id = f"{project_id}.my_new_dataset"

dataset = bigquery.Dataset(dataset_id)

dataset.location = "US"

dataset.description = "Created via Python client"

try:

dataset = client.create_dataset(dataset, timeout=30)

print(f"Dataset created: {dataset.dataset_id}")

for ds in client.list_datasets():

print(ds.dataset_id)

retrieved = client.get_dataset(dataset_id)

print(retrieved.description)

finally:

client.delete_dataset(dataset_id, delete_contents=True, not_found_ok=True)

print(f"Dataset deleted: {dataset_id}")

4. Managing Tables

Create tables, load data, retrieve metadata, and clean up:

table_id = f"{client.project}.my_new_dataset.my_new_table"

schema = [

bigquery.SchemaField("full_name", "STRING", mode="REQUIRED"),

bigquery.SchemaField("age", "INTEGER", mode="REQUIRED"),

bigquery.SchemaField("email", "STRING", mode="NULLABLE"),

]

table = bigquery.Table(table_id, schema=schema)

try:

client.create_dataset("my_new_dataset", exists_ok=True)

table = client.create_table(table)

print(f"Table created: {table.table_id}")

# Load data from DataFrame

data = {'full_name': ['Alice Smith', 'Bob Johnson'], 'age': [30, 45], 'email': ['alice@example.com', None]}

df = pd.DataFrame(data)

load_job = client.load_table_from_dataframe(df, table_id)

load_job.result()

print("Data loaded.")

# Get metadata

table = client.get_table(table_id)

print(f"Rows in table: {table.num_rows}")

finally:

client.delete_table(table_id, not_found_ok=True)

print(f"Table deleted: {table_id}")

5. Managing Jobs

Monitor async operations:

for job in client.list_jobs(max_results=10):

print(f"Job ID: {job.job_id}, Type: {job.job_type}, State: {job.state}")

Retrieve details for a specific job as needed.

Advanced: Specialized BigQuery APIs

BigQuery Storage Read API

- Purpose: High-speed, parallel data reads—ideal for ML pipelines or bulk exports.

- Integration: Use with pandas’

read_gbqor manually viagoogle-cloud-bigquery-storage.

Example (with pandas):

df = pd.read_gbq(

"bigquery-public-data.usa_names.usa_1910_2013",

project_id=client.project,

columns=["name", "number", "state"],

row_filter="state = 'CA' AND number > 5000",

use_bqstorage_api=True

)

print(df.head())

BigQuery Connection API

- Purpose: Connect BigQuery to external sources (Cloud SQL, Spanner, GCS) with no data movement.

- Workflow:

- Create a connection resource via API or Console.

- Grant permissions to connection’s service account.

- Use

EXTERNAL_QUERY("connection_id", "external_sql")in your SQL.

Analytics Hub & BigLake APIs

- Analytics Hub: Securely share datasets via “exchanges” and “listings” across orgs.

- BigLake: Query open-format data in GCS buckets as BigQuery tables, using consistent security and governance.

Using the REST API Directly

Client libraries are recommended, but REST API access is useful for:

- Languages without client libraries

- Advanced or unreleased features

- Full control over HTTP requests

Example: Run a Query via REST

TOKEN=$(gcloud auth print-access-token)

PROJECT_ID="your-project-id"

REQUEST_BODY='{

"query": "SELECT name, SUM(number) as total_people FROM `bigquery-public-data.usa_names.usa_1910_2013` WHERE state = 'CA' GROUP BY name ORDER BY total_people DESC LIMIT 5;",

"useLegacySql": false

}'

curl -X POST \

"https://bigquery.googleapis.com/bigquery/v2/projects/${PROJECT_ID}/jobs" \

-H "Authorization: Bearer ${TOKEN}" \

-H "Content-Type: application/json; charset=utf-8" \

-d "${REQUEST_BODY}"

You’ll need to poll job status and retrieve results with further API calls.

Best Practices for BigQuery API Usage

- Prefer Client Libraries for most use cases—they save time and reduce errors.

- Rely on ADC for authentication; avoid hard-coding credentials.

- Always parameterize queries to prevent SQL injection.

- Optimize SQL: Avoid

SELECT *, filter early, use partitioning/clustering. - Handle errors robustly, especially for quota and network issues.

- Clean up resources (datasets, tables) after tests to minimize costs.

- Monitor usage and costs using GCP tools and query configuration options.

- Leverage specialized APIs for performance-critical tasks or external data integration.

Apidog: Supercharge Your API Workflows

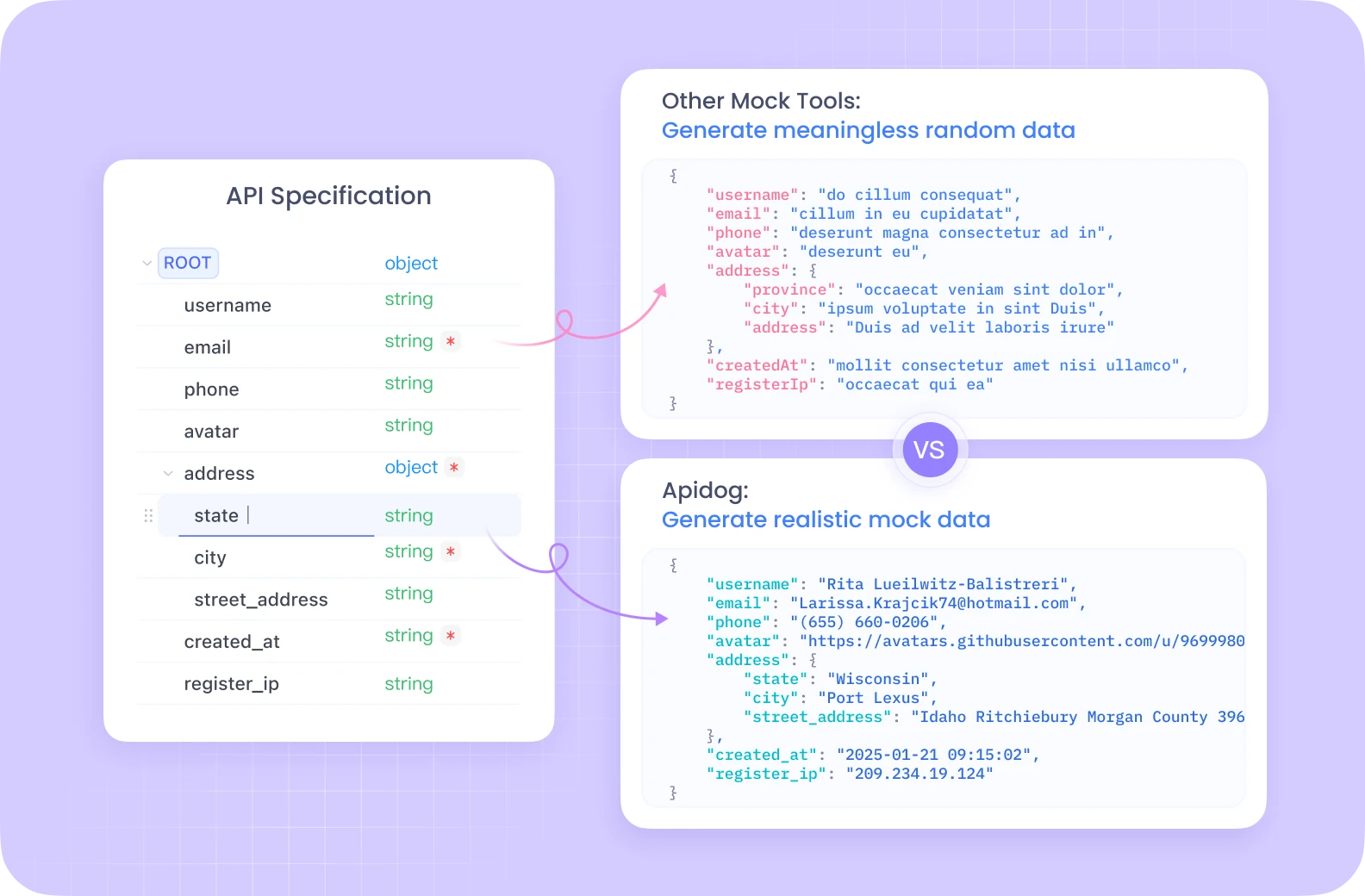

When building or testing your BigQuery API integrations, a reliable API platform is crucial. Apidog provides an integrated environment for API design, automated testing, and seamless documentation—boosting developer productivity and collaboration.

- Instant API documentation for BigQuery endpoints

- Automated testing workflows for your data pipelines

- Centralized workspace for your API team

Explore how Apidog complements your BigQuery automation:

- API Documentation that’s beautiful and shareable

- All-in-one workspace for maximum productivity

- Affordable alternative to Postman

Conclusion

BigQuery APIs unlock advanced automation, integration, and scalability for data-driven teams. With the right setup, client libraries, and best practices, you can streamline every aspect of your analytics workflows—from querying and loading to sharing and managing resources.

For API-first teams, combining BigQuery’s powerful APIs with a platform like Apidog accelerates development and ensures your data projects are robust, collaborative, and well-documented.