
MiniMax M1: Unlocking Scalable Long-Context Reasoning for API Developers

MiniMax M1, built by a Shanghai-based AI startup, is setting new standards for large language models (LLMs) focused on long-context reasoning and software engineering tasks. With an industry-leading 1 million token context window, efficient Mixture-of-Experts (MoE) architecture, and open-weight availability, MiniMax M1 is rapidly gaining traction among API developers, backend engineers, and teams working with complex or lengthy data.

Why MiniMax M1 Matters for API and Engineering Teams

MiniMax M1 stands out for its combination of performance, scalability, and cost-effectiveness. Available in two variants, M1-40k and M1-80k (named for their 40K- and 80K-token thinking budgets), the model is purpose-built for:

- Long-context document summarization (process entire books, codebases, or datasets)

- Software engineering tasks (code analysis, debugging, generation)

- Mathematical reasoning

- Agentic tool use (complex workflows, function calling)

Unlike many closed-source LLMs, MiniMax M1 is open-weight, enabling on-premise deployments and fine-tuning for sensitive projects.

Benchmark Highlights

- MMLU Score: 0.808 (M1-40k), outperforming many open-weight competitors

- Intelligence Index: 61 (M1-40k)

- Context Window: 1 million tokens input, up to 80,000 tokens output

- Specialization: Excels in FullStackBench, SWE-bench, MATH, GPQA, and TAU-Bench—ideal for API debugging, codebase analysis, and tool-use scenarios

MiniMax M1 Pricing and Efficiency

Source: Artificial Analysis (artificialanalysis.ai)

- M1-40k: $0.40 per 1M input tokens and $2.10 per 1M output tokens; blended price of about $0.82 per 1M tokens at a 3:1 input-to-output ratio

- M1-80k: Slightly higher due to extended output support

- Output Speed: 41.1 tokens/sec (slower than average, but optimized for long-context tasks)

- Latency: 1.35s time-to-first-token (TTFT), faster than typical LLMs

- Efficiency: Hybrid MoE and Lightning Attention need only about 25% of the compute (FLOPs) that DeepSeek R1 uses at a 100K-token generation length

- Training Cost: $534,700 for the reinforcement learning phase, run over 3 weeks on 512 H800 GPUs, which is significantly more cost-effective than comparable models
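The blended figure in the pricing list can be reproduced from the per-token prices. The sketch below assumes the 3:1 ratio means three input tokens for every output token; the function name is illustrative and not part of any API.

```python
def blended_price(input_price: float, output_price: float,
                  input_parts: float = 3.0, output_parts: float = 1.0) -> float:
    """Weighted average price per 1M tokens for a given input:output mix."""
    total_parts = input_parts + output_parts
    return (input_price * input_parts + output_price * output_parts) / total_parts


# Prices quoted above for M1-40k, in USD per 1M tokens.
price = blended_price(0.40, 2.10)
print(f"Blended price: ${price:.3f} per 1M tokens")  # (3 * 0.40 + 2.10) / 4
```

Workloads that generate far more output than a 3:1 mix (long reasoning traces, for example) will see a higher effective price, so it is worth recomputing with your own ratio.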

💡 For engineering teams needing robust API testing and documentation, Apidog offers an all-in-one platform that boosts productivity and collaboration—often at a fraction of Postman’s cost. Discover more.

MiniMax M1 Architecture: Inside the Model

- Hybrid Attention: Combines Lightning Attention (fast, linear cost) with periodic softmax attention (expressive, quadratic cost), using one softmax block after every seven Lightning Attention blocks

- Sparse MoE Routing: Activates only about 45.9B of 456B parameters (~10%) per token, maximizing efficiency

- Reinforcement Learning: Uses the CISPO algorithm, which clips importance-sampling weights rather than token updates, to improve training efficiency

- Open-Source License: Apache 2.0 for flexible use, on-premise deployment, and fine-tuning

MiniMax M1’s unique architecture makes it a top choice for developers handling long documents, large codebases, or needing cost-effective, scalable AI solutions.
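To make the sparse-routing idea concrete, here is a minimal sketch of generic top-k expert gating in plain Python. It is not MiniMax's actual router: the expert count, the value of k, and the scores are all hypothetical, chosen only to show how a token ends up using a small subset of experts.

```python
import math
from typing import List, Tuple


def top_k_route(router_logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights."""
    # Softmax over all experts (subtract the max for numerical stability).
    peak = max(router_logits)
    exps = [math.exp(x - peak) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Keep only the k most probable experts; the rest are never evaluated.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in chosen)
    return [(i, probs[i] / kept) for i in chosen]


# Hypothetical router scores for one token across 8 experts.
logits = [0.1, 2.3, -1.0, 0.7, 1.9, -0.4, 0.0, 0.5]
routes = top_k_route(logits, k=2)
print(routes)  # two (expert_index, weight) pairs whose weights sum to 1
```

Because only the selected experts run for each token, compute scales with the active parameters rather than the full parameter count.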

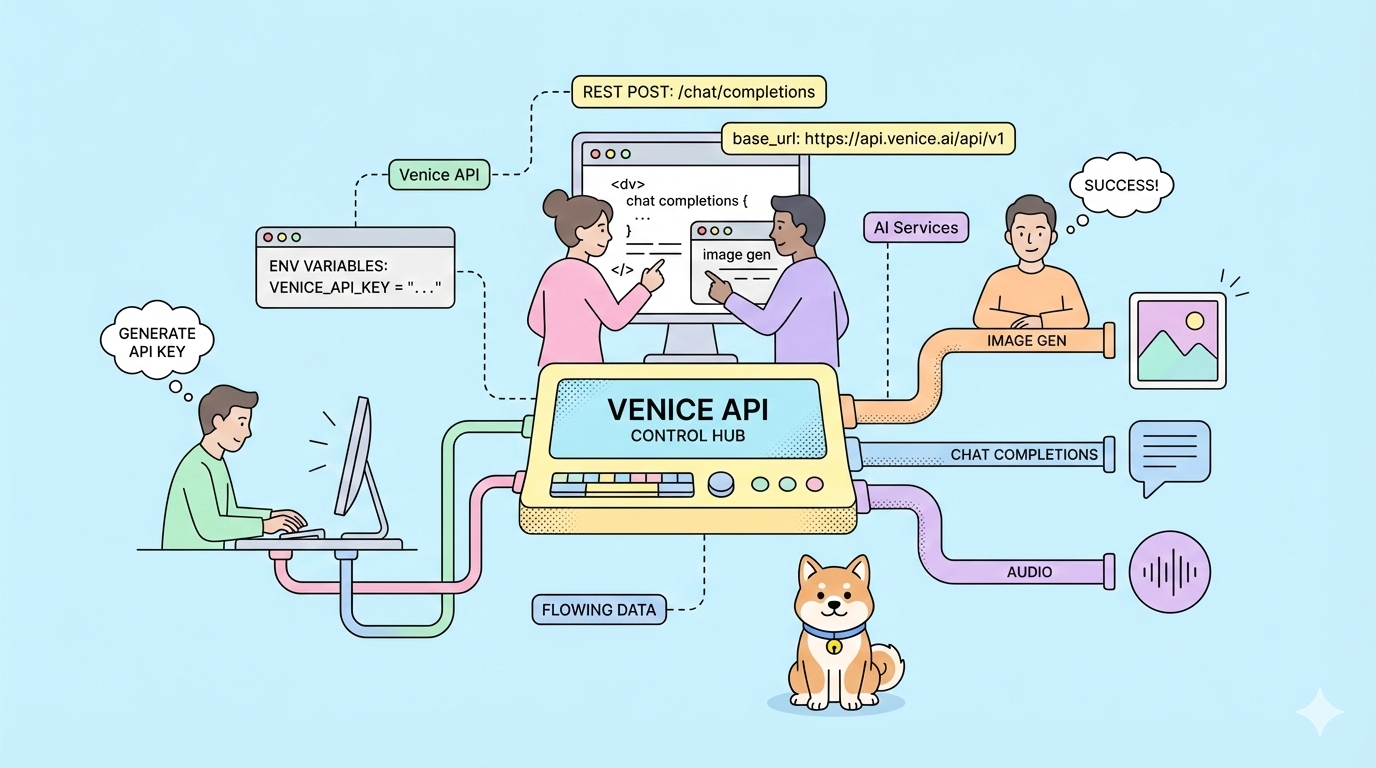

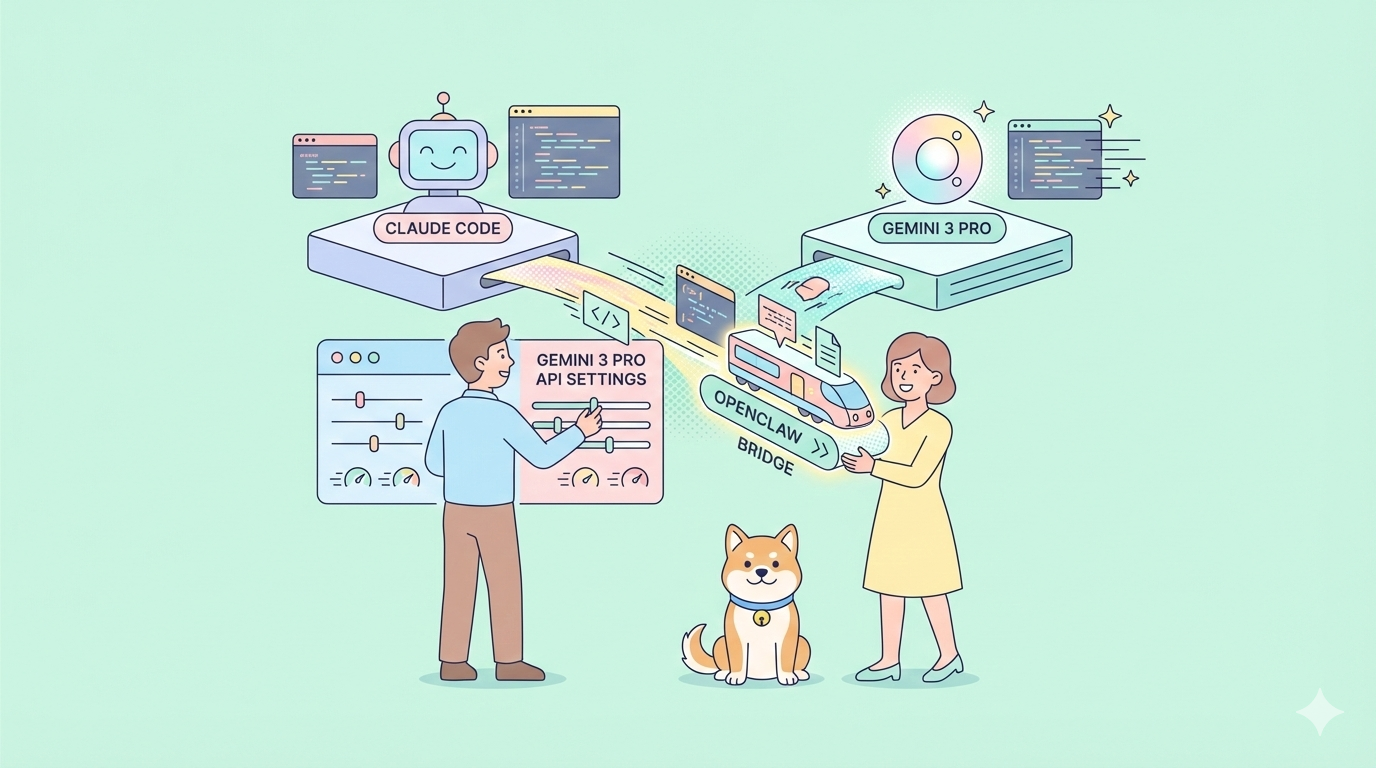

How to Run MiniMax M1 via OpenRouter API

OpenRouter provides a unified, OpenAI-compatible API to access MiniMax M1, streamlining integration into developer workflows.

Step 1: Get Started with OpenRouter

- Visit the OpenRouter website and register using email or Google OAuth.

- Generate your API key in the dashboard's "API Keys" section.

- Add billing information and top up your account—look for MiniMax M1 promos for cost savings.
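Once you have a key, it is usually safer to keep it out of source code. A minimal sketch, assuming the key is stored in an environment variable named OPENROUTER_API_KEY (the variable name and the helper are conventions chosen for this example, not something OpenRouter requires):

```python
import os


def load_api_key(var_name: str = "OPENROUTER_API_KEY") -> str:
    """Return the API key stored in an environment variable, or fail loudly."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return key
```

The returned value can then be passed as the api_key argument when constructing the client, instead of a hard-coded string.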

Step 2: Understand MiniMax M1’s Capabilities on OpenRouter

- Default Variant: M1-40k (1M input, 40k output tokens)

- Supported Tasks: Long-context summarization, code generation, mathematical reasoning, agentic workflows

Step 3: Make API Requests — Example in Python

Prerequisites:

- Python 3.8 or later (recent releases of the openai package no longer support 3.7)

- Install SDK:

pip install openai

from openai import OpenAI

# OpenRouter exposes an OpenAI-compatible endpoint, so the standard SDK works.
client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key="your_openrouter_api_key_here",
)

prompt = "Summarize the key features of MiniMax M1 in 100 words."

response = client.chat.completions.create(
    model="minimax/minimax-m1",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": prompt},
    ],
    max_tokens=200,  # upper bound on generated tokens
    temperature=1.0,
    top_p=0.95,
)

# The generated text is in the first choice's message.
print("Response:", response.choices[0].message.content)

Tips:

- Replace your_openrouter_api_key_here with your real key.

- Use system prompts to guide behavior (e.g., "You are a senior backend engineer.").

- Adjust max_tokens and temperature for task-specific needs.
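One way to apply these tips is to keep per-task settings in one place and build the request from them. The sketch below is illustrative: the preset names and values are assumptions for the example, not recommendations from MiniMax or OpenRouter.

```python
from typing import Any, Dict

# Hypothetical presets: the names and values are examples only.
PRESETS: Dict[str, Dict[str, Any]] = {
    "code_review": {
        "system": "You are a senior backend engineer.",
        "temperature": 0.2,
        "max_tokens": 2000,
    },
    "summary": {
        "system": "You are a helpful assistant.",
        "temperature": 1.0,
        "max_tokens": 500,
    },
}


def build_request(task: str, prompt: str,
                  model: str = "minimax/minimax-m1") -> Dict[str, Any]:
    """Assemble keyword arguments for client.chat.completions.create()."""
    preset = PRESETS[task]
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": preset["system"]},
            {"role": "user", "content": prompt},
        ],
        "max_tokens": preset["max_tokens"],
        "temperature": preset["temperature"],
    }


request = build_request("code_review", "Review this function for race conditions: ...")
# Pass it to the client created in the example above:
# response = client.chat.completions.create(**request)
```

Keeping the presets in a dictionary makes it easy to tune temperature or output length per task without touching the calling code.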

Step 4: Handle and Optimize Responses

- The API returns JSON; the final output is in choices[0].message.content.

- For long-context tasks, ensure your input stays within token limits. Paginate output if needed.

- For code, math, or agentic tasks, use clear, structured prompts and adjust temperature for precision.
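For inputs that might exceed the limit, a rough pre-check and a paragraph-based splitter can help. This is a sketch under a loud assumption: it estimates tokens at about four characters each, which is only a heuristic and will differ from the model's real tokenizer. Both helper names are made up for this example.

```python
from typing import List


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate; real tokenizers will differ."""
    return int(len(text) / chars_per_token) + 1


def split_into_chunks(text: str, max_tokens: int,
                      chars_per_token: float = 4.0) -> List[str]:
    """Split text on blank lines so each chunk fits the estimated budget.

    A single paragraph larger than the budget is kept whole rather than cut.
    """
    max_chars = int(max_tokens * chars_per_token)
    chunks: List[str] = []
    current = ""
    for paragraph in text.split("\n\n"):
        candidate = paragraph if not current else current + "\n\n" + paragraph
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks
```

Each chunk can then be sent as its own request, with the partial results combined in a final summarization call.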

Step 5: Monitor Usage and Costs

OpenRouter’s dashboard lets you track usage and spending. Optimize your inputs to minimize tokens and control costs.
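Alongside the dashboard, per-request cost can be estimated from the token counts returned with each response. The sketch below uses the M1-40k prices quoted in the pricing section as defaults; actual billing on OpenRouter may differ, so treat the result as an estimate. The function name is illustrative.

```python
def request_cost(prompt_tokens: int, completion_tokens: int,
                 input_price_per_m: float = 0.40,
                 output_price_per_m: float = 2.10) -> float:
    """Estimated USD cost of one request from its token counts."""
    return (prompt_tokens * input_price_per_m
            + completion_tokens * output_price_per_m) / 1_000_000


# With the OpenAI SDK, token counts are reported on response.usage:
# cost = request_cost(response.usage.prompt_tokens,
#                     response.usage.completion_tokens)
print(f"${request_cost(200_000, 4_000):.4f}")  # 200K tokens in, 4K tokens out
```

Logging this value per request makes it easier to spot prompts that are larger than they need to be.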

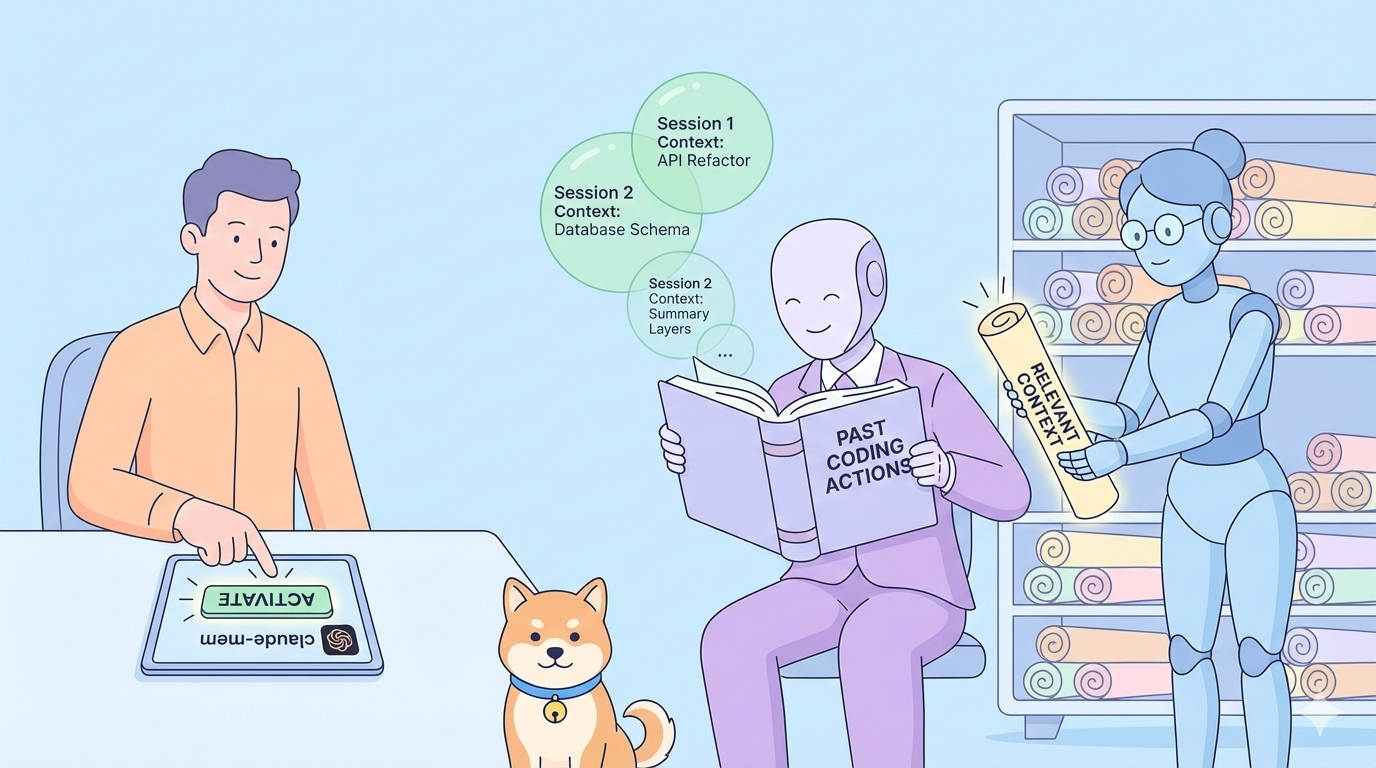

Step 6: Advanced Integrations

- vLLM: Deploy MiniMax M1 for high-throughput serving

- Transformers: Use with Hugging Face for local inference

- CometAPI: Unified access coming soon

Troubleshooting:

- Rate Limits: Upgrade your plan if you hit request limits.

- Errors: Double-check your API key and model name.

- Performance: For speed, use M1-40k or reduce input size.
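For occasional rate-limit errors, retrying with exponential backoff is a common complement to upgrading your plan. The helper below is a generic sketch, not an OpenRouter feature; its name and parameters are chosen for illustration.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_backoff(call: Callable[[], T],
                 retry_on: Tuple[Type[BaseException], ...],
                 max_attempts: int = 5,
                 base_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run call(), retrying with exponential backoff plus jitter on the given errors."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))
    raise ValueError("max_attempts must be at least 1")


# Example with the OpenAI SDK, which raises openai.RateLimitError on HTTP 429:
# response = with_backoff(
#     lambda: client.chat.completions.create(model="minimax/minimax-m1", messages=messages),
#     retry_on=(openai.RateLimitError,),
# )
```

The sleep function is injectable so the helper can be tested without real delays.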

Bringing It All Together: MiniMax M1 for Scalable AI Engineering

MiniMax M1 delivers the long-context reasoning power and cost-efficiency that API-focused teams, backend engineers, and technical leads demand. Whether you’re summarizing massive documents, automating code review, or building advanced agentic workflows, integrating MiniMax M1 via OpenRouter gives you a robust foundation for production-scale AI.

💡 Want to streamline API testing and generate beautiful API documentation? Apidog empowers developer teams to work together with maximum productivity and can replace Postman at a much more affordable price.
