Recently Meta’s powerful AI Llama 3.2 has been released as a game-changing language model, offering impressive capabilities for both text and image processing. For developers and AI enthusiasts eager to harness the power of this advanced model on their local machines, tool like LM Studio stand out. This comprehensive guide will walk you through the process of running Llama 3.2 locally using these powerful platforms, empowering you to leverage cutting-edge AI technology without relying on cloud services.

What is New with Llama 3.2: The Latest in AI Innovation

Before diving into the installation process, let’s briefly explore what makes Llama 3.2 special:

- Multimodal Capabilities: Llama 3.2 can process both text and images, opening up new possibilities for AI applications.

- Improved Efficiency: Designed for better performance with reduced latency, making it ideal for local deployment.

- Varied Model Sizes: Available in multiple sizes, from lightweight 1B and 3B models suitable for edge devices to powerful 11B and 90B versions for more complex tasks.

- Extended Context: Supports a 128K context length, allowing for more comprehensive understanding and generation of content.

Run Llama 3.2 with LM Studio Locally

LM Studio offers a more user-friendly approach with a graphical interface. Here’s how to run Llama 3.2 locally using LM Studio:

Step 1: Download and Install LM Studio

- Visit the LM Studio website.

- Download the version compatible with your operating system.

- Follow the installation instructions provided.

Step 2: Launch LM Studio

Once installed, open LM Studio. You’ll be greeted with an intuitive interface.

Step 3: Find and Download Llama 3.2

- Use the search function in LM Studio to find Llama 3.2.

- Select the appropriate version of Llama 3.2 for your needs (e.g., 3B for lightweight applications or 11B for more complex tasks).

- Click the download button to start the download process.

Step 4: Configure the Model

After downloading, you can configure various parameters:

- Adjust the context length if needed (Llama 3.2 supports up to 128K).

- Set the temperature and top-p sampling values to control output randomness.

- Experiment with other settings to fine-tune the model’s behavior.

Step 5: Start Interacting with Llama 3.2

With the model configured, you can now:

- Use the chat interface to have conversations with Llama 3.2.

- Try different prompts to explore the model’s capabilities.

- Use the Playground feature to experiment with more advanced prompts and settings.

Step 6: Set Up a Local Server (Optional)

For developers, LM Studio allows you to set up a local server:

- Go to the Server tab in LM Studio.

- Configure the server settings (port, API endpoints, etc.).

- Start the server to use Llama 3.2 via API calls in your applications.

Best Practices to Run Llama 3.2 Locally

To get the most out of your local Llama 3.2 setup, consider these best practices:

- Hardware Considerations: Ensure your machine meets the minimum requirements. A dedicated GPU can significantly improve performance, especially for larger model sizes.

- Prompt Engineering: Craft clear, specific prompts to get the best results from Llama 3.2. Experiment with different phrasings to optimize output quality.

- Regular Updates: Keep both your chosen tool (LM Studio) and the Llama 3.2 model updated for the best performance and latest features.

- Experiment with Parameters: Don’t hesitate to adjust settings to find the right balance for your use case. Lower values generally produce more focused, deterministic outputs, while higher values introduce more creativity and variability.

- Ethical Use: Always use AI models responsibly and be aware of potential biases in the outputs. Consider implementing additional safeguards or filters if deploying in production environments.

- Data Privacy: Running Llama 3.2 locally enhances data privacy. Be mindful of the data you input and how you use the model’s outputs, especially when handling sensitive information.

- Resource Management: Monitor your system resources when running Llama 3.2, especially for extended periods or with larger model sizes. Consider using task managers or resource monitoring tools to ensure optimal performance.

Troubleshoot Common Issues

When running Llama 3.2 locally, you might encounter some challenges. Here are solutions to common issues:

- Slow Performance:

- Ensure you have sufficient RAM and CPU/GPU power.

- Try using a smaller model size if available (e.g., 3B instead of 11B).

- Close unnecessary background applications to free up system resources.

2. Out of Memory Errors:

- Reduce the context length in the model settings.

- Use a smaller model variant if available.

- Upgrade your system’s RAM if possible.

3. Installation Problems:

- Check if your system meets the minimum requirements for LM Studio.

- Ensure you have the latest version of the tool you’re using.

- Try running the installation with administrator privileges.

4. Model Download Failures:

- Check your internet connection stability.

- Temporarily disable firewalls or VPNs that might be interfering with the download.

- Try downloading during off-peak hours for better bandwidth.

5. Unexpected Outputs:

- Review and refine your prompts for clarity and specificity.

- Adjust the temperature and other parameters to control output randomness.

- Ensure you’re using the correct model version and configuration.

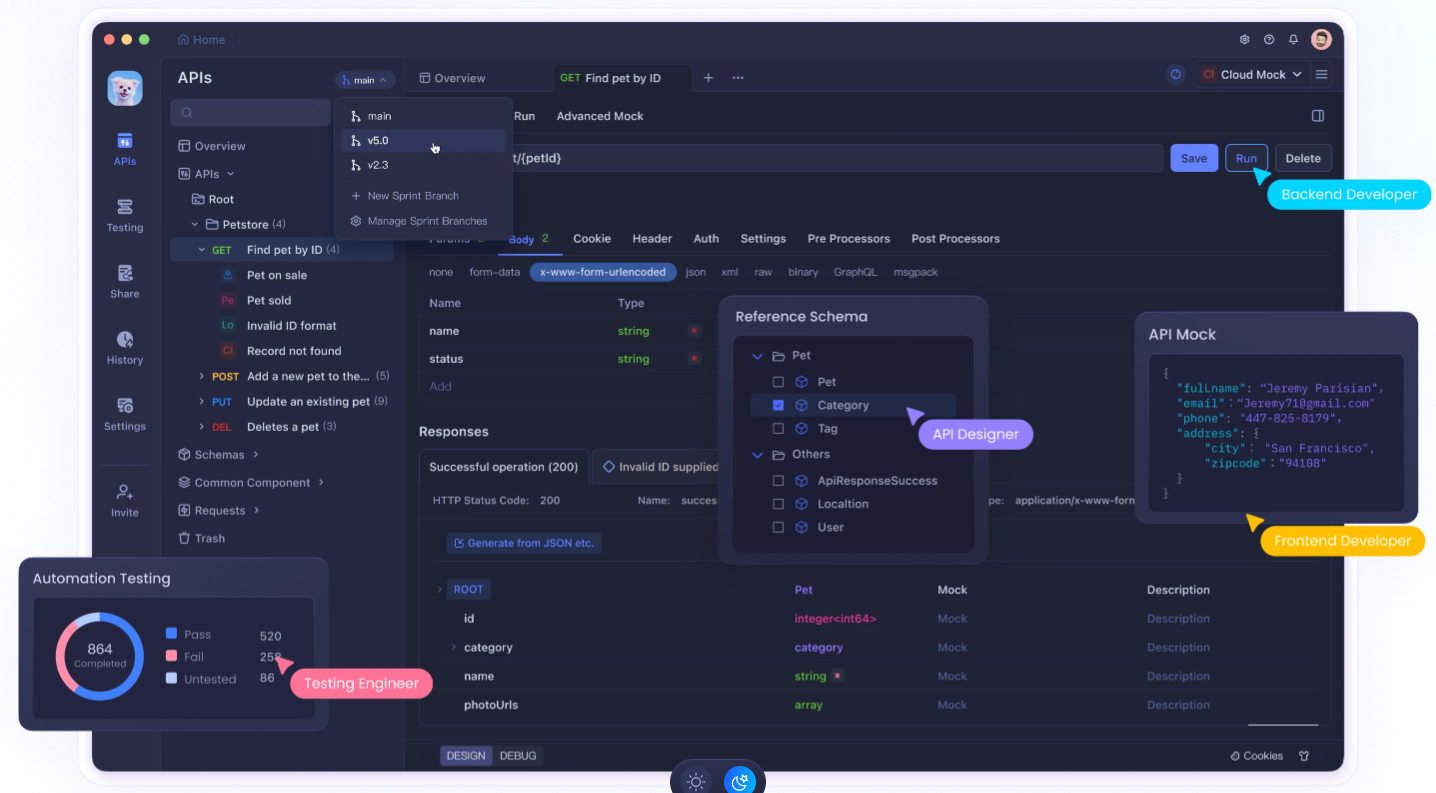

Using Apidog Enhance Your API Development

Running Llama 3.2 locally is powerful, integrating it into your applications often requires robust API development and testing. This is where Apidog comes into play. Apidog is a comprehensive API development platform that can significantly enhance your workflow when working with local LLMs like Llama 3.2.

Key Features of Apidog for Local LLM Integration:

- API Design and Documentation: Easily design and document APIs for your Llama 3.2 integrations, ensuring clear communication between your local model and other parts of your application.

- Automated Testing: Create and run automated tests for your Llama 3.2 API endpoints, ensuring reliability and consistency in your model's responses.

- Mock Servers: Use Apidog's mock server functionality to simulate Llama 3.2 responses during development, allowing you to progress even when you don't have immediate access to your local setup.

- Environment Management: Manage different environments (e.g., local Llama 3.2, production API) within Apidog, making it easy to switch between configurations during development and testing.

- Collaboration Tools: Share your Llama 3.2 API designs and test results with team members, fostering better collaboration in AI-driven projects.

- Performance Monitoring: Monitor the performance of your Llama 3.2 API endpoints, helping you optimize response times and resource usage.

- Security Testing: Implement security tests for your Llama 3.2 API integrations, ensuring that your local model deployment doesn't introduce vulnerabilities.

Getting Started with Apidog for Llama 3.2 Development:

- Sign up for an Apidog account.

- Create a new project for your Llama 3.2 API integration.

- Design your API endpoints that will interact with your local Llama 3.2 instance.

- Set up environments to manage different configurations (e.g., LM Studio setups).

- Create automated tests to ensure your Llama 3.2 integrations are working correctly.

- Use the mock server feature to simulate Llama 3.2 responses during early development stages.

- Collaborate with your team by sharing API designs and test results.

By leveraging Apidog alongside your local Llama 3.2 setup, you can create more robust, well-documented, and thoroughly tested AI-powered applications.

Conclusion: Embracing the Power of Local AI

Running Llama 3.2 locally represents a significant step towards democratizing AI technology. Whether you choose the user-friendly interface of LM Studio or another, you now have the tools to harness the power of advanced language models on your own machine.

Remember that local deployment of large language models like Llama 3.2 is just the beginning. To truly excel in AI development, consider integrating tools like Apidog into your workflow. This powerful platform can help you design, test, and document APIs that interact with your local Llama 3.2 instance, streamlining your development process and ensuring the reliability of your AI-powered applications.

As you embark on your journey with Llama 3.2, keep experimenting, stay curious, and always strive to use AI responsibly. The future of AI is not just in the cloud – it's right here on your local machine, waiting to be explored and harnessed for innovative applications. With the right tools and practices, you can unlock the full potential of local AI and create groundbreaking solutions that push the boundaries of what's possible in technology.