If you are looking for a way to improve the performance and reliability of your APIs, you might want to consider gRPC streaming. gRPC is a modern, open-source RPC framework for building efficient, scalable services with protocol buffers and HTTP/2. Streaming is one of its key features. It lets a client and server exchange a sequence of messages within a single call, instead of making a separate request and waiting for a separate response for every message.

In this blog post, I will explain what gRPC streaming is, how it works, and why it is beneficial for your APIs. I will also show you how to use a tool called Apidog to test and debug your gRPC streaming services. By the end of this post, you will have a better understanding of gRPC streaming and how to use it in your projects.

What Is gRPC Streaming?

gRPC streaming is a way of sending and receiving multiple messages within a single RPC call. gRPC uses HTTP/2 as its transport, and each call runs on its own HTTP/2 stream. HTTP/2 supports multiplexing, which means that many streams, and therefore many calls, can share the same TCP connection without blocking each other. This reduces the overhead of opening and closing connections, and improves the latency and throughput of your APIs.
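How does one stream carry many messages? gRPC's description of its HTTP/2 protocol frames each message with a 5-byte prefix: a 1-byte compressed flag followed by a 4-byte big-endian message length. The minimal sketch below frames and splits messages that way. The helper names frame_message and split_messages are hypothetical, and real gRPC libraries do this framing for you.

```python
import struct
from typing import Iterator

PREFIX_SIZE = 5  # 1-byte compressed flag + 4-byte big-endian length


def frame_message(payload: bytes, compressed: bool = False) -> bytes:
    """Prefix one serialized message with the 5-byte gRPC message header."""
    return struct.pack(">BI", 1 if compressed else 0, len(payload)) + payload


def split_messages(buffer: bytes) -> Iterator[bytes]:
    """Yield each complete message in a buffer of length-prefixed messages."""
    offset = 0
    while offset + PREFIX_SIZE <= len(buffer):
        _compressed, length = struct.unpack_from(">BI", buffer, offset)
        start = offset + PREFIX_SIZE
        end = start + length
        if end > len(buffer):
            break  # the last message is incomplete; wait for more data
        yield buffer[start:end]
        offset = end


# Two messages sent back to back on the same stream
stream_bytes = frame_message(b"first") + frame_message(b"second")
print(list(split_messages(stream_bytes)))  # [b'first', b'second']
```

Because every message carries its own length, the receiver can hand each one to the application as soon as it arrives, without waiting for the stream to end.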

gRPC lets you define four types of service methods, three of which use streaming:

- Unary: This is the simplest and most common pattern, where the client sends one request and receives one response from the server. This is similar to a regular HTTP request and response.

- Server streaming: In this pattern, the client sends one request and receives multiple responses from the server. The server can stream the responses as they become available, without waiting for the client to request them. This is useful for scenarios where the server needs to send a large amount of data to the client, or where the server needs to push updates to the client in real-time.

- Client streaming: In this pattern, the client sends multiple requests and receives one response from the server. The client can stream the requests as they become available, without waiting for the server to acknowledge each one. This is useful for scenarios where the client needs to upload a large amount of data to the server, or needs to send a series of messages (for example, sensor readings) that the server combines into a single result.

- Bidirectional streaming: In this pattern, the client and the server each send a stream of messages. The two streams are independent, so each side can read and write in whatever order it likes, for example replying after every message or only after several, while gRPC still preserves the order of messages within each stream. This is useful for scenarios where the client and the server need a continuous, dynamic conversation, such as a chat session, or where both sides need to push data to each other as it becomes available.
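The four patterns map onto four handler shapes. In grpcio, the Python gRPC library, a servicer method receives either a single request or an iterator of requests, and either returns one response or yields responses. The sketch below mimics those shapes with plain functions so it runs without gRPC: it omits the context argument and uses integers instead of protobuf messages, and the function names are hypothetical.

```python
from typing import Iterable, Iterator


def unary(request: int) -> int:
    # Unary: one request in, one response out
    return request * 2


def server_streaming(request: int) -> Iterator[int]:
    # Server streaming: one request in, many responses out (a generator)
    for i in range(request):
        yield i


def client_streaming(requests: Iterable[int]) -> int:
    # Client streaming: many requests in, one response out
    return sum(requests)


def bidirectional(requests: Iterable[int]) -> Iterator[int]:
    # Bidirectional: many in, many out. This handler replies to each request
    # immediately, but the two directions do not have to move in lockstep.
    for value in requests:
        yield value * value


print(unary(21))                          # 42
print(list(server_streaming(3)))          # [0, 1, 2]
print(client_streaming(iter([1, 2, 3])))  # 6
print(list(bidirectional(iter([2, 3]))))  # [4, 9]
```

Using iterators on the streaming sides is what lets a handler start working on the first message before the last one has arrived.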

How Does gRPC Streaming Work?

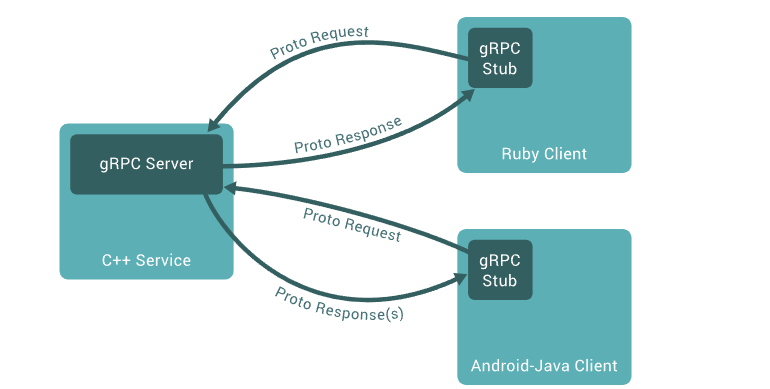

gRPC streaming works by using protocol buffers to encode the messages and HTTP/2 to transport them. Protocol buffers are a binary serialization format: you define the structure and types of your messages in a schema (.proto) file, and a compiler generates code to read and write them. Encoded messages are compact and fast to parse, and code generated from the same schema interoperates across languages and platforms.
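To make "compact binary" concrete, here is a minimal hand-written encoder for a single string field. It follows the wire-format rules in the protocol buffers encoding documentation: a field's tag is the varint (field_number << 3) | wire_type, and strings use wire type 2 followed by a varint length. The helper names are hypothetical; real code should call the generated SerializeToString() method instead. For the GetTimeRequest message defined later in this post, timezone="UTC" should encode to just five bytes.

```python
def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative values")
    out = bytearray()
    while True:
        byte = value & 0x7F  # take the low 7 bits
        value >>= 7
        if value:
            out.append(byte | 0x80)  # set the high bit: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_string_field(field_number: int, text: str) -> bytes:
    """Encode one string field: tag varint, length varint, UTF-8 bytes."""
    data = text.encode("utf-8")
    tag = (field_number << 3) | 2  # wire type 2 = length-delimited
    return encode_varint(tag) + encode_varint(len(data)) + data


# GetTimeRequest(timezone="UTC"): field 1 holds the string "UTC"
print(encode_string_field(1, "UTC"))  # b'\n\x03UTC'
```

Field names never appear on the wire, only field numbers, which is one reason the encoding stays small.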

HTTP/2 is a binary protocol that supports multiplexing and header compression, and it is usually run over TLS for encryption. HTTP/2 sends everything on a connection as frames, its basic unit of communication. Each frame has a 9-byte header that indicates the frame's length, type, flags, and stream ID. The stream ID identifies which stream, and therefore which gRPC call, the frame belongs to, so frames from different calls can be interleaved on one connection and reassembled on the other side. HTTP/2 also provides flow control and error signaling, which help keep your APIs reliable and efficient.
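The frame layout can be illustrated with a small parser. The sketch below follows the 9-byte frame header defined in the HTTP/2 specification (RFC 9113): a 24-bit payload length, an 8-bit type, 8-bit flags, and a reserved bit followed by a 31-bit stream identifier. It then shows multiplexing by reassembling the DATA payloads of two interleaved streams. It is a simplified sketch that ignores padding, HEADERS frames, and flow control, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, NamedTuple

FRAME_HEADER_SIZE = 9  # every HTTP/2 frame starts with a 9-byte header
DATA = 0x0             # frame type code for DATA frames


class FrameHeader(NamedTuple):
    length: int
    frame_type: int
    flags: int
    stream_id: int


def pack_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    """Build a frame: 24-bit length, 8-bit type, 8-bit flags, 31-bit stream ID."""
    return (len(payload).to_bytes(3, "big")
            + bytes([frame_type, flags])
            + (stream_id & 0x7FFFFFFF).to_bytes(4, "big")  # reserved bit left 0
            + payload)


def parse_frame_header(header: bytes) -> FrameHeader:
    """Decode the first 9 bytes of a frame."""
    return FrameHeader(
        length=int.from_bytes(header[0:3], "big"),
        frame_type=header[3],
        flags=header[4],
        stream_id=int.from_bytes(header[5:9], "big") & 0x7FFFFFFF,  # drop reserved bit
    )


def demultiplex(buffer: bytes) -> Dict[int, bytes]:
    """Group the DATA payloads of interleaved frames by their stream ID."""
    streams: Dict[int, bytearray] = defaultdict(bytearray)
    offset = 0
    while offset + FRAME_HEADER_SIZE <= len(buffer):
        header = parse_frame_header(buffer[offset:offset + FRAME_HEADER_SIZE])
        start = offset + FRAME_HEADER_SIZE
        end = start + header.length
        if end > len(buffer):
            break  # incomplete frame: wait for more bytes
        if header.frame_type == DATA:
            streams[header.stream_id] += buffer[start:end]
        offset = end
    return {stream_id: bytes(data) for stream_id, data in streams.items()}


# Two client-initiated streams (odd IDs 1 and 3) interleaved on one connection
wire = (pack_frame(DATA, 0, 1, b"hel") + pack_frame(DATA, 0, 3, b"wor")
        + pack_frame(DATA, 0, 1, b"lo") + pack_frame(DATA, 0, 3, b"ld"))
print(demultiplex(wire))  # {1: b'hello', 3: b'world'}
```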

To use gRPC streaming, you define your service and messages in a protocol buffer (.proto) file. Putting the stream keyword before a method's request or response type turns that side of the call into a stream. For example, here is a simple service that supports a unary call and a server streaming call:

```protobuf
syntax = "proto3";

package example;

// A service that returns the current time
service TimeService {
  // Unary call: returns the current time
  rpc GetTime (GetTimeRequest) returns (GetTimeResponse) {}

  // Server streaming call: returns the current time every second
  rpc StreamTime (StreamTimeRequest) returns (stream StreamTimeResponse) {}
}

// A message that represents a request to get the current time
message GetTimeRequest {
  // The requested timezone as an IANA name, e.g. "UTC" or "America/Los_Angeles"
  string timezone = 1;
}

// A message that represents a response with the current time
message GetTimeResponse {
  // The current time in ISO 8601 format
  string time = 1;
}

// A message that represents a request to stream the current time
message StreamTimeRequest {
  // The requested timezone as an IANA name, e.g. "UTC" or "America/Los_Angeles"
  string timezone = 1;
}

// A message that represents a response with the current time
message StreamTimeResponse {
  // The current time in ISO 8601 format
  string time = 1;
}
```

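To implement your service, you need a gRPC library for your programming language of choice. gRPC supports many languages, such as C#, C++, Go, Java, Node.js, Python, Ruby, and more, and each has its own API and conventions for creating and consuming gRPC services. For example, here is a simple implementation of the TimeService in Python. It assumes you have generated the example_pb2 and example_pb2_grpc modules from the .proto file, for instance with the grpcio-tools package: `python -m grpc_tools.protoc -I. --python_out=. --grpc_python_out=. example.proto`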

```python
import time
from concurrent import futures
from datetime import datetime
# zoneinfo needs Python 3.9+; install the tzdata package if your OS has no timezone database
from zoneinfo import ZoneInfo, ZoneInfoNotFoundError

import grpc

from example_pb2 import GetTimeResponse, StreamTimeResponse
from example_pb2_grpc import TimeServiceServicer, add_TimeServiceServicer_to_server


# A function that returns the current time in a given IANA timezone
# (for example "UTC" or "America/Los_Angeles") as an ISO 8601 string
def get_current_time(timezone):
    return datetime.now(ZoneInfo(timezone)).isoformat()


# A function that ends the call with INVALID_ARGUMENT for unknown timezones
def check_timezone(timezone, context):
    try:
        ZoneInfo(timezone)
    except (ZoneInfoNotFoundError, ValueError):
        # abort() raises an exception, so the handler stops here
        context.abort(grpc.StatusCode.INVALID_ARGUMENT,
                      f"Unknown timezone: {timezone!r}")


# A class that implements the TimeService
class TimeService(TimeServiceServicer):

    # Unary call: returns the current time
    def GetTime(self, request, context):
        check_timezone(request.timezone, context)
        return GetTimeResponse(time=get_current_time(request.timezone))

    # Server streaming call: returns the current time every second
    def StreamTime(self, request, context):
        check_timezone(request.timezone, context)
        # Keep streaming until the client cancels or disconnects
        while context.is_active():
            # Named current_time so it does not shadow the time module
            current_time = get_current_time(request.timezone)
            yield StreamTimeResponse(time=current_time)
            # Wait for one second
            time.sleep(1)


def serve():
    # Create a gRPC server; each open StreamTime call occupies one
    # of these worker threads for as long as it runs
    server = grpc.server(futures.ThreadPoolExecutor(max_workers=10))
    # Add the TimeService to the server
    add_TimeServiceServicer_to_server(TimeService(), server)
    # Listen on port 50051 without TLS (suitable for local development only)
    server.add_insecure_port("[::]:50051")
    server.start()
    # Wait for termination
    server.wait_for_termination()


if __name__ == "__main__":
    serve()
```

To consume your service, you need to use a gRPC client for your programming language of choice. gRPC clients use stubs to communicate with gRPC servers. Stubs are generated from the protocol buffer file, and they provide methods that correspond to the service methods. For example, here is a simple client that calls the TimeService in Python:

```python
import itertools

import grpc

from example_pb2 import GetTimeRequest, StreamTimeRequest
from example_pb2_grpc import TimeServiceStub

# Create a gRPC channel to the server (closed automatically by the with block)
with grpc.insecure_channel("localhost:50051") as channel:
    # Create a stub for the TimeService
    stub = TimeServiceStub(channel)

    # Unary call: get the current time in UTC, giving up after 5 seconds
    response = stub.GetTime(GetTimeRequest(timezone="UTC"), timeout=5)
    print(f"The current time in UTC is {response.time}")

    # Server streaming call: stream the current time in Los Angeles
    responses = stub.StreamTime(StreamTimeRequest(timezone="America/Los_Angeles"))
    # The server streams forever, so read five messages and then cancel the call
    for response in itertools.islice(responses, 5):
        print(f"The current time in Los Angeles is {response.time}")
    responses.cancel()
```

Why Use gRPC Streaming?

gRPC streaming offers many benefits for your APIs, such as:

- Performance: A streaming call sends many messages over one HTTP/2 stream, so you avoid setting up a new call for every message, and HTTP/2 lets many calls share a single connection. This lowers latency, raises throughput, and makes your APIs more responsive and efficient.

- Reliability: gRPC streaming runs on HTTP/2, which provides flow control and error signaling. Flow control lets the receiver limit how much data the sender may transmit before the receiver is ready for more, so a fast sender cannot overwhelm a slow receiver's buffers. On the error side, every gRPC call ends with a status code, and a single call can be cancelled or fail without closing the connection shared by other calls. Together, these features make it easier to build robust APIs that detect failures and shut streams down cleanly.

- Flexibility: gRPC gives you four communication patterns (unary, server streaming, client streaming, and bidirectional streaming), and a single service can mix them, using the right pattern for each method. Changing a method's pattern means editing its definition in the .proto file, regenerating the code, and updating the handler. This gives you more control over your APIs and lets you handle different scenarios and challenges.

- Simplicity: gRPC streaming simplifies the development and maintenance of your APIs, by using protocol buffers and gRPC libraries. Protocol buffers allow you to define your service and messages in a clear and concise way, and generate code for different languages and platforms. gRPC libraries allow you to create and consume your service using a consistent and intuitive API, and handle the low-level details of streaming and HTTP/2 for you. This makes your code more readable, reusable, and portable, and reduces the complexity and boilerplate of your APIs.
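The flow control mentioned under Reliability works like a credit system: the receiver grants the sender a window of bytes, DATA frames use up that window, and WINDOW_UPDATE frames from the receiver replenish it. The toy class below models a single window under that assumption. Real HTTP/2 keeps one window per stream plus one for the whole connection, starting at 65,535 bytes by default, and grpcio manages them for you. The class and method names are hypothetical.

```python
class FlowControlWindow:
    """Toy model of a receiver-granted send window (not a real HTTP/2 stack)."""

    INITIAL_WINDOW = 65_535  # HTTP/2 default initial window size, in bytes

    def __init__(self, size: int = INITIAL_WINDOW) -> None:
        self.available = size

    def send(self, data: bytes) -> int:
        """Send as much of data as the window allows; return the bytes sent."""
        sent = min(len(data), self.available)
        self.available -= sent
        return sent

    def window_update(self, increment: int) -> None:
        """The receiver grants more credit, like an HTTP/2 WINDOW_UPDATE frame."""
        if increment <= 0:
            raise ValueError("increment must be positive")
        self.available += increment


window = FlowControlWindow(size=10)
print(window.send(b"x" * 25))  # 10: the remaining 15 bytes must wait
print(window.send(b"x" * 15))  # 0: the window is exhausted
window.window_update(20)       # the receiver has caught up and grants credit
print(window.send(b"x" * 15))  # 15
```

Because the sender stops when its window is empty, a slow reader pushes back on a fast writer instead of being flooded with data it cannot buffer.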

How to Test and Debug gRPC Streaming Services

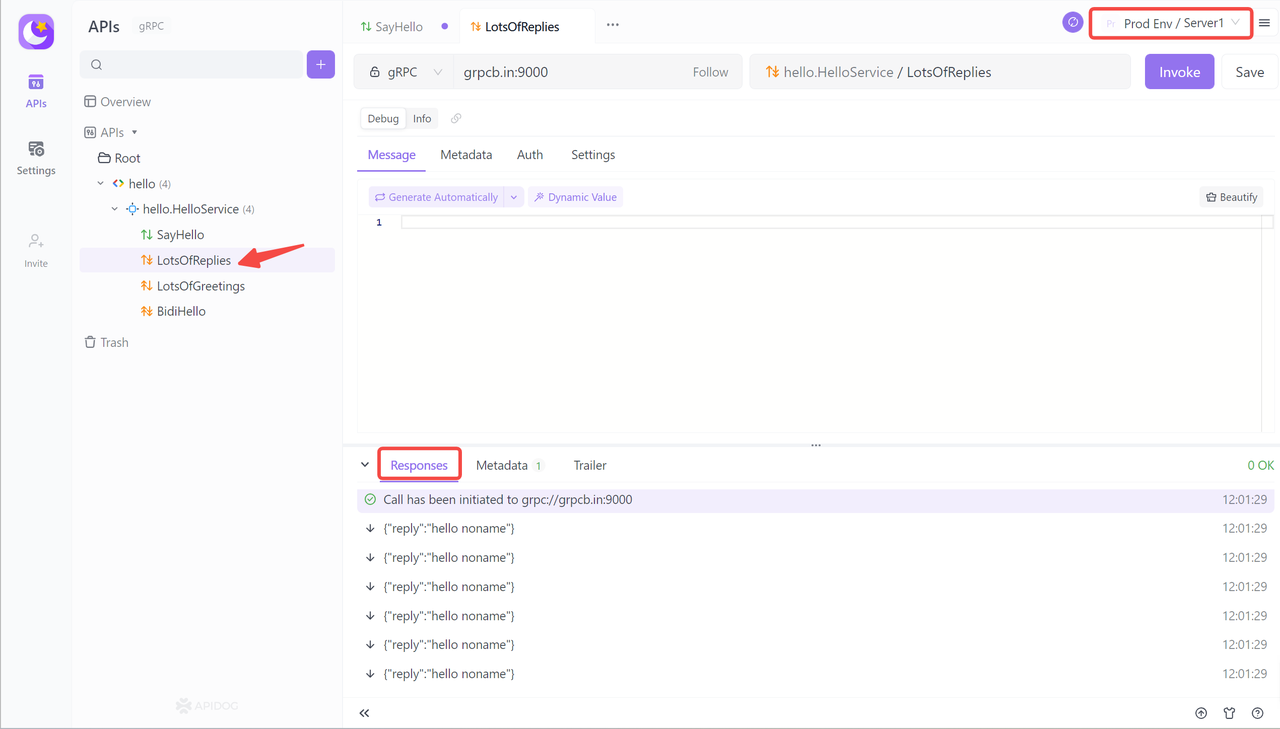

Testing and debugging gRPC streaming services can be challenging, especially if you are using different languages and platforms. Fortunately, there is a tool that can help you with that: Apidog.

Apidog is a web-based tool that lets you test and debug your gRPC streaming services through a simple, intuitive interface. Because it talks to your server over the gRPC protocol itself, Apidog works with services written in any language gRPC supports, such as C#, C++, Go, Java, Node.js, Python, and Ruby. It also supports all four communication patterns: unary, server streaming, client streaming, and bidirectional streaming.

With Apidog, you can:

- Connect to your gRPC server, using a secure or insecure channel, and specify the service and method you want to call.

- Send requests to your gRPC server, using a JSON or binary format, and specify the metadata and deadline for each request.

- Receive responses from your gRPC server, using a JSON or binary format, and view the metadata and status for each response.

- Monitor the performance and status of your gRPC connection, using graphs and charts that show the latency, throughput, and errors of your requests and responses.

- Debug your gRPC service, using logs and traces that show the details and errors of your requests and responses, and allow you to filter and search for specific events.

Apidog is a powerful and easy-to-use tool that can help you test and debug your gRPC streaming services, and ensure that they work as expected.

Conclusion

gRPC streaming can make your APIs faster and more reliable by letting you send and receive multiple messages within a single call, using HTTP/2 and protocol buffers. It also offers flexibility and simplicity: you can choose among four communication patterns and many languages, and work with a consistent, intuitive API. gRPC streaming is a great choice for your APIs, especially if you need to handle large amounts of data, real-time updates, or complex interactions.

If you want to learn more about gRPC streaming, check out the official documentation at https://grpc.io/docs. If you want to test and debug your gRPC streaming services, you can use Apidog, a web-based tool that lets you connect to your services, send requests, receive responses, and monitor and debug your calls through a simple, intuitive interface. You can try Apidog for free.