xAI's Grok models have emerged as powerful contenders challenging industry leaders like OpenAI's GPT-4, Anthropic's Claude, and Google's Gemini. Developed by Elon Musk's artificial intelligence company, Grok 3 and its smaller sibling, Grok 3 Mini, represent cutting-edge AI technology that's generating significant interest among developers, researchers, and AI enthusiasts.

This comprehensive guide explores how you can access and use Grok 3 and Grok 3 Mini online without paying for an X Premium+ subscription, focusing on OpenRouter as a gateway. We'll examine their performance benchmarks, API pricing, and usage limits, and walk through practical steps to use these models effectively.

Understanding Grok 3 and Grok 3 Mini

Grok 3: The Flagship Model

Grok 3 is xAI's flagship model designed to excel in enterprise applications. According to OpenRouter's description, it demonstrates exceptional capabilities in:

- Data extraction and analytics

- Advanced coding and technical problem-solving

- Comprehensive text summarization

- Deep domain knowledge across finance, healthcare, law, and scientific fields

- Strong performance on structured tasks and challenging benchmarks

Grok 3 features a 131,072-token context window, large enough to process very long documents or conversations in a single request.
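To get a rough sense of whether a document fits in that window, a minimal sketch follows. It assumes roughly four characters per token, a common rule of thumb for English text and not xAI's actual tokenizer; the function name `fits_in_context` and the output reserve are likewise illustrative.

```python
import math

CONTEXT_WINDOW = 131_072  # token limit quoted in this guide


def fits_in_context(text: str, reserved_for_output: int = 4_096,
                    chars_per_token: float = 4.0) -> bool:
    """Estimate whether `text` plus an output reserve fits in the window.

    chars_per_token is a heuristic assumption, not a real tokenizer count.
    """
    estimated_tokens = math.ceil(len(text) / chars_per_token)
    return estimated_tokens + reserved_for_output <= CONTEXT_WINDOW


short_doc = "word " * 2_000       # about 10,000 characters
long_doc = "word " * 120_000      # about 600,000 characters
print(fits_in_context(short_doc))
print(fits_in_context(long_doc))
```

For billing-grade numbers, rely on the token counts returned with each API response instead of this estimate.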

Grok 3 Mini: The Efficient Thinker

Grok 3 Mini represents a more lightweight alternative with some distinctive characteristics:

- Designed as a "smaller thinking model" that contemplates before responding

- Excels at reasoning-heavy tasks requiring careful thought processes

- Particularly effective for mathematical and quantitative problems

- Features transparent "thinking traces" that show its reasoning process

- Optimized for better performance-to-cost ratio compared to the full model

- Also offers a 131,072 token context window

A unique feature of Grok 3 Mini is its reasoning capability, which can be adjusted with the parameter setting reasoning: { effort: "high" } for more complex tasks.
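As a minimal sketch of how that setting could travel in a request, the helper below builds a chat-completions payload as a plain dictionary. The name `build_grok_payload` is hypothetical, and the set of accepted effort values is an assumption; only the `reasoning` field shape and the model ID come from this guide.

```python
from typing import Any, Dict, Optional

# Assumed set of effort levels; check the provider docs for the real list.
ALLOWED_EFFORTS = ("low", "medium", "high")


def build_grok_payload(prompt: str,
                       model: str = "x-ai/grok-3-mini-beta",
                       effort: Optional[str] = None) -> Dict[str, Any]:
    """Build a request body, adding the reasoning field only when asked."""
    payload: Dict[str, Any] = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if effort is not None:
        if effort not in ALLOWED_EFFORTS:
            raise ValueError(f"unsupported reasoning effort: {effort!r}")
        payload["reasoning"] = {"effort": effort}
    return payload


payload = build_grok_payload("What is 17 * 23?", effort="high")
print(payload["reasoning"])
```

Leaving `effort` unset keeps the payload free of the field, so the model's default behavior applies.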

Benchmarks: How Grok 3 Performs Against Competitors

While complete benchmark data for the latest Grok 3 model is still emerging, we can gain insights from xAI's published results for previous Grok versions and industry comparisons.

Based on available data from xAI's blog posts and third-party assessments, Grok 3 demonstrates competitive performance against top-tier models:

- MMLU (Massive Multitask Language Understanding): Earlier Grok models showed significant improvements between versions. Grok-1 scored 73% on 5-shot MMLU tests, while Grok-1.5 reached 81.3%, making it competitive with models like Claude 3 Sonnet.

- GPQA, LCB, and MMLU-Pro: According to OpenRouter's description, Grok 3 outperforms Grok 3 Mini on these advanced benchmarks even when Mini is set to high thinking mode, suggesting Grok 3's superior capabilities on complex reasoning tasks.

- Reasoning and Problem-Solving: A key strength of the Grok models is their "thinking before responding" approach, which appears to yield better results on multi-step reasoning problems compared to models that generate answers immediately.

While direct head-to-head comparisons with the latest versions of GPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 will require more comprehensive third-party testing, early indications suggest Grok 3 is positioned as a highly competitive model in the top tier of current LLMs.

Grok 3 vs Grok 3 Mini: Which Model Should You Pick?

Grok 3 (Full Model)

- Complex financial analysis and forecasting

- Advanced scientific research assistance

- In-depth legal document analysis and contract review

- Sophisticated code generation and debugging for enterprise applications

- Medical research and healthcare data interpretation

Grok 3 Mini

- Educational tutoring and problem-solving

- General customer service and FAQ responses

- Content summarization and generation

- Basic coding assistance and debugging

- Mathematical problem-solving

- Creative writing and brainstorming

How to Use Grok 3 and Grok 3 Mini for Free through OpenRouter

The primary way to access Grok is through an X Premium+ subscription, which costs $16/month. However, OpenRouter offers an alternative route that allows developers to test and use these models with more flexibility and potentially lower costs.

What is OpenRouter?

OpenRouter is a unified API platform that provides access to over 300 AI models from various providers through a single interface. It standardizes requests and responses across different model providers, making it easier to experiment with and compare different AI models without managing multiple API integrations.

Steps to Access Grok 3 and Grok 3 Mini via OpenRouter

Create an OpenRouter Account:

- Visit OpenRouter.ai

- Sign up for a new account or log in to your existing account

- New users typically receive some free credits to start experimenting

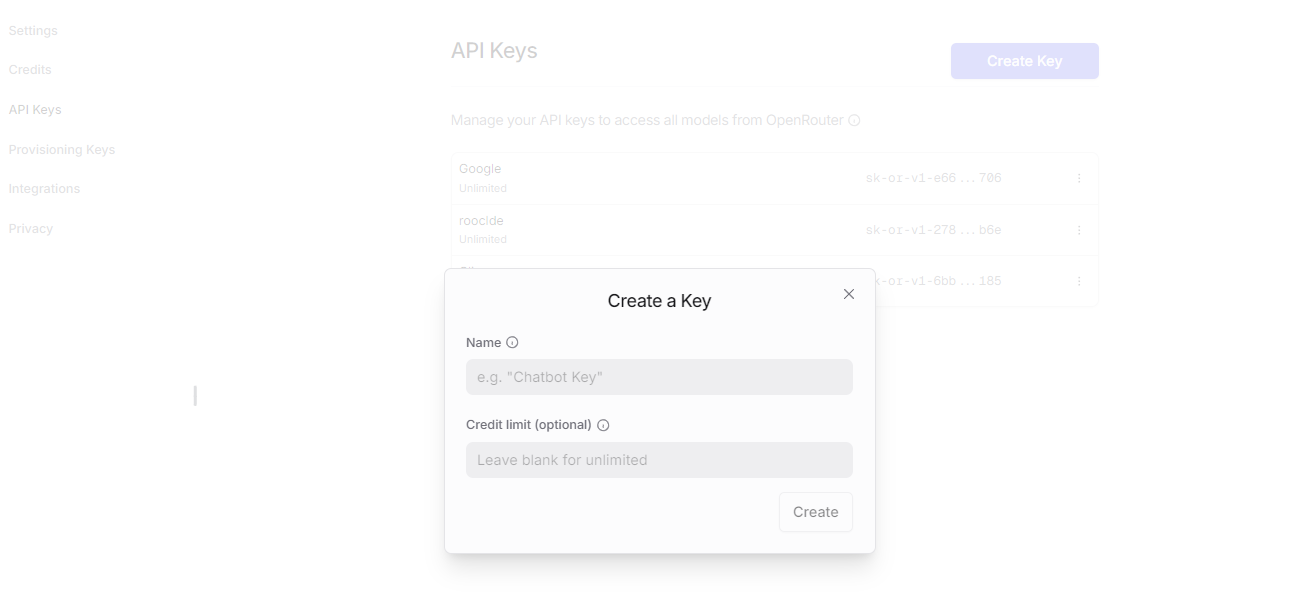

Generate an API Key:

- Navigate to your account settings

- Look for the API Keys section

- Create a new API key and save it securely

Set Up Your Development Environment:

- OpenRouter provides an OpenAI-compatible API, meaning you can use the OpenAI SDK

- Install the necessary libraries (for Python users: pip install openai)

Make API Calls to Grok Models:

    from openai import OpenAI

    # Configure the client with your OpenRouter API key
    client = OpenAI(
        base_url="https://openrouter.ai/api/v1",
        api_key="your_openrouter_api_key_here",
    )

    # For Grok 3
    response = client.chat.completions.create(
        model="x-ai/grok-3-beta",
        messages=[
            {"role": "user", "content": "Explain quantum computing in simple terms"}
        ],
    )
    print(response.choices[0].message.content)

    # For Grok 3 Mini with high reasoning
    response = client.chat.completions.create(
        model="x-ai/grok-3-mini-beta",
        messages=[
            {"role": "user", "content": "Solve this puzzle: A bat and ball cost $1.10. The bat costs $1 more than the ball. How much does the ball cost?"}
        ],
        # Optional parameter to enhance reasoning. It is not a named argument
        # of the OpenAI SDK, so it is passed through extra_body.
        extra_body={"reasoning": {"effort": "high"}},
    )
    print(response.choices[0].message.content)

For JavaScript/Node.js developers, a similar approach applies:

    import OpenAI from 'openai';

    const openai = new OpenAI({
      baseURL: 'https://openrouter.ai/api/v1',
      apiKey: 'your_openrouter_api_key_here',
    });

    async function getGrokResponse() {
      const response = await openai.chat.completions.create({
        model: 'x-ai/grok-3-beta',
        messages: [
          { role: 'user', content: 'Explain quantum computing in simple terms' }
        ],
      });

      console.log(response.choices[0].message.content);
    }

    getGrokResponse();

Advanced Usage Tips

Endpoint Selection: OpenRouter notes that there are two xAI endpoints for Grok models. By default, you'll be routed to the base endpoint, but you can add provider: { sort: "throughput" } to your request to use the faster endpoint when available.
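A minimal sketch of adding that option to an existing request body is shown below. The helper name `with_throughput_routing` is hypothetical; the `provider` field and its `sort` value are the ones quoted above.

```python
import copy
from typing import Any, Dict


def with_throughput_routing(payload: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the payload that asks for throughput-sorted routing."""
    routed = copy.deepcopy(payload)  # leave the caller's dict untouched
    routed.setdefault("provider", {})["sort"] = "throughput"
    return routed


base = {
    "model": "x-ai/grok-3-beta",
    "messages": [{"role": "user", "content": "Summarize this report."}],
}
fast = with_throughput_routing(base)
print(fast["provider"])
```

When calling through the OpenAI Python SDK, non-standard fields such as `provider` can be supplied via the `extra_body` argument of `chat.completions.create`.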

Headers for Analytics: Add optional headers to allow your app to appear on OpenRouter leaderboards:

    headers = {
        "HTTP-Referer": "https://your-site.com",
        "X-Title": "Your Application Name"
    }

Handling Specific Requirements: For certain applications, you may need to adjust parameters like temperature, top_p, or max_tokens to optimize the model's output for your use case.
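As an illustration, the sketch below attaches those sampling parameters to a request body with basic range checks. The helper `apply_sampling` is hypothetical, and the accepted ranges (temperature 0 to 2, top_p above 0 up to 1) are assumptions borrowed from OpenAI-style APIs, not limits confirmed for Grok.

```python
from typing import Any, Dict, Optional


def apply_sampling(payload: Dict[str, Any], *,
                   temperature: Optional[float] = None,
                   top_p: Optional[float] = None,
                   max_tokens: Optional[int] = None) -> Dict[str, Any]:
    """Return a copy of the payload with validated sampling settings."""
    tuned = dict(payload)
    if temperature is not None:
        if not 0.0 <= temperature <= 2.0:  # assumed range
            raise ValueError("temperature must be between 0 and 2")
        tuned["temperature"] = temperature
    if top_p is not None:
        if not 0.0 < top_p <= 1.0:  # assumed range
            raise ValueError("top_p must be in (0, 1]")
        tuned["top_p"] = top_p
    if max_tokens is not None:
        if max_tokens < 1:
            raise ValueError("max_tokens must be positive")
        tuned["max_tokens"] = max_tokens
    return tuned


base = {"model": "x-ai/grok-3-beta",
        "messages": [{"role": "user", "content": "List the key dates."}]}
extraction = apply_sampling(base, temperature=0.0, max_tokens=300)
brainstorm = apply_sampling(base, temperature=0.9, top_p=0.95)
print(extraction["temperature"], brainstorm["temperature"])
```

A low temperature tends to suit extraction and other structured tasks, while higher values give more varied output for brainstorming.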

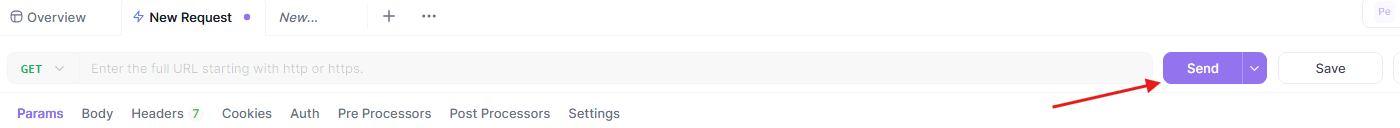

Testing Grok 3 API with Apidog

Apidog is a comprehensive API testing tool that simplifies the process of interacting with APIs like Grok 3. Its features, such as environment management and scenario simulation, make it ideal for developers. Let’s see how to use Apidog to test the Grok 3 API.

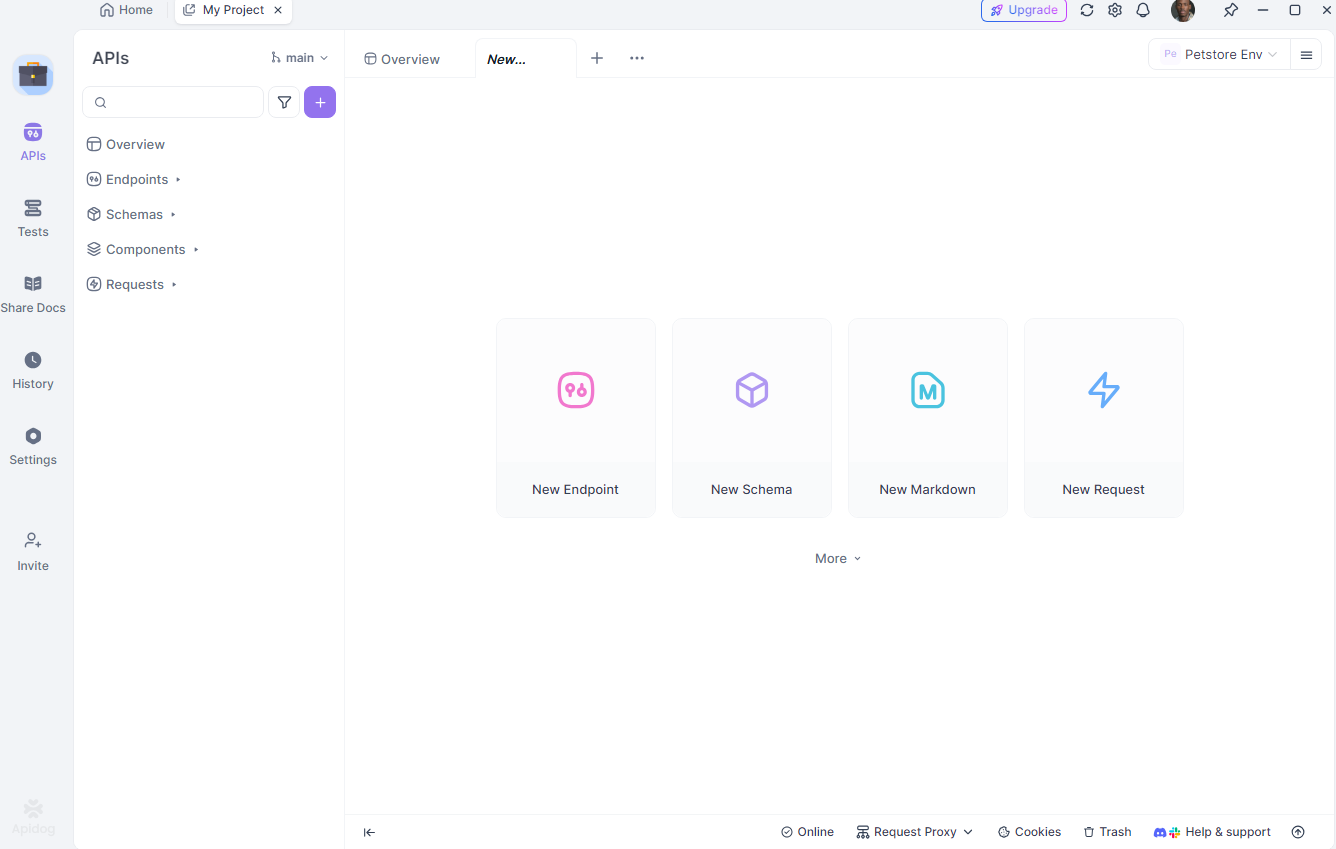

Set Up Apidog

First, download and install Apidog from apidog.com. Once installed, create a new project and add the Grok 3 API endpoint: https://openrouter.ai/api/v1/chat/completions.

Configure Your Environment

Next, set up different environments (e.g., development and production) in Apidog. Define variables like your API key and base URL to easily switch between setups. In Apidog, go to the “Environments” tab and add:

- api_key: Your OpenRouter API key

- base_url: https://openrouter.ai/api/v1

Create a Test Request

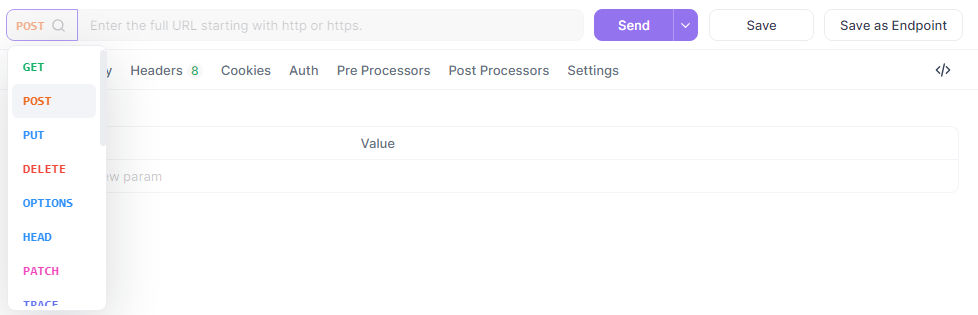

Now, create a new POST request in Apidog.

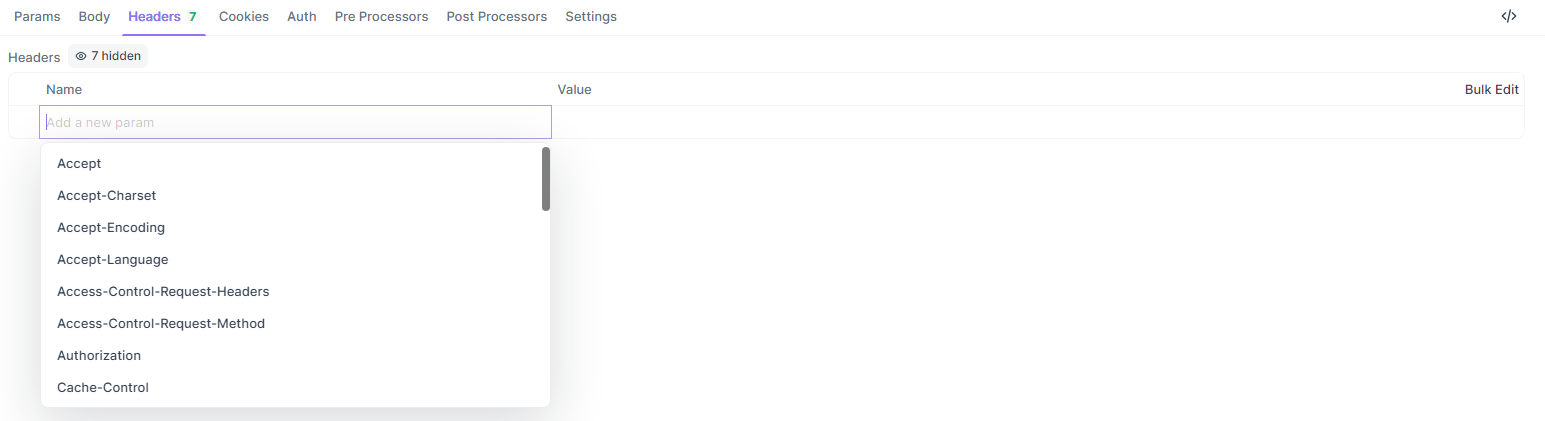

Set the URL to {{base_url}}/chat/completions, add your headers, and input the JSON body:

    {
      "model": "x-ai/grok-3-beta",
      "messages": [
        {"role": "user", "content": "Explain the difference between let and const in JavaScript."}
      ],
      "max_tokens": 300
    }

In the headers section, add:

- Authorization: Bearer {{api_key}}

- Content-Type: application/json
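For reference, the same request can be assembled outside Apidog. The sketch below uses only Python's standard library to build (but not send) the POST request; `build_chat_request` is a hypothetical helper name and the API key is a placeholder.

```python
import json
import urllib.request

BASE_URL = "https://openrouter.ai/api/v1"


def build_chat_request(api_key: str, model: str, prompt: str,
                       max_tokens: int = 300) -> urllib.request.Request:
    """Assemble the URL, headers, and JSON body described in this section."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    "your_openrouter_api_key_here",
    "x-ai/grok-3-beta",
    "Explain the difference between let and const in JavaScript.",
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```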

Run and Analyze the Test

Finally, send the request and analyze the response in Apidog’s visual interface. Apidog provides detailed reports, including response time, status code, and token usage. You can also save this request as a reusable scenario for future testing.

Apidog’s ability to simulate real-world scenarios and generate exportable reports makes it a powerful tool for debugging and optimizing your interactions with the Grok 3 API. Next, let’s look at pricing and usage limits.

API Pricing and Limits of Grok 3 APIs

Understanding the pricing structure and usage limits is crucial for managing costs when using Grok models via OpenRouter.

Pricing Structure

As of current data from OpenRouter:

- Grok 3 Beta:

  - $3.00 per million input tokens

  - $15.00 per million output tokens

- Grok 3 Mini Beta:

  - $0.30 per million input tokens

  - $0.50 per million output tokens

This pricing model highlights the significant cost difference between the two models, with Grok 3 Mini being approximately 10x cheaper for input tokens and 30x cheaper for output tokens. For many applications where the full capabilities of Grok 3 aren't necessary, Grok 3 Mini offers a much more cost-effective solution.
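To make the difference concrete, here is a small sketch that estimates the cost of a single request from the prices listed above. The function name `estimate_cost` and the example token counts are illustrative, and the prices are the ones quoted in this guide, which may change.

```python
# USD per million tokens, as quoted in this guide.
PRICES_PER_MILLION = {
    "x-ai/grok-3-beta": {"input": 3.00, "output": 15.00},
    "x-ai/grok-3-mini-beta": {"input": 0.30, "output": 0.50},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for one request."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Example: a 10,000-token prompt with a 1,000-token answer.
for model in PRICES_PER_MILLION:
    print(f"{model}: ${estimate_cost(model, 10_000, 1_000):.4f}")
```

For this example request, the full model comes to about 4.5 cents and the Mini model to about a third of a cent.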

How OpenRouter Free Credits Work

OpenRouter typically offers new users some free credits upon signup, which allows for initial experimentation with models including Grok 3 and Grok 3 Mini. While the exact amount may vary, these credits provide an opportunity to test the models before committing to a payment method.

For example, with $5 in credits (an illustrative figure, since the actual free amount may differ), you could process:

- Approximately 1.67M input tokens or 333K output tokens with Grok 3

- Around 16.7M input tokens or 10M output tokens with Grok 3 Mini

This is sufficient for considerable testing and small-scale applications without any upfront cost.
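The arithmetic behind these figures is simply the budget divided by the per-million-token price. A short sketch, with `tokens_for_budget` as a hypothetical helper and the $5 budget as an illustrative amount:

```python
def tokens_for_budget(budget_usd: float, price_per_million: float) -> int:
    """Return how many whole tokens a budget buys at a per-million price."""
    return int(budget_usd / price_per_million * 1_000_000)


budget = 5.00  # illustrative credit amount in USD
print("Grok 3 input:      ", tokens_for_budget(budget, 3.00))
print("Grok 3 output:     ", tokens_for_budget(budget, 15.00))
print("Grok 3 Mini input: ", tokens_for_budget(budget, 0.30))
print("Grok 3 Mini output:", tokens_for_budget(budget, 0.50))
```

Real requests consume input and output tokens together, so the budget is shared between the two rather than spent on one or the other.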

Usage Limits and Considerations for Using Free Grok 3 APIs

While using Grok models via OpenRouter, be aware of these potential limitations:

- Rate Limits: OpenRouter may impose rate limits on API calls, especially for new or free-tier users.

- Context Window Utilization: Both Grok 3 and Grok 3 Mini offer impressive 131,072 token context windows, but using the full capacity will consume more tokens and therefore increase costs.

- Queue Times: During high demand periods, requests may experience longer processing times, especially for the more powerful Grok 3 model.

- Beta Status: Both models are currently in beta, which means their performance, availability, and pricing could change as they move towards general availability.

- Credit Depletion Alerts: Set up monitoring to be notified when your free credits are running low to avoid unexpected service interruptions.
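One common way to cope with rate limits is to retry with exponential backoff. The sketch below is one possible approach, not something OpenRouter prescribes: `RateLimited` is a hypothetical stand-in for whatever error your client raises on an HTTP 429 response, and the delay values are arbitrary defaults.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical stand-in for a client's rate-limit (HTTP 429) error."""


def call_with_backoff(call: Callable[[], T], max_attempts: int = 5,
                      base_delay: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` on RateLimited, doubling the wait after each failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of retries, surface the error
            # Exponential delay plus random jitter to avoid synchronized retries.
            sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
    raise AssertionError("unreachable")


# Demo with a fake call that is rate limited twice before succeeding.
state = {"calls": 0}


def flaky_request() -> str:
    state["calls"] += 1
    if state["calls"] < 3:
        raise RateLimited()
    return "ok"


print(call_with_backoff(flaky_request, sleep=lambda seconds: None))
```

With the OpenAI Python SDK, the exception to catch would be `openai.RateLimitError` in place of the stand-in class.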

Conclusion

Accessing Grok 3 and Grok 3 Mini "for free" through OpenRouter presents an excellent opportunity to explore these powerful AI models without committing to an X Premium+ subscription. While the free credits won't last forever, they provide enough runway to thoroughly test the models and determine if they meet your needs before deciding on ongoing usage.

The significant performance capabilities of both models, combined with OpenRouter's flexible API access and competitive pricing (especially for Grok 3 Mini), make them valuable additions to any developer's AI toolkit. As these models continue to evolve beyond their current beta status, we can expect even more impressive capabilities and optimizations.

Whether you're building the next cutting-edge AI application, conducting research, or simply exploring the capabilities of today's most advanced language models, OpenRouter's access to Grok 3 and Grok 3 Mini offers a practical and cost-effective path to leverage xAI's technology without being limited to the X platform interface.

Start by claiming your free OpenRouter credits today, and discover how Grok models can enhance your projects with their unique reasoning capabilities and performance characteristics.