Introduction

GPT4Free is an open-source Python library that provides access to a collection of powerful language models through various providers. It serves as a proof-of-concept API package that demonstrates multi-provider AI requests with features like timeouts, load balancing, and flow control. This library enables developers to use different language models for text generation, including GPT-3.5 and GPT-4 variants, without the need for official API keys in many cases.

This tutorial will guide you through the installation, setup, and usage of the gpt4free library, showing you how to leverage its capabilities for various AI-powered text generation tasks.

Legal Considerations

By using this repository or any code related to it, you agree to the legal notice provided by the developers. The original author is not responsible for the usage of this repository nor endorses it. The author is also not responsible for any copies, forks, re-uploads made by other users, or anything else related to GPT4Free.

GPT4Free serves primarily as a proof of concept demonstrating the development of an API package with multi-provider requests. Using this library to bypass official APIs may violate the terms of service of various AI providers. Before deploying any solution based on this library in a production environment, ensure you have the proper authorization and comply with the terms of service of each provider you intend to use.

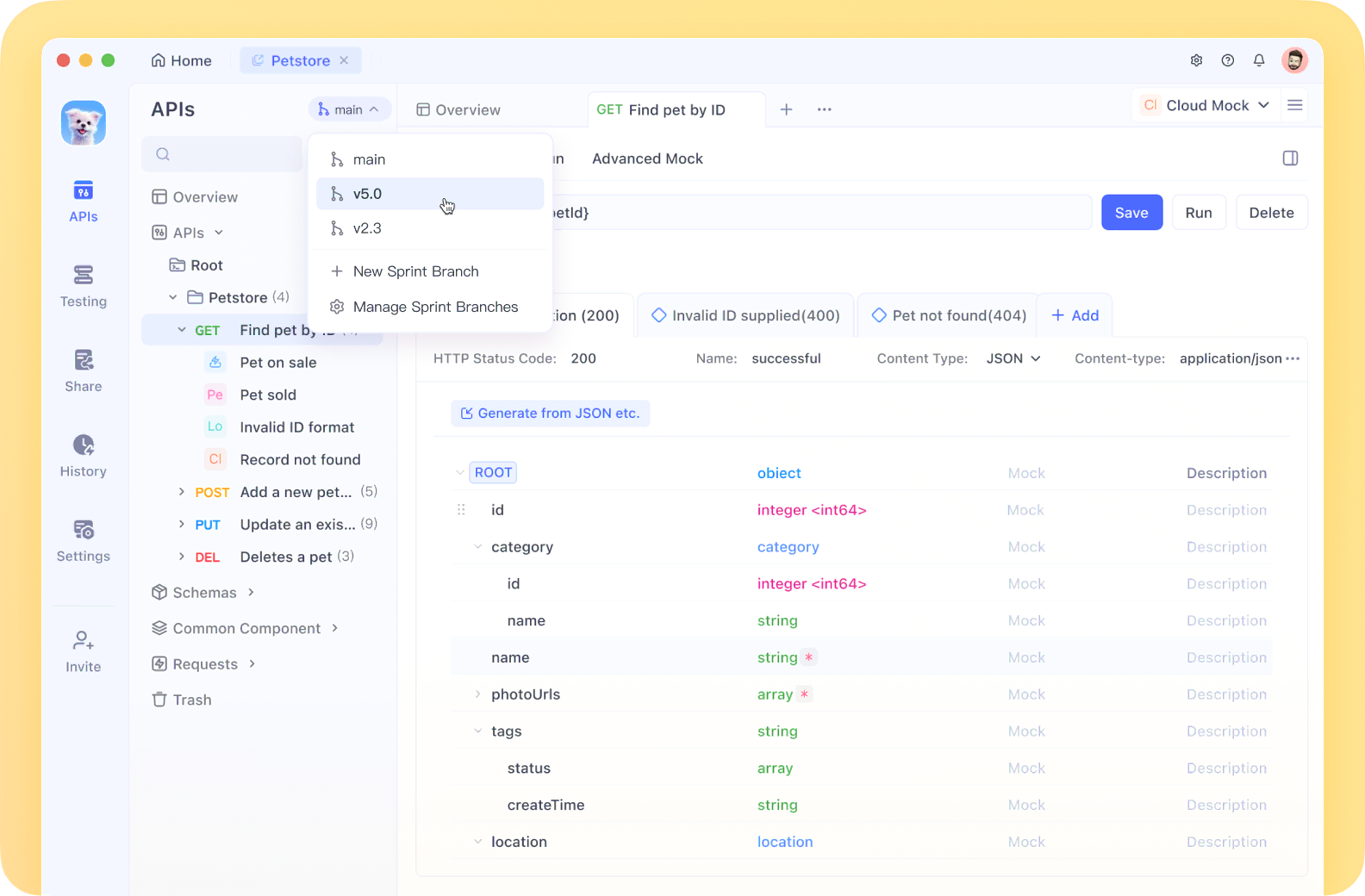

Before diving into the implementation details, it's worth mentioning that you can use Apidog as an excellent alternative to Postman for API testing with gpt4free. Apidog is a comprehensive API development platform that offers features such as API design, debugging, automated testing, and documentation.

When working with the gpt4free interference API, Apidog can help you:

- Send requests to the API endpoints

- Test different parameter configurations

- Visualize response data

- Create and save collections of API requests for future use

How to Install GPT4Free

Prerequisites

- Python 3.10 or higher (recommended)

- Google Chrome (required for providers with webdriver)

Methods to Install GPT4Free

Method 1: Using PyPI

For a complete installation with all features:

pip install -U "g4f[all]"

For partial installations (the quotes keep shells such as zsh from treating the square brackets as a glob pattern):

# For OpenAI Chat provider
pip install -U "g4f[openai]"

# For interference API
pip install -U "g4f[api]"

# For web interface
pip install -U "g4f[gui]"

# For image generation
pip install -U "g4f[image]"

# For providers with webdriver
pip install -U "g4f[webdriver]"

# For proxy support
pip install -U aiohttp_socks

Method 2: From GitHub Repository

# Clone the repository
git clone https://github.com/xtekky/gpt4free.git

# Navigate to the project directory
cd gpt4free

# Create a virtual environment (recommended)
python3 -m venv venv

# Activate the virtual environment
# On Windows:
.\venv\Scripts\activate
# On macOS and Linux:
source venv/bin/activate

# Install minimum requirements
pip install -r requirements-min.txt

# Or install all requirements
pip install -r requirements.txt

Method 3: Using Docker

# Pull the Docker image
docker pull hlohaus789/g4f

# Run the container
docker run -p 8080:8080 -p 1337:1337 -p 7900:7900 --shm-size="2g" hlohaus789/g4f:latest

For the slim version (compatible with both x64 and arm64):

# Create necessary directories
mkdir -p ${PWD}/har_and_cookies ${PWD}/generated_images
chown -R 1000:1000 ${PWD}/har_and_cookies ${PWD}/generated_images

# Run the slim Docker image
docker run \
  -p 1337:1337 \
  -v ${PWD}/har_and_cookies:/app/har_and_cookies \
  -v ${PWD}/generated_images:/app/generated_images \
  hlohaus789/g4f:latest-slim \
  /bin/sh -c 'rm -rf /app/g4f && pip install -U g4f[slim] && python -m g4f --debug'

How to Use GPT4Free with Python Basics

Text Generation with ChatCompletion

Simple Example

import g4f

# Enable debug logging (optional)
g4f.debug.logging = True

# Disable automatic version checking (optional)
g4f.debug.version_check = False

# Normal (non-streaming) response
response = g4f.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[{"role": "user", "content": "Hello, how are you today?"}]
)

print(response)
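The `messages` argument is a list of role/content dictionaries, so a multi-turn conversation is simply a list that grows with each exchange. The following is a minimal sketch of that idea, assuming the provider accepts OpenAI-style `system`, `user`, and `assistant` roles. The `Conversation` class, the `send` callable, and `echo_backend` are hypothetical names introduced for illustration and are not part of g4f.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps chat history so each request carries the earlier turns."""

    def __init__(self, system_prompt: str = "") -> None:
        self.messages: List[Message] = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def ask(self, prompt: str, send: Callable[[List[Message]], str]) -> str:
        # Record the user turn, call the backend, then record its reply.
        self.messages.append({"role": "user", "content": prompt})
        reply = send(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def echo_backend(messages: List[Message]) -> str:
    """Stand-in backend (hypothetical) so the sketch runs offline."""
    return f"echo: {messages[-1]['content']}"


chat = Conversation(system_prompt="You are concise.")
chat.ask("Hello", echo_backend)
chat.ask("And again", echo_backend)
print(len(chat.messages))
```

To talk to a real model, `send` could be a small function that forwards the list to `g4f.ChatCompletion.create(model="gpt-3.5-turbo", messages=...)` and returns the result.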

Streaming Response

import g4f

# Stream the response
response = g4f.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[{"role": "user", "content": "Write a short story about a robot."}],
    stream=True
)

for message in response:
    print(message, end='', flush=True)
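Printing chunks as they arrive discards the text once the loop ends. If the full response is also needed afterwards, the chunks can be collected while they are displayed. This is a small sketch, assuming the stream yields plain string fragments as in the loop above; `collect_stream` is a hypothetical helper, not a g4f function.

```python
from typing import Iterable


def collect_stream(chunks: Iterable[object], echo: bool = True) -> str:
    """Optionally print chunks as they arrive and return the joined text."""
    parts = []
    for chunk in chunks:
        if not isinstance(chunk, str) or not chunk:
            continue  # skip empty or non-text items defensively
        if echo:
            print(chunk, end="", flush=True)
        parts.append(chunk)
    if echo:
        print()  # finish the line once the stream is exhausted
    return "".join(parts)


# Offline demonstration with a list standing in for the streamed response.
full_text = collect_stream(["Once ", "upon ", "", "a time."], echo=False)
```

With a live request, the `response` iterator returned by `create(..., stream=True)` would be passed in place of the list.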

Using a Specific Provider

import g4f

# List all available working providers
providers = [provider.__name__ for provider in g4f.Provider.__providers__ if provider.working]
print("Available providers:", providers)

# Use a specific provider
response = g4f.ChatCompletion.create(
    model="gpt-3.5-turbo",
    provider=g4f.Provider.Aichat,
    messages=[{"role": "user", "content": "Explain quantum computing in simple terms."}]
)

print(response)
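Because individual providers can stop working without notice, it can help to try several in order and keep the first usable answer. The sketch below shows one way to do that. It is not g4f's own retry logic, and `first_successful`, `ask`, and `fake_ask` are hypothetical names used only for illustration.

```python
from typing import Callable, Iterable, List, Tuple


def first_successful(
    providers: Iterable[object],
    ask: Callable[[object], str],
) -> Tuple[object, str]:
    """Try providers in order; return (provider, response) for the first success."""
    errors: List[str] = []
    for provider in providers:
        label = getattr(provider, "__name__", provider)
        try:
            response = ask(provider)
        except Exception as exc:  # providers can fail in many different ways
            errors.append(f"{label}: {exc}")
            continue
        if response:  # treat an empty reply as a failure too
            return provider, response
        errors.append(f"{label}: empty response")
    raise RuntimeError("All providers failed:\n" + "\n".join(errors))


# Offline demonstration with stand-in "providers" (plain strings).
def fake_ask(provider: object) -> str:
    if provider == "broken":
        raise ConnectionError("unreachable")
    return f"answer from {provider}"


used, answer = first_successful(["broken", "healthy"], fake_ask)
```

In real use, `ask` would wrap `g4f.ChatCompletion.create(...)` with `provider=` set to the candidate, and the list would hold provider classes taken from `g4f.Provider`.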

Using the Client API

A more modern approach using the client API:

from g4f.client import Client

# Create a client instance
client = Client()

# Generate text using chat completions
response = client.chat.completions.create(
    model="gpt-4o-mini",
    messages=[{"role": "user", "content": "Hello"}],
    web_search=False
)

print(response.choices[0].message.content)
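The nested `response.choices[0].message.content` access is easy to wrap in a helper that also copes with an empty result. The sketch below assumes only the response shape shown above. The `ask` function and the stand-in client are hypothetical, and the stand-in exists purely so the example runs without network access.

```python
from types import SimpleNamespace


def ask(client, prompt: str, model: str = "gpt-4o-mini") -> str:
    """Send one user message and return the reply text, or "" if none came back."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    if not response.choices:
        return ""
    return response.choices[0].message.content or ""


# Stand-in client (hypothetical) that mimics the response shape offline.
def _fake_create(model, messages):
    message = SimpleNamespace(content=f"[{model}] {messages[-1]['content']}")
    return SimpleNamespace(choices=[SimpleNamespace(message=message)])


fake_client = SimpleNamespace(
    chat=SimpleNamespace(completions=SimpleNamespace(create=_fake_create))
)
reply = ask(fake_client, "Hello")
```

Passing a real `Client()` instance instead of `fake_client` would route the same call through g4f.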

Image Generation

from g4f.client import Client

# Create a client instance
client = Client()

# Generate an image
response = client.images.generate(
    model="flux",
    prompt="a white siamese cat",
    response_format="url"
)

print(f"Generated image URL: {response.data[0].url}")
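Once a URL comes back, a typical next step is to pick a local filename before downloading the image. The sketch below covers only that bookkeeping, assuming the `response.data[i].url` shape shown above. `image_urls`, `filename_for`, and the stand-in response are hypothetical names for illustration.

```python
import posixpath
from types import SimpleNamespace
from urllib.parse import urlparse


def image_urls(response) -> list:
    """Return every non-empty URL found in response.data."""
    return [item.url for item in response.data if getattr(item, "url", None)]


def filename_for(url: str, fallback: str = "image.png") -> str:
    """Derive a local filename from the URL path, or use the fallback."""
    name = posixpath.basename(urlparse(url).path)
    return name or fallback


# Stand-in response (hypothetical) so the sketch runs offline.
fake_response = SimpleNamespace(
    data=[
        SimpleNamespace(url="https://example.com/out/cat.webp?sig=1"),
        SimpleNamespace(url=None),
    ]
)
names = [filename_for(url) for url in image_urls(fake_response)]
```

The query string is ignored because only the path component is used, and a URL with no file part falls back to the default name.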

Provider Authentication

Some providers require cookies or access tokens for authentication:

from g4f import set_cookies

# Set cookies for Bing
set_cookies(".bing", {"_U": "cookie value"})

# Set access token for OpenAI Chat
set_cookies("chat.openai.com", {"access_token": "token value"})
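Hardcoding cookie or token values in source files makes them easy to leak. One alternative is to read them from environment variables and pass the resulting dictionary to `set_cookies`. This is a sketch under that assumption; `cookies_from_env` and the variable names `BING_U_COOKIE` and `BING_EXTRA_COOKIE` are hypothetical.

```python
import os
from typing import Dict, Mapping


def cookies_from_env(names: Mapping[str, str]) -> Dict[str, str]:
    """Map cookie names to values read from environment variables.

    `names` maps cookie name -> environment variable name. Missing or empty
    variables are skipped so that no blank cookie is passed along.
    """
    cookies = {}
    for cookie_name, env_name in names.items():
        value = os.environ.get(env_name, "").strip()
        if value:
            cookies[cookie_name] = value
    return cookies


# The variable is set here only so the demonstration is reproducible;
# normally it would be exported in the shell or a secrets manager.
os.environ["BING_U_COOKIE"] = "cookie value"
os.environ.pop("BING_EXTRA_COOKIE", None)
bing_cookies = cookies_from_env({"_U": "BING_U_COOKIE", "extra": "BING_EXTRA_COOKIE"})
```

The result can then be handed over with `set_cookies(".bing", bing_cookies)`.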

Using Browser Automation

For providers requiring browser interaction:

import g4f
from undetected_chromedriver import Chrome, ChromeOptions

# Configure Chrome options
options = ChromeOptions()
options.add_argument("--incognito")

# Initialize Chrome WebDriver
webdriver = Chrome(options=options, headless=True)

try:
    # Use the browser for multiple requests
    for idx in range(5):
        response = g4f.ChatCompletion.create(
            model=g4f.models.default,
            provider=g4f.Provider.MyShell,
            messages=[{"role": "user", "content": f"Give me idea #{idx+1} for a mobile app."}],
            webdriver=webdriver
        )
        print(f"Idea {idx+1}:", response)
finally:
    # Always close the webdriver when done
    webdriver.quit()

Asynchronous Support

For improved performance with multiple requests:

import g4f
import asyncio

# Define providers to use
providers = [
    g4f.Provider.Aichat,
    g4f.Provider.ChatBase,
    g4f.Provider.Bing,
    g4f.Provider.GptGo
]

async def run_provider(provider):
    try:
        response = await g4f.ChatCompletion.create_async(
            model=g4f.models.default,
            messages=[{"role": "user", "content": "What's your name?"}],
            provider=provider,
        )
        print(f"{provider.__name__}:", response)
    except Exception as e:
        print(f"{provider.__name__}:", e)

async def run_all():
    calls = [run_provider(provider) for provider in providers]
    await asyncio.gather(*calls)

# Run all providers asynchronously
asyncio.run(run_all())
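Launching every request at once can overwhelm slow providers, and a single stalled call can hold up the whole batch. A common remedy is to cap concurrency with `asyncio.Semaphore` and bound each call with `asyncio.wait_for`. The sketch below illustrates that pattern with a stand-in coroutine; `gather_limited` and `fake_call` are hypothetical names, and the limits shown are arbitrary.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Iterable


async def gather_limited(
    names: Iterable[str],
    call: Callable[[str], Awaitable[str]],
    max_concurrent: int = 2,
    timeout: float = 5.0,
) -> Dict[str, str]:
    """Run `call(name)` for each name, at most `max_concurrent` at a time."""
    semaphore = asyncio.Semaphore(max_concurrent)
    results: Dict[str, str] = {}

    async def run_one(name: str) -> None:
        async with semaphore:
            try:
                results[name] = await asyncio.wait_for(call(name), timeout)
            except asyncio.TimeoutError:
                results[name] = "error: timed out"
            except Exception as exc:
                results[name] = f"error: {exc}"

    await asyncio.gather(*(run_one(name) for name in names))
    return results


async def fake_call(name: str) -> str:
    """Stand-in for a provider request (hypothetical)."""
    if name == "slow":
        await asyncio.sleep(1)
    return f"hello from {name}"


results = asyncio.run(gather_limited(["fast", "slow"], fake_call, timeout=0.1))
```

In practice, `call` would await `g4f.ChatCompletion.create_async(...)` for the given provider, and failures would be recorded per provider instead of aborting the batch.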

Proxy and Timeout Support

For handling network constraints:

import g4f

# Use with specific proxy and extended timeout
response = g4f.ChatCompletion.create(
    model=g4f.models.default,
    messages=[{"role": "user", "content": "How can I improve my Python code?"}],
    proxy="http://host:port",  # or socks5://user:pass@host:port
    timeout=120  # in seconds
)

print("Result:", response)

You can also set a global proxy via an environment variable:

export G4F_PROXY="http://host:port"
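Proxy URLs that embed credentials break when the username or password contains characters such as `@` or `:`, so those parts should be percent-encoded. The sketch below assembles such a URL and shows one way application code might prefer an explicit proxy over the `G4F_PROXY` variable. `build_proxy_url` and `resolve_proxy` are hypothetical helpers, not part of g4f.

```python
import os
from typing import Optional
from urllib.parse import quote


def build_proxy_url(
    scheme: str,
    host: str,
    port: int,
    user: Optional[str] = None,
    password: Optional[str] = None,
) -> str:
    """Assemble a proxy URL, percent-encoding any credentials."""
    auth = ""
    if user is not None:
        auth = quote(user, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"{scheme}://{auth}{host}:{port}"


def resolve_proxy(explicit: Optional[str] = None) -> Optional[str]:
    """Prefer an explicit proxy, else fall back to the G4F_PROXY variable."""
    return explicit or os.environ.get("G4F_PROXY") or None


proxy = build_proxy_url("socks5", "127.0.0.1", 1080, "user", "p@ss:word")
print(proxy)
```

The resulting string can be passed as the `proxy=` argument shown earlier.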

Running the Web Interface with GPT4Free

GPT4Free includes a web interface for easier interaction:

from g4f.gui import run_gui

run_gui()

Alternatively, use the command line:

# Start the Flask server
python -m g4f.cli gui --port 8080 --debug

# Or start the FastAPI server
python -m g4f --port 8080 --debug

The web interface will be available at http://localhost:8080/chat/

Using the Interference API with GPT4Free

GPT4Free provides an OpenAI-compatible API for integration with other tools:

# Run the API server
from g4f.api import run_api

run_api()

Or via command line:

g4f-api
# or
python -m g4f.api.run

Then connect to it using the OpenAI Python client:

from openai import OpenAI

client = OpenAI(
    # Optional: Set your Hugging Face token for embeddings
    api_key="YOUR_HUGGING_FACE_TOKEN",
    base_url="http://localhost:1337/v1"
)

# Use it like the official OpenAI client
chat_completion = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[{"role": "user", "content": "Write a poem about a tree"}],
    stream=True,
)

for token in chat_completion:
    content = token.choices[0].delta.content
    if content is not None:
        print(content, end="", flush=True)
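Since the server speaks the OpenAI wire format, it can also be reached without the `openai` package. The sketch below builds a plain HTTP request with the standard library, assuming the server exposes the usual `/chat/completions` route under the base URL used above. `build_chat_request` and `send_chat_request` are hypothetical helper names, and the sending function is defined but not called so that the example runs without a live server.

```python
import json
import urllib.request
from typing import Dict, List


def build_chat_request(
    messages: List[Dict[str, str]],
    model: str = "gpt-3.5-turbo",
    base_url: str = "http://localhost:1337/v1",
) -> urllib.request.Request:
    """Build (but do not send) a POST request for the chat completions route."""
    payload = {"model": model, "messages": messages, "stream": False}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 120) -> str:
    """Send the request and return the first choice's text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


request = build_chat_request([{"role": "user", "content": "Write a poem about a tree"}])
print(request.get_method(), request.full_url)
```

With the API server running, `send_chat_request(request)` would perform the call. The same URL and JSON body can be pasted into an API testing tool to experiment with parameters.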

GPT4Free Supported Models & Providers

GPT4Free supports numerous providers with various capabilities. Some providers support GPT-4, others support GPT-3.5, and some support alternative models.

GPT-4 Providers

- Bing (g4f.Provider.Bing)

- GeekGpt (g4f.Provider.GeekGpt)

- GptChatly (g4f.Provider.GptChatly)

- Liaobots (g4f.Provider.Liaobots)

- Raycast (g4f.Provider.Raycast)

GPT-3.5 Providers

- AItianhu (g4f.Provider.AItianhu)

- AItianhuSpace (g4f.Provider.AItianhuSpace)

- AiAsk (g4f.Provider.AiAsk)

- Aichat (g4f.Provider.Aichat)

- ChatBase (g4f.Provider.ChatBase)

- ChatForAi (g4f.Provider.ChatForAi)

- ChatgptAi (g4f.Provider.ChatgptAi)

- And many more...

Other Models

- Bard (Google's PaLM)

- DeepInfra

- HuggingChat

- Llama2

- OpenAssistant

Conclusion

The gpt4free Python library offers an impressive range of capabilities for accessing various language models without requiring official API keys in many cases. By providing a unified interface for multiple providers, it enables developers to experiment with different language models and compare their performance.

While this library is primarily a proof of concept and may have legal implications for production use, it's a valuable tool for learning about AI capabilities, testing small projects, and understanding how different language models work.

Remember to use this library responsibly, respecting the terms of service of the providers and being mindful of the ethical considerations around AI usage. For production applications, it's strongly recommended to use official APIs with proper authentication and licensing.

The library is under active development, so check the GitHub repository and documentation for the latest features, providers, and best practices. As the field of AI continues to evolve rapidly, tools like gpt4free help democratize access to cutting-edge technology, allowing more developers to experiment with and learn from these powerful models.