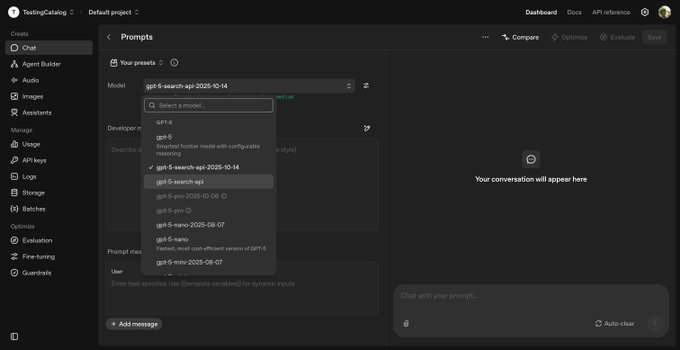

Developers constantly seek ways to integrate advanced AI capabilities into their applications, and OpenAI's latest offerings provide powerful tools for that purpose. The gpt-5-search-api-2026-10-14 and gpt-5-search-api models stand out as specialized variants that embed web search functionality directly into AI responses. These models enable applications to fetch real-time information from the internet, process it intelligently, and deliver cited answers.

OpenAI released these search-enhanced models in October 2026, marking a significant advancement in AI's ability to handle dynamic queries. This release builds on the foundational GPT-5 family, which excels in reasoning, coding, and multimodal tasks. Furthermore, the search APIs address limitations in traditional language models by incorporating live data, making them ideal for applications like news aggregators, research tools, and personalized assistants.

As you explore these models, remember that small configuration changes can noticeably affect response quality and latency. For instance, raising the reasoning effort lets the model search more and produce a more thorough analysis, at the cost of speed. Developers tune these settings to balance speed and depth for each use case.

Understanding the GPT-5 Search API Fundamentals

OpenAI publishes gpt-5-search-api-2026-10-14 as a dated snapshot, so its behavior stays fixed to the October 14, 2026 release. By contrast, gpt-5-search-api is the alias that tracks the latest snapshot and can change as OpenAI ships updates. Pin the dated name in production when you need reproducible behavior. Both models integrate the web search tool, allowing the AI to perform internet searches autonomously during response generation. This integration removes the need for a separate search engine in your stack, because the model handles querying, result parsing, and citation embedding.

The core mechanism relies on the "web_search" tool, which the model invokes when the input prompt requires it. When a query demands current information, such as stock prices, weather updates, or recent events, the model activates the tool, retrieves results from the web, and incorporates them into the output. Web search in the API comes in three modes: non-reasoning search for quick lookups, agentic search in which a reasoning model iterates on its queries, and deep research for exhaustive investigations.
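To make the three modes concrete, here is a minimal sketch that builds a Responses API request body for each one. The mode names ("quick", "agentic", "deep") and the mapping are assumptions drawn from the configurations discussed in this article, not an official OpenAI contract. In that mapping, a quick lookup sends no "reasoning" block, agentic search uses "medium" effort, and deep research uses "high" effort plus background processing.

```python
from typing import Any

# Hypothetical mode names used only in this sketch
MODES = ("quick", "agentic", "deep")


def build_search_request(query: str, mode: str = "quick",
                         model: str = "gpt-5-search-api") -> dict[str, Any]:
    """Return a Responses API request body for the given search mode."""
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode!r}")
    body: dict[str, Any] = {
        "model": model,
        "tools": [{"type": "web_search"}],
        "input": query,
    }
    if mode == "agentic":
        # Let the model reason over results and refine its queries
        body["reasoning"] = {"effort": "medium"}
    elif mode == "deep":
        # Exhaustive runs are slow, so request background processing
        body["reasoning"] = {"effort": "high"}
        body["background"] = True
    return body
```

Keeping the mode-to-parameter mapping in one function means the rest of the application only chooses a mode, and you can adjust effort levels in a single place after benchmarking.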

However, web search is limited to a 128,000-token context window, even when the underlying model supports a larger one. Keep prompts and conversation history compact so that search results are not truncated. The models also enforce rate limits tied to your OpenAI usage tier, so monitor usage to prevent throttling during high-volume operations.
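One way to stay under the 128,000-token limit is to drop the oldest conversation turns before sending a request. The sketch below assumes a rough heuristic of about four characters per token for English text. That figure is only an estimate; a real tokenizer such as the tiktoken library gives exact counts. The default headroom reserved for search results and the answer is also an arbitrary assumption.

```python
CONTEXT_LIMIT = 128_000   # web search context window cited in this article
CHARS_PER_TOKEN = 4       # rough heuristic, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def trim_history(messages: list[str], reserve: int = 32_000,
                 limit: int = CONTEXT_LIMIT) -> list[str]:
    """Keep the most recent messages whose estimated size fits the budget.

    `reserve` leaves headroom for search results and the model's answer.
    """
    budget = limit - reserve
    kept: list[str] = []
    used = 0
    # Walk from newest to oldest so the most recent context survives
    for msg in reversed(messages):
        cost = estimate_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```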

To illustrate, consider a basic scenario where an application needs to answer "What are the latest advancements in quantum computing?" The gpt-5-search-api queries the web, synthesizes results from multiple sources, and returns a summarized response with inline citations. This process happens seamlessly, but understanding the underlying parameters enhances control.

Setting Up Your Environment for GPT-5 Search API

Developers start by creating an OpenAI account and generating an API key through the platform dashboard. Navigate to the API keys section, create a new key, and store it securely in your environment variables. Next, install the OpenAI SDK for your preferred language: Python users run pip install openai, while JavaScript developers run npm install openai.

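Once set up, configure the client with your key. For example, in Python, read the key from the environment variable rather than hard-coding it:

```python
import os

from openai import OpenAI

# OpenAI() also reads OPENAI_API_KEY on its own; passing it makes the source explicit
client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
```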

This initialization prepares the client for API calls. Furthermore, ensure your account has access to GPT-5 models; as of 2026, these require a paid tier, with pricing details available in the OpenAI documentation.

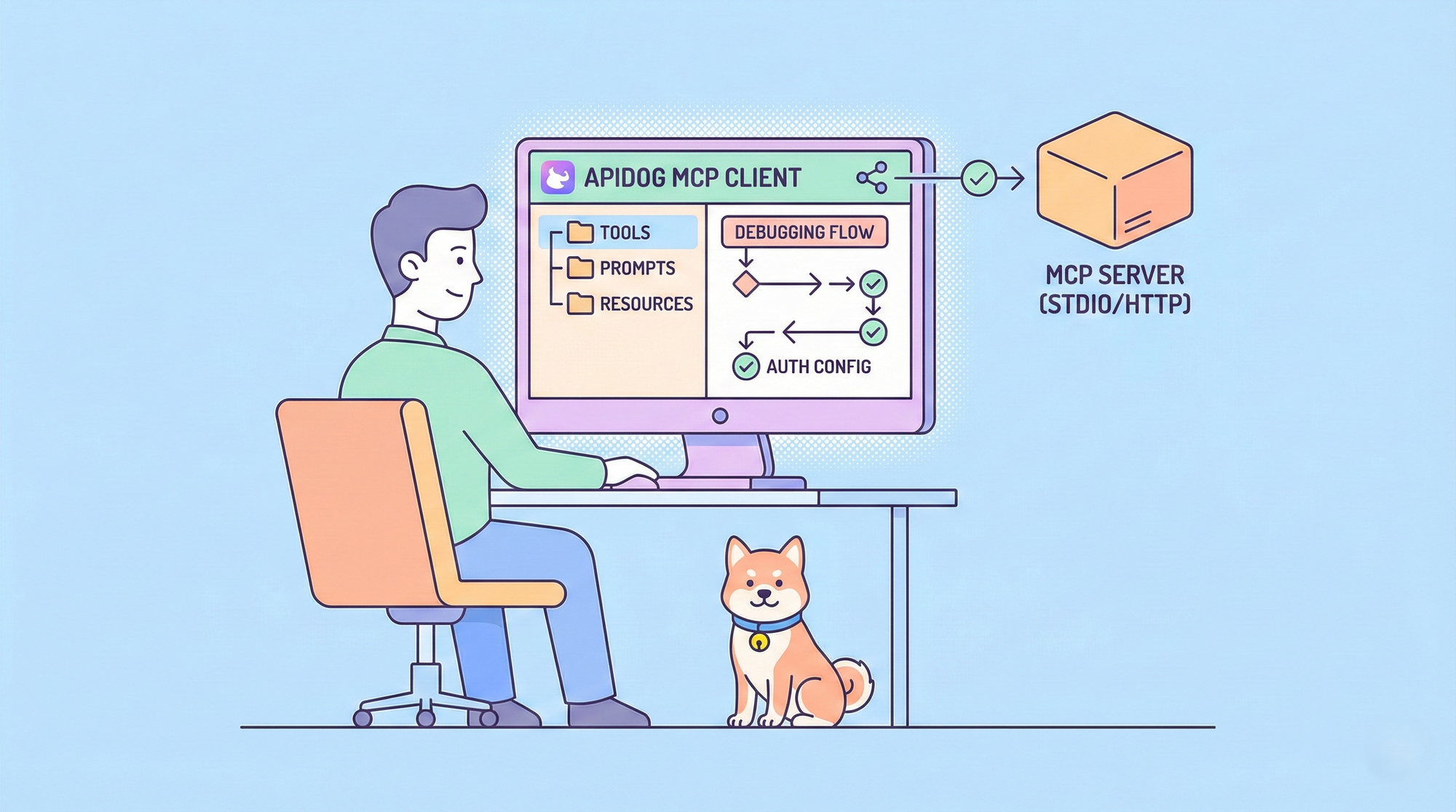

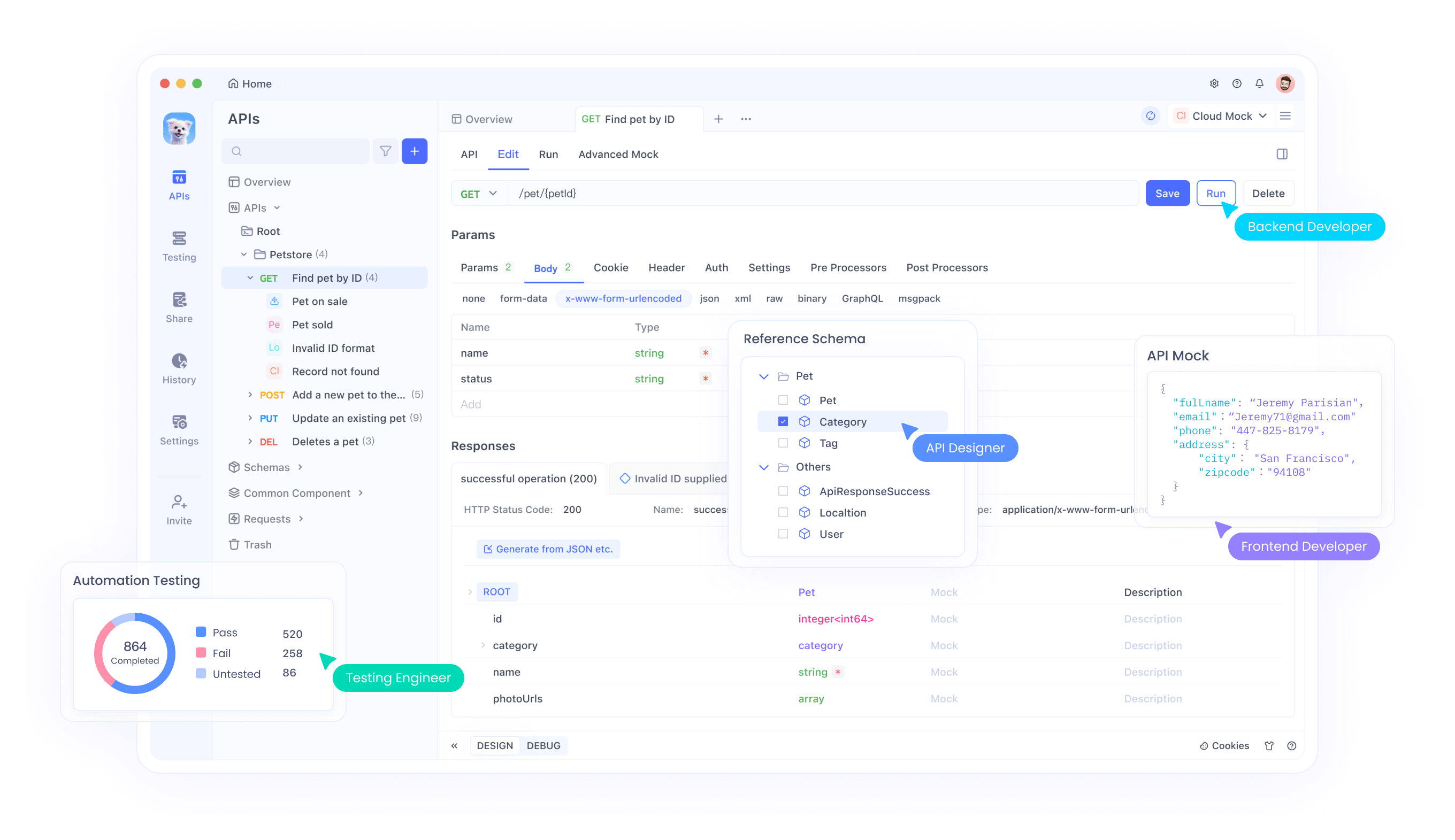

Apidog complements this setup by providing a visual interface for API exploration. After downloading Apidog, import OpenAI's published OpenAPI specification. This creates endpoints for testing, allowing you to simulate requests before writing any code. For instance, set up a POST request to https://api.openai.com/v1/responses and set the model parameter to "gpt-5-search-api-2026-10-14".
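For reference, the request Apidog sends is an ordinary HTTPS POST. This sketch builds the same request with Python's standard library but does not send it. It assumes the https://api.openai.com/v1/responses endpoint and the Bearer-token authentication used by OpenAI's REST API; the helper name build_http_request is hypothetical.

```python
import json
import urllib.request

RESPONSES_URL = "https://api.openai.com/v1/responses"


def build_http_request(api_key: str, query: str,
                       model: str = "gpt-5-search-api-2026-10-14") -> urllib.request.Request:
    """Build (without sending) the POST request an API client would send."""
    body = {
        "model": model,
        "tools": [{"type": "web_search"}],
        "input": query,
    }
    return urllib.request.Request(
        RESPONSES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

# Sending it would require a valid key and network access:
# urllib.request.urlopen(build_http_request(key, "What happened today?"))
```

Comparing this body with the one Apidog shows in its request panel is a quick way to confirm both tools send identical parameters.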

Security considerations play a crucial role here. Always use HTTPS for API calls and rotate keys periodically. Additionally, implement error handling in your code to manage exceptions like rate limit errors or invalid parameters.

Implementing Basic Web Search with GPT-5

Developers implement the search functionality by including the "web_search" tool in the API request. The model then decides whether to use it based on the prompt. For a simple non-reasoning search, structure the call as follows in JavaScript:

```javascript
import OpenAI from "openai";

const client = new OpenAI();

const response = await client.responses.create({
  model: "gpt-5-search-api",
  tools: [{ type: "web_search" }],
  input: "Summarize the top news stories from today.",
});

console.log(response.output_text);
```

This code sends the query, triggers a search if needed, and logs the response. The output includes cited sources, which you display as clickable links in your application UI.

Transitioning to more complex scenarios, agentic search leverages GPT-5's reasoning capabilities. Set the reasoning effort to "medium" for balanced performance:

```javascript
const response = await client.responses.create({
  model: "gpt-5-search-api-2026-10-14",
  reasoning: { effort: "medium" },
  tools: [{ type: "web_search" }],
  input: "Analyze the impact of recent AI regulations on startups.",
});
```

Here, the model iterates over search results, refines queries, and builds a reasoned argument. However, this increases latency, so reserve it for analytical tasks.

Apidog facilitates testing these calls by allowing parameter variations. Create a collection for GPT-5 endpoints, add variables for models like gpt-5-search-api, and run batches to compare outputs. This approach identifies optimal configurations quickly.
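If you prefer to script the same comparison, a parameter matrix is easy to generate. This sketch uses itertools.product to enumerate every combination of model and reasoning effort. The lists are examples only; each resulting row could feed an Apidog data-driven test or a direct API call.

```python
from itertools import product

MODELS = ["gpt-5-search-api", "gpt-5-search-api-2026-10-14"]
EFFORTS = [None, "medium"]  # None means no "reasoning" block (quick lookup)


def build_test_matrix(query: str) -> list[dict]:
    """Return one request body per (model, effort) combination."""
    cases = []
    for model, effort in product(MODELS, EFFORTS):
        body = {"model": model, "tools": [{"type": "web_search"}], "input": query}
        if effort is not None:
            body["reasoning"] = {"effort": effort}
        cases.append(body)
    return cases
```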

Advanced Parameters and Customization

OpenAI provides several parameters to fine-tune the gpt-5-search-api. The "filters" object restricts searches to allowed domains, enhancing reliability:

```json
"tools": [
  {
    "type": "web_search",
    "filters": {
      "allowed_domains": ["nytimes.com", "bbc.com"]
    }
  }
]
```

This limits results to trusted news sites, reducing noise in responses. Additionally, the web_search tool entry accepts a "user_location" object, placed alongside "filters", that customizes results geographically:

```json
"user_location": {
  "type": "approximate",
  "country": "US",
  "city": "New York",
  "region": "New York"
}
```

For location-based queries like "Find nearby events," this ensures relevant data.
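Putting the two options together, the helper below builds one web_search tool entry with both a domain allowlist and an approximate location. It also strips any scheme, path, or trailing slash from the domains. That step assumes the filter expects bare host names such as bbc.com. The function names and the normalization step are illustrative, not part of the OpenAI SDK.

```python
from typing import Any, Optional
from urllib.parse import urlparse


def normalize_domain(value: str) -> str:
    """Reduce input like 'https://www.bbc.com/news' to 'www.bbc.com'."""
    value = value.strip().lower()
    if "://" in value:
        value = urlparse(value).netloc
    return value.split("/")[0]


def build_web_search_tool(allowed_domains: Optional[list[str]] = None,
                          location: Optional[dict[str, str]] = None) -> dict[str, Any]:
    """Build a web_search tool entry with optional domain filters and location."""
    tool: dict[str, Any] = {"type": "web_search"}
    if allowed_domains:
        # Normalize, then deduplicate while preserving order
        domains = list(dict.fromkeys(normalize_domain(d) for d in allowed_domains))
        tool["filters"] = {"allowed_domains": domains}
    if location:
        tool["user_location"] = {"type": "approximate", **location}
    return tool
```

For a research tool, for example, build_web_search_tool(["pubmed.ncbi.nlm.nih.gov"]) yields a tool entry you can place directly in the "tools" list.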

Furthermore, the top-level "include" request parameter retrieves additional metadata, such as the full list of sources each search consulted:

```json
"include": ["web_search_call.action.sources"]
```

This provides transparency beyond inline citations, useful for auditing.
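With that include value set, each web_search_call item in the output should carry its sources under action.sources. The sketch below collects the unique URLs from a response whose output list has been converted to plain dictionaries, for example with the SDK objects' model_dump(). The exact field layout is an assumption based on the include path, so check it against a real response.

```python
from typing import Any


def collect_sources(output: list[dict[str, Any]]) -> list[str]:
    """Return unique source URLs from web_search_call items, in first-seen order."""
    seen: dict[str, None] = {}
    for item in output:
        if item.get("type") != "web_search_call":
            continue
        action = item.get("action") or {}
        for source in action.get("sources") or []:
            url = source.get("url")
            if url:
                seen.setdefault(url, None)
    return list(seen)
```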

For deep research, set the reasoning effort to "high" and, if possible, run the request in background mode so it does not block your application. The model may consult hundreds of sources, which suits comprehensive reports. However, monitor costs, because web search calls are billed in addition to token usage.
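Running a long request asynchronously usually means submitting it with background processing enabled and polling until it finishes. The loop below sketches only that polling logic. fetch_status is a hypothetical callable you would back with the SDK's retrieve call for the response ID. The terminal status names are assumptions to verify against the API reference.

```python
import time
from typing import Callable

# Assumed terminal statuses; anything else means "keep waiting"
TERMINAL = {"completed", "failed", "cancelled", "incomplete"}


def wait_for_completion(fetch_status: Callable[[], str],
                        interval: float = 5.0, timeout: float = 1800.0,
                        sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll fetch_status() until it reports a terminal status or time runs out.

    Elapsed time is approximated as the sum of sleep intervals.
    """
    waited = 0.0
    while True:
        status = fetch_status()
        if status in TERMINAL:
            return status
        if waited >= timeout:
            raise TimeoutError(f"still {status!r} after about {waited:.0f}s")
        sleep(interval)
        waited += interval
```

Injecting sleep keeps the function testable without real delays.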

Apidog excels in parameter experimentation. Use its environment variables to switch between gpt-5-search-api-2026-10-14 and gpt-5-search-api, testing how date-specific snapshots affect results.

Handling Outputs and Citations

The API returns a structured response whose output list contains a "web_search_call" item followed by a "message" item. In the message, read "content" for the text and "annotations" for citations. Developers render these as superscripts or footnotes, linking to the original URLs.

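For example, process the response in Python:

```python
# Walk the response's output list; web_search_call items precede the message
for item in response.output:
    if item.type == "message":
        text = item.content[0].text
        for ann in item.content[0].annotations:
            if ann.type == "url_citation":
                # The cited span is text[ann.start_index:ann.end_index];
                # replace it with a clickable link in your UI
                print(f"Citation: {ann.title} - {ann.url}")
```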

This ensures users access sources easily. Moreover, display full sources from "include" in a dedicated section for enhanced credibility.
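To turn annotations into clickable links, you can wrap each cited span in an anchor tag. This sketch works on plain dictionaries carrying the url_citation fields start_index, end_index, and url. It walks the spans in order, escapes the surrounding text, and skips any span that overlaps an earlier one. Emitting HTML is an assumption about your UI; swap in whatever link markup it uses.

```python
import html
from typing import Any


def link_citations(text: str, annotations: list[dict[str, Any]]) -> str:
    """Return HTML with each url_citation span wrapped in an <a> element."""
    cites = sorted((a for a in annotations if a.get("type") == "url_citation"),
                   key=lambda a: a["start_index"])
    parts: list[str] = []
    pos = 0
    for ann in cites:
        start, end = ann["start_index"], ann["end_index"]
        if start < pos:  # overlapping span; keep the earlier one
            continue
        parts.append(html.escape(text[pos:start]))
        href = html.escape(ann["url"], quote=True)
        parts.append(f'<a href="{href}">{html.escape(text[start:end])}</a>')
        pos = end
    parts.append(html.escape(text[pos:]))
    return "".join(parts)
```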

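Common pitfalls include ignoring citation visibility requirements: OpenAI requires inline citations to be clearly visible and clickable in your UI. Additionally, handle cases where no search occurs. In that case the output contains no "web_search_call" item, so check for one before rendering source lists.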
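A simple way to detect whether a search ran is to look for a web_search_call item in the output list. This sketch assumes output items converted to dictionaries with "type" and "status" fields. A response answered purely from the model's own knowledge would contain only a message item.

```python
from typing import Any


def search_calls(output: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return the web_search_call items from a response's output list."""
    return [item for item in output if item.get("type") == "web_search_call"]


def search_was_used(output: list[dict[str, Any]]) -> bool:
    """True if at least one web search call completed."""
    return any(call.get("status") == "completed" for call in search_calls(output))
```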

Integrating GPT-5 Search API with Apidog

Apidog streamlines integration by offering features like API mocking and automation. First, create a new project in Apidog and import the OpenAI spec. Then, define endpoints for /responses and /chat/completions, setting the model to gpt-5-search-api.

Test searches by sending prompts and inspecting responses. Apidog's assertion tools verify citation presence and response format. For instance, assert that "annotations" contains at least one "url_citation".
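The same check can also run outside Apidog, for example in a pytest suite. This sketch mirrors that assertion on output items converted to dictionaries. It requires at least one message item whose content carries a url_citation annotation. The helper names are hypothetical.

```python
from typing import Any


def count_url_citations(output: list[dict[str, Any]]) -> int:
    """Count url_citation annotations across all message content parts."""
    total = 0
    for item in output:
        if item.get("type") != "message":
            continue
        for part in item.get("content", []):
            total += sum(1 for ann in part.get("annotations", [])
                         if ann.get("type") == "url_citation")
    return total


def assert_has_citation(output: list[dict[str, Any]]) -> None:
    """Fail loudly when a search answer comes back without citations."""
    # Raise explicitly so the check still runs under python -O
    if count_url_citations(output) < 1:
        raise AssertionError("expected at least one url_citation annotation")
```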

Furthermore, use Apidog's CI/CD integration to automate tests in pipelines. This ensures gpt-5-search-api-2026-10-14 behaves consistently across deployments.

In advanced workflows, combine the live API with Apidog's mocking features: generate mock search responses to test offline, then switch to the live API for production.

Best Practices for Optimal Performance

Developers optimize prompts to guide search invocation effectively. Use clear instructions like "Search the web for current data on X and analyze it." This triggers the tool reliably.

Monitor latency—non-reasoning searches complete in seconds, while deep research takes minutes. Choose modes based on application needs.

Additionally, respect rate limits; higher usage tiers, up to tier 5, allow more throughput for gpt-5-search-api. Implement exponential backoff when retrying requests that hit rate limits or transient server errors.
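A minimal sketch of exponential backoff with jitter follows. It assumes your API call raises an exception carrying an HTTP status code. ApiError and the retryable set (429 and common 5xx codes) are illustrative choices, not the OpenAI SDK's exception hierarchy; you would map the SDK's own error classes onto this pattern.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

RETRYABLE_STATUS = {429, 500, 502, 503, 504}


class ApiError(Exception):
    """Hypothetical error type carrying an HTTP status code."""

    def __init__(self, status: int, message: str = "") -> None:
        super().__init__(message or f"HTTP {status}")
        self.status = status


def with_backoff(call: Callable[[], T], max_attempts: int = 5,
                 base_delay: float = 1.0, max_delay: float = 60.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run call(), retrying retryable errors with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return call()
        except ApiError as err:
            last_try = attempt == max_attempts - 1
            if err.status not in RETRYABLE_STATUS or last_try:
                raise
            # Ceiling doubles each attempt (1, 2, 4, ...) up to max_delay;
            # "full jitter" picks a random wait below it to spread out retries
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
    # Only reached when max_attempts < 1
    raise ValueError("max_attempts must be at least 1")
```

Non-retryable errors such as 400 or 401 are re-raised immediately, since repeating an invalid request only wastes quota.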

Security best practices include validating user inputs to prevent prompt injection and filtering sensitive domains.

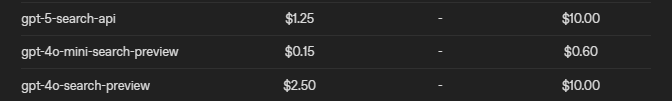

Finally, benchmark against other models. Compare gpt-5-search-api with gpt-4o-search-preview for cost-efficiency.

Real-World Examples and Case Studies

Consider a news bot application. Developers use gpt-5-search-api to fetch and summarize articles:

```javascript
const response = await client.responses.create({
  model: "gpt-5-search-api-2026-10-14",
  tools: [{ type: "web_search" }],
  input: "Provide a summary of today's top tech news with sources.",
});
```

The output includes cited summaries, enhancing user trust.

In local commerce and recommendation apps, personalize suggestions with location-aware searches, such as "Recommend restaurants in my area based on reviews."

Apidog aids in prototyping these by simulating responses and testing edge cases.

Another example involves research tools. For academic queries, deep research mode synthesizes papers: set reasoning to "high" and include domain filters for sites like pubmed.ncbi.nlm.nih.gov.

However, test for biases in search results and cross-verify citations.

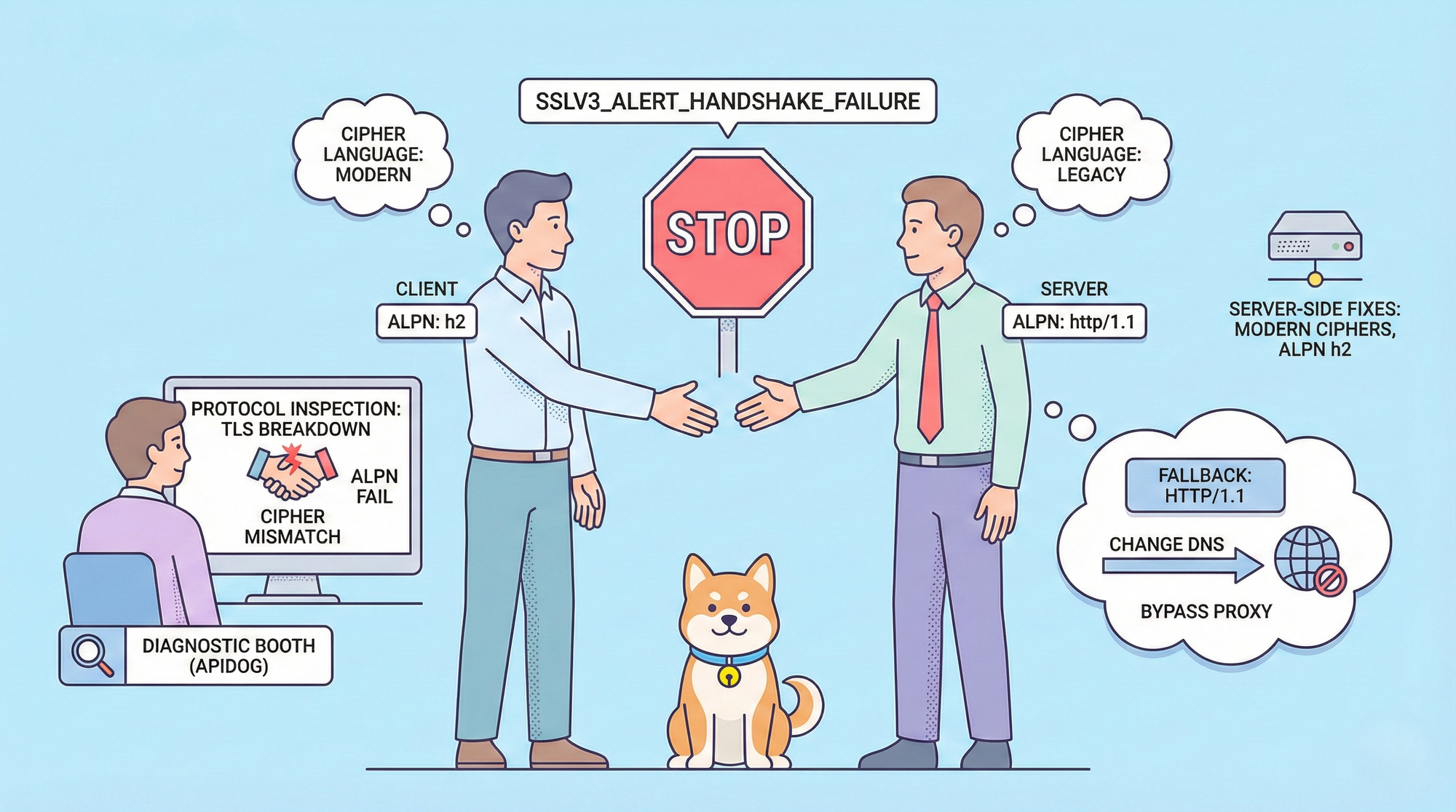

Troubleshooting Common Issues

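If searches fail to trigger, refine prompts to explicitly require external data, or use the "tool_choice" request parameter to require a tool call. Then inspect the response output to confirm that a "web_search_call" item appears.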

Deep research requests can run long enough to time out; use background mode or narrow the query's scope.

Apidog helps debug by capturing requests and responses, highlighting errors like invalid keys.

Community forums discuss issues like API/UI discrepancies in web search availability.

Future Prospects and Updates

OpenAI continues evolving the gpt-5-search-api family, with potential integrations like multimodal search. Stay updated via the platform docs.

As AI advances, these models pave the way for more autonomous applications.

In summary, mastering the gpt-5-search-api-2026-10-14 and gpt-5-search-api requires understanding their mechanics, careful configuration, and tools like Apidog. By following these steps, developers build robust, information-rich AI systems.