Developers building AI-driven applications need models that handle complex reasoning, and GPT-5 Pro stands out as a premier choice for that work. It spends more compute on each request to produce precise answers, which makes it a good fit for tasks that demand accuracy and depth. As you plan an integration, consider tools that streamline API testing and management.

This article examines two ways to access the model: directly through OpenAI and through OpenRouter. Each has its own advantages, from direct integration to cost-effective routing. It covers prerequisites, authentication, endpoints, and code examples, and compares pricing on both platforms so you can make an informed choice. By the end, you will understand how small setup choices affect cost and performance.

Understanding GPT-5 Pro: Key Features and Capabilities

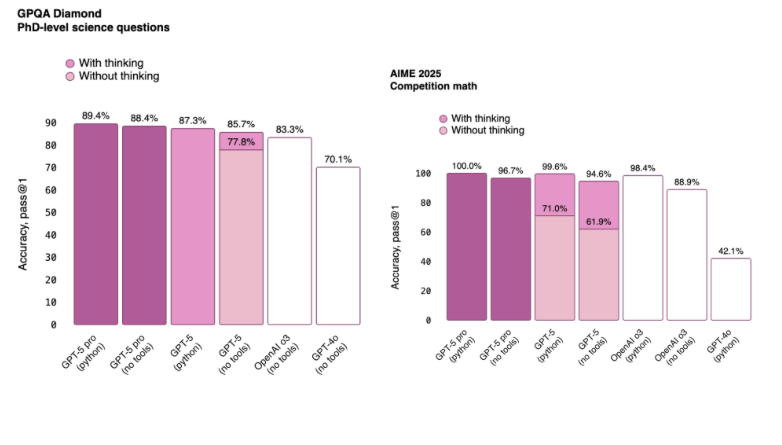

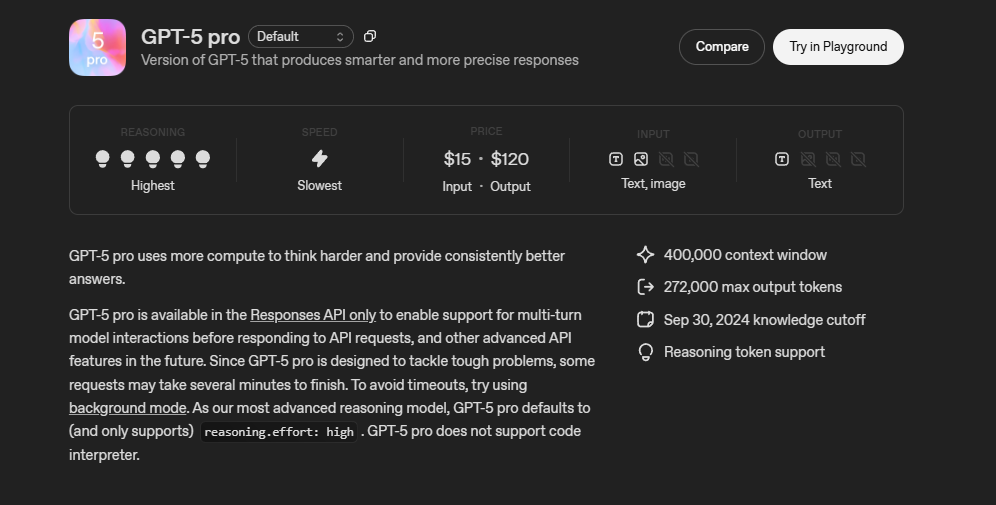

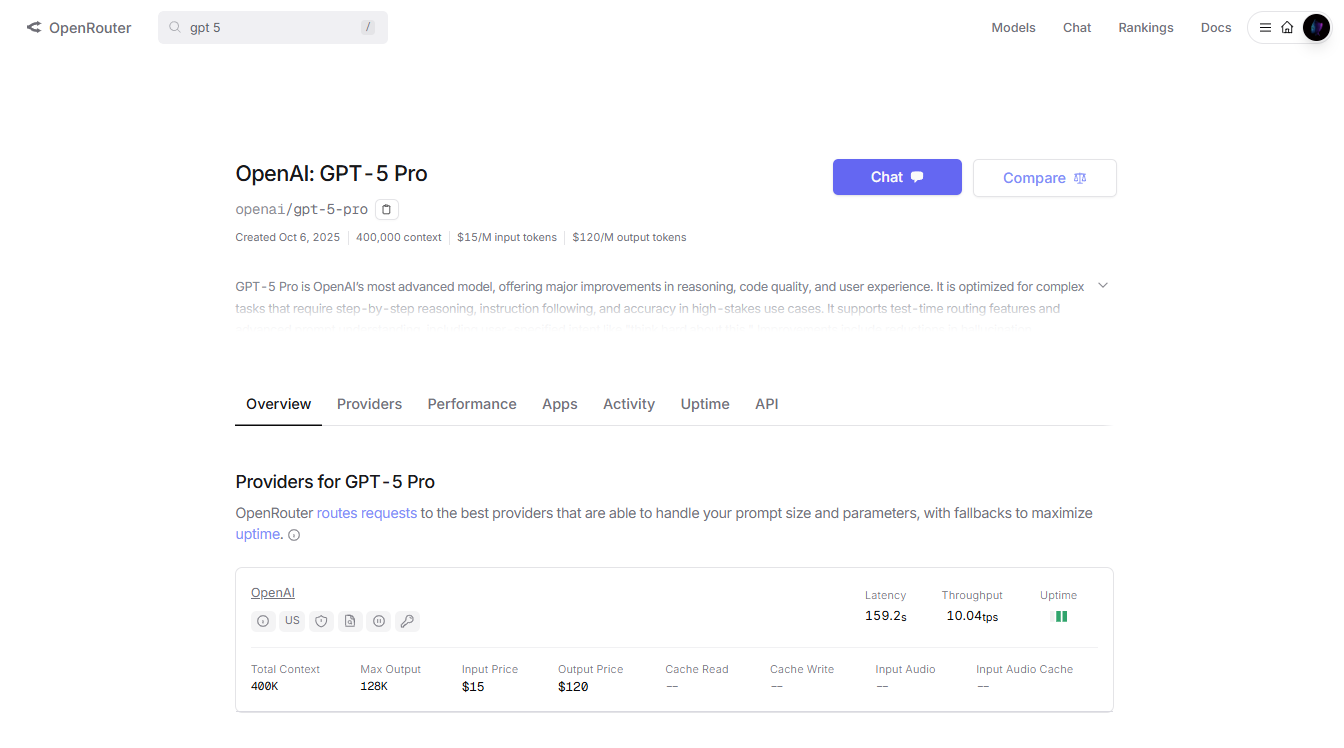

OpenAI designed GPT-5 Pro for demanding applications. It applies more reasoning effort than its predecessors: it defaults to, and only supports, the high reasoning effort setting, which keeps output quality consistent. It also has a 400,000-token context window for processing long inputs and can generate up to 272,000 output tokens per response.
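As a quick arithmetic check on these limits, the sketch below assumes that input and output tokens share the 400,000-token window, so reserving the full 272,000-token output budget leaves 128,000 tokens for the prompt. The function names are illustrative helpers, not part of any SDK.

```python
# Published limits for GPT-5 Pro, as stated above.
CONTEXT_WINDOW = 400_000
MAX_OUTPUT_TOKENS = 272_000


def fits_context(input_tokens: int, max_output_tokens: int) -> bool:
    """True if the prompt plus the reserved output budget fits the window.

    Assumes input and output tokens share the same 400,000-token window.
    """
    if max_output_tokens > MAX_OUTPUT_TOKENS:
        return False
    return input_tokens + max_output_tokens <= CONTEXT_WINDOW


def max_input_for(max_output_tokens: int) -> int:
    """Largest prompt that still leaves room for the requested output budget."""
    return CONTEXT_WINDOW - min(max_output_tokens, MAX_OUTPUT_TOKENS)
```

For example, `max_input_for(272_000)` gives 128,000, so a 150,000-token prompt only fits if you request a smaller output budget.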

Next, consider the modalities. GPT-5 Pro accepts text and image inputs and produces text output; it does not support audio or video. It supports function calling and structured outputs, which make it easier to program against. Streaming, fine-tuning, and the Code Interpreter tool, however, are not available.

The model's knowledge cutoff is September 30, 2024, so applications that need current information must supply it. Built-in tools such as web search help fill that gap. Together, these attributes make GPT-5 Pro a strong choice for technical work ranging from code generation to analytical reasoning.

Prerequisites for Accessing the GPT-5 Pro API

Before integrating GPT-5 Pro, prepare a few essentials. First, create an account on your chosen platform. For OpenAI, sign up on the developer portal.

Similarly, OpenRouter requires registration to generate API keys.

Next, if you use OpenAI, verify your organization, since some models require this step. Developers often overlook it and then run into access errors. Also review the usage policies and the rate limits that apply to your usage tier.

You also need working knowledge of a language such as Python or JavaScript to make API calls. Install the relevant library, such as the OpenAI SDK, to simplify requests, and check that your version supports the Responses API, which is how OpenAI serves GPT-5 Pro.

Finally, budget for costs, since pricing is per token, and track usage to avoid surprises. With these prerequisites in place, you have a solid foundation for API access.

Accessing GPT-5 Pro via the OpenAI Platform

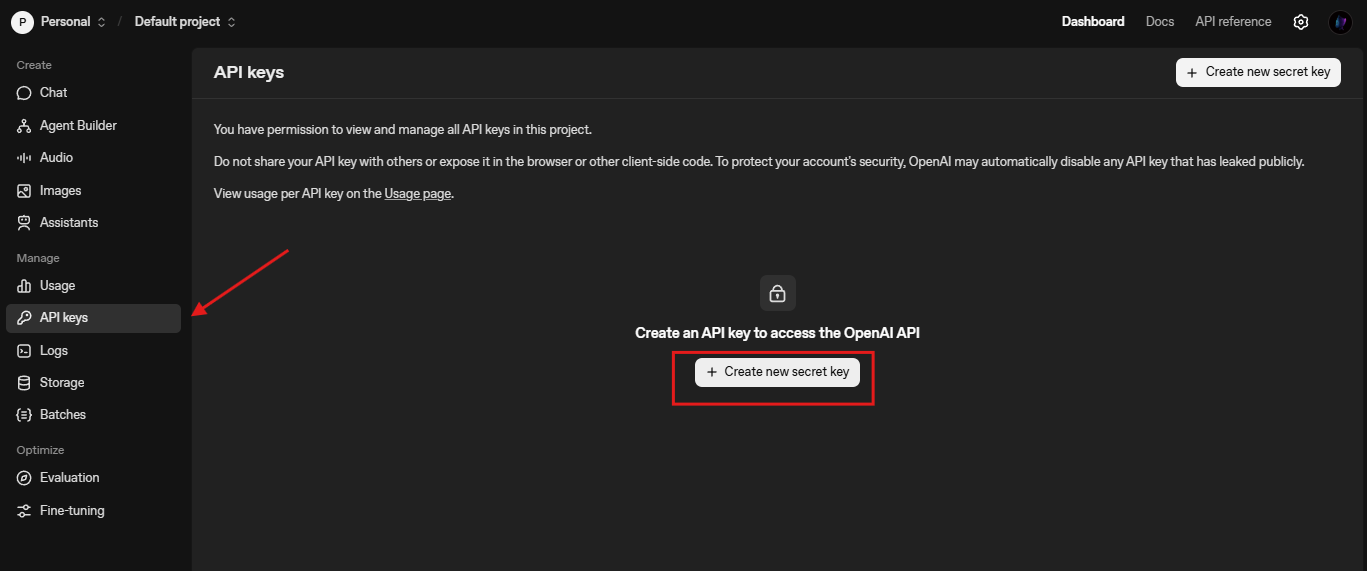

OpenAI provides direct access to GPT-5 Pro, enabling seamless integration. You begin by obtaining an API key from the platform's dashboard. Navigate to the API keys section, generate a new key, and store it securely.

Next, authenticate each request by sending this key in the Authorization header, for example "Authorization: Bearer YOUR_API_KEY". This prevents unauthorized use of your account.
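A minimal sketch of header construction for raw HTTP calls, assuming the key is stored in the `OPENAI_API_KEY` environment variable (the variable the official SDK reads by default) rather than hard-coded. The helper name `auth_headers` is hypothetical.

```python
import os


def auth_headers(env_var: str = "OPENAI_API_KEY") -> dict[str, str]:
    """Build JSON request headers, reading the API key from the environment."""
    api_key = os.environ.get(env_var)
    if not api_key:
        # Fail fast instead of sending an unauthenticated request.
        raise RuntimeError(f"Set {env_var} before calling the API")
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
```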

Now, explore the available endpoints. GPT-5 Pro is served through the Responses API at /v1/responses, which supports multi-turn interactions, and through the Batch API for asynchronous jobs. Unlike many OpenAI models, it is not available on /v1/chat/completions; endpoints such as /v1/chat/completions and /v1/embeddings serve other models.

For instance, to make a basic call, send a POST request with model set to "gpt-5-pro" and your prompt in the input field. The API returns a structured response object. Complex queries can take several minutes, so use background mode and poll for the result instead of holding a connection open until it times out.
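Background mode can be sketched as follows, assuming the official `openai` Python SDK: create the response with `background=True`, then poll `client.responses.retrieve` until its status leaves `"queued"` or `"in_progress"`. The `wait_for_response` helper, its polling interval, and its timeout are illustrative choices, not SDK features. The client is passed in, so any object with the same shape works, including a test double.

```python
import time


def wait_for_response(client, response_id: str, poll_seconds: float = 5.0,
                      timeout_seconds: float = 1800.0, sleep=time.sleep):
    """Poll a background response until it is no longer queued or in progress."""
    waited = 0.0
    while True:
        resp = client.responses.retrieve(response_id)
        if resp.status not in ("queued", "in_progress"):
            return resp  # completed, failed, cancelled, or incomplete
        if waited >= timeout_seconds:
            raise TimeoutError(f"{response_id} still {resp.status} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Usage with the official SDK (makes real API calls, so it is left commented out):
# from openai import OpenAI
# client = OpenAI()
# job = client.responses.create(model="gpt-5-pro", input="Explain quantum computing.",
#                               background=True)
# final = wait_for_response(client, job.id)
# print(final.status, final.output_text)
```

Injecting `sleep` keeps the helper testable without real delays; in production you might also switch to webhooks instead of polling.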

You can also enable built-in tools such as web search or file search by listing them in the request's tools array, which extends what the model can draw on. These steps cover what you need to use GPT-5 Pro effectively on OpenAI.
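A minimal sketch of a Responses API request body with built-in tools enabled. The tool type names `"web_search"` and `"file_search"` follow OpenAI's Responses API documentation at the time of writing; confirm tool support for GPT-5 Pro on its model page. The vector store ID in the usage note is a hypothetical placeholder, and `build_request` is an illustrative helper.

```python
from typing import List, Optional


def build_request(prompt: str, use_web_search: bool = False,
                  vector_store_ids: Optional[List[str]] = None) -> dict:
    """Responses API request body for GPT-5 Pro with optional built-in tools."""
    tools = []
    if use_web_search:
        tools.append({"type": "web_search"})
    if vector_store_ids:
        # File search needs the IDs of vector stores you have already created.
        tools.append({"type": "file_search", "vector_store_ids": vector_store_ids})
    body = {"model": "gpt-5-pro", "input": prompt}
    if tools:
        body["tools"] = tools
    return body
```

With the SDK, pass the body as keyword arguments, for example `client.responses.create(**build_request("Summarize this week's AI news.", use_web_search=True))`, or `vector_store_ids=["vs_example"]` for file search.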

Code Examples for OpenAI Integration

You implement GPT-5 Pro with straightforward code. Start with Python and the OpenAI library. Install it via pip: pip install openai.

Then, initialize the client: from openai import OpenAI; client = OpenAI(api_key="YOUR_API_KEY").

To generate a response, call the Responses API, since GPT-5 Pro is not available through Chat Completions: response = client.responses.create(model="gpt-5-pro", input="Explain quantum computing.").

Print the output: print(response.output_text).

This example demonstrates basic usage. You can also set the reasoning effort explicitly with reasoning={"effort": "high"}, although high is already the default (and only supported) setting for GPT-5 Pro.

Furthermore, handle errors by wrapping calls in try-except blocks. Log rate limit headers to monitor usage.
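A try-except block with exponential backoff can be sketched as below. The helper is library-agnostic: with the OpenAI SDK you would pass exception classes such as `openai.RateLimitError`, `openai.APIConnectionError`, and `openai.APITimeoutError` as `retryable`. The SDK client also retries some failures on its own (configurable through its `max_retries` option), so treat this as an extra layer under your own assumptions, not a required pattern.

```python
import random
import time


def call_with_retries(fn, retryable=(Exception,), max_attempts: int = 4,
                      base_delay: float = 1.0, sleep=time.sleep):
    """Call fn(); on a retryable error, back off exponentially and try again."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retryable:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Delays of 1s, 2s, 4s, ... plus up to 1s of random jitter.
            sleep(base_delay * 2 ** (attempt - 1) + random.random())
```

Usage: `call_with_retries(lambda: client.responses.create(model="gpt-5-pro", input="..."), retryable=(openai.RateLimitError, openai.APIConnectionError))`. Jitter spreads retries from many clients so they do not all hit the API at once.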

In JavaScript, use the fetch API against the Responses endpoint: fetch("https://api.openai.com/v1/responses", {method: "POST", headers: {"Authorization": "Bearer YOUR_API_KEY", "Content-Type": "application/json"}, body: JSON.stringify({model: "gpt-5-pro", input: "Solve this math problem."})}).then(res => res.json()).then(data => console.log(data.output.filter(item => item.type === "message").flatMap(item => item.content).filter(part => part.type === "output_text").map(part => part.text).join(""))).
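When you call /v1/responses over plain HTTP (with fetch, urllib, or similar), the JSON payload does not include the SDK's `output_text` convenience property. The text sits inside `message` items in the `output` array, next to items such as reasoning summaries. A minimal Python sketch of the extraction; `extract_output_text` is a hypothetical helper name.

```python
def extract_output_text(payload: dict) -> str:
    """Concatenate the text parts of all message items in a Responses API payload."""
    parts = []
    for item in payload.get("output", []):
        if item.get("type") != "message":
            continue  # skip reasoning, tool-call, and other item types
        for content in item.get("content", []):
            if content.get("type") == "output_text":
                parts.append(content.get("text", ""))
    return "".join(parts)
```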

These snippets illustrate practical integration, allowing quick prototyping.

Pricing Details on OpenAI for GPT-5 Pro

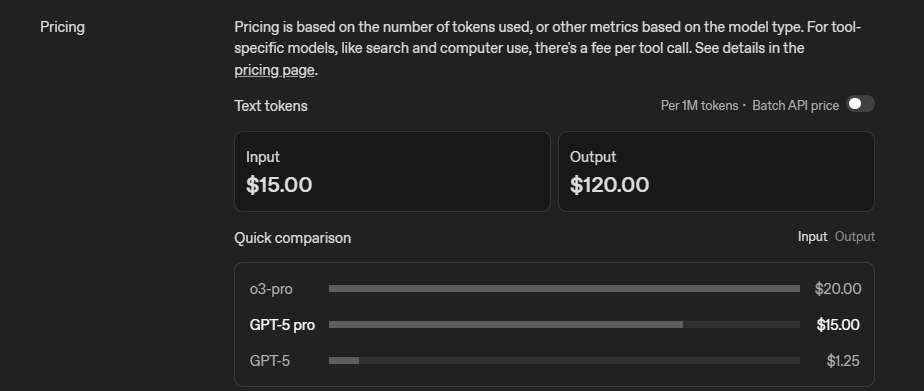

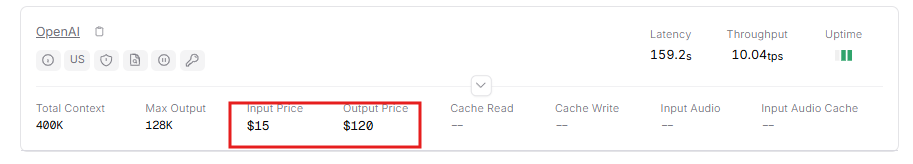

OpenAI prices GPT-5 Pro per million tokens: input costs $15 per million and output costs $120 per million. Reasoning tokens are billed as output tokens, so long reasoning chains add to the output bill. The Batch API processes jobs asynchronously at discounted rates; check the pricing page for current figures.
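The per-token arithmetic can be sketched as a small estimator using the list prices above. The prices are hard-coded assumptions that will drift over time, and `output_tokens` should include reasoning tokens, since those are billed as output.

```python
# GPT-5 Pro list prices quoted above, in USD per million tokens.
INPUT_PRICE_PER_M = 15.00
OUTPUT_PRICE_PER_M = 120.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request; output_tokens must include reasoning tokens."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000
```

For example, a request with 10,000 input tokens and 5,000 output tokens costs about $0.15 + $0.60 = $0.75, which shows how output dominates the bill at these rates.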

For comparison, standard GPT-5 input costs $1.25 per million tokens, which highlights Pro's premium positioning. Built-in tool calls, such as web search, are billed separately based on usage.

Rate limits depend on your usage tier. Tier 1 allows 500 requests per minute (RPM) and 30,000 tokens per minute (TPM), while Tier 5 supports 10,000 RPM and 30 million TPM, which suits enterprise workloads.
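Because GPT-5 Pro requests tend to be large, the token limit usually binds before the request limit. The sketch below assumes that every token of a request counts toward TPM and uses a hypothetical workload of 15,000 tokens per request; the tier figures are the ones quoted above.

```python
def max_requests_per_minute(rpm_limit: int, tpm_limit: int,
                            tokens_per_request: int) -> int:
    """Requests per minute permitted by whichever limit (RPM or TPM) binds first."""
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    return min(rpm_limit, tpm_limit // tokens_per_request)


# Tier figures quoted above; 15,000 tokens per request is a hypothetical workload.
TIER_1 = {"rpm": 500, "tpm": 30_000}
TIER_5 = {"rpm": 10_000, "tpm": 30_000_000}
```

At Tier 1, `max_requests_per_minute(TIER_1["rpm"], TIER_1["tpm"], 15_000)` is 2, far below the 500 RPM ceiling; at Tier 5 the same workload allows 2,000 requests per minute.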

Keep prompts concise to minimize tokens, and monitor the usage dashboard to track spending. This keeps access to GPT-5 Pro cost-effective.

Advantages of Using OpenRouter for GPT-5 Pro Access

OpenRouter simplifies access by routing requests across providers, which improves reliability. Its unified API is compatible with the OpenAI SDK, so little code needs to change.

It also offers fallbacks that keep requests flowing when a provider is overloaded or unavailable, which is valuable in production environments.

OpenRouter also runs occasional promotions, such as temporary 50% discounts on some GPT-5 variants, which lower the cost of experimentation.

The platform aggregates over 400 models, so you can switch models by changing a single string rather than rewriting code. For GPT-5 Pro, specify "openai/gpt-5-pro" as the model.

OpenRouter also supports bring-your-own-key (BYOK): you supply your own provider key and pay a 5% fee once you exceed the free monthly request allowance. This flexibility appeals to cost-conscious users.

Overall, OpenRouter enhances accessibility, making GPT-5 Pro more approachable.

Setting Up Access on OpenRouter

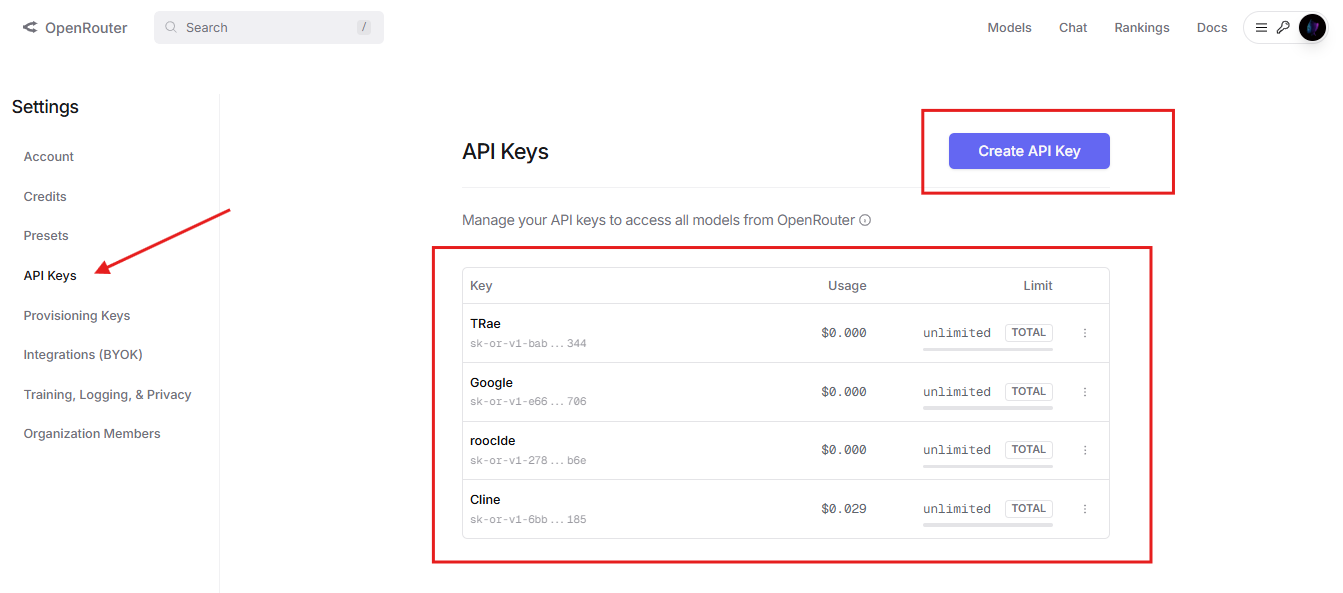

Start by creating an OpenRouter account and adding credits on the credits page; usage is deducted from this balance.

Next, generate an API key from the dashboard. Because the API is OpenAI-compatible, you use this key wherever you would otherwise use an OpenAI key.

Authenticate similarly: include "Authorization: Bearer YOUR_OPENROUTER_KEY" in headers.

Optionally, add the "HTTP-Referer" and "X-Title" headers to attribute requests to your app. These headers do not affect routing; OpenRouter uses them for its app rankings.
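A minimal sketch of an OpenRouter client configuration that sets the attribution headers once for every request. It assumes your key is stored in an `OPENROUTER_API_KEY` environment variable (a naming choice, not a requirement) and returns keyword arguments for the OpenAI SDK's `OpenAI(...)` constructor, whose `default_headers` option adds headers to all calls. The helper name is hypothetical.

```python
import os


def openrouter_client_kwargs(app_url: str = "", app_title: str = "") -> dict:
    """Keyword arguments for OpenAI(...) pointed at OpenRouter, with optional attribution."""
    headers = {}
    if app_url:
        headers["HTTP-Referer"] = app_url  # your site URL, used for app rankings
    if app_title:
        headers["X-Title"] = app_title  # display name for your app
    return {
        "base_url": "https://openrouter.ai/api/v1",
        "api_key": os.environ["OPENROUTER_API_KEY"],
        "default_headers": headers,
    }
```

Usage: `client = OpenAI(**openrouter_client_kwargs("https://example.com", "My App"))`.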

You can also append a variant suffix to the model name: ":floor" prefers the lowest-priced provider and ":nitro" prefers the highest-throughput one, as in "openai/gpt-5-pro:floor".

By following these steps, you configure OpenRouter efficiently for GPT-5 Pro.

Code Examples for OpenRouter Integration

OpenRouter mirrors OpenAI's API, so code resembles previous examples. In Python: from openai import OpenAI; client = OpenAI(base_url="https://openrouter.ai/api/v1", api_key="YOUR_OPENROUTER_KEY").

Then: response = client.chat.completions.create(model="openai/gpt-5-pro", messages=[{"role": "user", "content": "Generate code for a web app."}]).

Read the reply from response.choices[0].message.content, as with any Chat Completions call.

To enable fallbacks, add a models array (or provider routing preferences) to the request body rather than to the headers; with the OpenAI SDK, pass these through the extra_body argument.
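A sketch of an OpenRouter request body with model fallbacks, assuming OpenRouter's `models` parameter, which lists models to try in order if the primary one fails. Check OpenRouter's model-routing documentation for current semantics. The fallback slug in the usage note is an example choice, and `fallback_request` is a hypothetical helper.

```python
def fallback_request(prompt: str, fallbacks: list) -> dict:
    """Chat Completions body for OpenRouter with an ordered list of fallback models."""
    primary = "openai/gpt-5-pro"
    return {
        "model": primary,
        # OpenRouter tries these in order if the primary model returns an error.
        "models": [primary, *fallbacks],
        "messages": [{"role": "user", "content": prompt}],
    }
```

With the OpenAI SDK pointed at OpenRouter: `body = fallback_request("Generate code for a web app.", ["openai/gpt-5"])`, then `client.chat.completions.create(model=body["model"], messages=body["messages"], extra_body={"models": body["models"]})`.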

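In JavaScript, send a Chat Completions request with fetch to "https://openrouter.ai/api/v1/chat/completions", using your OpenRouter key and the model "openai/gpt-5-pro", and read data.choices[0].message.content.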

These adaptations ensure smooth transitions between platforms.

Pricing Details on OpenRouter for GPT-5 Pro

OpenRouter's pricing varies by model and provider. For GPT-5 Pro, it generally matches OpenAI's list rates of $15 per million input tokens and $120 per million output tokens, although promotions can lower costs temporarily.

Some models have free variants, but GPT-5 Pro requires credits. With BYOK, the 5% fee applies only after the first 1 million requests each month.

Compared with OpenAI, OpenRouter's routing can pick the cheapest available provider for models that several providers serve, which may save money.

Check the per-token prices on OpenRouter's model pages; this transparency helps with budget planning.

Comparing OpenAI and OpenRouter for GPT-5 Pro Access

OpenAI offers direct control, which suits dedicated integrations. For example, you can pin a dated model snapshot to lock the model version.

Conversely, OpenRouter provides broader model variety and automatic fallbacks, enhancing reliability.

On pricing, OpenAI sets the baseline rates, while OpenRouter adds occasional discounts and price-based routing.

For scalability, OpenAI's tiers suit growing needs, but OpenRouter's unified interface accelerates development.

Choose based on priorities: precision with OpenAI or flexibility with OpenRouter.

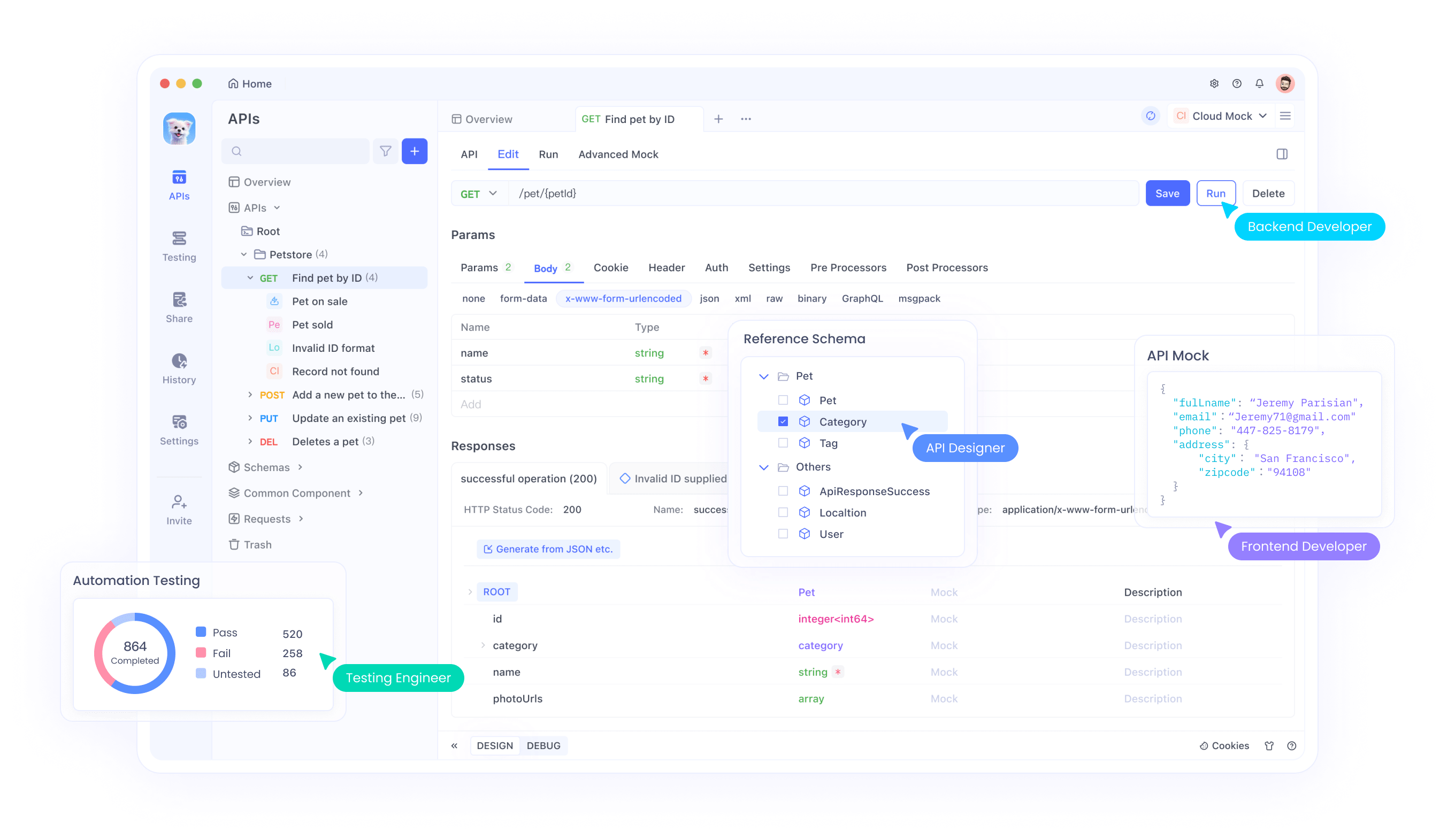

Leveraging Apidog for GPT-5 Pro API Testing

Apidog streamlines API work with GPT-5 Pro. You can create collections to organize requests by endpoint.

Import OpenAPI specs to auto-generate tests. Send calls to /v1/responses and inspect responses.

Mock servers simulate API behavior, which helps with offline development.

Debug issues with detailed logs. Apidog's collaboration features support team workflows.

Download Apidog for free to elevate your API management.

Best Practices for GPT-5 Pro API Usage

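Keep prompts concise to cut costs. Because GPT-5 Pro always runs at high reasoning effort, reserve it for tasks that need that depth and send simpler requests to cheaper models such as GPT-5.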

Implement retries with backoff for transient errors, and monitor metrics on the usage dashboard.

Store keys in environment variables rather than in code, and use the Batch API for non-urgent workloads.
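Batching non-urgent work starts with a JSONL input file containing one request per line. The sketch below assumes your account's Batch API accepts the /v1/responses endpoint (check the Batch API documentation), and the `request-N` custom IDs are placeholders you would replace with your own identifiers.

```python
import json


def batch_jsonl(prompts: list) -> str:
    """Build a Batch API input file: one JSON request object per line."""
    lines = []
    for i, prompt in enumerate(prompts):
        lines.append(json.dumps({
            "custom_id": f"request-{i}",  # lets you match results to inputs
            "method": "POST",
            "url": "/v1/responses",
            "body": {"model": "gpt-5-pro", "input": prompt},
        }))
    return "\n".join(lines) + "\n"
```

To submit, write the string to a file, upload it with `client.files.create(file=open("batch.jsonl", "rb"), purpose="batch")`, and start the job with `client.batches.create(input_file_id=uploaded.id, endpoint="/v1/responses", completion_window="24h")`.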

Test on both platforms to see which better fits your workload.

These practices maximize efficiency.

Potential Challenges and Solutions

Complex queries can time out; run them in background mode and poll for the result.

Rate limits can constrain throughput; your usage tier, and with it your limits, rises as your cumulative spending grows.

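Unexpected charges often come from overlooked tokens, especially reasoning tokens, which are billed as output. Estimate token counts and costs before sending large requests.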
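A rough pre-flight budget check can be sketched with the common rule of thumb of about 4 characters per token for English text. This heuristic is an approximation; a tokenizer library such as tiktoken gives more precise counts. The expected output size must be your own guess, including reasoning tokens, and the default prices are the GPT-5 Pro list rates quoted earlier.

```python
import math

# Rule of thumb: roughly 4 characters per token for English text.
CHARS_PER_TOKEN = 4.0


def rough_token_estimate(text: str) -> int:
    """Crude token count for a prompt, rounded up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def within_budget(prompt: str, expected_output_tokens: int, max_usd: float,
                  input_price_per_m: float = 15.0,
                  output_price_per_m: float = 120.0) -> bool:
    """True if the estimated request cost stays within max_usd."""
    est_input = rough_token_estimate(prompt)
    cost = (est_input * input_price_per_m
            + expected_output_tokens * output_price_per_m) / 1_000_000
    return cost <= max_usd
```

For a 400-character prompt (about 100 tokens) with 1,000 expected output tokens, the estimate is roughly $0.12, so a $0.20 cap passes and a $0.10 cap fails.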

By anticipating issues, you mitigate disruptions.

Advanced Integration Techniques

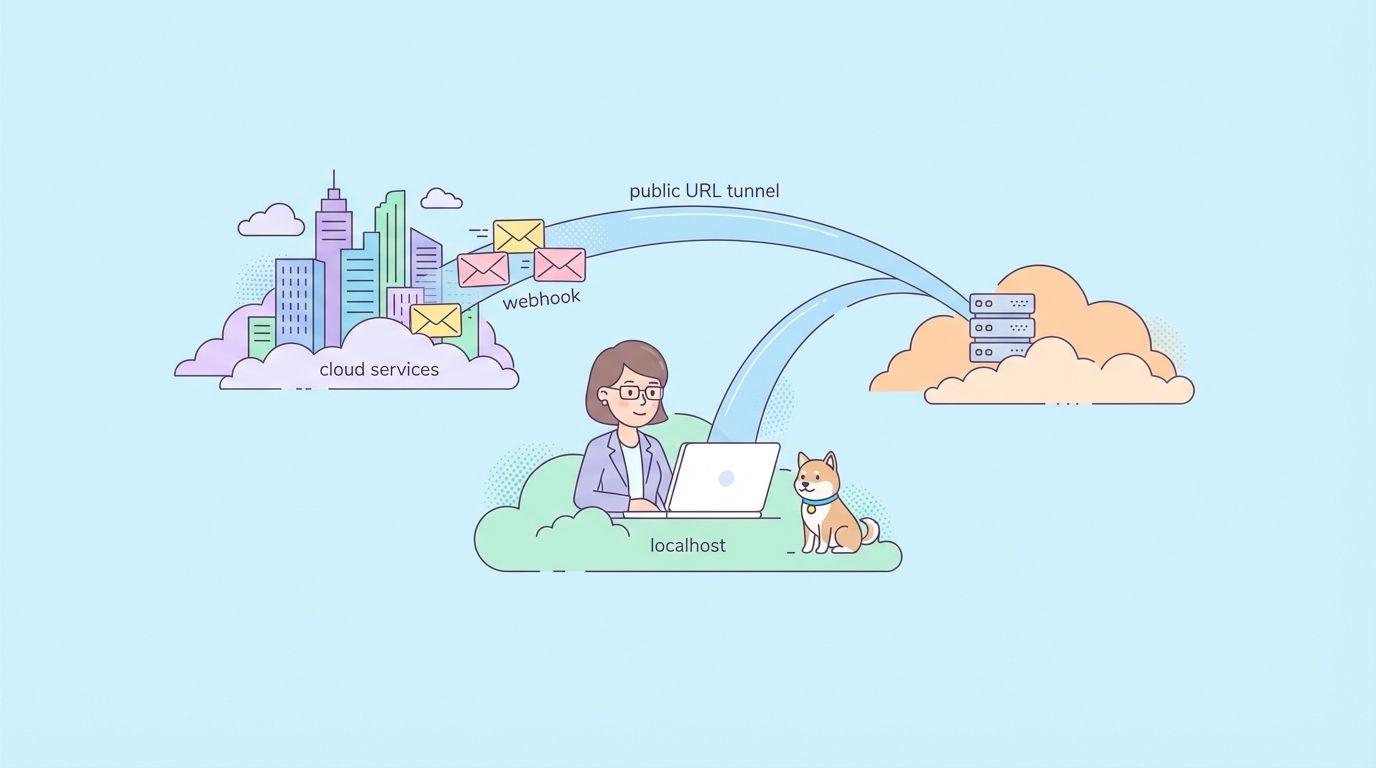

Use webhooks to receive the results of asynchronous (background) responses, and build agents with function calling.
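The agent loop behind function calling can be sketched as a dispatcher. In the Responses API, function tools are declared in a flat format (`type`, `name`, `description`, `parameters`). The model returns `function_call` items that carry a `call_id`, a `name`, and JSON-encoded `arguments`, and you answer with `function_call_output` items. The `add` tool and the registry are hypothetical examples, and the helper operates on plain dicts.

```python
import json

# Hypothetical tool definition in the Responses API's flat function-tool format.
ADD_TOOL = {
    "type": "function",
    "name": "add",
    "description": "Add two integers.",
    "parameters": {
        "type": "object",
        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
        "required": ["a", "b"],
    },
}


def run_function_calls(output_items: list, registry: dict) -> list:
    """Execute function_call items and return function_call_output items to send back."""
    results = []
    for item in output_items:
        if item.get("type") != "function_call":
            continue  # ignore reasoning and message items
        fn = registry[item["name"]]
        args = json.loads(item["arguments"])  # arguments arrive as a JSON string
        results.append({
            "type": "function_call_output",
            "call_id": item["call_id"],
            "output": json.dumps(fn(**args)),
        })
    return results
```

SDK output items are Pydantic models, so convert them first with `[item.model_dump() for item in response.output]`. Then send the results in a follow-up `client.responses.create(model="gpt-5-pro", tools=[ADD_TOOL], input=results, previous_response_id=response.id)` so the model can continue from its earlier turn.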

Scale by load-balancing requests across both platforms.

These techniques unlock sophisticated applications.

Conclusion

Accessing the GPT-5 Pro API opens the door to innovative solutions. OpenAI and OpenRouter each offer a viable path, and tools like Apidog make testing easier. Apply these insights to transform your projects.