OpenAI advances coding assistance with the release of gpt-5-codex, a specialized version of GPT-5 tailored for agentic coding scenarios. This model optimizes performance in tools that developers rely on daily, such as Cursor and Visual Studio Code. Engineers now access enhanced capabilities through API endpoints, enabling automated code reviews, intelligent completions, and autonomous task handling. Furthermore, gpt-5-codex adjusts its computational effort based on task complexity, responding swiftly to simple queries while dedicating more resources to intricate problems.

This article examines gpt-5-codex in detail, from its core features to practical implementations. Developers benefit from understanding how this model integrates with popular IDEs, and the following sections break down each aspect technically.

Understanding GPT-5-Codex: Core Architecture and Optimizations

OpenAI engineers gpt-5-codex as a refined iteration of GPT-5, focusing on coding-specific enhancements. The model employs dynamic reasoning allocation, which means it evaluates task difficulty and scales its processing accordingly. For instance, a basic code snippet request receives an immediate response, whereas a multi-file refactor prompts extended analysis and tool usage.

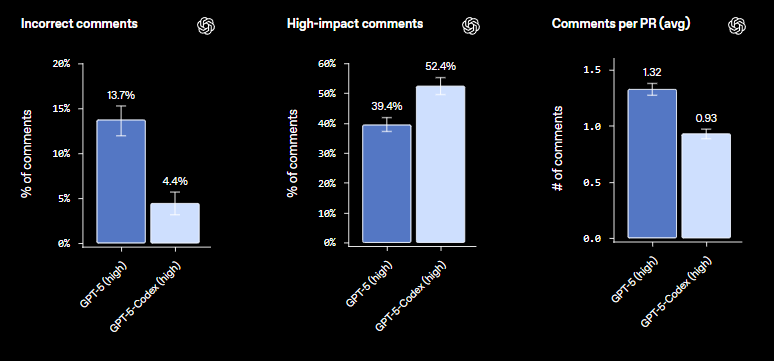

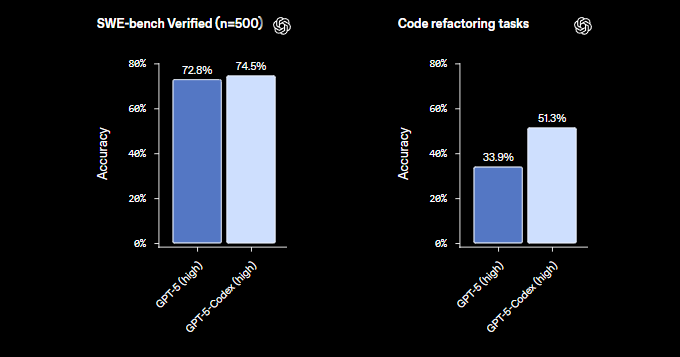

Moreover, gpt-5-codex is trained with an emphasis on real-world software engineering tasks. As a result, it matches or exceeds standard GPT-5 on benchmarks such as SWE-bench, which measures how often a model resolves real repository issues. The gain is largest on refactoring: OpenAI's internal refactoring evaluation reports an improvement from 33.9% for GPT-5 to 51.3% for gpt-5-codex.

The architecture builds on transformer-based layers but fine-tunes for agentic behaviors. Agents in this context refer to autonomous systems that execute sequences of actions, such as editing files, running tests, and applying patches. Consequently, gpt-5-codex excels in environments requiring iterative interactions, like IDE extensions.
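The action loop described above can be sketched in a few lines. This is a minimal illustration and not OpenAI's implementation: the `Action` type, the scripted `scripted_policy` function, and the stub tools are all hypothetical stand-ins for a model and for real file, test, and patch tooling.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Action:
    """One step the agent wants to take (hypothetical structure)."""
    tool: str       # e.g. "edit_file", "run_tests", or "done"
    argument: str   # free-form input for the tool


def run_agent(
    policy: Callable[[List[str]], Action],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 10,
) -> List[str]:
    """Ask the policy for an action, run it, and feed the result back."""
    history: List[str] = []
    for _ in range(max_steps):
        action = policy(history)
        if action.tool == "done":
            break
        result = tools[action.tool](action.argument)
        history.append(f"{action.tool}: {result}")
    return history


# Scripted stand-in for the model: edit once, run the tests, then stop.
def scripted_policy(history: List[str]) -> Action:
    plan = [Action("edit_file", "app.py"), Action("run_tests", "tests/")]
    return plan[len(history)] if len(history) < len(plan) else Action("done", "")


# Stub tools that only report what they would have done.
stub_tools = {
    "edit_file": lambda path: f"patched {path}",
    "run_tests": lambda path: f"all tests in {path} passed",
}

history = run_agent(scripted_policy, stub_tools)
print(history)
```

The `max_steps` cap is a design choice worth keeping in any real loop: it bounds the work an autonomous agent can do before a human looks at the result.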

Additionally, safety measures play a crucial role. OpenAI publishes an addendum to the GPT-5 system card, detailing mitigations for potential misuse in coding contexts. These include content filters and usage monitoring to prevent harmful code generation.

API Availability and Access Methods for GPT-5-Codex

OpenAI makes gpt-5-codex accessible via API, with rollout updates as of September 23, 2025. Developers obtain API keys through their OpenAI accounts, enabling integration into custom applications. The model is served through the Responses API, which covers plain completions as well as code review and agentic tasks.

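To start, users authenticate with an API key and select gpt-5-codex as the model in requests. For example, a basic request through the Responses API looks like this in Python, using the current OpenAI SDK (version 1.x or later):

```python
import os

from openai import OpenAI

# Read the key from the environment rather than hard-coding it.
client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])

# Send a single prompt to gpt-5-codex through the Responses API.
response = client.responses.create(
    model="gpt-5-codex",
    input="Generate a Python function to sort a list.",
)

# output_text joins the text parts of the response into one string.
print(response.output_text)
```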

This structure ensures compatibility with existing OpenAI SDKs. However, note that API access initially targets CLI users, with broader availability planned soon.

Providers like OpenRouter also host gpt-5-codex, offering an OpenAI-compatible completion API. This allows developers to route requests through alternative infrastructures for cost efficiency or redundancy.
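As a rough sketch of what routing through such a provider could look like, the snippet below builds an OpenAI-style request using only the standard library. The endpoint URL and the model slug `openai/gpt-5-codex` are assumptions to verify against the provider's documentation, and `build_request` is a hypothetical helper name.

```python
import json
import urllib.request

# Assumed values: check the provider's documentation before relying on them.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_SLUG = "openai/gpt-5-codex"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_SLUG,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_request("Generate a Python function to sort a list.", "test-key")
print(request.full_url, request.get_method())
```

Passing the request to `urllib.request.urlopen` would send it, which requires a real key. Keeping construction separate from sending makes the payload easy to inspect or test offline.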

Pricing is token-based, with exact rates depending on usage tier. Subscribers on Business or Pro plans can also use the model, with allowances that cover multiple sessions per week.

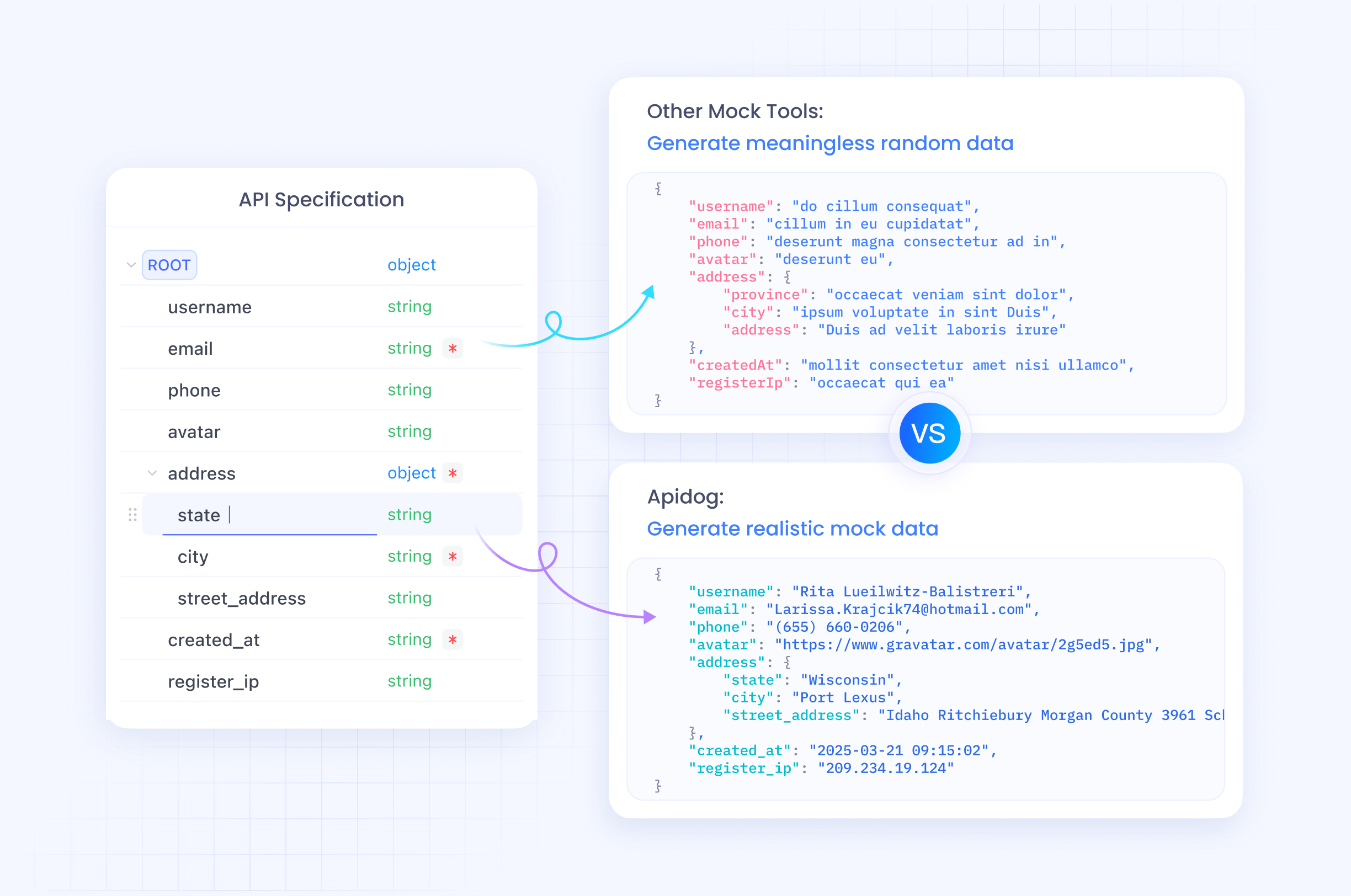

When integrating, tools like Apidog prove invaluable. Apidog enables mocking API responses from gpt-5-codex, testing edge cases without incurring costs. Its documentation features import OpenAPI specs directly, facilitating client generation aligned with gpt-5-codex outputs.

Integrating GPT-5-Codex with Cursor: Setup and Use Cases

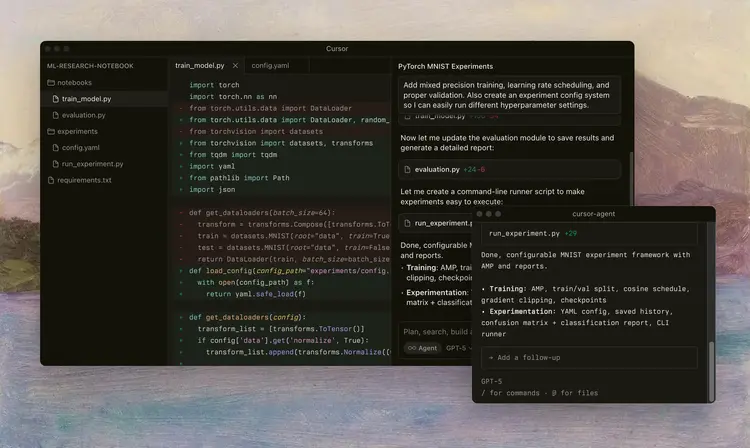

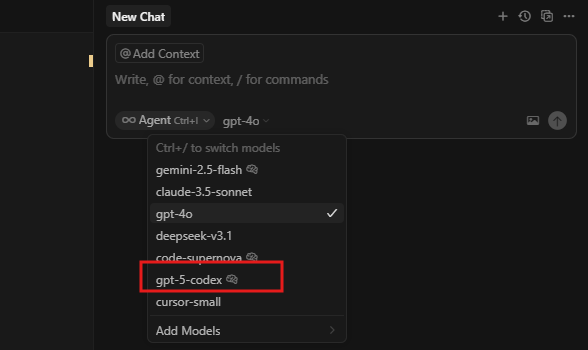

Cursor integrates gpt-5-codex natively, as announced on September 23, 2025. This AI-powered code editor allows selection of gpt-5-codex in its agent menu, enabling features like context-aware completions and command executions.

To set up, users update Cursor to the latest version and choose gpt-5-codex in the model selector.

Once activated, developers leverage gpt-5-codex for tasks such as generating boilerplate code. For example, prompting "Implement a REST API endpoint in Node.js" yields structured responses with dependencies and error handling.

Moreover, Cursor's CLI integration uses gpt-5-codex for terminal-based operations. Users run commands like codex review to analyze pull requests automatically.

However, users report occasional issues with CLI termination after tasks complete. To mitigate, incorporate timeout mechanisms in scripts.
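One way to add such a timeout is Python's `subprocess.run`, which kills the child process when the limit expires. The sketch below uses a sleeping Python process as a stand-in for a CLI call that never exits; `run_with_timeout` is a hypothetical helper name, and the real command line would replace the placeholder.

```python
import subprocess
import sys
from typing import List, Optional


def run_with_timeout(command: List[str], timeout_seconds: float) -> Optional[int]:
    """Run a command; return its exit code, or None if it was killed on timeout."""
    try:
        completed = subprocess.run(command, timeout=timeout_seconds)
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child process before raising.
        return None
    return completed.returncode


# Stand-ins for real CLI calls: one exits promptly, one hangs.
quick = [sys.executable, "-c", "print('done')"]
hanging = [sys.executable, "-c", "import time; time.sleep(30)"]

quick_result = run_with_timeout(quick, timeout_seconds=10)
hanging_result = run_with_timeout(hanging, timeout_seconds=1)
print(quick_result, hanging_result)
```

Returning `None` on timeout lets the calling script decide whether to retry, log, or fail the job.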

In practice, gpt-5-codex accelerates workflows in Cursor by handling multi-step processes. Consider a scenario where a developer refactors a React component: gpt-5-codex identifies optimizations, applies patches, and runs tests, all within the editor.

Leveraging GPT-5-Codex in Visual Studio Code via GitHub Copilot

Visual Studio Code users access gpt-5-codex through GitHub Copilot's public preview, rolled out on September 23, 2025. This integration requires VS Code version 1.104.1 or higher and a qualifying Copilot plan.

Administrators enable the model in organization settings for Business and Enterprise tiers. Individual Pro users select it in the Copilot Chat model picker.

GPT-5-Codex works across Copilot Chat's modes: ask, edit, and agent. In agent mode, it autonomously resolves issues, such as debugging a Python script by stepping through code and suggesting fixes.

For instance, typing "@copilot fix this bug" invokes gpt-5-codex to analyze context and propose solutions.

Transitioning to advanced use, developers embed API calls within VS Code extensions. Using the OpenAI SDK, custom plugins query gpt-5-codex for specialized tasks, like generating SQL queries from natural language.

User feedback highlights the speed of the rollout and the possibility of expanded free access. Nevertheless, the deployment is gradual, so not all users see the model immediately.

Apidog complements this by providing API testing within VS Code workflows. Download Apidog to simulate gpt-5-codex responses, ensuring robust integrations.

Advanced Prompting Techniques for GPT-5-Codex

Effective prompting maximizes gpt-5-codex's potential. Adhere to the "less is more" principle: start with minimal instructions and add specifics only as needed.

Do not prompt for preambles: the model is served through the Responses API and does not expose verbosity controls. For tool usage, limit the toolset to essentials such as a terminal tool and apply_patch. This matches what the model saw in training, which improves accuracy.

In API contexts, structure messages with roles: system for guidelines, user for queries.
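A minimal sketch of that role structure follows. The helper name `build_messages` and the guideline text are hypothetical; how the resulting list is passed to the API depends on the SDK and endpoint in use.

```python
from typing import Dict, List


def build_messages(guidelines: str, query: str) -> List[Dict[str, str]]:
    """Return a role-tagged message list: system first, then the user query."""
    return [
        {"role": "system", "content": guidelines.strip()},
        {"role": "user", "content": query.strip()},
    ]


messages = build_messages(
    "Follow PEP 8 and include type hints.",
    "Write a function that deduplicates a list while preserving order.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

In keeping with the "less is more" principle, the system message here is a single short guideline rather than a long list of rules.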

Furthermore, test prompts iteratively. If results fall short, refine by removing redundancies rather than expanding.

Examples demonstrate this. Prompting "Write a Flask API for user authentication" produces complete code with security best practices.

However, for complex tasks, allow gpt-5-codex to iterate autonomously, as it can sustain efforts over extended periods.

Apidog aids in crafting prompts by documenting API structures, which serve as context for gpt-5-codex.

Real-World Applications and Case Studies

Developers apply gpt-5-codex across domains. In web development, it generates full-stack applications from specs, handling frontend React components and backend Express servers.

A case study from enterprise settings shows gpt-5-codex reducing refactor time by 50% in large codebases.

In mobile app development, via ChatGPT integration, it prototypes SwiftUI views.

Moreover, security teams use it for vulnerability scans, identifying issues in code reviews.

Transitioning to automation, gpt-5-codex powers CI/CD pipelines, approving PRs based on quality metrics.

Users in Cursor report faster iterations on AI projects, while VS Code integrations streamline open-source contributions.

However, challenges include cost management under heavy usage. Pro plans are sized to support a full workweek of use, but token consumption should still be monitored.
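Token monitoring can start with simple arithmetic on the token counts that API responses report. The sketch below assumes per-million-token pricing; the two rates are placeholders for illustration only, not published prices, and `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_rate_per_million: float,
    output_rate_per_million: float,
) -> float:
    """Estimate the cost of a request in the same currency as the rates."""
    input_cost = input_tokens / 1_000_000 * input_rate_per_million
    output_cost = output_tokens / 1_000_000 * output_rate_per_million
    return input_cost + output_cost


# Placeholder rates for illustration only; they are NOT published prices.
EXAMPLE_INPUT_RATE = 1.0
EXAMPLE_OUTPUT_RATE = 10.0

cost = estimate_cost(1_000_000, 500_000, EXAMPLE_INPUT_RATE, EXAMPLE_OUTPUT_RATE)
print(f"Estimated cost: {cost:.2f}")
```

Summing this estimate over a day's requests gives an early warning before a plan allowance or budget runs out.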

Comparisons with Previous Models and Competitors

GPT-5-Codex outperforms GPT-5 in coding tasks, particularly refactors. Because it scales its effort to the task, developers do not need to switch models between simple and complex work.

Compared to Claude Code, gpt-5-codex emphasizes agentic autonomy, leading in long-task persistence.

Against Gemini, it offers deeper GitHub integration.

Furthermore, API availability sets it apart, enabling custom builds.

However, competitors such as Anthropic offer different strengths, including their pricing and business models.

Overall, gpt-5-codex leads in optimized coding efficiency.

Best Practices for Security and Performance

Secure implementations start with API key management. Use environment variables and rotate keys regularly.
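A small sketch of the environment-variable approach: the helper reads the key at startup and fails early if it is missing, and a masking function keeps the full key out of logs. The names `load_api_key` and `mask` and the `DEMO_API_KEY` variable are hypothetical.

```python
import os


def load_api_key(variable: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment and fail loudly if it is missing."""
    key = os.environ.get(variable, "").strip()
    if not key:
        raise RuntimeError(f"Set the {variable} environment variable first.")
    return key


def mask(key: str) -> str:
    """Show only the last four characters, for safe logging."""
    return "*" * max(len(key) - 4, 0) + key[-4:]


os.environ["DEMO_API_KEY"] = "sk-example-1234"  # demo value, not a real key
print(mask(load_api_key("DEMO_API_KEY")))
```

Because the key lives outside the source tree, rotating it means updating the environment rather than editing and redeploying code.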

GPT-5-Codex includes built-in filters, but developers should still review outputs for sensitive data.

For performance, batch requests and optimize prompts to reduce tokens.
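Both ideas can be prototyped on the client side before any request is sent. The sketch below groups prompts into fixed-size batches and collapses redundant whitespace; it is an illustration with hypothetical helper names (`compact`, `batched`) and does not call any OpenAI batching feature.

```python
from typing import Iterator, List


def compact(prompt: str) -> str:
    """Collapse runs of whitespace, which add tokens without adding meaning."""
    return " ".join(prompt.split())


def batched(prompts: List[str], batch_size: int) -> Iterator[List[str]]:
    """Yield successive groups of at most batch_size prompts."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(prompts), batch_size):
        yield prompts[start:start + batch_size]


prompts = [compact(p) for p in ["Sort   a list.", "Reverse\n a string.", "Parse  JSON."]]
for group in batched(prompts, batch_size=2):
    print(group)
```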

Monitor usage via OpenAI dashboards.

Additionally, integrate Apidog for security testing, scanning APIs for vulnerabilities.

Future Implications and Evolutions

OpenAI plans broader API expansions, potentially including fine-tuning options.

As adoption grows, expect the ecosystem around gpt-5-codex to expand, with support in more IDEs.

Moreover, advancements in multimodal inputs could enhance its capabilities.

Developers can prepare by experimenting now, using tools like Cursor and VS Code.

In conclusion, gpt-5-codex redefines coding through API and integrations. Its technical prowess, combined with practical tools like Apidog, empowers efficient development.