As developers increasingly integrate AI into coding workflows, the GPT-5-Codex API emerges as a powerful tool for automating complex tasks. This specialized model enhances code generation, debugging, and optimization, making it essential for modern software engineering.

Understanding the GPT-5-Codex API: Core Concepts and Architecture

OpenAI engineers designed the GPT-5-Codex API to build on the foundational capabilities of GPT-5, tailoring it specifically for coding and software development. The model processes natural language prompts and generates code in multiple programming languages, including Python, JavaScript, and C++. Developers access it through standard HTTPS requests, sending inputs via POST to the Responses API endpoint, /v1/responses. OpenAI made gpt-5-codex available through the Responses API rather than /v1/chat/completions. The API returns structured JSON responses containing generated code, explanations, or modifications.
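To make the request and response shapes concrete, here is a minimal stdlib-only sketch that builds (but does not send) a POST request to the Responses API and pulls the text out of a decoded JSON reply. It assumes the public endpoint https://api.openai.com/v1/responses, bearer-token authentication, and a reply in which an `output` list contains `message` items whose `content` holds `output_text` parts. Both helper names are hypothetical.

```python
import json
import os
import urllib.request

RESPONSES_URL = "https://api.openai.com/v1/responses"

def build_responses_request(prompt, model="gpt-5-codex", api_key=None):
    """Build, but do not send, a POST request for the Responses API."""
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    body = {"model": model, "input": prompt}
    return urllib.request.Request(
        RESPONSES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {key}", "Content-Type": "application/json"},
        method="POST",
    )

def extract_output_text(data):
    """Concatenate the output_text parts of assistant messages in a decoded JSON reply."""
    parts = []
    for item in data.get("output", []):
        if item.get("type") != "message":
            continue  # skip reasoning items, tool calls, and other item types
        for part in item.get("content", []):
            if part.get("type") == "output_text":
                parts.append(part.get("text", ""))
    return "".join(parts)
```

Sending the request is a `urllib.request.urlopen(req)` call followed by `json.load`. In practice the official SDK handles this for you and exposes the same text as `response.output_text`.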

GPT-5-Codex reasons through problems before answering, which allows it to handle multi-step tasks. For instance, when a user submits a prompt describing a web application architecture, the model outlines the structure, suggests libraries, and produces initial code snippets. This capability reflects training on large amounts of code and, according to OpenAI, on real-world software engineering tasks, which helps it recognize common patterns and best practices.

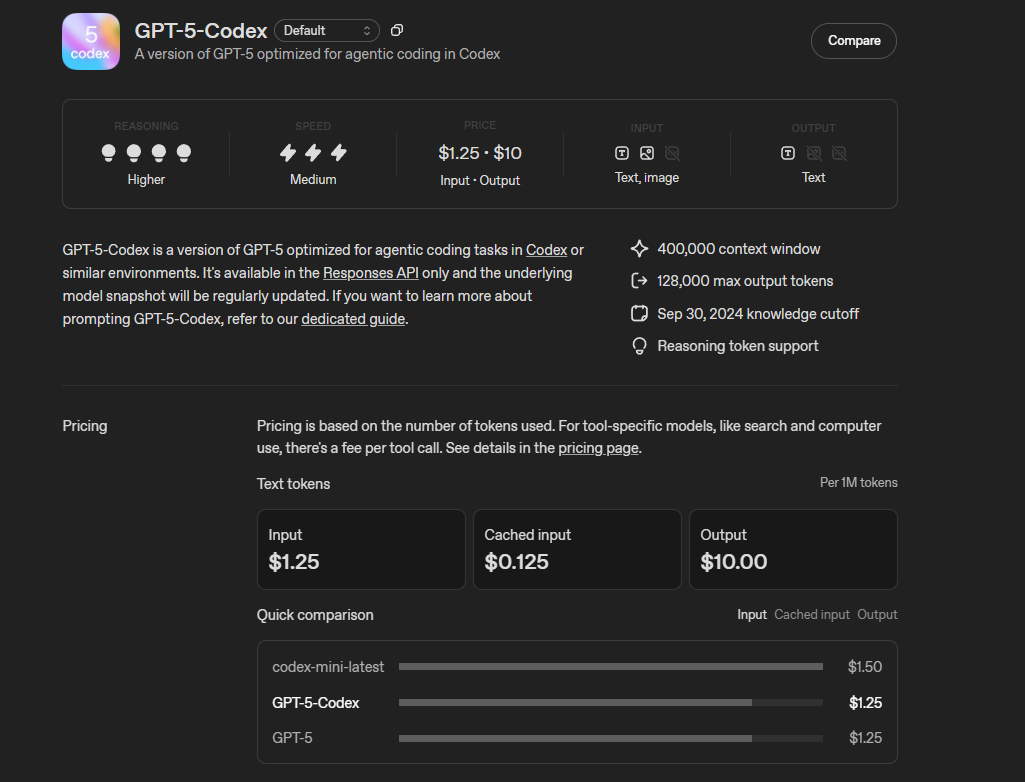

Turning to technical specifications, OpenAI's model documentation lists gpt-5-codex with a 400,000-token context window and up to 128,000 output tokens, which accommodates large portions of a codebase or a detailed project description in a single interaction. Users limit response length with max_output_tokens. Sampling controls such as temperature (low for deterministic output, higher for varied suggestions) may be unsupported on GPT-5-family reasoning models, so check the model reference before relying on them. The model also supports function calling: instead of executing anything itself, it returns structured calls that your application runs, after which you send the results back to the model.
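Function calling works as a round trip: you describe tools in the request, the model replies with a `function_call` item naming a tool and carrying JSON-encoded arguments, and your code runs the tool and returns a `function_call_output` item. The sketch below assumes the Responses API's flat tool format and treats output items as plain dicts, as they appear in raw JSON (with the SDK you would read `item.name`, `item.arguments`, and `item.call_id` instead). The `run_tests` tool and both helpers are hypothetical.

```python
import json

# Hypothetical tool definition: lets the model ask your code to run the test suite.
RUN_TESTS_TOOL = {
    "type": "function",
    "name": "run_tests",
    "description": "Run the project's unit tests and return a summary.",
    "parameters": {
        "type": "object",
        "properties": {
            "path": {"type": "string", "description": "Test file or directory."}
        },
        "required": ["path"],
        "additionalProperties": False,
    },
}

def build_request_params(prompt, max_output_tokens=2000, tools=None):
    """Keyword arguments for client.responses.create(**params)."""
    params = {"model": "gpt-5-codex", "input": prompt, "max_output_tokens": max_output_tokens}
    if tools:
        params["tools"] = list(tools)
    return params

def handle_function_call(item, registry):
    """Run one function_call item with a local Python function from the registry."""
    args = json.loads(item["arguments"])  # arguments arrive as a JSON string
    result = registry[item["name"]](**args)
    # The result goes back to the model as a function_call_output input item.
    return {"type": "function_call_output", "call_id": item["call_id"], "output": json.dumps(result)}
```

You would pass the params to `client.responses.create(**params)`, look for `function_call` items in `response.output`, and send the returned dicts back in the next request's `input` (together with `previous_response_id`) so the model can continue.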

Engineers at OpenAI optimized gpt-5-codex for agentic workflows, in which it works in a loop, refining code based on feedback such as test results. This proves invaluable in iterative development cycles. However, users must manage token usage carefully, because requests that exceed the model's limits fail with an error. Latency depends on prompt size and on how much reasoning a task requires, so measure it for your own workloads rather than assuming a fixed figure.
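The agentic loop described above can be sketched independently of any SDK: generate code, check it, and feed the failure report into the next prompt. In this minimal sketch, `generate` stands in for a call to gpt-5-codex and `check` for your own test harness; both are hypothetical callables you supply, and `max_rounds` caps token spending.

```python
from typing import Callable, Optional, Tuple

def refine_until_passing(
    generate: Callable[[str], str],
    check: Callable[[str], Tuple[bool, str]],
    task: str,
    max_rounds: int = 3,
) -> Tuple[Optional[str], int]:
    """Generate code, check it, and retry with feedback until it passes or rounds run out.

    Returns (code, rounds_used); code is None if no attempt passed.
    """
    prompt = task
    for round_no in range(1, max_rounds + 1):
        code = generate(prompt)
        passed, feedback = check(code)
        if passed:
            return code, round_no
        # Give the model its previous attempt and the failure report for the next round.
        prompt = (
            f"{task}\n\nYour previous attempt:\n{code}\n\n"
            f"It failed with:\n{feedback}\n\nPlease return a corrected version."
        )
    return None, max_rounds
```

Capping the rounds matters because every retry resends the task plus the previous attempt, so token usage grows with each iteration.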

Key Features of GPT-5-Codex API That Drive Innovation

GPT-5-Codex excels at code generation, producing functional scripts from high-level descriptions. Given a requirement like "Build a RESTful API for user authentication," the model can output complete endpoints with error handling and security measures. This cuts the time spent on boilerplate, letting teams focus on customization.
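Generated code usually arrives inside Markdown code fences mixed with explanatory prose, so applications typically extract the fenced blocks before saving or running them. This sketch assumes the reply uses standard triple-backtick fences with an optional language tag; it does not handle nested fences, and the helper name is hypothetical.

```python
import re

FENCE = "`" * 3  # built this way so the snippet can itself sit inside a Markdown fence

# An opening fence with an optional language tag, then a lazily captured body up to the closing fence.
CODE_BLOCK_RE = re.compile(FENCE + r"[ \t]*([\w+-]*)[^\n]*\n(.*?)" + FENCE, re.DOTALL)

def extract_code_blocks(text, language=None):
    """Return the bodies of fenced code blocks, optionally filtered by language tag."""
    blocks = []
    for lang, body in CODE_BLOCK_RE.findall(text):
        if language is None or lang.lower() == language.lower():
            blocks.append(body.rstrip("\n"))
    return blocks
```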

The API also supports code understanding and explanation. Users paste existing code, and gpt-5-codex analyzes it, identifying bugs, suggesting optimizations, or documenting functions. For example, it can detect inefficient algorithms and propose alternatives, explaining the difference in big-O terms. Its training on diverse codebases helps it work across domains such as machine learning and web development, although its analysis still needs to be verified.
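As an illustration of the kind of optimization described above (written by hand, not actual model output), here is a quadratic duplicate check and the linear-time rewrite a reviewer, human or model, might propose:

```python
def has_duplicates_quadratic(items):
    # O(n^2): compares every pair of elements.
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False

def has_duplicates_linear(items):
    # O(n) on average: a set gives constant-time membership checks for hashable items.
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

The set-based version trades O(n) extra memory for speed and requires hashable elements, which is exactly the sort of caveat a good explanation should mention.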

Another standout feature is multimodal support, although it was limited in the initial release. GPT-5-Codex also processes text-based diagrams or pseudocode and converts them into executable programs, which developers use for rapid prototyping. In addition, the model helps with version control, generating commit messages or diff patches for Git repositories.
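For the version-control use case, a common pattern is to hand the model a unified diff and ask for a commit message. This sketch builds the diff with Python's `difflib` and wraps it in a prompt; the prompt wording, the default file name, and both helper names are assumptions, and in a real repository you would more likely take the diff from `git diff --staged`.

```python
import difflib

def make_patch(old_source, new_source, path="app.py"):
    """Produce a unified diff between two versions of a file."""
    return "".join(
        difflib.unified_diff(
            old_source.splitlines(keepends=True),
            new_source.splitlines(keepends=True),
            fromfile=f"a/{path}",
            tofile=f"b/{path}",
        )
    )

def commit_message_prompt(patch):
    """Hypothetical prompt asking the model for a short commit message."""
    return (
        "Write a concise Git commit message (imperative mood, subject line of at most "
        "72 characters) for this diff:\n\n" + patch
    )
```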

Shifting to security aspects, OpenAI implemented safeguards in the API to prevent malicious code generation. Prompts attempting to create harmful scripts receive neutralized responses or warnings. Nevertheless, users bear responsibility for reviewing outputs. The API also offers streaming responses, enabling real-time code completion in IDEs like VS Code via extensions.
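With streaming enabled, the SDK yields events as the model produces them. This sketch assumes the Responses API's streaming event type `response.output_text.delta`, whose `delta` attribute carries the next chunk of text; other event types are ignored, and the function name is hypothetical.

```python
import sys

def print_stream(events, out=sys.stdout):
    """Write text deltas from a Responses API event stream as they arrive; return the full text."""
    chunks = []
    for event in events:
        if getattr(event, "type", None) == "response.output_text.delta":
            chunks.append(event.delta)
            out.write(event.delta)
            out.flush()  # show each chunk immediately, as an editor plugin would
    return "".join(chunks)
```

With the official SDK, you would pass `client.responses.create(model="gpt-5-codex", input=prompt, stream=True)` as `events`.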

Accessing the GPT-5-Codex API: Step-by-Step Integration Guide

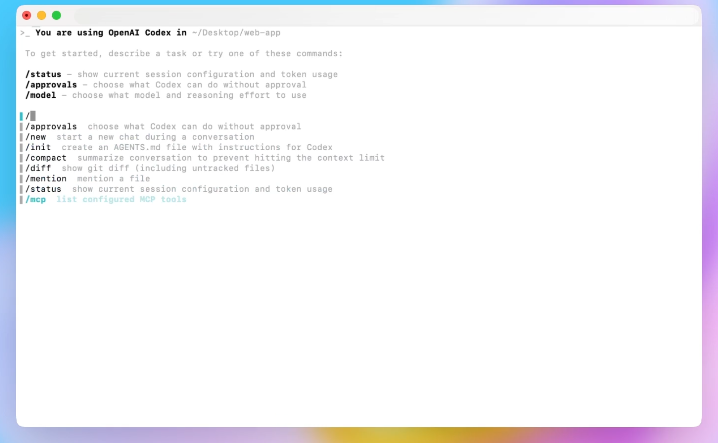

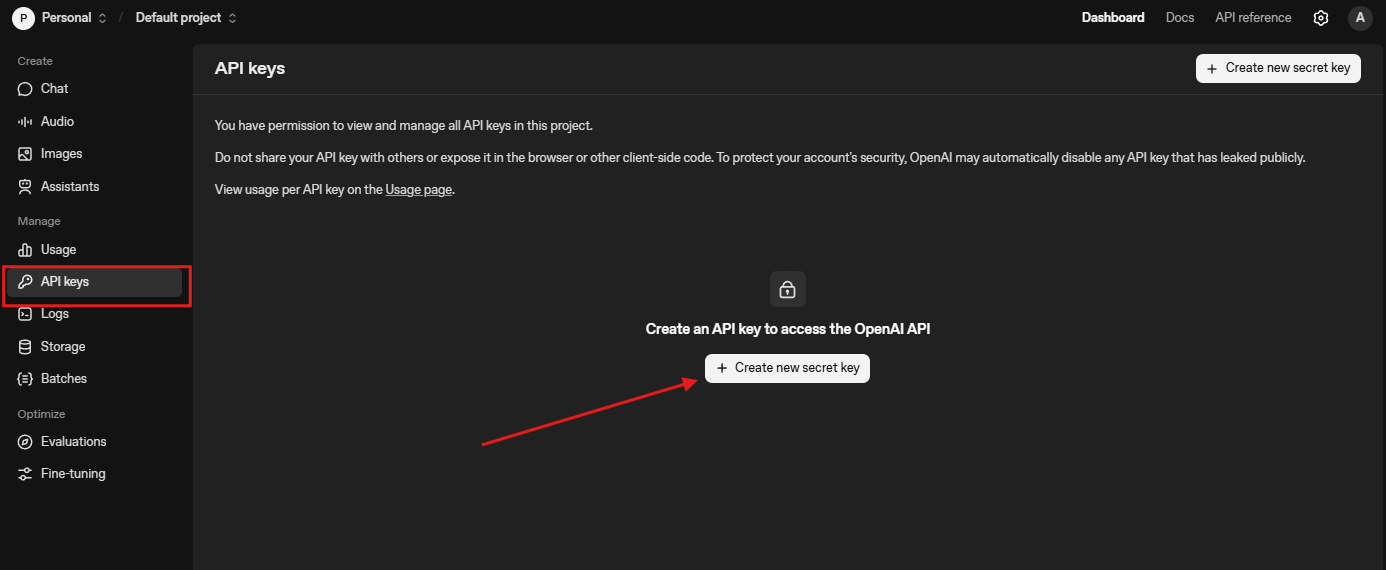

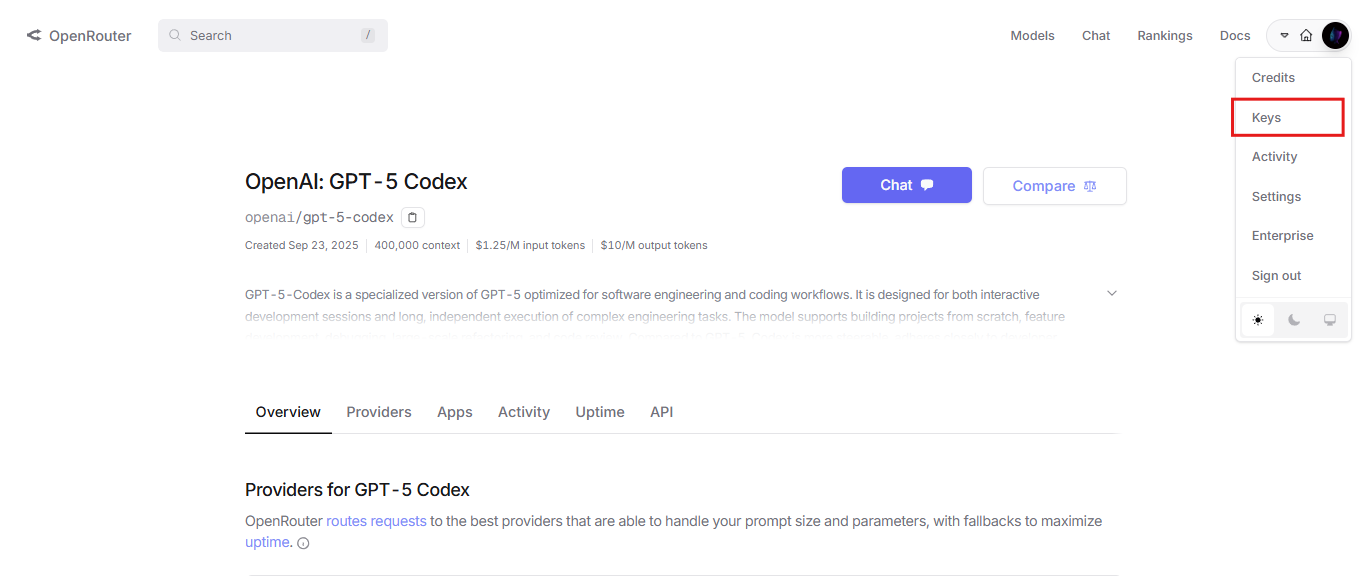

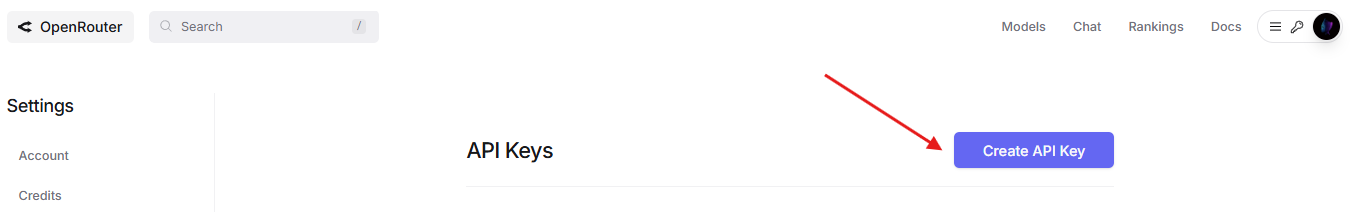

Developers begin by obtaining an API key from the OpenAI platform or third-party providers like OpenRouter. Register an account, navigate to the API section, and generate a key.

On the OpenAI platform:

On OpenRouter:

This key authenticates requests, ensuring secure access.
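Rather than hard-coding the key in source files, a common practice is to read it from an environment variable. The OpenAI Python SDK already reads `OPENAI_API_KEY` when no key is passed; this small hypothetical helper simply fails early with a readable message. Any other variable name, such as one for an OpenRouter key, is your own choice.

```python
import os

def load_api_key(var_name="OPENAI_API_KEY"):
    """Read an API key from the environment and fail fast with a clear message."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable before calling the API.")
    return key
```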

Next, install the necessary libraries. Python users install a recent version of the OpenAI SDK with `pip install openai`, import the client, and initialize it with the key. A basic request looks like this:

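```python
import os

from openai import OpenAI

# The SDK also reads OPENAI_API_KEY automatically if api_key is omitted.
client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])

# gpt-5-codex is served through the Responses API rather than Chat Completions.
response = client.responses.create(
    model="gpt-5-codex",
    input="Write a Python function to sort a list.",
)

# output_text joins all text output from the response.
print(response.output_text)
```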

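This code sends a prompt and prints the generated function. Users can also tune request parameters to refine outputs, but GPT-5-family reasoning models may reject sampling parameters such as top_p or presence_penalty, so check which parameters gpt-5-codex accepts.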

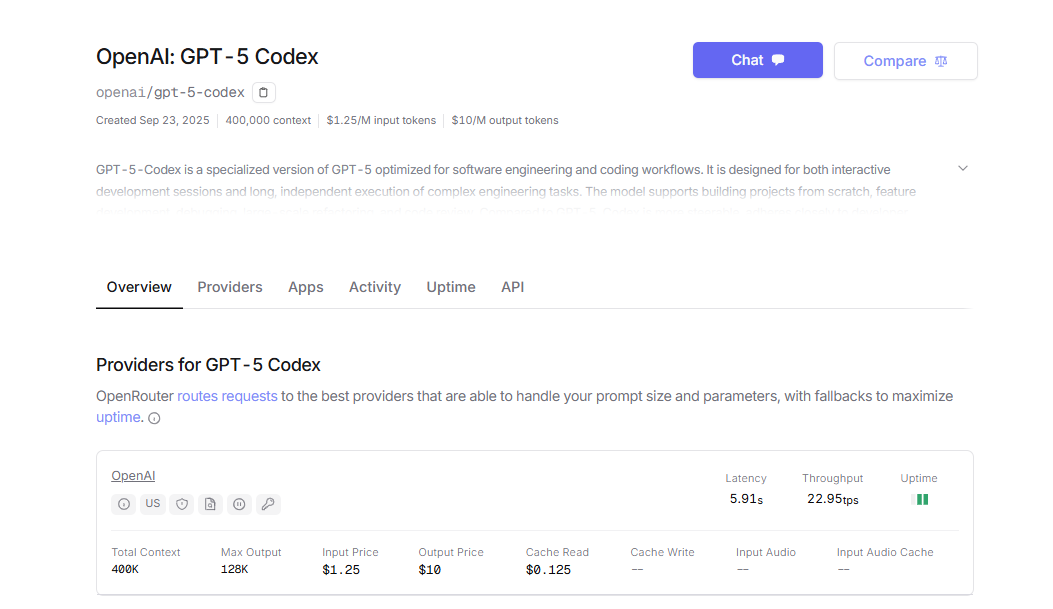

For OpenRouter, set the base URL to https://openrouter.ai/api/v1 and use OpenRouter's model identifier, openai/gpt-5-codex. The optional HTTP-Referer and X-Title headers identify your app to OpenRouter. This setup allows access to gpt-5-codex without direct OpenAI billing, often at similar rates.
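Because OpenRouter exposes an OpenAI-compatible API, the same SDK client can be pointed at it. This stdlib-only helper builds the keyword arguments you would pass to `openai.OpenAI(...)`; the helper name and the `OPENROUTER_API_KEY` variable name are assumptions, and the two attribution headers are optional.

```python
import os

OPENROUTER_BASE_URL = "https://openrouter.ai/api/v1"

def openrouter_client_kwargs(app_url=None, app_name=None):
    """Build keyword arguments for openai.OpenAI(...) that point at OpenRouter."""
    headers = {}
    if app_url:
        headers["HTTP-Referer"] = app_url  # optional: identifies your site to OpenRouter
    if app_name:
        headers["X-Title"] = app_name  # optional: display name for your app
    return {
        "base_url": OPENROUTER_BASE_URL,
        "api_key": os.environ.get("OPENROUTER_API_KEY", ""),
        "default_headers": headers,
    }
```

For example, `client = OpenAI(**openrouter_client_kwargs("https://example.com", "My Codex App"))`, then request `model="openai/gpt-5-codex"` through `client.chat.completions.create(...)`, assuming OpenRouter lists the model under that identifier.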

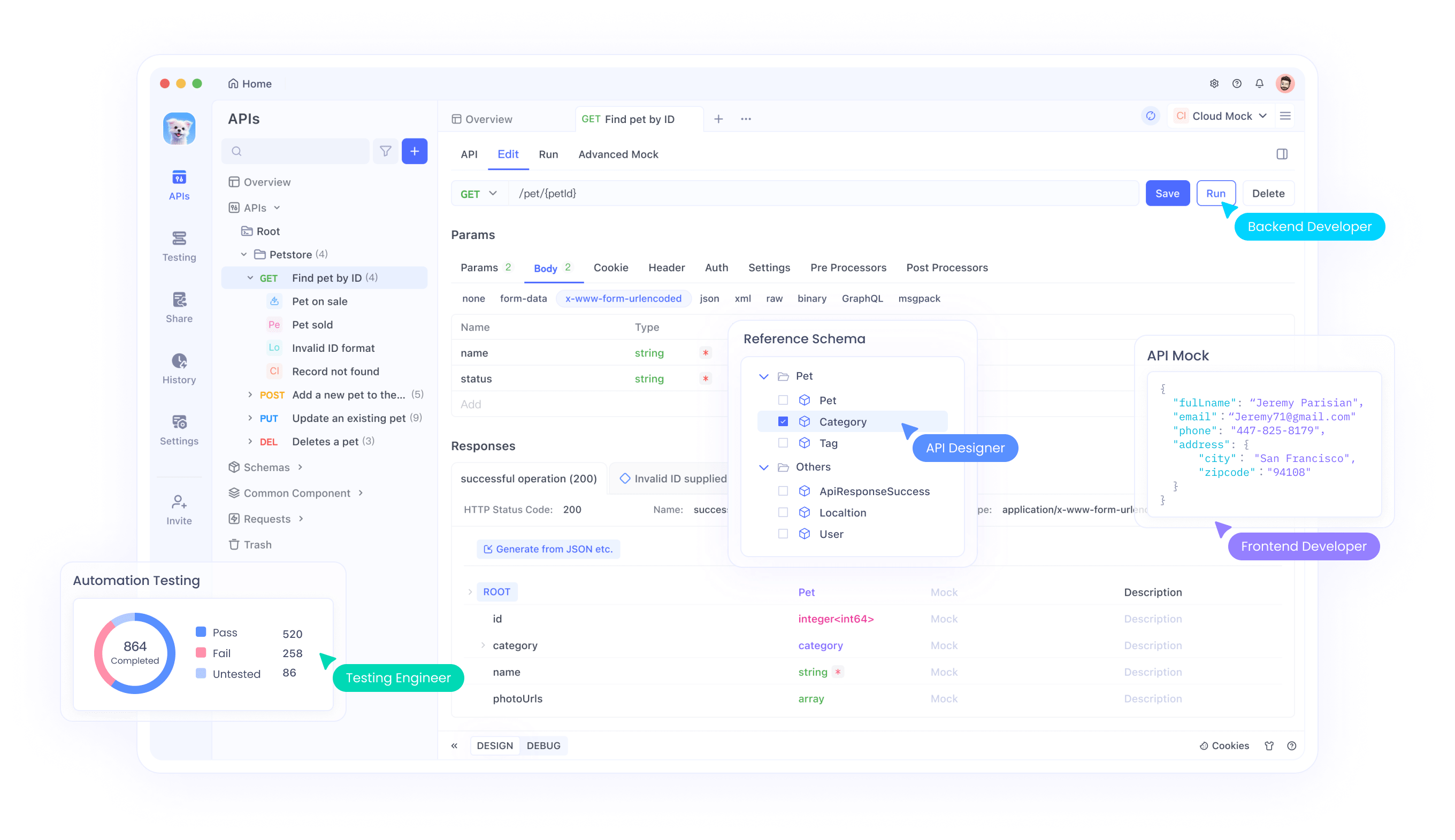

Additionally, integrate error handling. The API returns HTTP status codes (200 for success, 429 when you hit a rate limit), so retry failed requests with exponential backoff. Tools like Apidog help here by offering visual interfaces to build and debug requests, reducing manual coding.
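A minimal sketch of exponential backoff with full jitter, independent of any SDK. `RequestFailed` is a hypothetical exception type standing in for whatever your HTTP layer raises; with the OpenAI Python SDK you would catch its own exceptions (such as `openai.RateLimitError`) instead. The SDK client also retries some failures itself, configurable through its `max_retries` option.

```python
import random
import time

class RequestFailed(Exception):
    """Hypothetical stand-in for an HTTP error that carries a status code."""
    def __init__(self, status):
        super().__init__(f"request failed with HTTP {status}")
        self.status = status

# Rate limits and transient server errors are worth retrying; client errors are not.
RETRYABLE_STATUSES = {429, 500, 502, 503, 504}

def call_with_backoff(fn, max_retries=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(), retrying retryable failures with exponentially growing, jittered waits."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RequestFailed as err:
            if err.status not in RETRYABLE_STATUSES or attempt == max_retries:
                raise
            # Full jitter: wait a random time between 0 and the exponential cap.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
```

Randomizing the wait keeps many clients that hit the limit at the same moment from retrying in lockstep.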

Once set up, test the integration thoroughly. Send varied prompts to evaluate consistency, and monitor token consumption through the usage data returned with each response.
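To monitor consumption, you can accumulate the `usage` object returned with each response. This sketch assumes the Responses API usage field names (`input_tokens`, `output_tokens`, and the nested `cached_tokens` and `reasoning_tokens` details) and tolerates missing detail objects; the class name is hypothetical.

```python
from dataclasses import dataclass

@dataclass
class UsageTotals:
    requests: int = 0
    input_tokens: int = 0
    cached_tokens: int = 0
    output_tokens: int = 0
    reasoning_tokens: int = 0

    def add(self, usage):
        """Accumulate one Responses API usage object (or any object with the same attributes)."""
        self.requests += 1
        self.input_tokens += usage.input_tokens
        self.output_tokens += usage.output_tokens
        # Detail objects may be absent; getattr on None with a default returns the default.
        in_details = getattr(usage, "input_tokens_details", None)
        out_details = getattr(usage, "output_tokens_details", None)
        self.cached_tokens += getattr(in_details, "cached_tokens", 0) or 0
        self.reasoning_tokens += getattr(out_details, "reasoning_tokens", 0) or 0
```

Call `totals.add(response.usage)` after each request and log the totals periodically.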

GPT-5-Codex API Pricing Across Different Platforms

Pricing structures vary by platform, but gpt-5-codex aligns closely with GPT-5 rates. On the OpenAI platform, users pay $1.25 per million input tokens and $10 per million output tokens. This pay-per-use model suits scalable applications, with discounts for cached inputs at $0.125 per million tokens.
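The arithmetic behind these rates is simple enough to script. This sketch hard-codes the OpenAI list prices quoted above, which may change, and assumes cached tokens are a subset of input tokens billed at the cached rate. Reasoning tokens are billed as output tokens, so pass the full output count. The function name is hypothetical.

```python
# OpenAI list prices in USD per million tokens, as quoted in this article; verify before use.
PRICES_PER_MILLION = {"input": 1.25, "cached_input": 0.125, "output": 10.00}

def estimate_cost_usd(input_tokens, output_tokens, cached_tokens=0, prices=PRICES_PER_MILLION):
    """Estimate the cost of one or more requests from token counts."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    total = (
        uncached * prices["input"]
        + cached_tokens * prices["cached_input"]
        + output_tokens * prices["output"]
    )
    return total / 1_000_000

# Example: 100k input tokens (80k served from cache) and 20k output tokens.
print(f"${estimate_cost_usd(100_000, 20_000, cached_tokens=80_000):.4f}")  # prints $0.2350
```

In the example, the 20,000 output tokens account for $0.20 of the $0.235 total, which is why output-heavy workloads dominate the bill.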

OpenRouter mirrors these costs for gpt-5-codex, charging $1.25 per million input tokens and $10 per million output tokens, making it a viable alternative for routed access. Users benefit from OpenRouter's model router, which optimizes for availability without additional fees in most cases.

On Azure OpenAI Service, Global deployments price gpt-5-codex at $1.25 per million input tokens and $10 per million output tokens, while Data Zone deployments cost slightly more, at $1.38 per million input tokens and $11 per million output tokens. This integration appeals to enterprises using Microsoft ecosystems.

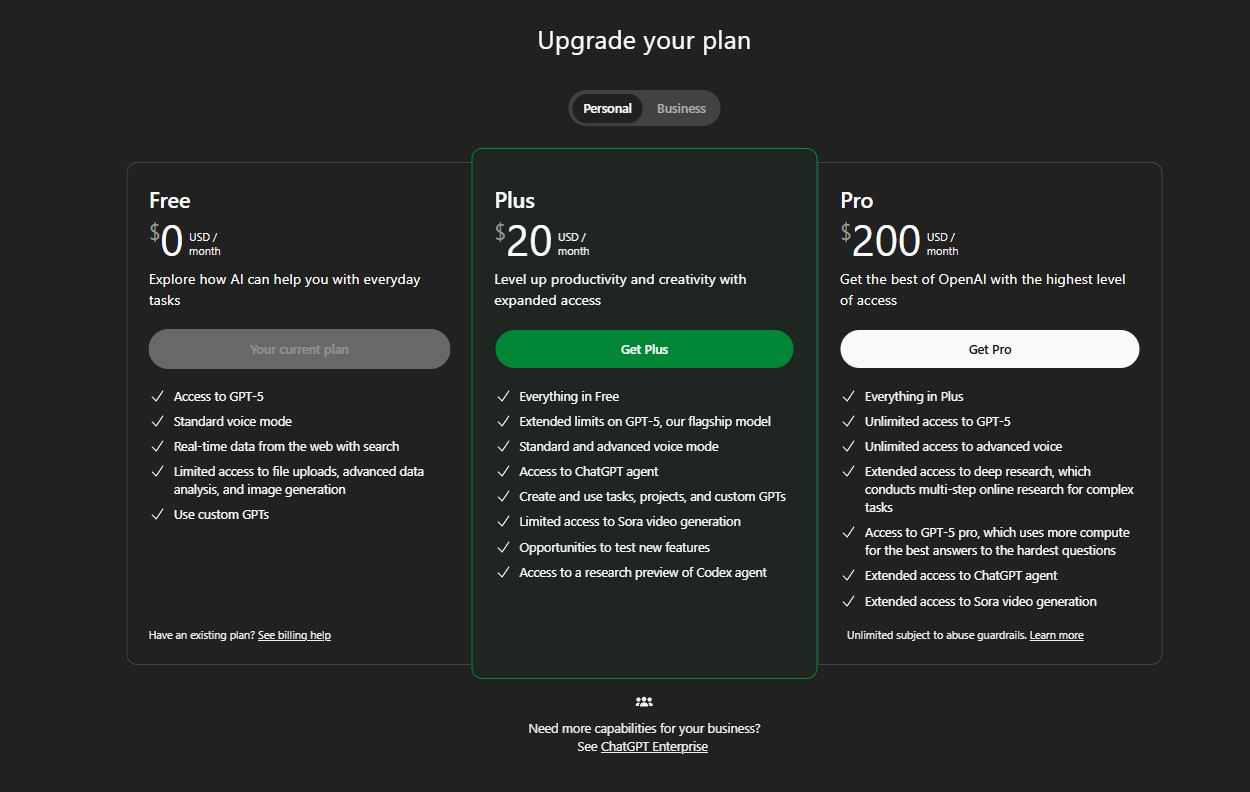

Subscription-based access through ChatGPT Plus at $20 monthly includes limited gpt-5-codex usage, while Pro at $200 offers extended limits. For API usage, developers estimate expenses by multiplying expected token volumes by the per-million-token rates.

However, bills grow quickly in output-heavy workloads, because output tokens cost 8x as much as input tokens ($10 versus $1.25 per million). Teams mitigate this by prompting for concise responses.

Leveraging Apidog for GPT-5-Codex API Development

Apidog serves as an all-in-one API management tool that streamlines interactions with gpt-5-codex. Users design API specifications, generate mock servers, and test endpoints in a unified interface. For gpt-5-codex, import OpenAI's OpenAPI schema and simulate calls against a mock server to preview response shapes before spending tokens.

Apidog's collaboration features allow teams to share projects, version control APIs, and automate testing suites. This integration accelerates development cycles when building applications around gpt-5-codex.

Users export code from Apidog directly into IDEs, bridging the gap between API testing and implementation. Additionally, its free tier provides essential tools, making it accessible for individual developers exploring gpt-5-codex.

Real-World Use Cases for GPT-5-Codex API

Software teams employ gpt-5-codex for automating unit test generation. Provide function code, and the API creates comprehensive tests covering edge cases, improving code coverage.

In web development, it designs full-stack applications. Prompts specifying frameworks like React and Node.js yield integrated codebases with database schemas.

Moreover, data scientists use it to script machine learning pipelines. GPT-5-Codex generates TensorFlow or PyTorch models from descriptions, including data preprocessing and evaluation metrics.

Enterprises integrate it into CI/CD pipelines via API calls, where it reviews pull requests and suggests improvements. This automation reduces review times.

Educational platforms leverage gpt-5-codex for tutoring, explaining code concepts interactively. Students query algorithms, receiving step-by-step breakdowns.

In regulated industries such as finance, users must validate outputs against compliance standards. Beyond these domains, the API also supports game development, for example by writing scripts for Unity or Unreal Engine.

Best Practices for Optimizing GPT-5-Codex API Usage

Developers craft precise prompts to maximize efficiency. Include worked examples in the input (few-shot prompting) to guide the model toward the desired output.

Additionally, batch requests when possible to minimize API calls. Use system or developer messages (or the Responses API's instructions parameter) to set a role such as "You are a senior Python developer," which can improve response quality.
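A sketch of combining a role-setting instruction with few-shot examples in one request. It assumes the Responses API conventions of an `instructions` string plus an `input` list of role/content messages; the example pair and the helper name are hypothetical.

```python
def build_few_shot_request(instructions, examples, question, model="gpt-5-codex"):
    """Return keyword arguments for client.responses.create(**request)."""
    messages = []
    for request, ideal_answer in examples:
        # Each example becomes a user turn followed by the answer the model should imitate.
        messages.append({"role": "user", "content": request})
        messages.append({"role": "assistant", "content": ideal_answer})
    messages.append({"role": "user", "content": question})
    return {"model": model, "instructions": instructions, "input": messages}

request = build_few_shot_request(
    instructions="You are a senior Python developer. Reply with code only.",
    examples=[("Reverse a string s.", "def reverse(s: str) -> str:\n    return s[::-1]")],
    question="Return the unique items of a list, preserving order.",
)
```

One or two short, representative examples usually suffice; each extra example adds input tokens to every request.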

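Monitor usage dashboards to track spending and adjust strategies. Place repeated content, such as instructions and shared context, at the start of prompts so that OpenAI's automatic prompt caching (which applies to prompts of 1,024 tokens or more) can bill it at the discounted cached-input rate. For fully identical requests, a local cache avoids the API call entirely.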
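Prompt caching on OpenAI's side is automatic; a local cache is a separate, complementary idea that skips the API call entirely for identical requests. This in-memory sketch keys responses by a SHA-256 hash of the canonicalized request. `send` is a hypothetical callable wrapping your actual API call, and a production version would add persistence and expiry.

```python
import hashlib
import json

class ResponseCache:
    """In-memory cache so identical requests are sent to the API only once."""

    def __init__(self, send):
        self._send = send  # callable(params: dict) -> str, e.g. a wrapper around the SDK
        self._store = {}
        self.hits = 0

    @staticmethod
    def key(params):
        # sort_keys makes logically identical dicts serialize, and therefore hash, identically.
        canonical = json.dumps(params, sort_keys=True, ensure_ascii=False)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get(self, params):
        k = self.key(params)
        if k in self._store:
            self.hits += 1
        else:
            self._store[k] = self._send(params)
        return self._store[k]
```

Caching suits deterministic uses such as repeated documentation lookups; when you want varied suggestions, bypass it.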

Furthermore, combine gpt-5-codex with other models in hybrid workflows: use it for code, then GPT-5 for natural language tasks.

Security teams scan generated code for vulnerabilities using tools like Snyk. Always review outputs manually in critical applications.
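A lightweight pre-screen can catch obvious red flags before generated Python is run or reviewed. This sketch walks the syntax tree with Python's `ast` module and reports calls on a small, hypothetical deny-list. It is a first filter, not a replacement for a dedicated scanner such as Snyk, and it misses aliased imports like `from os import system`.

```python
import ast

# Hypothetical deny-lists; real scanners check far more patterns than these.
RISKY_CALLS = {"eval", "exec", "compile", "__import__"}
RISKY_MODULE_CALLS = {
    ("os", "system"),
    ("subprocess", "call"),
    ("subprocess", "run"),
    ("subprocess", "Popen"),
    ("pickle", "loads"),
}

def find_risky_calls(source):
    """Return sorted (line, name) pairs for deny-listed calls; raises SyntaxError on invalid code."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        if isinstance(func, ast.Name) and func.id in RISKY_CALLS:
            findings.append((node.lineno, func.id))
        elif (
            isinstance(func, ast.Attribute)
            and isinstance(func.value, ast.Name)
            and (func.value.id, func.attr) in RISKY_MODULE_CALLS
        ):
            findings.append((node.lineno, f"{func.value.id}.{func.attr}"))
    return sorted(findings)
```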

Apidog aids optimization by profiling API performance, identifying bottlenecks in integration.

Challenges and Limitations of GPT-5-Codex API

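Despite its strengths, gpt-5-codex occasionally hallucinates code, for example calling functions or library APIs that do not exist, which produces non-functional snippets. Users mitigate this by running and testing generated code before accepting it.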
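One practical verification step is to run the generated code against a few assertions in a separate process with a timeout. This sketch uses `subprocess` and a temporary file; the function name is hypothetical, and process isolation guards against crashes and infinite loops but is not a security sandbox.

```python
import os
import subprocess
import sys
import tempfile

def passes_checks(code, test_code, timeout=10.0):
    """Run generated code plus assertions in a separate Python process.

    Returns (passed, output). Only run code you would be willing to run yourself.
    """
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "candidate.py")
        with open(path, "w", encoding="utf-8") as f:
            f.write(code + "\n\n" + test_code + "\n")
        try:
            proc = subprocess.run(
                [sys.executable, path], capture_output=True, text=True, timeout=timeout
            )
        except subprocess.TimeoutExpired:
            return False, f"timed out after {timeout} seconds"
    # A non-zero exit code means an exception, such as a failed assert, escaped.
    return proc.returncode == 0, proc.stdout + proc.stderr
```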

The high output-token price rewards prompts that ask for concise answers, and long contexts consume input tokens quickly.

Moreover, the model lacks real-time internet access, relying on trained knowledge up to its cutoff. For current libraries, supplement with external data.

Ethical concerns arise around code ownership, since generated content may resemble existing repositories. Developers should check licenses and attribute appropriately.

Platform downtimes affect availability, though OpenRouter provides redundancy.

Conclusion

Future updates to gpt-5-codex may expand the context window, possibly to 1 million tokens, and add native tool integrations. Emerging competitors such as Anthropic's models challenge it, fostering innovation.

Additionally, advances in fine-tuning may allow custom versions for niche domains. The API is evolving toward autonomous agents that can handle entire projects. Developers can prepare by building skills in prompt engineering and in API management with tools like Apidog. GPT-5-Codex is changing how code gets written, making many development tasks faster, and as adoption grows, it will keep reshaping software development.