OpenAI's GPT-5 API gives developers access to its most capable model family for building intelligent applications. Whether you're creating advanced chatbots, automating complex workflows, or generating dynamic content, the GPT-5 API provides the tools to bring your ideas to life.

What Is GPT-5 and Why It Matters

GPT-5 is OpenAI's latest-generation model, released on August 7, 2025. It is a unified system with built-in reasoning that chooses between a fast response and deeper thinking depending on the complexity of the request. OpenAI reports that it outperforms previous models in writing, coding, health questions, and more, while significantly reducing hallucinations and sycophancy.

This model suits scenarios where expert-level intelligence and reliability make a difference.

To get started, you need an OpenAI account, a generated API key, and a development environment. Tools like Apidog simplify testing and debugging, making it easier to integrate the GPT-5 API into your projects. Let’s explore the prerequisites to ensure a smooth setup.

Prerequisites for Using the GPT-5 API

Before integrating the GPT-5 API, ensure you have the following:

- OpenAI Account and API Key: Sign up at OpenAI’s platform and generate an API key from the dashboard to authenticate requests.

- Development Environment: Set up an environment that can send HTTP requests, such as Python or Node.js, or use a command-line tool like cURL.

- Apidog Installation: Download Apidog to test and manage API calls efficiently. Its intuitive interface helps validate responses and debug issues.

- Basic API Knowledge: Understand HTTP methods (POST, GET) and JSON formatting for structuring requests.

With these in place, you’re ready to configure your environment for the GPT-5 API. Next, we’ll cover the setup process in detail.

Setting Up Your Environment for the GPT-5 API

To interact with the GPT-5 API, configure your development environment using Python, a popular choice due to its simplicity and robust OpenAI SDK. Follow these steps:

Step 1: Install the OpenAI SDK

Install the OpenAI Python library to simplify API interactions. Run the following command in your terminal:

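```bash
pip install --upgrade openai
```

This installs (or upgrades to) the latest version of the OpenAI SDK. GPT-5 options such as `verbosity` are only accepted by recent releases, so upgrade if you installed the SDK before GPT-5 launched.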

Step 2: Secure Your API Key

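Generate an API key from the OpenAI dashboard. Store it in an environment variable rather than in your source code to prevent accidental exposure: set it in your shell (for example, `export OPENAI_API_KEY="your-api-key-here"` on macOS or Linux), then read it in Python with the os module:

```python
import os

# Read the key from the environment; raises KeyError if it is not set.
api_key = os.environ["OPENAI_API_KEY"]
```

The OpenAI Python SDK also reads `OPENAI_API_KEY` automatically, so you rarely need to pass the key explicitly.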

Alternatively, keep the key in a .env file that is excluded from version control, and load it with a library like python-dotenv.
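python-dotenv handles .env files for you. To show what such a loader does, here is a minimal stdlib-only sketch that parses simple `KEY=VALUE` lines. The names `parse_env` and `load_env_file` are hypothetical, and the sketch deliberately skips features python-dotenv supports, such as variable expansion and multiline values.

```python
import os
from pathlib import Path


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        # Allow shell-style "export KEY=VALUE" lines.
        if key.startswith("export "):
            key = key[len("export "):].strip()
        # Strip one layer of matching single or double quotes around the value.
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key] = value
    return values


def load_env_file(path: str = ".env", override: bool = False) -> None:
    """Copy variables from a .env file into os.environ (existing values win by default)."""
    for key, value in parse_env(Path(path).read_text(encoding="utf-8")).items():
        if override or key not in os.environ:
            os.environ[key] = value
```

Call `load_env_file()` once at startup, before creating the OpenAI client, and add `.env` to `.gitignore`.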

Step 3: Set Up Apidog

Install Apidog to streamline testing. After downloading, point a new request at the Chat Completions endpoint (https://api.openai.com/v1/chat/completions) and add your API key to the Authorization header as `Bearer YOUR_API_KEY` for quick testing.
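Apidog builds this request for you, but it helps to see what goes over the wire. The sketch below uses only Python's standard library to assemble the same POST request (endpoint, `Authorization: Bearer` header, JSON body) without sending it. `build_chat_request` is a hypothetical helper name; passing its result to `urllib.request.urlopen` would actually call the API.

```python
import json
import urllib.request

CHAT_COMPLETIONS_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Assemble (but do not send) a Chat Completions POST request."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        CHAT_COMPLETIONS_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    "sk-test",
    {"model": "gpt-5", "messages": [{"role": "user", "content": "Hello, GPT-5!"}]},
)
print(request.get_method(), request.full_url)
# To send it for real: urllib.request.urlopen(request, timeout=60)
```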

Step 4: Test Connectivity

Send a simple test request to verify your setup. Here’s a Python example:

```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

response = client.chat.completions.create(
    model="gpt-5",
    messages=[{"role": "user", "content": "Hello, GPT-5!"}],
)

print(response.choices[0].message.content)
```

If successful, you’ll receive a response from GPT-5. Use Apidog to monitor the request and response for debugging. Now, let’s structure API requests for optimal performance.

Structuring GPT-5 API Requests

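The GPT-5 models are available through the `/v1/chat/completions` endpoint, which accepts JSON-formatted POST requests. A typical request includes the model, messages, and optional parameters such as `reasoning_effort` and `max_completion_tokens`. GPT-5 reasoning models reject a non-default `temperature` and the older `max_tokens` parameter, so the example uses their GPT-5 counterparts. Here's an example:

```python
from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(
    model="gpt-5",
    messages=[
        {"role": "system", "content": "You are a coding assistant."},
        {"role": "user", "content": "Write a Python function to calculate Fibonacci numbers."},
    ],
    reasoning_effort="low",  # less internal reasoning: faster and cheaper
    max_completion_tokens=2000,  # replaces max_tokens; the cap includes reasoning tokens
)

print(response.choices[0].message.content)
```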

Key Parameters

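- Model: Specify `gpt-5`, `gpt-5-mini`, or `gpt-5-nano` based on your needs.

- Messages: An array of message objects defining the conversation context.

- Temperature: Controls randomness (0.0–2.0) on non-reasoning models. GPT-5 reasoning models accept only the default value and return an error for anything else, so leave it out and steer output with `reasoning_effort` and `verbosity`.

- Max Completion Tokens: Use `max_completion_tokens` (GPT-5 does not accept `max_tokens`) to cap output length and manage costs. The cap includes hidden reasoning tokens, so leave enough room for the visible answer.

- Reasoning Effort: `reasoning_effort` (`minimal`, `low`, `medium`, `high`) controls how much the model reasons before answering; lower settings are faster and use fewer tokens.

- Verbosity: A new GPT-5 parameter (`low`, `medium`, `high`) that controls how detailed the response is.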
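Because GPT-5 rejects some parameters that older models accept, it can help to build request bodies in one place. The sketch below is a hypothetical helper (`build_gpt5_payload` is not part of any SDK). It assumes the accepted values are `minimal`/`low`/`medium`/`high` for `reasoning_effort` and `low`/`medium`/`high` for `verbosity`, and it never emits `temperature`, since GPT-5 reasoning models accept only its default.

```python
from __future__ import annotations

ALLOWED_MODELS = {"gpt-5", "gpt-5-mini", "gpt-5-nano"}
REASONING_EFFORTS = {"minimal", "low", "medium", "high"}
VERBOSITY_LEVELS = {"low", "medium", "high"}


def build_gpt5_payload(
    model: str,
    messages: list[dict],
    reasoning_effort: str | None = None,
    verbosity: str | None = None,
    max_completion_tokens: int | None = None,
) -> dict:
    """Build a Chat Completions request body, validating GPT-5-specific options."""
    if model not in ALLOWED_MODELS:
        raise ValueError(f"unknown model {model!r}; expected one of {sorted(ALLOWED_MODELS)}")
    if not messages:
        raise ValueError("messages must contain at least one message")
    payload: dict = {"model": model, "messages": messages}
    # Include optional fields only when set, so the API applies its defaults otherwise.
    if reasoning_effort is not None:
        if reasoning_effort not in REASONING_EFFORTS:
            raise ValueError(f"invalid reasoning_effort {reasoning_effort!r}")
        payload["reasoning_effort"] = reasoning_effort
    if verbosity is not None:
        if verbosity not in VERBOSITY_LEVELS:
            raise ValueError(f"invalid verbosity {verbosity!r}")
        payload["verbosity"] = verbosity
    if max_completion_tokens is not None:
        if max_completion_tokens <= 0:
            raise ValueError("max_completion_tokens must be positive")
        payload["max_completion_tokens"] = max_completion_tokens
    return payload
```

With a recent SDK you could pass the result straight through as `client.chat.completions.create(**payload)`, or send it as the JSON body in Apidog.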

Use Apidog to test different parameter combinations and analyze response structures. Next, we’ll explore how to handle API responses effectively.

Handling GPT-5 API Responses

The GPT-5 API returns JSON responses containing the model’s output, metadata, and usage details. A sample response looks like this:

```json
{
  "id": "chatcmpl-123",
  "object": "chat.completion",
  "created": 1694016000,
  "model": "gpt-5",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "Here's a Python function to calculate Fibonacci numbers:\n```python\ndef fibonacci(n):\n    if n <= 0:\n        return []\n    if n == 1:\n        return [0]\n    fib = [0, 1]\n    for i in range(2, n):\n        fib.append(fib[i-1] + fib[i-2])\n    return fib\n```"
      },
      "finish_reason": "stop"
    }
  ],
  "usage": {
    "prompt_tokens": 20,
    "completion_tokens": 80,
    "total_tokens": 100
  }
}
```

Parsing Responses

Extract the response content using Python:

```python
content = response.choices[0].message.content
print(content)
```

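Monitor token usage (`usage.total_tokens`) to track costs. Apidog's interface displays response data clearly, helping you spot issues like incomplete outputs, which show up as a `finish_reason` of `"length"`. If a request fails, the raw HTTP response contains an `error` object with a message and type; the Python SDK raises an exception (a subclass of `openai.APIError`) carrying the same details.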
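When you call the endpoint over raw HTTP, as Apidog does, you get the JSON shown above rather than SDK objects. Here is a minimal sketch for pulling out the fields discussed in this section, assuming the response layout from the sample; `summarize_completion` and `CompletionSummary` are hypothetical names. It treats a `finish_reason` of `"length"` as truncated output and surfaces the `error` object that failed requests return.

```python
from dataclasses import dataclass


@dataclass
class CompletionSummary:
    content: str
    finish_reason: str
    total_tokens: int

    @property
    def truncated(self) -> bool:
        # "length" means the token cap cut the answer off before the model finished.
        return self.finish_reason == "length"


def summarize_completion(response: dict) -> CompletionSummary:
    """Extract the reply text, stop reason, and token count from a raw response dict."""
    if "error" in response:
        error = response["error"]
        raise RuntimeError(f"API error ({error.get('type')}): {error.get('message')}")
    choice = response["choices"][0]
    return CompletionSummary(
        # Content can be empty or null if reasoning used up the whole token budget.
        content=choice["message"].get("content") or "",
        finish_reason=choice["finish_reason"],
        total_tokens=response["usage"]["total_tokens"],
    )
```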

Practical Use Cases for the GPT-5 API

The GPT-5 API’s versatility makes it ideal for various applications. Here are three practical use cases:

1. Code Generation

GPT-5 excels at generating functional code for complex tasks. For example, prompt it to create a web app:

```python
from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(
    model="gpt-5",
    messages=[
        {"role": "user", "content": "Create a single-page HTML app for a to-do list with JavaScript."}
    ],
)
```

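The response typically contains complete, working code, but review and run it yourself before relying on it. Apidog lets you inspect the raw response, including the fenced code inside the message content.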
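Generated code usually arrives wrapped in Markdown fences inside the message content, as in the sample response earlier. Before saving or running it, you can pull the fenced blocks out with a small regex. This is a hypothetical helper, and it assumes the model uses triple-backtick fences with an optional language tag on the opening line.

```python
import re

# Matches ```lang\n...``` blocks; the language tag is optional.
FENCE_PATTERN = re.compile(r"```([\w+-]*)\n(.*?)```", re.DOTALL)


def extract_code_blocks(content: str) -> list[tuple[str, str]]:
    """Return (language, code) pairs for every fenced block in a model reply."""
    return [
        (lang or "text", code.rstrip("\n"))
        for lang, code in FENCE_PATTERN.findall(content)
    ]
```

For the to-do app prompt, you could write the `html` block to a file and open it in a browser to check that it works.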

2. Content Creation

Automate blog post generation by providing a topic and guidelines:

```python
response = client.chat.completions.create(
    model="gpt-5",
    messages=[
        {"role": "user", "content": "Write a 500-word blog post about AI trends in 2025."}
    ],
)
```

Refine outputs with Apidog to ensure the content meets your style and length requirements.
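The model approximates a requested word count rather than hitting it exactly, so a quick programmatic check catches drafts that drift too far. This is a minimal sketch; the helper names are hypothetical and the 10% tolerance is an arbitrary assumption to tune for your publication.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words, a rough proxy for published length."""
    return len(text.split())


def within_word_budget(text: str, target: int, tolerance: float = 0.10) -> bool:
    """True if the text length is within +/- tolerance of the target word count."""
    count = word_count(text)
    return abs(count - target) <= target * tolerance
```

If the check fails, a follow-up prompt that states the actual count (for example, "Expand this 380-word draft to about 500 words") usually gets closer than repeating the original request.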

3. Data Analysis

Use GPT-5 to analyze datasets or generate insights:

```python
response = client.chat.completions.create(
    model="gpt-5",
    messages=[
        {"role": "user", "content": "Analyze this CSV data and summarize trends: [insert data]."}
    ],
)
```

Apidog helps validate the API’s response structure for large datasets.
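The "[insert data]" placeholder hides a practical question: the whole dataset is sent as input tokens, so large files cost more and can exceed the context window. One hedged approach, sketched below with a hypothetical helper, is to normalize the CSV with Python's csv module and cap the number of rows before building the prompt. The 200-row default is an arbitrary assumption; for big datasets you would typically pre-aggregate instead.

```python
import csv
import io


def build_analysis_prompt(csv_text: str, max_rows: int = 200) -> str:
    """Embed the header plus up to max_rows data rows of a CSV in an analysis prompt."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        raise ValueError("CSV is empty")
    header, data = rows[0], rows[1:]
    kept = data[:max_rows]
    # Re-serialize so quoting is consistent no matter how the input was formatted.
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(kept)
    note = f" (first {len(kept)} of {len(data)} rows)" if len(kept) < len(data) else ""
    return f"Analyze this CSV data{note} and summarize trends:\n\n{buffer.getvalue()}"
```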

Optimizing GPT-5 API Performance

To maximize efficiency and minimize costs, follow these optimization strategies:

- Use Specific Prompts: Craft clear, detailed prompts to reduce irrelevant outputs. For example, instead of “Write about AI,” use “Write a 300-word article on AI ethics in healthcare.”

- Leverage Variants: Choose `gpt-5-mini` ($0.25/1M input tokens, $2/1M output tokens) or `gpt-5-nano` ($0.05/1M input tokens, $0.40/1M output tokens) for cost-sensitive tasks. The full `gpt-5` model costs $1.25/1M input tokens and $10/1M output tokens.

- Monitor Token Usage: Track `usage` in responses to stay within budget. Refer to OpenAI's pricing page for details.

- Test with Apidog: Run small-scale tests to optimize prompts before scaling.

- Adjust Verbosity and Reasoning Effort: Set the `verbosity` and `reasoning_effort` parameters to balance response detail and token usage (reasoning tokens are billed as output tokens).
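Tracking `usage` across many calls is easier with a small accumulator. The class below is a hypothetical sketch that sums the token counts from each response's `usage` block and flags when a self-imposed token budget is exceeded; the budget is your own choice, not an API setting.

```python
from dataclasses import dataclass


@dataclass
class UsageTracker:
    token_budget: int
    prompt_tokens: int = 0
    completion_tokens: int = 0

    def record(self, usage: dict) -> None:
        """Add the token counts from one response's usage block."""
        self.prompt_tokens += usage.get("prompt_tokens", 0)
        # For GPT-5, completion_tokens already includes hidden reasoning tokens.
        self.completion_tokens += usage.get("completion_tokens", 0)

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    @property
    def over_budget(self) -> bool:
        return self.total_tokens > self.token_budget
```

SDK response objects are Pydantic models, so `response.usage.model_dump()` should give a dict that `record` accepts.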

By optimizing prompts and selecting the right model variant, you can achieve high performance at lower costs. Now, let’s address common issues you might encounter.

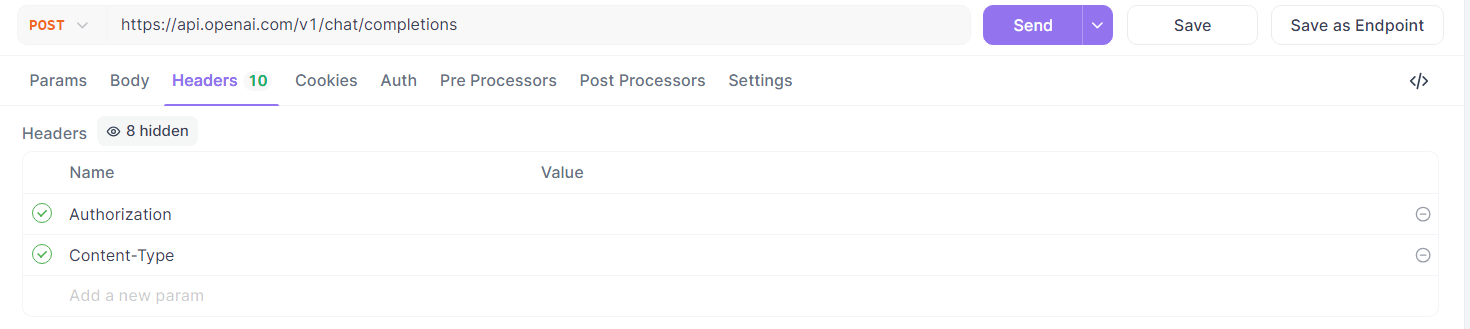

Troubleshooting Common GPT-5 API Issues

Despite its robustness, the GPT-5 API may present challenges. Here are common issues and solutions:

- Authentication Errors: A 401 response means the API key is missing or invalid. Make sure the key is active and sent in the `Authorization` header as `Bearer YOUR_API_KEY`, and verify the header in Apidog's settings.

- Rate Limits: Exceeding your rate limits or quota returns HTTP 429 errors. Monitor your limits in the OpenAI dashboard and retry with exponential backoff. API limits rise with your usage tier as your account spends more; a ChatGPT subscription such as Pro ($200/month) does not change them.

- Unexpected Outputs: Refine prompts for clarity and test variations in Apidog to identify optimal phrasing.

- High Costs: Use `gpt-5-mini` or `gpt-5-nano` for less complex tasks to cut per-token costs.
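For rate-limit (HTTP 429) errors, a common remedy is retrying with exponential backoff and jitter. The official Python SDK already retries some errors a couple of times by default (configurable with `max_retries` on the client), so this matters mostly for raw HTTP calls or longer retry windows. The sketch below is generic and stdlib-only: `call_with_backoff` and `RetryableError` are hypothetical names, and in real code you would map the SDK's rate-limit exception, or a 429 status, onto `RetryableError`.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Raised by the wrapped call for transient failures such as HTTP 429."""


def call_with_backoff(
    fn: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying RetryableError with exponentially growing, jittered delays."""
    if max_retries < 0:
        raise ValueError("max_retries must be non-negative")
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RetryableError:
            if attempt == max_retries:
                raise  # out of retries: surface the last error
            # Delay ceiling doubles each attempt (1s, 2s, 4s, ...) up to max_delay;
            # "full jitter" picks a random wait below it to spread out retries.
            delay = min(max_delay, base_delay * 2**attempt)
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable")
```

The injectable `sleep` argument keeps the function easy to test without real waiting.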

Apidog’s debugging tools help identify issues quickly by visualizing request and response data. Next, we’ll explore how Apidog enhances GPT-5 API integration.

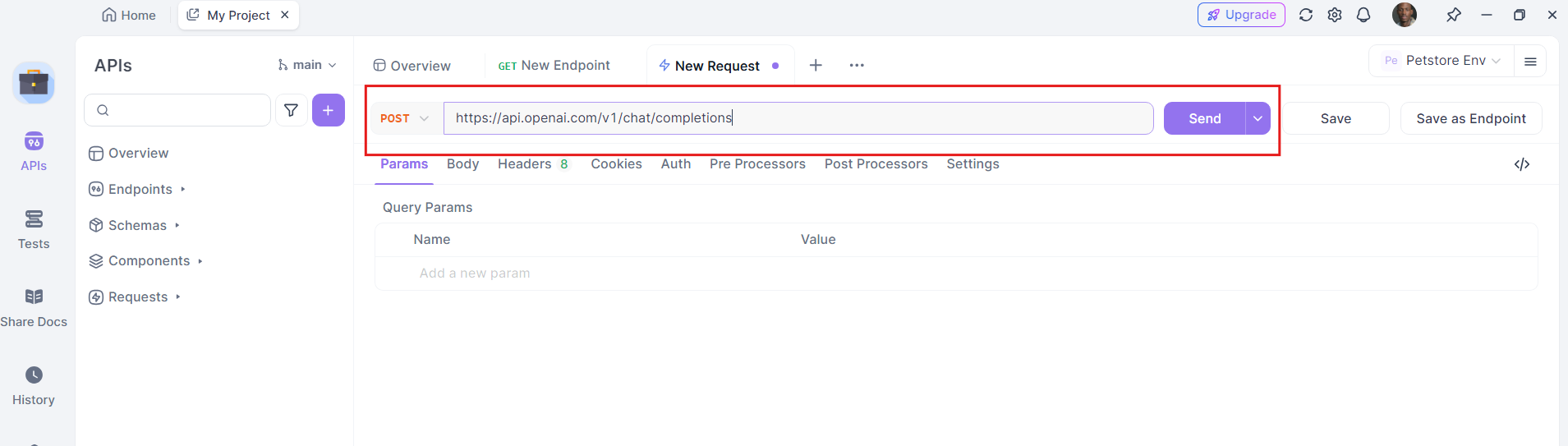

Quick Setup: Using the GPT-5 API with Apidog

Get started smoothly by following these steps:

Install Apidog: Download and launch—it’s free.

Set Up a New API Request:

Method: POST

URL: https://api.openai.com/v1/chat/completions

Headers:

Authorization: Bearer YOUR_API_KEY

Content-Type: application/json

Craft Your Payload:

```json
{
  "model": "gpt-5",
  "messages": [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Explain the GPT-5 pricing pros and cons clearly."}
  ],
  "reasoning_effort": "low",
  "verbosity": "medium"
}
```

Adjust "model" to "gpt-5-mini" or "gpt-5-nano" as needed. Use "reasoning_effort" to control how much the model reasons before answering and "verbosity" to control how detailed the answer is. (OpenAI)

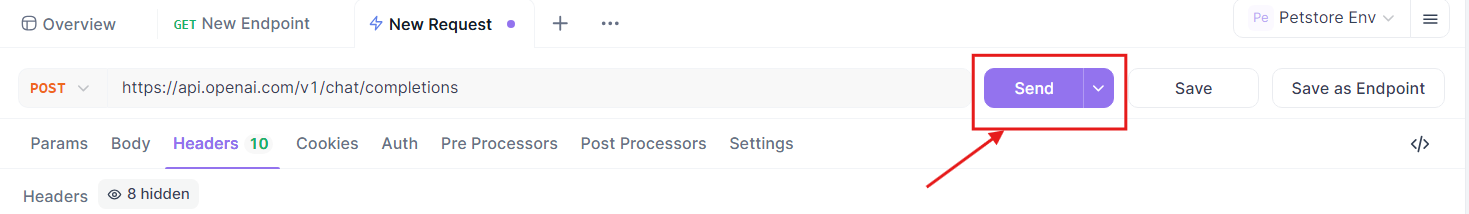

Run and Validate: Send the request via Apidog. Inspect the completion response directly inside Apidog.

Iterate Gradually: Start with gpt-5-mini and move to the full GPT-5 model as cost and task complexity justify it.
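If you want the "start small, scale up" idea applied per request rather than per project, one option is a model cascade. This is a different technique from the manual iteration described here: the sketch tries the cheapest model first and escalates only when a check on the answer fails. `answer_with_escalation`, `call_model`, and `is_acceptable` are hypothetical. You would supply `call_model` as a wrapper around `client.chat.completions.create` and `is_acceptable` as your own validation.

```python
from typing import Callable, Sequence

DEFAULT_LADDER = ("gpt-5-nano", "gpt-5-mini", "gpt-5")


def answer_with_escalation(
    prompt: str,
    call_model: Callable[[str, str], str],
    is_acceptable: Callable[[str], bool],
    models: Sequence[str] = DEFAULT_LADDER,
) -> tuple[str, str]:
    """Try models from cheapest to most capable; return (model, answer) for the first acceptable answer."""
    if not models:
        raise ValueError("models must not be empty")
    answer = ""
    for model in models:
        answer = call_model(model, prompt)
        if is_acceptable(answer):
            return model, answer
    # Nothing passed the check: fall back to the most capable model's answer.
    return models[-1], answer
```

Every rejected attempt is still billed, so a cascade only saves money when the cheaper models succeed most of the time.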

Best Practices for Using the GPT-5 API

To ensure optimal results, adopt these best practices:

- Secure API Keys: Store keys in environment variables or a vault solution.

- Monitor Costs: Regularly check token usage in the OpenAI dashboard and optimize prompts.

- Iterative Testing: Use Apidog to test prompts incrementally before deploying at scale.

- Stay Updated: Follow OpenAI’s blog for API updates and new features.

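- Understand Safe Completions: GPT-5 is trained with OpenAI's "safe completions" approach, which aims to give helpful but bounded answers to sensitive queries instead of outright refusals. It is built into the model rather than exposed as an API parameter, so keep your own safeguards for sensitive use cases.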

By following these practices, you’ll maximize the GPT-5 API’s potential while maintaining security and efficiency.

API Pricing You Should Know

OpenAI offers GPT-5 in three API versions, gpt-5, gpt-5-mini, and gpt-5-nano, which let you balance performance, speed, and cost:

| Model | Input Tokens (USD per 1M) | Output Tokens (USD per 1M) |
|---|---|---|
| gpt-5 | $1.25 | $10.00 |
| gpt-5-mini | $0.25 | $2.00 |
| gpt-5-nano | $0.05 | $0.40 |

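gpt-5-mini in particular is priced competitively against models such as Gemini 2.5 Flash, but compare current rates for your own workload. Note that the $200/month Pro plan is a ChatGPT subscription with unlimited GPT-5 use in the ChatGPT app; it does not cover API calls, which are billed per token. Always check OpenAI's pricing page for updates.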
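To turn the table into numbers for your own workload, multiply each token count by its per-million rate. The sketch below hard-codes the prices from the table, which will go stale, so treat them as an assumption to refresh from OpenAI's pricing page. It also ignores discounts such as cached-input pricing. `estimate_cost` is a hypothetical helper, and `Decimal` avoids floating-point rounding in currency math.

```python
from decimal import Decimal

# USD per 1M tokens (input, output), copied from the table above; verify before relying on them.
PRICES_PER_MILLION = {
    "gpt-5": (Decimal("1.25"), Decimal("10.00")),
    "gpt-5-mini": (Decimal("0.25"), Decimal("2.00")),
    "gpt-5-nano": (Decimal("0.05"), Decimal("0.40")),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimated USD cost of one request; output_tokens should include reasoning tokens."""
    input_rate, output_rate = PRICES_PER_MILLION[model]
    million = Decimal(1_000_000)
    return (input_tokens * input_rate + output_tokens * output_rate) / million


# The sample response earlier used 20 prompt tokens and 80 completion tokens.
for name in PRICES_PER_MILLION:
    print(name, estimate_cost(name, 20, 80))
```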

Conclusion

You now have a straightforward guide to using the GPT-5 API, covering setup, request structure, response handling, pricing, and how Apidog streamlines testing. Apidog is free to download, so you can start experimenting right away.

Continue tweaking reasoning_effort, verbosity, and model size to match your project’s needs. Combine that with Apidog’s clean design for rapid iteration.