In the world of software engineering, the ability to discover and optimize algorithms automatically is a game changer for backend teams, QA engineers, and API developers. Google DeepMind’s AlphaEvolve represents a major step forward, leveraging the Gemini large language model (LLM) family within an evolutionary framework to autonomously generate, test, and refine code for complex problems in mathematics, computer science, and engineering.

This article breaks down the technical architecture of AlphaEvolve, how it uses Gemini LLMs to drive code evolution, and what this means for engineering teams focused on performance, scalability, and automation. We’ll also compare AlphaEvolve to previous systems and discuss its practical implications for API-focused teams aiming to streamline their workflows.

If you’re searching for tools that help automate documentation, optimize workflows, and replace legacy solutions like Postman, Apidog delivers with integrated API testing, collaboration, and beautiful API documentation — all at a more affordable price.

Boost your team’s productivity with an all-in-one platform designed for developers.

What Is AlphaEvolve?

AlphaEvolve is an automated system for the discovery and optimization of algorithms. Unlike traditional AI code completion or code review tools, AlphaEvolve runs a fully automated loop: it mutates code, evaluates each variant against well-defined criteria, and carries the best solutions forward into the next round, without requiring manual intervention at every step.

Key Use Cases for Engineering Teams

- Optimizing compute-intensive kernels (e.g., matrix multiplication in ML workloads)

- Improving job scheduling heuristics for large-scale infrastructure

- Optimizing hardware circuit designs (e.g., Verilog for TPU arithmetic circuits)

- Tackling open mathematical problems with combinatorial complexity

How AlphaEvolve Works: Technical Overview

1. Defining the Problem

Every AlphaEvolve run starts with a clear, machine-testable setup:

- Baseline Program: The starting point, usually in Python, C++, Verilog, or JAX.

- Evaluation Function: Automated scripts or functions that score each code variant for correctness and performance (speed, memory, energy, output quality, etc.).

- Target Regions: Specific code sections that AlphaEvolve is allowed to modify.

Why this matters: Engineering teams can frame optimization tasks in a reproducible way, ensuring that improvements are measurable and aligned with real-world goals.
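
To make the three ingredients concrete, here is a minimal Python sketch of a problem setup, assuming a toy matrix-multiplication task. The baseline program, the `# EVOLVE-BLOCK-START`/`# EVOLVE-BLOCK-END` marker comments, and the `target_region` and `evaluate` helpers are hypothetical illustrations, not AlphaEvolve's actual interface.

```python
import time

# Hypothetical baseline program. The two marker comments delimit the target
# region: the only lines an evolutionary loop would be allowed to rewrite.
BASELINE = '''
def matmul(a, b):
    n, m, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(n)]
    # EVOLVE-BLOCK-START
    for i in range(n):
        for j in range(p):
            for k in range(m):
                c[i][j] += a[i][k] * b[k][j]
    # EVOLVE-BLOCK-END
    return c
'''

START, END = "# EVOLVE-BLOCK-START", "# EVOLVE-BLOCK-END"


def target_region(source: str) -> str:
    """Return the code between the start and end markers."""
    return source[source.index(START) + len(START):source.index(END)]


def evaluate(source: str) -> dict:
    """Score a variant: it must be correct before its speed counts."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # in practice, run untrusted variants in a sandbox
        matmul = namespace["matmul"]
        correct = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]) == [[19, 22], [43, 50]]
    except Exception:
        correct = False
    if not correct:
        return {"correct": False, "seconds": float("inf")}
    x = [[float(i + j) for j in range(60)] for i in range(60)]
    started = time.perf_counter()
    matmul(x, x)  # timing run on a larger, hypothetical benchmark input
    return {"correct": True, "seconds": time.perf_counter() - started}
```

An incorrect or crashing variant gets `float("inf")` seconds, so it can never outrank a correct one, which mirrors the "correctness first, then performance" ordering described above.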

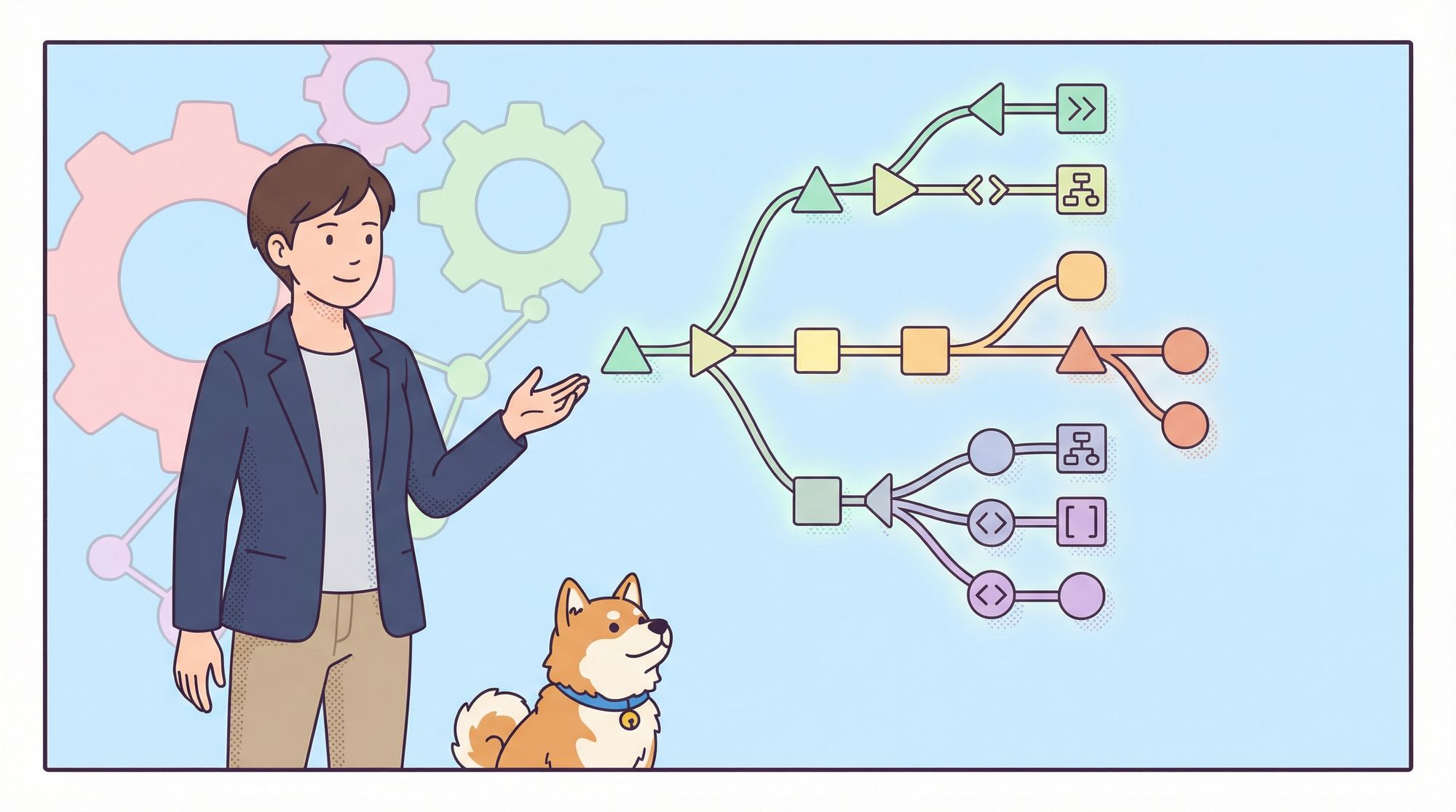

2. Code Evolution via LLM-Guided Mutation

AlphaEvolve maintains a program database of all generated and evaluated code variants. Its workflow includes:

- Prompt Sampling: Selection of “parent” programs from the database, either top performers or variants that add diversity, to include in the prompt as context.

- LLM-Driven Mutation:

  - Gemini Flash generates a wide range of quick, diverse code mutations.

  - Gemini Pro refines promising candidates with deeper reasoning and more complex changes.

- Diff-Based Edits: Mutations are often incremental code “diffs” rather than full rewrites, allowing for focused, controlled exploration.
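
A diff-based edit can be applied mechanically once the model's output is parsed. The sketch below assumes a SEARCH/REPLACE block format in the style of merge-conflict markers, and uses hypothetical `# EVOLVE-BLOCK-START`/`# EVOLVE-BLOCK-END` markers to reject any edit that falls outside the target region. The format and the `apply_diff` name are illustrative assumptions.

```python
import re

# Hypothetical diff format: one or more SEARCH/REPLACE blocks.
DIFF_BLOCK = re.compile(
    r"<<<<<<< SEARCH\n(.*?)\n=======\n(.*?)\n>>>>>>> REPLACE", re.DOTALL
)
START, END = "# EVOLVE-BLOCK-START", "# EVOLVE-BLOCK-END"


def apply_diff(source: str, diff: str) -> str:
    """Apply each SEARCH/REPLACE block, but only inside the target region."""
    lo = source.index(START) + len(START)  # region starts after the start marker
    hi = source.index(END)                 # and ends before the end marker
    head, region, tail = source[:lo], source[lo:hi], source[hi:]
    blocks = DIFF_BLOCK.findall(diff)
    if not blocks:
        raise ValueError("diff contains no SEARCH/REPLACE blocks")
    for search, replace in blocks:
        # Require a unique match so the edit is unambiguous and stays in bounds.
        if region.count(search) != 1:
            raise ValueError(f"search text must occur exactly once: {search!r}")
        region = region.replace(search, replace)
    return head + region + tail


program = "x = 0\n# EVOLVE-BLOCK-START\ny = 1\n# EVOLVE-BLOCK-END\nz = 2\n"
diff = "<<<<<<< SEARCH\ny = 1\n=======\ny = 42\n>>>>>>> REPLACE"
print(apply_diff(program, diff))
```

Rejecting ambiguous or out-of-region matches keeps each mutation small and auditable, which is the main appeal of diffs over full rewrites.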

Practical example: For optimizing a matrix multiplication kernel, the LLM might suggest a new way to tile the computation or reorder loops, based on both prior variants and prompt context.
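
The tiling and loop-reordering idea can be illustrated in plain Python. Here `matmul_tiled` is a hand-written example of the kind of variant an LLM might propose, not an actual AlphaEvolve output: it visits the matrices in `tile`-sized blocks and switches to i-k-j loop order so the innermost loop walks along rows. Interpreted Python hides most of the cache effects that make tiling worthwhile, so treat this as an illustration of the transformation rather than a benchmark.

```python
def matmul_naive(a, b):
    """Reference i-j-k triple loop."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            for k in range(m):
                c[i][j] += a[i][k] * b[k][j]
    return c


def matmul_tiled(a, b, tile=32):
    """Same result, computed block by block in i-k-j order."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(n)]
    for i0 in range(0, n, tile):
        for k0 in range(0, m, tile):
            for j0 in range(0, p, tile):
                for i in range(i0, min(i0 + tile, n)):
                    row_c = c[i]
                    for k in range(k0, min(k0 + tile, m)):
                        a_ik, row_b = a[i][k], b[k]
                        # Innermost loop walks contiguous rows of b and c.
                        for j in range(j0, min(j0 + tile, p)):
                            row_c[j] += a_ik * row_b[j]
    return c
```

An evaluator would first check `matmul_tiled(a, b) == matmul_naive(a, b)` on test inputs (including sizes that are not multiples of `tile`) and only then time the variant.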

3. Automated Evaluation and Selection

Every “child” code variant is:

- Compiled (if needed) and tested for correctness (using test suites, property-based checks, or formal verification).

- Profiled for performance on key metrics (speed, memory, energy, etc.).

- Ranked using multi-objective scoring, supporting trade-offs between, for example, speed and accuracy.
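
One common way to rank variants on several metrics without collapsing them into a single number is Pareto dominance. The article does not specify AlphaEvolve's exact scoring rule, so treat this as one plausible scheme; the metric names and values are hypothetical, and every metric is assumed to be oriented so that higher is better.

```python
from typing import Dict, List

Scores = Dict[str, float]  # higher is better for every metric


def dominates(x: Scores, y: Scores) -> bool:
    """x dominates y if it is at least as good on every metric and better on one."""
    return all(x[k] >= y[k] for k in y) and any(x[k] > y[k] for k in y)


def pareto_front(candidates: Dict[str, Scores]) -> List[str]:
    """Names of candidates that no other candidate dominates."""
    return [
        name
        for name, s in candidates.items()
        if not any(dominates(t, s) for other, t in candidates.items() if other != name)
    ]


variants = {
    "baseline": {"speed": 1.0, "accuracy": 0.99},
    "fast": {"speed": 1.8, "accuracy": 0.97},
    "accurate": {"speed": 1.1, "accuracy": 0.995},
    "worse": {"speed": 0.9, "accuracy": 0.98},
}
print(pareto_front(variants))  # ['fast', 'accurate']
```

Both survivors represent a different speed/accuracy trade-off, so neither is discarded; the baseline and "worse" variants are each beaten on every metric by some other candidate.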

The evolutionary controller then:

- Favors high performers for retention.

- Maintains diversity to avoid getting stuck in local optima (e.g., with strategies inspired by MAP-Elites and island-based population models).

- Updates the program database for the next generation.
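
MAP-Elites preserves diversity by sorting candidates into cells according to descriptive features and keeping only the best program per cell, so a weaker but different program is not crowded out by one strong lineage. A minimal archive might look like this; the `features` descriptor (line-count bucket and number of `for` loops) is a hypothetical choice, and this is not AlphaEvolve's actual database design.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

Cell = Tuple[int, ...]


@dataclass
class MapElitesArchive:
    """Keeps the best-scoring program found so far in each cell of a feature grid."""

    describe: Callable[[str], Cell]  # maps a program to its feature-grid cell
    elites: Dict[Cell, Tuple[float, str]] = field(default_factory=dict)

    def add(self, program: str, score: float) -> bool:
        """Insert the program if its cell is empty or it beats the current elite."""
        cell = self.describe(program)
        current = self.elites.get(cell)
        if current is None or score > current[0]:
            self.elites[cell] = (score, program)
            return True
        return False

    def best(self) -> Tuple[float, str]:
        """Highest-scoring elite across all cells."""
        return max(self.elites.values())


def features(program: str) -> Cell:
    """Hypothetical descriptor: (line-count bucket, number of for-loops)."""
    return (len(program.splitlines()) // 10, program.count("for "))
```

For example, with `MapElitesArchive(describe=features)`, a low-scoring `while` loop program is still kept because it occupies a different cell from the best `for` loop program, giving later generations a distinct parent to mutate.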

This loop runs asynchronously at scale across distributed infrastructure, converging on progressively better solutions over many generations.
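
The generate, evaluate, and select cycle can be shown end to end on a toy problem. In this sketch, `mutate` is a random stand-in for the LLM call (it only nudges numeric constants), and the objective, fitting `3 * x + 2`, is a hypothetical example chosen so the loop finishes in well under a second.

```python
import random
import re
from typing import List, Tuple

POINTS = range(-5, 6)


def target(x: float) -> float:
    """Hypothetical behavior the evaluator rewards."""
    return 3 * x + 2


def evaluate(source: str) -> float:
    """Higher is better: negative squared error of the program's f against target."""
    namespace: dict = {}
    exec(source, namespace)
    return -sum((namespace["f"](x) - target(x)) ** 2 for x in POINTS)


def mutate(source: str, rng: random.Random) -> str:
    """Stand-in for an LLM call: nudge one numeric constant in the source."""
    match = rng.choice(list(re.finditer(r"-?\d+\.\d+", source)))
    new_value = float(match.group()) + rng.uniform(-1.0, 1.0)
    return source[: match.start()] + f"{new_value:.3f}" + source[match.end():]


def evolve(seed: str, generations: int = 200, keep: int = 8) -> Tuple[float, str]:
    rng = random.Random(0)
    population: List[Tuple[float, str]] = [(evaluate(seed), seed)]
    for _ in range(generations):
        parent = rng.choice(population)[1]  # sample a parent program
        child = mutate(parent, rng)  # propose a variant
        population.append((evaluate(child), child))  # score it
        population.sort(reverse=True)  # best score first
        del population[keep:]  # retain only the top candidates
    return population[0]


SEED = "def f(x):\n    return 1.000 * x + 0.000\n"
best_score, best_program = evolve(SEED)
print(evaluate(SEED), best_score)  # compare the seed's score with the best found
```

Swapping `mutate` for a model call that returns diffs, and the toy `evaluate` for real tests and profiling, gives the overall shape of the loop described above; the real system also runs many of these steps in parallel.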

4. The Role of Gemini LLMs

Unlike older genetic programming systems that rely on random mutations, AlphaEvolve leverages Gemini LLMs for:

- Contextual, syntax-aware code changes based on prompts containing code, feedback, and problem description.

- Creative solutions: LLMs can propose algorithmic ideas beyond incremental tweaks.

- Efficient exploration: Diff-based edits allow for rapid iteration without starting from scratch.

- Code refinement: Gemini Pro improves code clarity and efficiency, not just correctness.

For engineering teams, this power translates to faster discovery of high-quality algorithms and optimizations that would otherwise require extensive manual tuning.
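
The prompts that drive these changes can be sketched as plain string assembly. In this hypothetical `build_prompt` helper, the section headings, wording, and the instruction to reply in SEARCH/REPLACE blocks are assumptions for illustration; the call that sends the prompt to a Gemini model is deliberately left out rather than guessed.

```python
from typing import Dict, List, Tuple


def build_prompt(task: str, parents: List[Tuple[str, Dict[str, object]]], feedback: str) -> str:
    """Assemble a mutation prompt from the task, scored parents, and evaluator feedback."""
    sections = [f"Task:\n{task}\n"]
    for i, (code, scores) in enumerate(parents, start=1):
        # Show each parent's metrics so the model can see what worked.
        metrics = ", ".join(f"{name}={value}" for name, value in sorted(scores.items()))
        sections.append(f"Parent program {i} ({metrics}):\n{code}\n")
    sections.append(f"Evaluator feedback:\n{feedback}\n")
    sections.append(
        "Improve the code between the EVOLVE-BLOCK markers. "
        "Reply only with SEARCH/REPLACE blocks."
    )
    return "\n".join(sections)


print(build_prompt(
    "Make matmul faster without changing its results.",
    [("for i in range(n): ...", {"correct": True, "seconds": 0.42})],
    "Inner loop strides across rows of b.",
))
```

Including scores and feedback alongside the code is what lets the model make contextual edits rather than blind mutations.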

Real-World Achievements with AlphaEvolve

AlphaEvolve has already outperformed human experts and traditional methods in several domains:

- Matrix Multiplication:

  - Discovered a method for multiplying 4x4 complex-valued matrices using only 48 scalar multiplications (previous best: 49, obtained by applying Strassen's 1969 algorithm recursively), breaking a record that had stood for decades.

  - Impact: Even small improvements in kernel efficiency can lead to significant gains at massive scale (e.g., deep learning training workloads).

- Data Center Job Scheduling:

  - Developed a new heuristic for Google’s Borg system that recovers, on average, 0.7% of Google’s worldwide compute resources, equivalent to thousands of servers.

  - Impact: Direct cost and energy savings for large-scale infrastructure teams.

- AI Model Training Acceleration:

  - Optimized how a Pallas matrix-multiplication kernel used in Gemini training splits its work into tiles, speeding up that kernel by 23% and reducing Gemini’s overall training time by 1%.

  - Impact: For multi-million-dollar training runs, a 1% reduction in training time translates into substantial compute and cost savings.

- Hardware Design:

  - Proposed Verilog changes that removed unnecessary bits from a TPU arithmetic circuit for matrix multiplication, simplifying the hardware while still passing verification of functional correctness.

  - Impact: More efficient chips and lower energy use.

- Mathematical Discovery:

  - Applied to more than 50 open mathematical problems, it rediscovered state-of-the-art solutions in roughly 75% of cases and improved on the best known solutions in about 20%, including a new lower bound for the kissing number problem in 11 dimensions (593 spheres, up from 592).
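
To put the matrix-multiplication record in perspective, count the scalar multiplications needed for a 4x4 product. The schoolbook method needs one per (row, column, inner index) triple. Strassen's algorithm multiplies 2x2 block matrices with 7 products instead of 8, and a 4x4 matrix can be treated as a 2x2 grid of 2x2 blocks, so applying it at both levels gives 7 times 7:

```latex
\begin{aligned}
\text{schoolbook:} \quad & 4 \cdot 4 \cdot 4 = 64 \\
\text{Strassen, applied recursively:} \quad & 7 \cdot 7 = 49 \\
\text{AlphaEvolve (complex-valued entries):} \quad & 48
\end{aligned}
```

Saving a single multiplication looks minor, but the saving compounds when a 4x4 scheme is itself applied recursively to large block matrices.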

Why This Matters for API and Backend Teams

Modern API development and backend engineering increasingly depend on efficient algorithms—whether for data processing, scheduling, or even hardware-aware optimization (e.g., for cloud-based ML inference). Tools like AlphaEvolve hint at a future where automated code evolution is part of the engineering workflow.

For API teams, integrating robust automation—from test generation to performance tuning—is essential. Apidog answers this need by offering:

- API documentation generation that is both automated and human-readable

- Seamless integration of testing, mocking, and collaboration

- A unified platform designed for developer productivity

- Cost-effective replacement for legacy tools like Postman

How AlphaEvolve Compares to Earlier Systems

- AlphaTensor: Focused specifically on matrix multiplication, using reinforcement learning to search for tensor decompositions framed as a single-player game; AlphaEvolve is broader, operating on arbitrary code in multiple languages.

- FunSearch: Evolved a single, short Python function; AlphaEvolve evolves entire code files in many languages, uses diff-based LLM mutation, and targets infrastructure optimization as well as mathematics.

AlphaEvolve stands out for its generality, LLM-guided mutation process, and direct operation on source code.

Technical Challenges and Future Directions

Current Limitations

- Sample Inefficiency: Evolutionary search can require massive numbers of evaluations; increasing efficiency remains a priority.

- Evaluator Design: Building automated, robust evaluators for complex or subjective tasks is still a bottleneck.

- Scalability: Evolving very large, interdependent codebases (e.g., OS kernels) remains challenging.

- Generalization: Capturing and transferring the “knowledge” gained in one optimization run to improve LLMs for future tasks is an open research area.

- Ambiguity: AlphaEvolve excels when objectives are clear and machine-testable; handling ambiguous or subjective requirements is out of scope for now.

Promising Research Directions

- More sophisticated evolutionary strategies (co-evolution, adaptive mutations)

- Enhanced LLM prompting and interactive refinement

- Automated evaluator generation from high-level specs

- Closer integration with formal verification tools

- Broader accessibility for domain experts via improved tools and interfaces

The Bottom Line: AI-Driven Optimization in Modern Engineering

AlphaEvolve’s architecture—combining LLMs, evolutionary algorithms, and automated evaluation—marks a new era of AI-driven algorithm discovery. For API, backend, and infrastructure teams, this approach offers a glimpse into a future where software and hardware optimizations are faster, more reliable, and increasingly automated.

Ready to bring automation and collaboration to your API lifecycle? Try Apidog to streamline your development, testing, and documentation workflows.