Z.ai's GLM-4.5 is an open-source large language model that challenges the dominance of proprietary solutions, matching or approaching leading closed models on reasoning, coding, and agentic benchmarks.

Developers selecting AI models for production face an increasingly complex decision, and the need for sophisticated reasoning, reliable coding assistance, and robust agentic capabilities has never been greater. GLM-4.5 addresses these requirements while keeping the flexibility and transparency that open-source development demands.

Understanding GLM-4.5's Revolutionary Architecture

GLM-4.5 uses a Mixture-of-Experts (MoE) architecture with 355 billion total parameters, of which 32 billion are active for any given token. Because a router sends each token through only a small subset of experts, the model keeps the capacity of a very large network while paying a per-token compute cost closer to that of a much smaller dense model.
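To make the routing idea concrete, here is a toy MoE layer in plain Python. It is a minimal sketch of generic top-k gating, not GLM-4.5's implementation: the four experts, the two-dimensional inputs, the gate weights, and `k=2` are all hypothetical values chosen for readability. The key point is that only the `k` selected experts run for a given input, which is how GLM-4.5 touches roughly 32/355 ≈ 9% of its parameters per token.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, experts, gate_weights, k=2):
    """Route vector x to the top-k experts and mix their outputs.

    experts: list of callables, each mapping a vector to a vector.
    gate_weights: one weight vector per expert; its dot product with x
    is that expert's router logit.
    """
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize gate scores over the selected experts only.
    probs = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # only the k selected experts are evaluated
        out = [o + p * y_j for o, y_j in zip(out, y)]
    return out, top

# Hypothetical experts: each one simply scales its input by a constant.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[0.1, 0.0], [0.5, 0.0], [2.0, 0.0], [1.0, 0.0]]
out, top = moe_forward([1.0, 0.0], experts, gate_weights, k=2)
print(top, out)
```

Real MoE layers add details such as load-balancing losses and batched expert dispatch, but the compute saving comes from the same selection step shown here.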

GLM-4.5 is also a hybrid reasoning model: it offers a thinking mode for complex reasoning and tool use and a non-thinking mode for immediate responses, which helps it behave stably during long-context, multi-turn interactions. The design prioritizes practical deployment while maintaining state-of-the-art performance across diverse application domains.
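In practice, hybrid reasoning is something you choose per request. The sketch below builds (but does not send) a chat request with the thinking mode switched on or off. The endpoint URL, the model name `glm-4.5`, and the `thinking` field shape are assumptions based on Z.ai's public API conventions; verify them against the current documentation before use. `build_chat_request` is a hypothetical helper.

```python
import json

# Assumed endpoint and field names; check Z.ai's current API docs.
API_URL = "https://api.z.ai/api/paas/v4/chat/completions"

def build_chat_request(api_key, messages, thinking=True, model="glm-4.5"):
    """Return (url, headers, body) for a chat request without sending it."""
    payload = {
        "model": model,
        "messages": messages,
        # Assumed hybrid-reasoning toggle: "enabled" for multi-step reasoning
        # and tool use, "disabled" for fast, direct answers.
        "thinking": {"type": "enabled" if thinking else "disabled"},
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    return API_URL, headers, json.dumps(payload).encode("utf-8")

url, headers, body = build_chat_request(
    "YOUR_API_KEY",
    [{"role": "user", "content": "Summarize the trade-offs of MoE models."}],
    thinking=False,
)
```

The returned pieces can be passed to any HTTP client, for example `urllib.request.Request(url, data=body, headers=headers, method="POST")`. Disabling thinking for simple lookups keeps latency and token usage down, while enabling it for harder tasks trades speed for more deliberate answers.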

Beyond the MoE layers, the architecture refines the standard transformer design, including its attention layers and the way parameters are distributed across the network. These efficiency gains translate directly into lower serving costs in production deployments.

Performance Benchmarks That Redefine Excellence

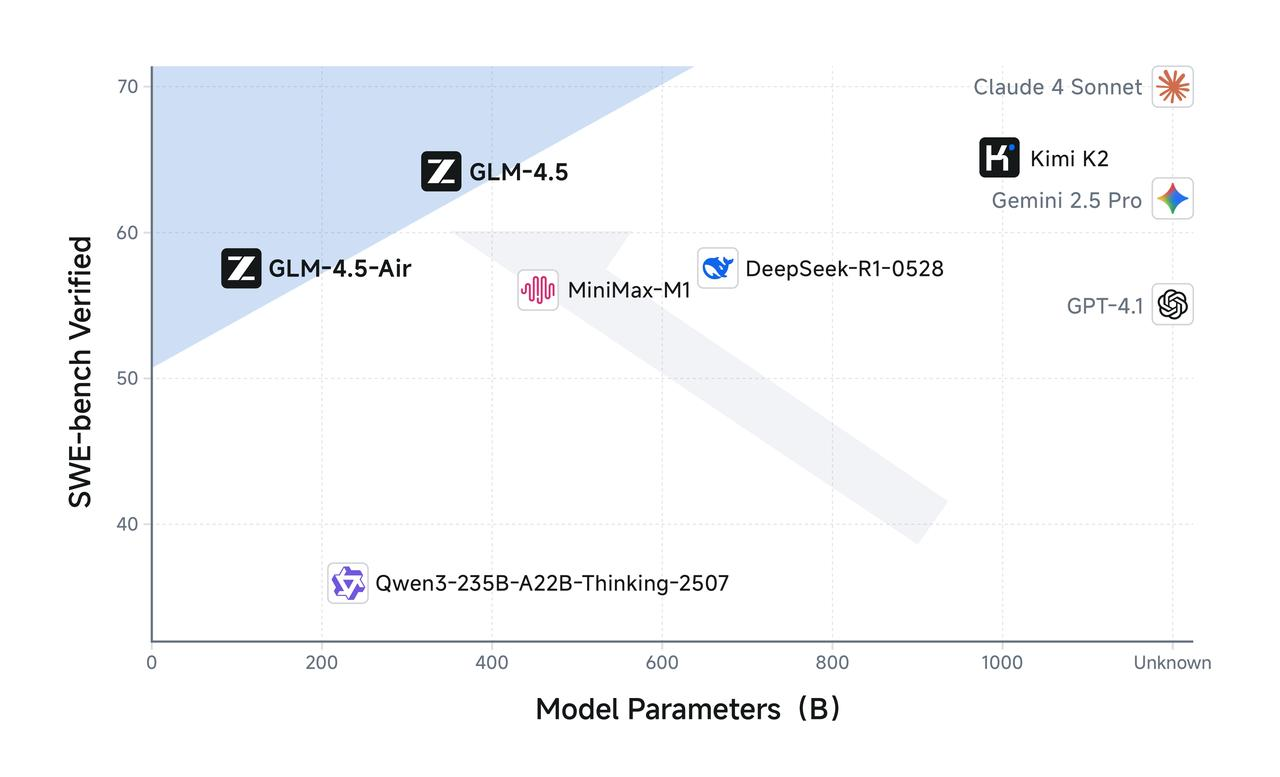

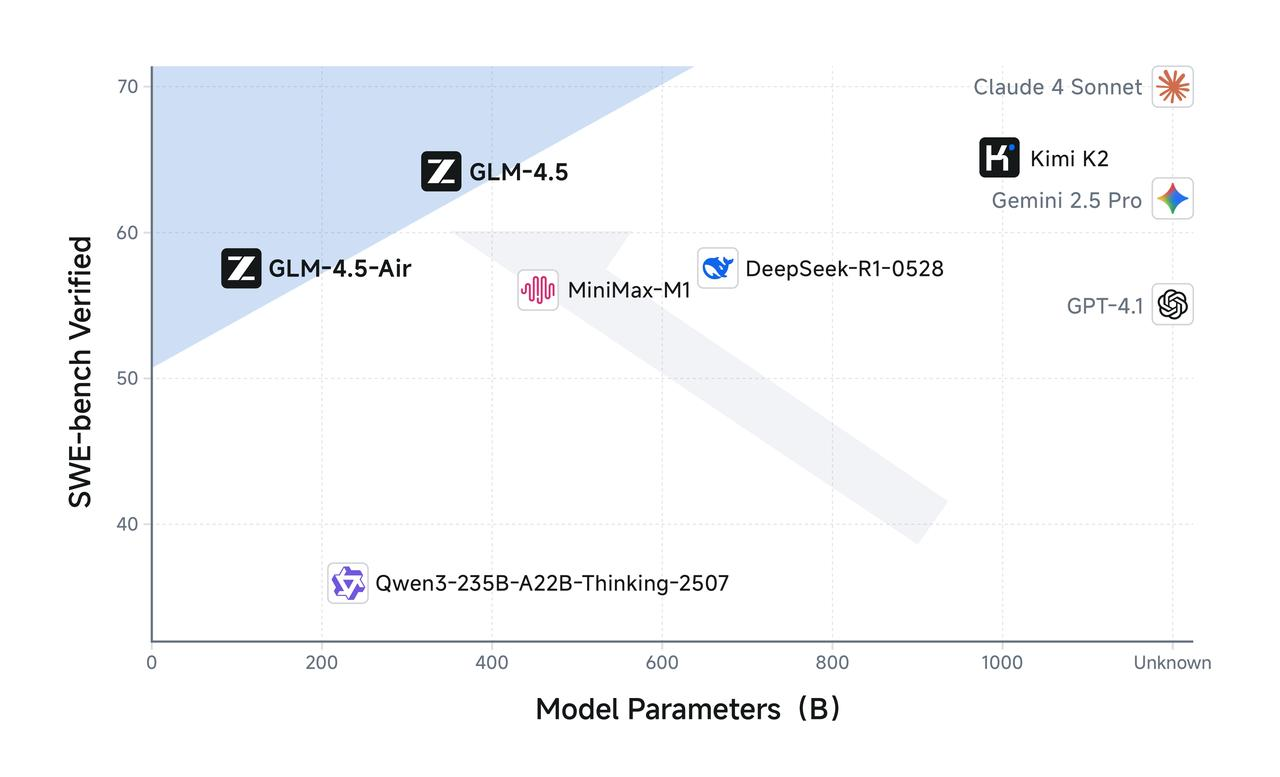

GLM-4.5 scores 63.2 overall across 12 industry-standard benchmarks covering agentic, reasoning, and coding capabilities, ranking third among all proprietary and open-source models evaluated. These results demonstrate the model's versatility across critical application domains.

The individual benchmarks show where those strengths lie: GLM-4.5 reaches 70.1% on TAU-Bench (agentic tool use), 91.0% on AIME 24 (competition mathematics), and 64.2% on SWE-bench Verified (real-world software engineering), setting a high bar for open-source models in all three areas.

Because the evaluation spans agentic, reasoning, and coding tasks, it gives a broader picture than any single benchmark. Even so, benchmark scores are a starting point: developers should validate the model on their own workloads before making architectural decisions for production systems.

Comparative Analysis Against Industry Leaders

Against established competitors, GLM-4.5 holds its own: its performance closely approaches that of leading proprietary models, while its open weights offer transparency and customization that closed-source alternatives cannot provide.

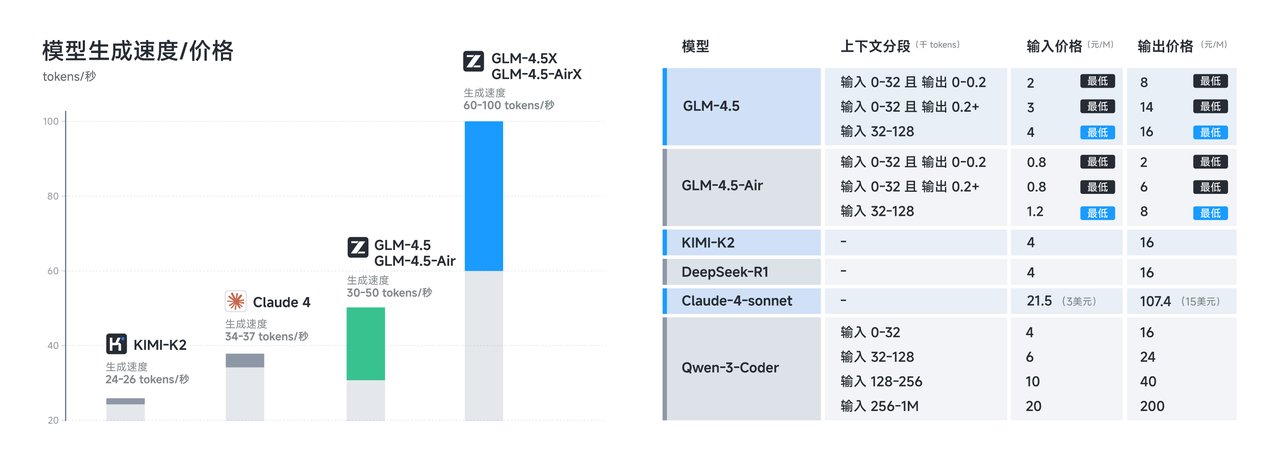

The cost picture is equally favorable for organizations that want high-performance AI without the recurring expense of proprietary API services. The model can reportedly run on just eight Nvidia H20 chips, half what DeepSeek requires, which significantly reduces infrastructure requirements and operational costs.
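A quick worked estimate shows why weight precision matters for that eight-GPU figure. MoE reduces compute per token, but all 355 billion parameters must still be resident in memory. The sketch assumes 96 GB of memory per H20 and counts weights only; the KV cache, activations, and framework overhead need the remaining headroom.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

TOTAL_PARAMS = 355e9   # every expert must be loaded, not just the 32B active ones
H20_MEMORY_GB = 96     # assumption: per-GPU memory of an Nvidia H20
cluster_gb = 8 * H20_MEMORY_GB

for label, nbytes in [("BF16", 2), ("FP8", 1)]:
    need = weight_memory_gb(TOTAL_PARAMS, nbytes)
    print(f"{label}: {need:.0f} GB of weights, "
          f"{cluster_gb - need:.0f} GB left for KV cache and activations")
```

Under these assumptions, BF16 weights (710 GB) would leave only 58 GB of an 8 x 96 GB node for everything else, while FP8 weights (355 GB) leave 413 GB, so a lower-precision checkpoint is what makes an eight-GPU deployment comfortable.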

This performance-to-cost ratio changes how organizations can approach AI implementation, putting capabilities previously reserved for well-funded enterprises within reach of smaller teams.

Advanced Coding Capabilities for Modern Development

GLM-4.5's coding proficiency extends across multiple programming languages and development paradigms. The model supports code generation in Python, JavaScript, Java, C++, Go, Rust, and many other languages, providing comprehensive coverage for diverse development environments.

The model also applies software engineering principles to generate code that fits its surrounding context and follows common best practices. Its debugging support further improves the workflow by identifying likely issues and suggesting optimizations.

Agentic coding is a significant step forward for AI-assisted development. Developers can use GLM-4.5 for complex refactoring, architecture design decisions, and automated testing, all of which require a deep understanding of how code components relate to and depend on one another.

Integration with Development Workflows

Modern development environments require seamless integration with existing toolchains and workflows. GLM-4.5 can be used through Z.ai's hosted API or self-hosted from its open weights, so it fits into popular development platforms and continuous integration systems with little extra work.

Moreover, the model's ability to understand project context and maintain consistency across multiple files and modules makes it particularly valuable for large-scale software projects. The contextual awareness extends to understanding coding conventions, architectural patterns, and domain-specific requirements.

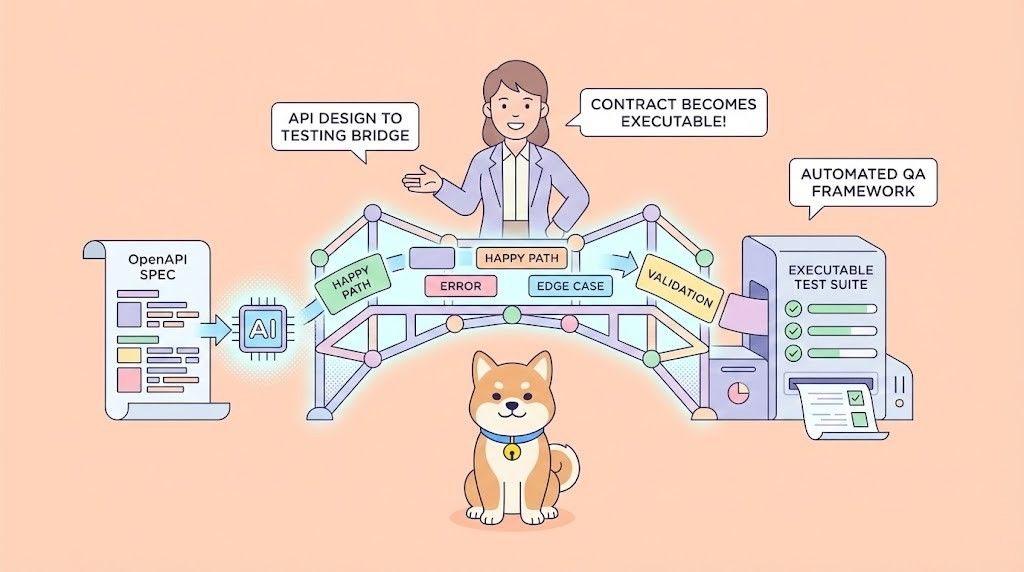

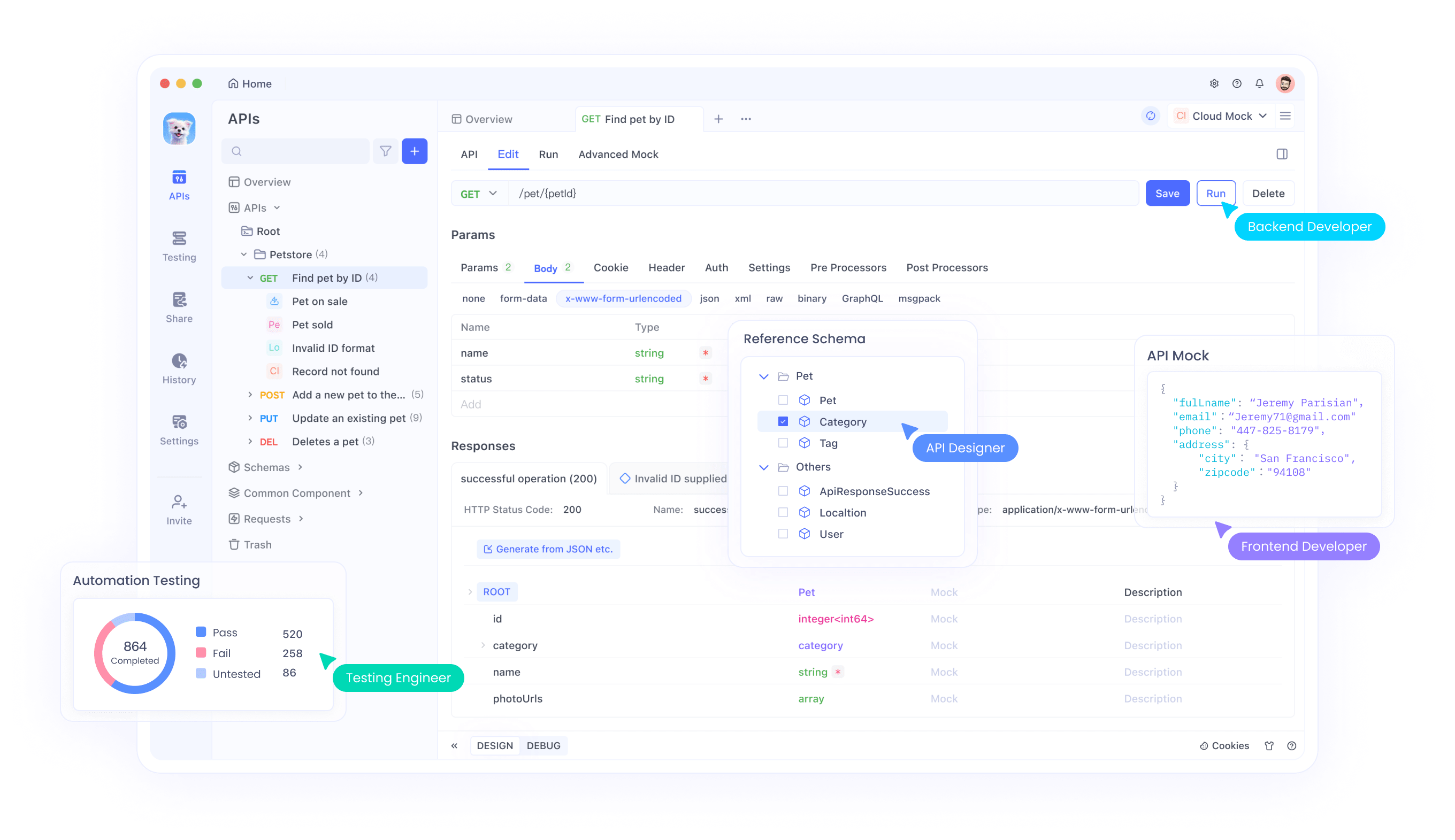

When combined with Apidog's comprehensive API testing framework, developers can systematically validate GLM-4.5's code generation capabilities across different scenarios and ensure consistent quality standards throughout the development lifecycle.
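A useful first gate in that validation is simply checking that generated code parses before running heavier functional tests. The sketch below, with hypothetical helper names, pulls fenced Python blocks out of a model response and checks their syntax with the standard library's `ast` module. It assumes responses use fences tagged `python` or untagged; other tags such as `py` would need a broader pattern.

```python
import ast
import re

FENCE = "`" * 3  # built programmatically so this snippet can live inside Markdown
BLOCK_RE = re.compile(FENCE + r"(?:python)?\n(.*?)" + FENCE, re.DOTALL)

def extract_python_blocks(response_text):
    """Return the bodies of fenced code blocks found in a model response."""
    return BLOCK_RE.findall(response_text)

def check_syntax(code):
    """Return None if the code parses as Python, else a short error description."""
    try:
        ast.parse(code)
        return None
    except SyntaxError as exc:
        return f"line {exc.lineno}: {exc.msg}"

response = f"Here is the fix:\n{FENCE}python\ndef add(a, b):\n    return a + b\n{FENCE}\n"
blocks = extract_python_blocks(response)
errors = [check_syntax(b) for b in blocks]
```

A syntax check only proves the code is well-formed; behavior still has to be confirmed with unit tests or API-level test scenarios.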

Agentic Capabilities That Transform User Interactions

GLM-4.5 places particular emphasis on agentic capabilities, including agentic coding, deep search, and general tool use, opening new possibilities for autonomous task execution and complex workflow automation.

The agentic architecture enables GLM-4.5 to decompose complex requests into manageable subtasks, execute them systematically, and synthesize results into coherent solutions. Furthermore, the model's ability to maintain context across extended interactions allows for sophisticated multi-step problem-solving scenarios.
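The decompose, execute, and synthesize cycle can be sketched as a small agent loop. This is a minimal illustration under a simplified, hypothetical interface: the `model` callable returns either a tool request or a final answer, and `scripted_model` stands in for GLM-4.5 so the loop runs offline. A real integration would parse the model's actual tool-call format instead.

```python
import json

def run_agent(model, tools, user_request, max_steps=5):
    """Ask the model, run any tool it requests, feed the result back, repeat.

    `model` takes the message list and returns either
    {"tool": name, "arguments": {...}} or {"answer": text}.
    """
    messages = [{"role": "user", "content": user_request}]
    for _ in range(max_steps):
        reply = model(messages)
        if "answer" in reply:
            return reply["answer"]
        result = tools[reply["tool"]](**reply["arguments"])
        # Keep the full history so later steps can build on earlier results.
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        messages.append({"role": "tool", "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")

def scripted_model(messages):
    """Hypothetical stand-in: look up two prices (subtasks), then sum them."""
    results = [json.loads(m["content"]) for m in messages if m["role"] == "tool"]
    if len(results) < 2:
        item = ["apples", "pears"][len(results)]
        return {"tool": "get_price", "arguments": {"item": item}}
    return {"answer": f"Total: {sum(results)}"}

PRICES = {"apples": 3, "pears": 4}  # made-up sample data
tools = {"get_price": lambda item: PRICES[item]}
answer = run_agent(scripted_model, tools, "How much for apples and pears?")
```

The `max_steps` cap is a deliberate design choice: it prevents a confused model from looping indefinitely on tool calls.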

These capabilities are particularly valuable for iterative refinement, exploratory analysis, and adaptive response generation. As a result, applications can provide more intelligent and responsive user experiences that adapt to changing requirements and evolving contexts.

Tool Integration and External System Connectivity

GLM-4.5's tool-using capabilities extend beyond simple API calls to encompass sophisticated integration patterns with external systems and services. The model can understand tool documentation, generate appropriate parameter configurations, and handle error scenarios gracefully.
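On the application side, tool use means describing each tool with a schema and safely executing whatever call the model emits. The sketch below assumes the widely used OpenAI-style `function` schema, with arguments delivered as a JSON string; confirm the exact format GLM-4.5's API expects. `get_weather` and its readings are hypothetical stand-ins for a real service.

```python
import json

# Tool description sent to the model in the request's "tools" list (assumed format).
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current temperature for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}

def get_weather(city):
    readings = {"Beijing": 31, "Oslo": 18}  # hypothetical sample data
    if city not in readings:
        raise KeyError(f"unknown city: {city}")
    return {"city": city, "temp_c": readings[city]}

REGISTRY = {"get_weather": get_weather}

def dispatch(tool_call):
    """Execute a model-issued tool call, returning errors as data, not exceptions."""
    name = tool_call["function"]["name"]
    if name not in REGISTRY:
        return {"error": f"unknown tool: {name}"}
    try:
        args = json.loads(tool_call["function"]["arguments"])
        return REGISTRY[name](**args)
    except (json.JSONDecodeError, TypeError, KeyError) as exc:
        # Sending the error back lets the model correct its arguments and retry.
        return {"error": str(exc)}

ok = dispatch({"function": {"name": "get_weather", "arguments": '{"city": "Oslo"}'}})
bad = dispatch({"function": {"name": "get_weather", "arguments": '{"town": "Oslo"}'}})
```

Returning errors as structured results, rather than crashing, is what lets the model handle error scenarios itself.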

Additionally, the intelligent tool selection mechanism enables GLM-4.5 to choose optimal tools for specific tasks based on context, requirements, and available resources. This capability significantly reduces the complexity of building sophisticated AI-powered applications that require multiple system integrations.

Combined with retry and fallback logic in the calling application, this error handling keeps production systems reliable when external dependencies experience intermittent failures or changing availability.
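A standard building block for that retry logic is exponential backoff with jitter, which spaces out retries so a struggling dependency is not hammered. The sketch below is a generic helper (the name and defaults are hypothetical) that can wrap either a GLM-4.5 API call or a tool call. The `sleep` parameter is injectable so the behavior can be tested without waiting.

```python
import random
import time

def call_with_retries(fn, max_attempts=5, base_delay=0.5, max_delay=8.0,
                      retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call fn(), retrying transient failures with exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Delay ceiling doubles per attempt (0.5s, 1s, 2s, ...) up to max_delay;
            # the actual wait is random in [0, ceiling] to avoid synchronized retries.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))

calls = {"n": 0}

def flaky():
    """Hypothetical dependency that fails twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"

slept = []
result = call_with_retries(flaky, sleep=slept.append)
```

Only transient error types are retried; validation errors and other permanent failures should propagate immediately.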

Technical Implementation Considerations

Successful GLM-4.5 deployment requires careful consideration of infrastructure requirements, scaling strategies, and performance optimization techniques. Because only a fraction of its parameters are active per token, the model is claimed to deliver about 8x better performance per unit of compute than dense models of similar capability, enabling efficient resource use across diverse deployment scenarios.
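A back-of-the-envelope calculation shows where the compute savings come from. It uses the common rule of thumb that a forward pass costs about 2 FLOPs per active parameter per token, and it compares GLM-4.5 against a hypothetical dense model with the same 355B total parameters, not against dense models of similar capability. It therefore illustrates the mechanism rather than reproducing the 8x figure, and it ignores attention cost, memory bandwidth, and routing overhead.

```python
def forward_flops_per_token(active_params):
    """Rule of thumb: ~2 FLOPs (one multiply, one add) per active parameter."""
    return 2 * active_params

moe = forward_flops_per_token(32e9)     # GLM-4.5: 32B active parameters per token
dense = forward_flops_per_token(355e9)  # hypothetical dense model of equal total size
print(f"MoE: {moe / 1e9:.0f} GFLOPs/token, dense: {dense / 1e9:.0f} GFLOPs/token, "
      f"ratio {dense / moe:.1f}x")
```

By this estimate, each token costs about 64 GFLOPs instead of 710, roughly an 11x reduction relative to a same-size dense network.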

Furthermore, the hybrid MoE architecture allows for flexible scaling strategies that can adapt to varying workload patterns and resource constraints. Organizations can implement progressive scaling approaches that align with usage growth and budget considerations.

The deployment flexibility extends to various hosting environments, including cloud platforms, on-premises infrastructure, and hybrid configurations that balance cost, performance, and data privacy requirements.

Memory and Compute Optimization Strategies

Effective GLM-4.5 deployment involves sophisticated memory management and compute optimization techniques that maximize performance while minimizing resource consumption. The model's architecture supports various optimization approaches including quantization, pruning, and dynamic batching strategies.
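Quantization is the most widely used of these techniques. The sketch below shows the basic idea with symmetric per-tensor int8 quantization in plain Python: each weight is stored as a small integer plus one shared scale factor. It is an illustration only; production deployments typically use FP8 or per-channel and block-wise schemes through their inference engine rather than hand-written code, and the sample weights are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    return [scale * qi for qi in q]

weights = [0.42, -1.27, 0.003, 0.9]  # hypothetical weight values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Rounding to the nearest step bounds the per-weight error by scale / 2.
max_err = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now takes one byte instead of two for BF16, halving weight memory at the cost of a small, bounded rounding error.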

Caching, from prefix (KV) caching in the inference engine to response caching in the application layer, can significantly improve response times for frequently repeated requests and reduce overall computational overhead. These optimizations are particularly valuable in high-throughput production environments.
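At the application layer, a response cache can be as simple as an LRU map keyed by a hash of the full request. The sketch below is a hypothetical helper, not part of any GLM-4.5 SDK. It is only appropriate for deterministic requests (for example, temperature 0), since caching sampled outputs would replay one random answer as if it were fixed. `fake_model` stands in for the real API call.

```python
import hashlib
import json
from collections import OrderedDict

class ResponseCache:
    """Small LRU cache of model responses keyed by a hash of the canonical request."""

    def __init__(self, capacity=1024):
        self.capacity = capacity
        self._store = OrderedDict()

    @staticmethod
    def key(request):
        # sort_keys makes logically identical requests hash identically.
        canonical = json.dumps(request, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_call(self, request, call_model):
        k = self.key(request)
        if k in self._store:
            self._store.move_to_end(k)  # mark as most recently used
            return self._store[k]
        response = call_model(request)
        self._store[k] = response
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
        return response

calls = []

def fake_model(request):
    calls.append(request)
    return f"answer to {request['messages'][-1]['content']}"

cache = ResponseCache(capacity=2)
req = {"model": "glm-4.5", "temperature": 0,
       "messages": [{"role": "user", "content": "ping"}]}
first = cache.get_or_call(req, fake_model)
second = cache.get_or_call(req, fake_model)  # served from cache, no model call
```

Prefix caching in the inference engine complements this: it reuses computation for shared prompt prefixes even when the full requests differ.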

When implementing GLM-4.5 with Apidog's testing framework, developers can systematically evaluate the impact of different optimization strategies on model performance and identify optimal configurations for specific use cases.

API Design and Integration Patterns

GLM-4.5's API follows familiar HTTP and JSON conventions and supports streaming responses, batch processing, and multi-turn conversations in which the client sends the conversation history with each request. The API documentation provides clear guidance for implementing various integration patterns and handling edge cases.
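Streaming responses usually arrive as server-sent events, one JSON chunk per `data:` line. The parser below assumes the OpenAI-style chunk layout (new text in `choices[0].delta.content`, a final `data: [DONE]`), and it assumes thinking-mode output appears in a separate `reasoning_content` field; both are assumptions to verify against the provider's documentation. In a real client, `lines` would be the decoded lines of the HTTP response body.

```python
import json

def collect_stream(lines):
    """Assemble answer text and reasoning text from server-sent-event lines."""
    answer, reasoning = [], []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and SSE comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        choices = json.loads(data).get("choices") or []
        if not choices:
            continue  # e.g. a trailing usage-only chunk
        delta = choices[0].get("delta", {})
        answer.append(delta.get("content") or "")
        reasoning.append(delta.get("reasoning_content") or "")
    return "".join(answer), "".join(reasoning)

sample = [
    'data: {"choices": [{"delta": {"reasoning_content": "User wants a greeting."}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hello"}}]}',
    'data: {"choices": [{"delta": {"content": ", world"}}]}',
    "data: [DONE]",
]
answer, reasoning = collect_stream(sample)
```

Keeping reasoning and answer text separate lets an application show the final answer to users while logging or hiding the reasoning trace.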

Moreover, the API's flexibility accommodates different application architectures and usage patterns without significant changes to existing systems. Its request format closely follows the widely adopted chat-completions style, which eases migration for applications currently using other language models.

The robust authentication and authorization mechanisms provide enterprise-grade security features that meet compliance requirements for sensitive applications and regulated industries.

Rate Limiting and Performance Optimization

Production API implementations require sophisticated rate limiting and performance optimization strategies to ensure reliable service delivery and optimal resource utilization. GLM-4.5's API includes configurable rate limiting mechanisms that can adapt to different usage patterns and subscription tiers.
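Provider limits are enforced server-side, but a client-side throttle keeps an application under its tier's limit and avoids bursts of HTTP 429 (Too Many Requests) errors. A token bucket is a common choice because it allows short bursts while enforcing an average rate. The class below is a minimal single-threaded sketch; the `rate` and `capacity` values are hypothetical, and the injectable clock exists so the behavior can be tested deterministically.

```python
import time

class TokenBucket:
    """Allows bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def try_acquire(self, cost=1.0):
        now = self.clock()
        # Refill based on elapsed time, never exceeding the bucket's capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

fake_now = [0.0]
bucket = TokenBucket(rate=2, capacity=3, clock=lambda: fake_now[0])
burst = [bucket.try_acquire() for _ in range(4)]  # three allowed, the fourth rejected
fake_now[0] = 0.5                                 # half a second refills one token
after_wait = bucket.try_acquire()
```

Setting `cost` to an estimated token count instead of 1 turns the same structure into a tokens-per-minute limiter.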

Furthermore, the intelligent load balancing and request queuing systems help maintain consistent response times even during peak usage periods. These features prove essential for applications with unpredictable traffic patterns or seasonal usage variations.

Fine-Tuning and Customization Opportunities

GLM-4.5 supports multiple fine-tuning approaches: LoRA (Low-Rank Adaptation) for efficient training, full parameter fine-tuning for maximum customization, and RLHF (Reinforcement Learning from Human Feedback) for alignment. These options enable organizations to tailor the model's behavior to specific domains and use cases.
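LoRA's efficiency comes from freezing the original weight matrix W and learning a low-rank update, so the adapted layer computes y = Wx + (α/r)·B(Ax), where A has shape r × d_in and B has shape d_out × r. The sketch below implements that forward pass with plain lists and counts the trainable parameters. The 4096 × 4096 layer and rank 16 are hypothetical sizes for illustration, not GLM-4.5's actual dimensions.

```python
def lora_trainable_params(d_in, d_out, rank):
    """LoRA trains A (rank x d_in) and B (d_out x rank); W stays frozen."""
    return rank * (d_in + d_out)

def lora_forward(x, W, A, B, alpha, rank):
    """y = W x + (alpha / rank) * B (A x), with matrices given as lists of rows."""
    def matvec(M, v):
        return [sum(m * vi for m, vi in zip(row, v)) for row in M]
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / rank) * u for b, u in zip(base, update)]

full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=16)
print(f"full: {full:,}  LoRA r=16: {lora:,}  ({100 * lora / full:.2f}%)")

# Tiny example: identity W plus a rank-1 update.
y = lora_forward([1.0, 2.0], W=[[1, 0], [0, 1]], A=[[1, 1]], B=[[1], [0]],
                 alpha=2, rank=1)
```

For this layer, LoRA trains 131,072 parameters instead of 16,777,216, under 1% of the full matrix. In the original LoRA method, B is initialized to zero so training starts exactly from the base model's behavior.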

Additionally, the fine-tuning documentation and example scripts accelerate customization while encouraging best practices. Because parameter-efficient methods such as LoRA leave the base weights frozen, teams can target specific capability areas while limiting the impact on overall model performance.

The fine-tuning infrastructure supports various data formats and training methodologies, enabling organizations to leverage existing datasets and domain expertise effectively.
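Most fine-tuning pipelines start by converting existing data into a chat-style JSONL file, one training example per line. The helper below is a hypothetical sketch that turns question and answer pairs into the common `messages` layout. Training frameworks differ in the exact field names and dataset formats they expect, so check the framework's documentation before relying on this shape.

```python
import json

def to_chat_jsonl(pairs, system_prompt=None):
    """Convert (question, answer) pairs into JSONL using a "messages" chat layout."""
    lines = []
    for question, answer in pairs:
        messages = []
        if system_prompt:
            messages.append({"role": "system", "content": system_prompt})
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
        # ensure_ascii=False keeps non-English training text readable in the file.
        lines.append(json.dumps({"messages": messages}, ensure_ascii=False))
    return "\n".join(lines) + "\n"

jsonl = to_chat_jsonl(
    [("What does HTTP 429 mean?", "Too Many Requests: the client is being rate limited.")],
    system_prompt="You are a concise API support assistant.",
)
```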

Domain-Specific Adaptation Strategies

Successful GLM-4.5 customization requires domain-adaptation strategies that balance specialization against preserving general capabilities. Approaches such as parameter-efficient fine-tuning and mixing general-purpose data into domain training sets help the model absorb new knowledge while reducing the risk of catastrophic forgetting.

Furthermore, the sophisticated evaluation frameworks enable systematic assessment of fine-tuning effectiveness across different metrics and use cases. These tools prove essential for organizations seeking to optimize model performance for specific applications.

The collaborative fine-tuning environments facilitate team-based model development and enable knowledge sharing across different customization projects within organizations.

Security and Privacy Considerations

GLM-4.5's open-source nature enables comprehensive security auditing and customization to meet specific privacy requirements. Organizations can implement additional security layers, modify data handling procedures, and ensure compliance with relevant regulations and industry standards.

Moreover, the model's local deployment capabilities provide complete control over data processing and storage, eliminating concerns about third-party data access or retention policies. This control proves particularly valuable for organizations handling sensitive information or operating in regulated industries.

The transparent architecture enables security teams to understand model behavior, identify potential vulnerabilities, and implement appropriate mitigation strategies tailored to specific threat models and risk profiles.

Data Governance and Compliance

Implementing GLM-4.5 in enterprise environments requires careful consideration of data governance requirements and compliance obligations. The model's flexibility enables implementation of sophisticated data handling policies that align with organizational requirements and regulatory mandates.

Additionally, logging and audit capabilities in the deployment stack provide detailed visibility into model usage, data access patterns, and request histories. These records support compliance reporting and security monitoring requirements.

Conclusion: Embracing the Future of Open-Source AI

GLM-4.5 represents a major advancement in open-source artificial intelligence, combining strong performance with the flexibility and transparency of open weights. Its capabilities across reasoning, coding, and agentic tasks make it a strong foundation for next-generation intelligent applications.

Organizations leveraging GLM-4.5 with comprehensive API testing platforms like Apidog gain significant advantages in development velocity, deployment reliability, and ongoing maintenance efficiency. These tools enable systematic validation of model performance, streamlined integration processes, and robust monitoring capabilities that ensure successful production implementations.