Developers constantly seek tools that enhance productivity without inflating costs. GLM-4.5 emerges as a game-changer in this space, offering robust capabilities for coding tasks. When you pair it with Claude Code, you gain access to advanced AI assistance that rivals top-tier models.

Understanding GLM-4.5: The Foundation of Advanced AI Coding

Z.ai develops GLM-4.5 as a flagship large language model tailored for agent-oriented applications. Engineers at Z.ai employ a Mixture-of-Experts (MoE) architecture, which activates 32 billion parameters out of a total 355 billion during each forward pass. This design optimizes efficiency, allowing the model to handle complex tasks without excessive computational overhead. Additionally, GLM-4.5 undergoes pretraining on 15 trillion tokens, followed by fine-tuning on specialized datasets for code generation, reasoning, and agent behaviors.

The model supports a 128k token context window, enabling it to process extensive codebases or multi-step instructions in one go. Developers appreciate this feature because it reduces the need for repeated prompts. Furthermore, GLM-4.5 incorporates hybrid reasoning modes: Thinking Mode for intricate problems and Non-Thinking Mode for quick responses. You activate these via the thinking.type parameter in API calls, providing flexibility based on task demands.
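As a minimal sketch of how the two modes might be selected, the helper below adds a `thinking` object to a request body. The `thinking.type` parameter is the one named above; the value `"disabled"` and the helper name `with_thinking` are assumptions for illustration, so check Z.ai's API reference before relying on them.

```python
import copy


def with_thinking(body: dict, enabled: bool) -> dict:
    """Return a copy of a request body with the thinking mode set."""
    updated = copy.deepcopy(body)  # leave the caller's dict untouched
    # Assumed values: "enabled" for Thinking Mode, "disabled" for Non-Thinking Mode.
    updated["thinking"] = {"type": "enabled" if enabled else "disabled"}
    return updated


base = {
    "model": "glm-4.5",
    "messages": [{"role": "user", "content": "Explain this stack trace."}],
}
deep = with_thinking(base, enabled=True)    # for intricate problems
quick = with_thinking(base, enabled=False)  # for quick responses
```

Keeping the mode switch in one small helper makes it easy to flip per request without touching the rest of the payload.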

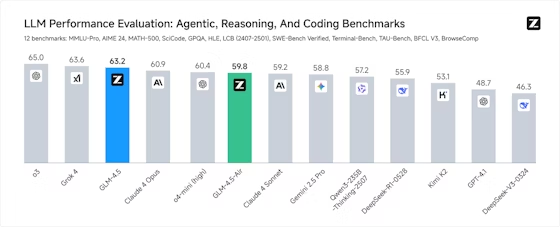

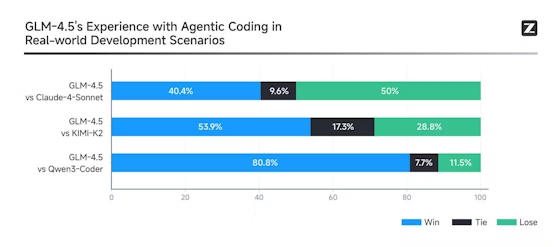

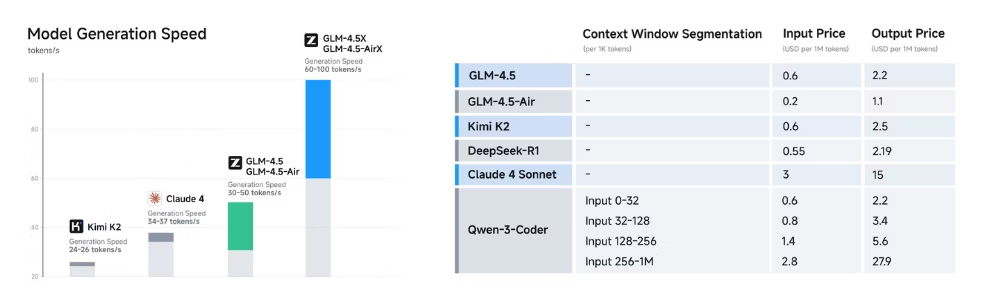

Benchmarks underscore GLM-4.5's prowess. It reportedly ranks second globally across 12 suites, including MMLU Pro for multifaceted reasoning and LiveCodeBench for real-time coding challenges. In practical terms, this means GLM-4.5 generates Python scripts or JavaScript functions with high accuracy, often outperforming models like Claude 3.5 Sonnet in function-calling tasks. Its real strength, however, lies in agentic work, where it invokes tools, browses the web, or builds software components autonomously.

GLM-4.5-Air, a lighter variant with 106 billion total parameters and 12 billion active, complements the main model for scenarios demanding speed over raw power. Both variants integrate seamlessly with development tools, making them ideal for coding environments. As a result, teams adopt GLM-4.5 to streamline workflows, from debugging legacy code to prototyping new applications.
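The parameter counts quoted for the two variants imply how small a share of each model is active per forward pass. The short calculation below works that out from the figures in the text; the function name is just illustrative.

```python
def active_fraction(active_billions: float, total_billions: float) -> float:
    """Share of parameters used per forward pass, as a fraction of the total."""
    return active_billions / total_billions


glm_45 = active_fraction(32, 355)       # GLM-4.5: 32B active of 355B
glm_45_air = active_fraction(12, 106)   # GLM-4.5-Air: 12B active of 106B

print(f"GLM-4.5:     {glm_45:.1%} of parameters active")
print(f"GLM-4.5-Air: {glm_45_air:.1%} of parameters active")
```

This works out to roughly 9% for GLM-4.5 and roughly 11% for GLM-4.5-Air, which is where the efficiency of the MoE design comes from.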

What Is Claude Code and Why Integrate It with GLM-4.5?

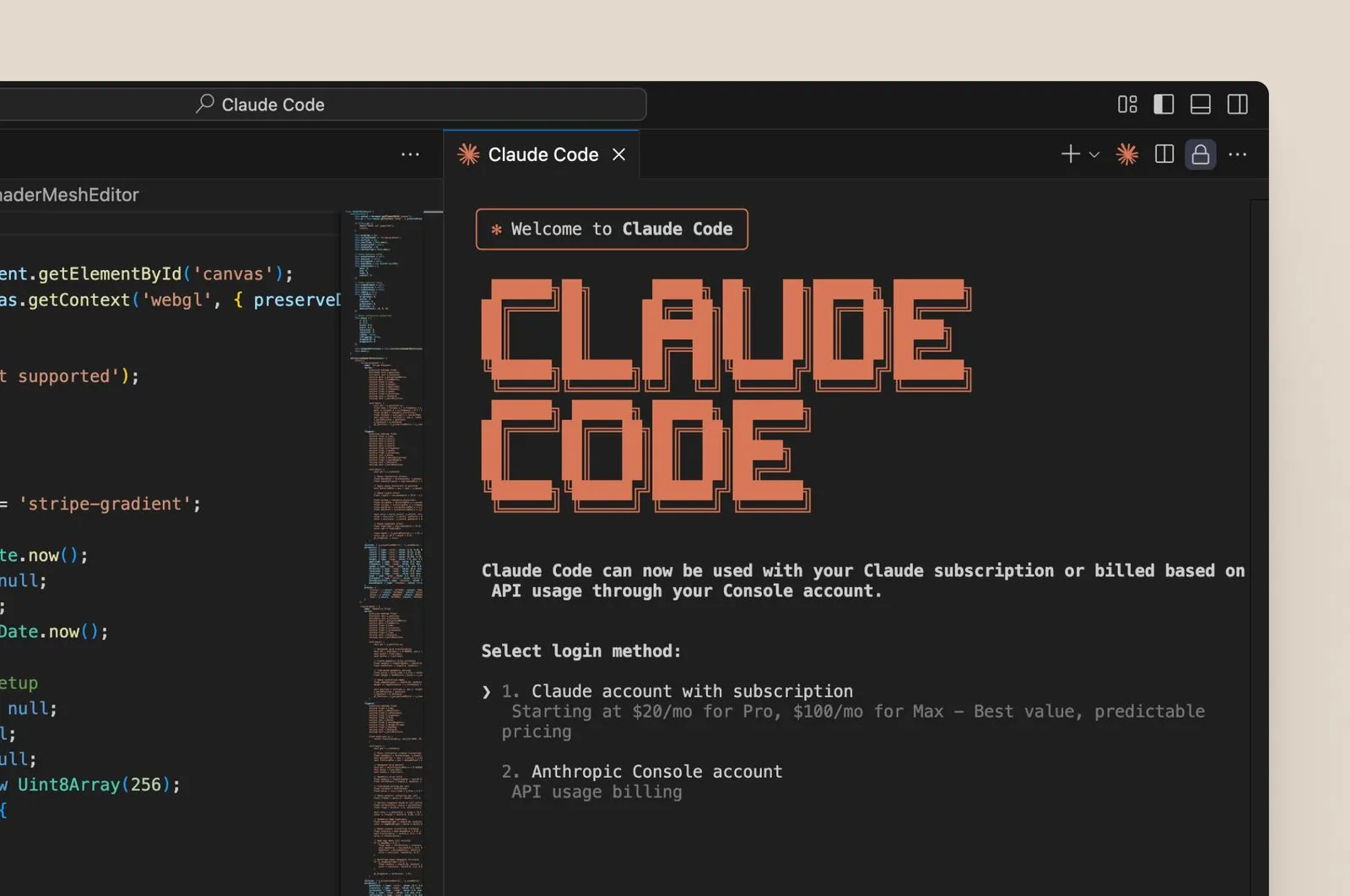

Claude Code serves as a terminal-based coding assistant that leverages AI to automate development tasks. Users install it as a CLI tool or integrate it into IDEs like VS Code. Originally designed around Anthropic's Claude models, Claude Code executes commands, generates code snippets, and manages repositories through natural language inputs. For instance, you might instruct it to "refactor this function for better performance," and it responds with optimized code.

The integration with GLM-4.5 occurs through Z.ai's Anthropic-compatible API endpoint. This compatibility allows you to swap out Claude models for GLM-4.5 without altering Claude Code's core functionality. Developers then route requests to Z.ai's servers and benefit from GLM-4.5's tool invocation success rate, reported at up to 90% in benchmarks.

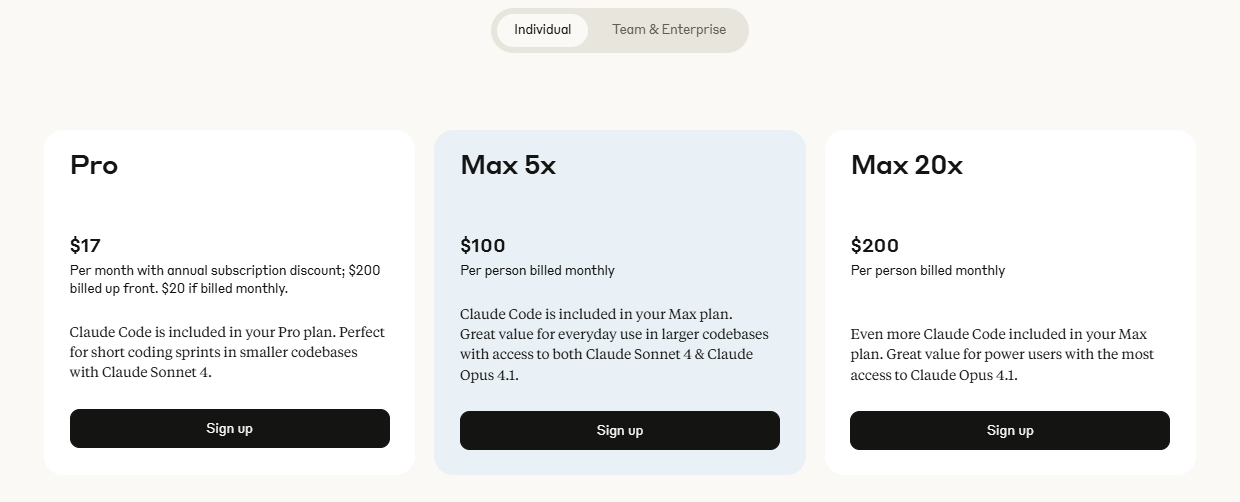

Why make this switch? GLM-4.5 offers cost advantages and enhanced performance in agentic coding. Traditional Claude plans can cost $100-$200 monthly for heavy usage, but Z.ai's GLM Coding Plans start at $3 for Lite and $15 for Pro, providing 3x the usage of comparable Claude tiers. This affordability attracts indie developers and startups. Moreover, GLM-4.5 excels in domains like front-end development and bug fixing, completing tasks in minutes that might take hours manually.

Benefits of Using GLM-4.5 with Claude Code

Combining GLM-4.5 and Claude Code yields tangible advantages. First, you achieve faster iteration cycles. GLM-4.5's generation speed exceeds 100 tokens per second, enabling real-time code suggestions in Claude Code. This rapidity proves crucial during debugging sessions, where quick fixes prevent workflow interruptions.

Second, the integration enhances accuracy. GLM-4.5's reinforcement learning fine-tuning ensures reliable outputs, reducing hallucinations in code generation. For example, it adheres to best practices in languages like Java or C++, incorporating error handling and optimization automatically. Therefore, developers spend less time revising AI-generated code.

Third, cost efficiency stands out. The GLM Coding Pro plan at $15/month unlocks intensive tasks with 3x the capacity of Claude's Max plan. Lite at $3 suits lightweight needs, making advanced AI accessible. Powered by GLM-4.5 and GLM-4.5-Air, these plans promise upcoming tool integrations, expanding capabilities further.

Predictability also improves. Z.ai's API supports structured outputs like JSON, ensuring consistent responses in Claude Code. Additionally, context caching minimizes redundant computations, lowering latency in long sessions.

Finally, the open-source nature of GLM-4.5 allows customization. Teams fine-tune the model for domain-specific tasks, integrating it deeper into Claude Code workflows. Overall, this pairing transforms coding from a solitary endeavor into an AI-augmented process.

Step-by-Step Guide: Setting Up GLM-4.5 in Claude Code

You start by preparing your environment. Install Claude Code via its official CLI or extension. Next, sign up at Z.ai's platform to obtain an API key. Subscribe to a GLM Coding Plan if your usage exceeds free tiers—Lite works for beginners.

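Configure the integration. Claude Code reads its endpoint and credentials from its settings (commonly ~/.claude/settings.json) or from environment variables such as ANTHROPIC_BASE_URL and ANTHROPIC_AUTH_TOKEN. Set the base URL to Z.ai's Anthropic-compatible endpoint, listed in Z.ai's documentation, and supply your API key as the auth token. Note that https://api.z.ai/api/paas/v4/chat/completions is the chat-completions endpoint for direct API calls, such as the Apidog tests later in this guide, and is not the Anthropic-compatible URL that Claude Code expects.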
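One way to apply this configuration, sketched under assumptions, is to launch Claude Code with its endpoint and key supplied as environment variables. The variable names `ANTHROPIC_BASE_URL` and `ANTHROPIC_AUTH_TOKEN`, the Anthropic-compatible URL shown, and the helper name `claude_code_env` are assumptions for illustration; verify them against the Claude Code and Z.ai documentation.

```python
import os


def claude_code_env(api_key: str, base_url: str) -> dict:
    """Build an environment for launching Claude Code against another endpoint."""
    if not api_key:
        raise ValueError("api_key must not be empty")
    env = dict(os.environ)  # start from a copy of the current environment
    # Assumed variable names; confirm them in the Claude Code documentation.
    env["ANTHROPIC_BASE_URL"] = base_url
    env["ANTHROPIC_AUTH_TOKEN"] = api_key
    return env


# Placeholder key and an assumed endpoint URL, for illustration only.
env = claude_code_env("YOUR_ZAI_API_KEY", "https://api.z.ai/api/anthropic")
```

The resulting dictionary could then be passed to something like `subprocess.run(["claude"], env=env)`, assuming the CLI is installed under that name. Building the environment per launch keeps the key out of shell profiles.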

Test the setup. Launch Claude Code and issue a simple command: "Generate a Python function to sort a list." GLM-4.5 processes this through the compatible API, returning code. Verify output for correctness.

Enable advanced features. Set the thinking.type to "enabled" for complex tasks. This activates GLM-4.5's deep reasoning, ideal for architectural decisions. Use streaming by adding stream: true to requests, allowing progressive code display in Claude Code.
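To illustrate what progressive display involves when `stream: true` is set, here is a rough sketch that assembles text from a streamed response. It assumes the stream arrives as server-sent events with OpenAI-style chunks (`choices[0].delta.content`) and a `[DONE]` sentinel; that wire format and the function name are assumptions, not details confirmed by this guide.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from server-sent event lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and non-data fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # assumed end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


# Hand-written sample lines standing in for a real response stream.
sample = [
    'data: {"choices": [{"delta": {"content": "def add(a, b):"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "\\n    return a + b"}}]}',
    "data: [DONE]",
]
code = "".join(iter_stream_text(sample))
```

Because the function is a generator, a caller can print each fragment as it arrives instead of waiting for the full completion.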

Handle tools. GLM-4.5 supports function calls—define tools in your prompt, and the model invokes them. For web browsing, include a browser tool; Claude Code routes these seamlessly.
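For direct API calls, a tool definition and the code that runs a requested call might look like the sketch below. The OpenAI-style schema, the shape of the tool call, and the `count_lines` tool itself are hypothetical choices for illustration; inside Claude Code, the client defines and executes its own tools.

```python
import json


def count_lines(text: str) -> int:
    """Hypothetical tool: count the lines in a piece of text."""
    return len(text.splitlines())


# Assumed OpenAI-style function schema sent alongside the messages.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "count_lines",
        "description": "Count the number of lines in the given text.",
        "parameters": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
    },
}]

HANDLERS = {"count_lines": count_lines}


def run_tool_call(tool_call: dict):
    """Execute one tool call of the assumed shape {"function": {"name", "arguments"}}."""
    fn = tool_call["function"]
    handler = HANDLERS.get(fn["name"])
    if handler is None:
        raise KeyError(f"unknown tool: {fn['name']}")
    args = json.loads(fn["arguments"])  # arguments are assumed to arrive as a JSON string
    return handler(**args)


result = run_tool_call(
    {"function": {"name": "count_lines", "arguments": '{"text": "a\\nb\\nc"}'}}
)
```

Routing calls through a name-to-handler table means an unknown tool name fails loudly rather than being silently ignored.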

Troubleshoot issues. If authentication fails, regenerate your key. For rate limits, monitor usage in Z.ai's dashboard. Apidog aids here by simulating calls before live integration.
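When calling the API directly, rate limits are often handled by retrying with exponential backoff. The sketch below shows that pattern in isolation; the `RateLimited` exception is hypothetical and stands in for however your HTTP client reports a rate-limit response, and the delay values are arbitrary.

```python
import time


class RateLimited(Exception):
    """Hypothetical error raised by the caller's request function when rate limited."""


def call_with_backoff(request_fn, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call request_fn, retrying with exponential backoff when rate limited."""
    for attempt in range(max_attempts):
        try:
            return request_fn()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error
            sleep(base_delay * 2 ** attempt)  # wait 1x, 2x, 4x the base delay


# Demonstration with a fake request that fails twice, then succeeds.
attempts = {"count": 0}
delays = []


def fake_request():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RateLimited()
    return "ok"


# Record the delays instead of actually sleeping.
outcome = call_with_backoff(fake_request, sleep=delays.append)
```

Injecting the `sleep` function keeps the retry logic testable without real waiting.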

Testing GLM-4.5 API with Apidog: Ensuring Reliability

Apidog streamlines API testing, making it essential for GLM-4.5 integration. You create a new project in Apidog and import Z.ai's OpenAPI spec. Define endpoints like /chat/completions.

Construct requests. Set headers with your API key and content-type as JSON. In the body, specify the model as "glm-4.5" and add messages array for prompts.
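The same request can be sketched in code, which helps when comparing against what Apidog sends. The example below only builds the request and does not send it. The `Bearer` authorization scheme and the function name are assumptions; the URL is the chat-completions endpoint given in the setup steps.

```python
import json
import urllib.request

# Chat-completions endpoint as given in the setup steps; confirm it in Z.ai's docs.
CHAT_URL = "https://api.z.ai/api/paas/v4/chat/completions"


def build_chat_request(api_key: str, prompt: str, model: str = "glm-4.5") -> urllib.request.Request:
    """Build (but do not send) a chat-completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("YOUR_ZAI_API_KEY", "Generate a Python function to sort a list.")
```

Passing `req` to `urllib.request.urlopen` would send it; keeping construction separate makes the headers and body easy to inspect first.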

Run tests. Apidog executes calls, displaying responses with status codes. Assert expected outputs, such as JSON-structured code.
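The kind of assertion described here can be expressed as a small check. This sketch assumes an OpenAI-style response shape (`choices[0].message.content`) and a prompt that asked for JSON output; the function name and sample response are hypothetical.

```python
import json


def extract_json_content(status: int, response: dict) -> dict:
    """Check a chat response and return its message content parsed as JSON."""
    if status != 200:
        raise AssertionError(f"unexpected status code: {status}")
    choices = response.get("choices") or []
    if not choices:
        raise AssertionError("response has no choices")
    content = choices[0]["message"]["content"]
    return json.loads(content)  # raises ValueError if the content is not valid JSON


# Hand-written sample standing in for a real API response.
sample_response = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": '{"language": "python", "code": "sorted(xs)"}',
            }
        }
    ]
}
parsed = extract_json_content(200, sample_response)
```

A check like this fails early on a bad status or an empty response, before any downstream code tries to use the content.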

Automate scenarios. Build test suites for variations—test Thinking Mode versus Non-Thinking, or different temperatures (0.6 for balanced creativity).
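A test matrix like this can be generated rather than written by hand. The sketch below builds one request body per combination of thinking mode and temperature; the `"disabled"` value, the extra temperatures, and the function name are assumptions chosen for illustration.

```python
from itertools import product

THINKING_MODES = ["enabled", "disabled"]  # "disabled" is an assumed value
TEMPERATURES = [0.2, 0.6, 1.0]            # 0.6 is from the text; the others are arbitrary


def build_cases(prompt: str) -> list:
    """Return one named request body per (thinking mode, temperature) pair."""
    cases = []
    for mode, temperature in product(THINKING_MODES, TEMPERATURES):
        cases.append({
            "name": f"thinking={mode},temperature={temperature}",
            "body": {
                "model": "glm-4.5",
                "messages": [{"role": "user", "content": prompt}],
                "thinking": {"type": mode},
                "temperature": temperature,
            },
        })
    return cases


cases = build_cases("Write a function that reverses a string.")
```

Two modes and three temperatures give six cases, each with a descriptive name that can be used as the test title.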

Monitor performance. Apidog tracks latency and errors, helping optimize parameters before Claude Code deployment.

Integrate with CI/CD. Export Apidog scripts to pipelines, ensuring GLM-4.5 reliability post-updates.

Advanced Usage: Leveraging GLM-4.5's Agentic Features in Claude Code

GLM-4.5 shines in agentic tasks. You define agents in Claude Code prompts, and GLM-4.5 orchestrates them. For instance, create a refactoring agent: "Analyze this codebase and suggest improvements."

Use multi-tool invocation. GLM-4.5 handles chains—browse docs, generate code, test it—all within one session.

Fine-tune for specifics. Upload datasets to Z.ai for custom models, then route Claude Code to them.

Scale with caching. Store contexts in GLM-4.5's cache, speeding up iterative coding.

Combine variants. Switch to GLM-4.5-Air for quick tasks, reserving full GLM-4.5 for intensive ones.
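Switching between variants can be as simple as a routing rule. The heuristic below is purely illustrative: the thresholds, the function name, and the `glm-4.5-air` model identifier are assumptions, so check Z.ai's model list for the exact names.

```python
def pick_model(prompt: str, files_touched: int = 1, threshold_chars: int = 2000) -> str:
    """Pick a model id using a rough size-based heuristic."""
    # Treat long prompts or multi-file changes as intensive work.
    heavy = len(prompt) > threshold_chars or files_touched > 3
    return "glm-4.5" if heavy else "glm-4.5-air"  # "glm-4.5-air" is an assumed id


quick_choice = pick_model("Rename this variable.")
heavy_choice = pick_model("Refactor the payment module.", files_touched=8)
```

In practice the rule would be tuned to your own workload; the point is only that the choice can be made per request.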

Pricing and Plans: Making GLM-4.5 Affordable for Claude Code Users

Z.ai tailors plans for Claude Code. Lite at $3/month offers 3x Claude Pro usage for casual coding. Pro at $15/month triples Claude Max, suiting professionals.

Free tiers exist for testing, while paid plans raise the usage limits. For comparison, the standard API charges $0.2 per million input tokens, whereas the plans bundle usage at a flat monthly price.
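To put the quoted input price next to the plan prices, the rough calculation below finds how many input tokens each monthly fee would buy at the standard rate. It deliberately ignores output-token pricing, which is not quoted here, so treat the result as an upper bound on the break-even point rather than a full cost model.

```python
INPUT_PRICE_PER_MILLION = 0.2  # USD per million input tokens, as quoted above


def input_cost(tokens: int) -> float:
    """Pay-as-you-go cost in USD for a number of input tokens."""
    return tokens / 1_000_000 * INPUT_PRICE_PER_MILLION


def break_even_tokens(plan_price: float) -> float:
    """Input tokens per month at which API spend equals a flat plan price."""
    return plan_price / INPUT_PRICE_PER_MILLION * 1_000_000


lite_break_even = break_even_tokens(3)    # Lite plan at $3/month
pro_break_even = break_even_tokens(15)    # Pro plan at $15/month
```

On input tokens alone, the Lite price corresponds to about 15 million tokens a month and the Pro price to about 75 million.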

Common Challenges and Solutions

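Latency spikes? Shorten prompts and trim unused context. Errors in tool calls? Tighten the tool definitions, especially parameter names and types. Apidog helps surface both problems before they reach Claude Code.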

Conclusion

Integrating GLM-4.5 with Claude Code empowers developers. Follow these steps, leverage Apidog, and transform your coding practice. The combination delivers efficiency, accuracy, and affordability—start today.