The release of advanced AI models like Google's Gemini 2.5 Pro generates significant excitement within the developer community. Its enhanced reasoning, coding capabilities, and multimodal understanding promise to revolutionize how we build applications. However, accessing cutting-edge models often comes with questions about cost and availability. Many developers wonder, "How can I use Gemini 2.5 Pro for free?" While direct, unlimited API access typically involves costs, there are legitimate ways to interact with this powerful model without any cost, primarily through its web interface, and even programmatically via community projects.

The Official Way to Use Gemini 2.5 Pro for Free: The Web Interface

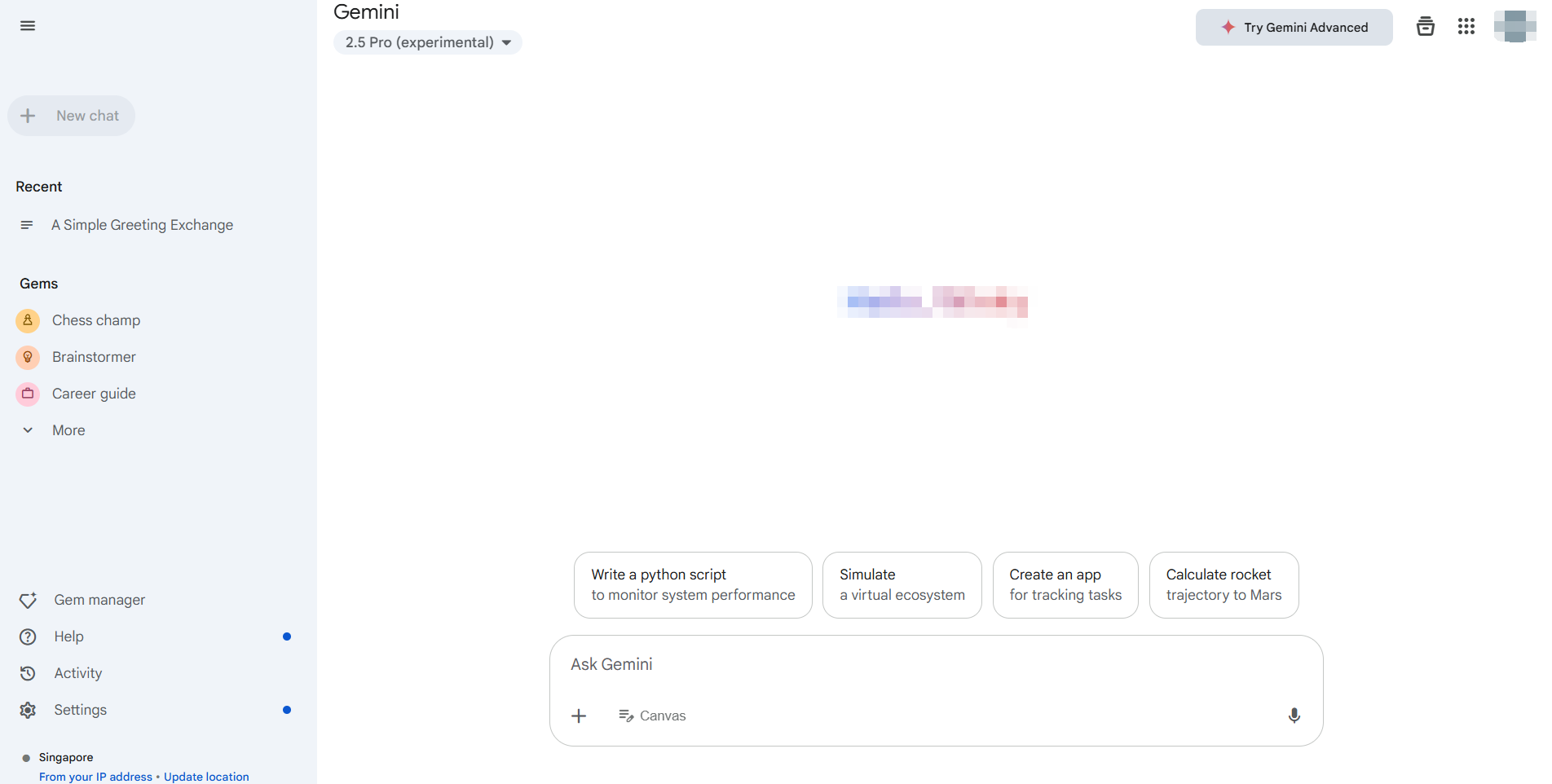

The most straightforward and officially supported method to use Gemini 2.5 Pro without any cost is through its dedicated web application, typically accessible at https://gemini.google.com/. Google provides access to its latest models, including Gemini 2.5 Pro (availability might vary by region and account status), through this interface for general users.

Key Advantages of the Web Interface:

- Zero Cost: This is the standard free tier offering direct interaction.

- Full Feature Access: You can usually use the model's core capabilities, including text generation, brainstorming, and coding assistance. Depending on availability, this may also include image generation (for example with Imagen 3) and Google Workspace extensions (Gmail, Docs, Drive, etc.).

- User-Friendly UI: The web interface is designed for ease of use, requiring no setup beyond logging into your Google account.

How to Use It:

- Navigate to the Gemini web application.

- Log in with your Google Account.

- Ensure Gemini 2.5 Pro is selected if multiple models are available (often indicated in settings or near the prompt input).

- Start interacting! Type your prompts, upload files (if supported), and explore its capabilities.

For many users and developers needing to evaluate Gemini 2.5 Pro, test prompts, or perform non-programmatic tasks, the official web interface is the ideal, cost-free solution. It provides direct access to the model's power without requiring API keys or dealing with usage quotas associated with the paid API. This method perfectly addresses the need to use Gemini 2.5 Pro for free for exploration and manual interaction.

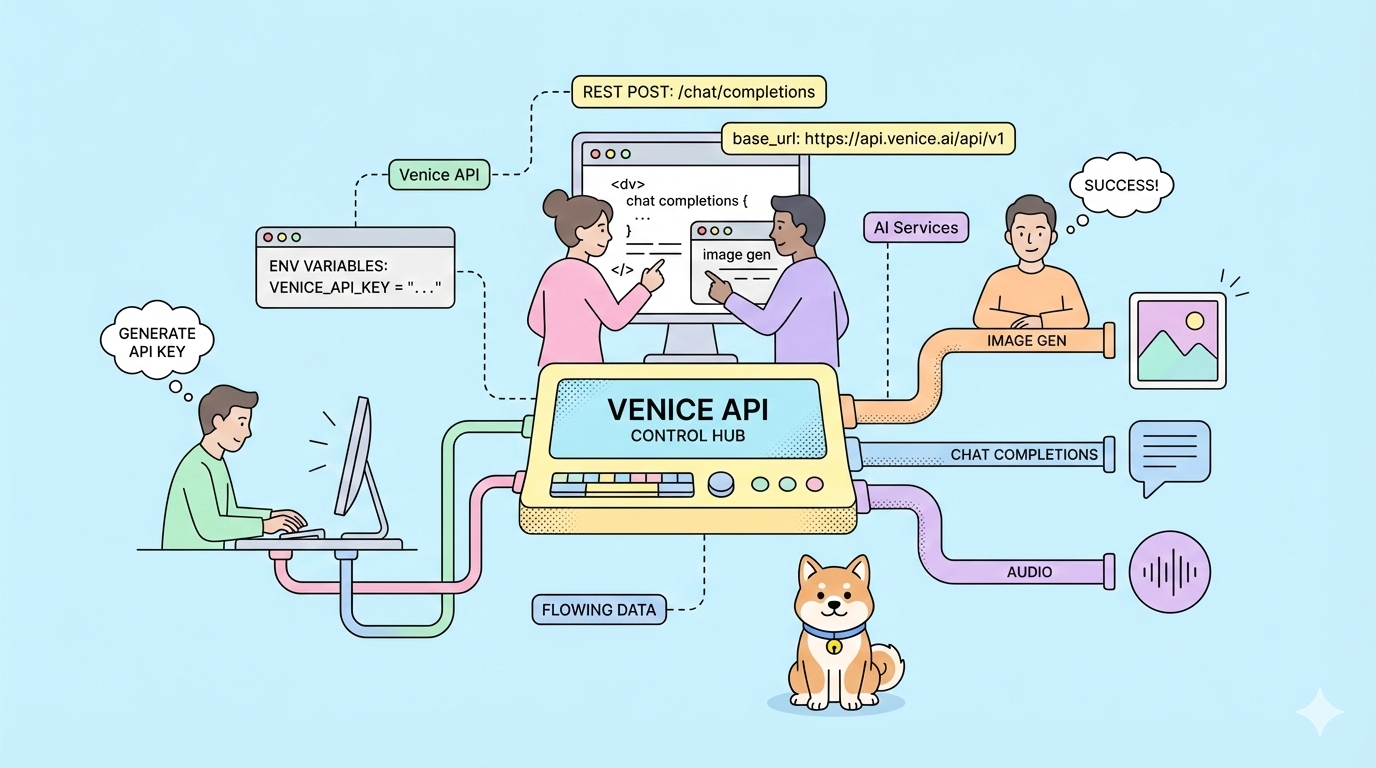

How to Use Gemini 2.5 Pro Programmatically Without Direct API Cost

While the web interface is great for manual use, developers often need programmatic access for integration, automation, or batch processing. The official Google Generative AI API for models like Gemini 2.5 Pro typically involves costs based on token usage. However, the developer community often creates tools to bridge this gap.

One such tool is the community-built gemini_webapi Python library, which allows developers to interact with the free Gemini web interface programmatically.

Crucial Disclaimer: It is essential to understand that this is NOT the official Google Generative AI API. These libraries work by reverse-engineering the web application's private API calls and using browser cookies for authentication.

- Unofficial: Not supported or endorsed by Google.

- Potentially Unstable: Relies on web interface elements that can change without notice, breaking the library.

- Security Considerations: Requires extracting authentication cookies from your browser, which carries inherent security risks if not handled carefully.

- Terms of Service: Usage might violate Google's terms of service for the web application.

Despite these caveats, such libraries offer a way for developers to experiment and use Gemini 2.5 Pro without any cost beyond the free tier limitations of the web interface itself, but in an automated fashion.
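Because an unofficial client can fail without warning, it may help to wrap calls defensively. The following is a minimal sketch of a generic retry helper with exponential backoff. It uses only the standard library, and the name call_with_retry and its parameters are hypothetical, not part of any Gemini library.

```python
import asyncio
import random


async def call_with_retry(make_call, attempts=3, base_delay=1.0):
    """Await make_call(), retrying on any exception with exponential backoff.

    make_call is a zero-argument function returning a fresh awaitable,
    because an awaitable cannot be awaited twice.
    """
    for attempt in range(1, attempts + 1):
        try:
            return await make_call()
        except Exception:
            if attempt == attempts:
                raise  # out of retries: surface the last error to the caller
            # Wait base_delay, then 2x, 4x, ... plus a small random jitter
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1)
            await asyncio.sleep(delay)
```

A call such as `await call_with_retry(lambda: client.generate_content(prompt))` would retry a failed request a few times before giving up. Retrying does not help if the web interface itself has changed, so a persistent failure usually means the library needs an update.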

Step-by-Step Guide:

Prerequisites: Ensure you have Python 3.10+ installed.

Installation: Install the library using pip:

    pip install -U gemini_webapi

    # Optional: Install browser-cookie3 for easier cookie fetching
    pip install -U browser-cookie3

Authentication (The Tricky Part):

- Log in to gemini.google.com in your web browser.

- Open Developer Tools (F12), go to the Network tab, and refresh the page.

- Find a request (any request usually works).

- In the request headers (usually under "Cookies"), find and copy the values for __Secure-1PSID and __Secure-1PSIDTS. Treat these like passwords!

- Alternative: If browser-cookie3 is installed, the library might be able to import cookies automatically if you are logged in via a supported browser, simplifying this step but still relying on local browser state.
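Since the cookie values should be treated like passwords, one option is to keep them out of source files and read them from environment variables. This is a minimal sketch using only the standard library. The variable names GEMINI_1PSID and GEMINI_1PSIDTS and the helper load_gemini_cookies are hypothetical names chosen for illustration.

```python
import os


def load_gemini_cookies(env=os.environ):
    """Return (psid, psidts) read from environment variables.

    GEMINI_1PSID and GEMINI_1PSIDTS are hypothetical variable names;
    use whatever names fit your own setup.
    """
    psid = env.get("GEMINI_1PSID")
    if not psid:
        raise RuntimeError("GEMINI_1PSID is not set")
    psidts = env.get("GEMINI_1PSIDTS")  # None if not provided
    return psid, psidts
```

The returned pair can then be passed to the client constructor in place of hard-coded strings, which keeps the secrets out of version control.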

Initialization & Basic Usage:

    import asyncio

    from gemini_webapi import GeminiClient
    from gemini_webapi.constants import Model  # Import Model constants

    # --- Authentication ---
    # Method 1: Manually paste cookies (handle securely!)
    Secure_1PSID = "YOUR__SECURE_1PSID_COOKIE_VALUE"
    Secure_1PSIDTS = "YOUR__SECURE_1PSIDTS_COOKIE_VALUE"  # May be optional


    async def run_gemini():
        # Method 2: Use browser-cookie3 (if installed and logged in)
        # client = GeminiClient(proxy=None)  # Attempts auto-cookie import

        # Initialize with manual cookies
        client = GeminiClient(Secure_1PSID, Secure_1PSIDTS, proxy=None)
        await client.init(timeout=30)  # Initialize connection

        # --- Select Model and Generate Content ---
        prompt = "Explain the difference between REST and GraphQL APIs."

        # Use the Gemini 2.5 Pro model identifier
        print("Sending prompt to Gemini 2.5 Pro...")
        response = await client.generate_content(prompt, model=Model.G_2_5_PRO)  # Use the specific model

        print("\nResponse:")
        print(response.text)

        # Close the client session (important for resource management)
        await client.close()


    if __name__ == "__main__":
        asyncio.run(run_gemini())

Check out more integration details here.

Exploring Further: Libraries like this often support features available in the web UI, such as multi-turn chat (client.start_chat()), file uploads, image generation requests, and using extensions (@Gmail, @Youtube), mirroring the web app's capabilities programmatically.

Remember, this approach automates interaction with the free web tier, not the paid API. It allows programmatic experimentation with Gemini 2.5 Pro at no direct API cost but comes with significant reliability and security warnings.

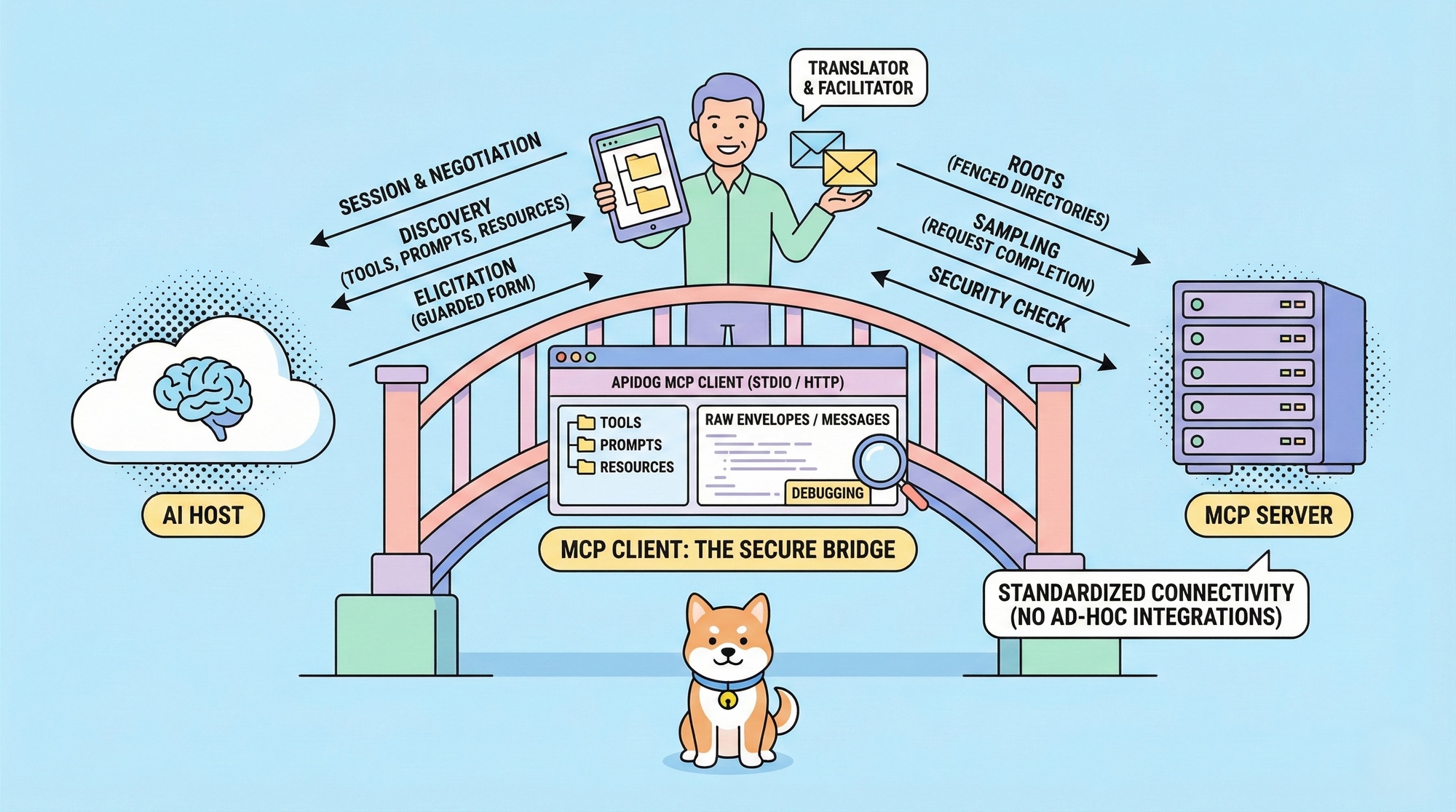

The Debugging Challenge with LLMs

Whether you interact with Gemini 2.5 Pro via the web, the official API, or unofficial libraries, understanding and debugging the responses is crucial, especially when dealing with complex or streaming outputs. Many LLMs, particularly in API form, utilize Server-Sent Events (SSE) to stream responses token by token or chunk by chunk. This provides a real-time feel but can be challenging to debug using standard HTTP clients.

Traditional API testing tools might simply display raw SSE data: chunks, making it difficult to piece together the complete message or understand the flow of information, particularly the model's "thought process" if provided. This is where a specialized tool becomes invaluable.
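To see why raw streams are hard to read, it helps to look at how they are structured. The sketch below parses a small SSE stream by hand, assuming the standard wire format in which each event is a group of data: lines ended by a blank line. The function name parse_sse and the sample payloads are made up for illustration.

```python
def parse_sse(raw: str) -> list[str]:
    """Return the data payload of each event in a raw SSE stream."""
    events, data_lines = [], []
    for line in raw.splitlines():
        if line == "":
            # A blank line ends the current event
            if data_lines:
                events.append("\n".join(data_lines))
                data_lines = []
        elif line.startswith(":"):
            continue  # comment or keep-alive line
        elif line.startswith("data:"):
            value = line[len("data:"):]
            # A single leading space after the colon is not part of the value
            data_lines.append(value[1:] if value.startswith(" ") else value)
        # Other fields (event:, id:, retry:) are ignored in this sketch
    if data_lines:
        # Leniency of this sketch: keep a final event with no trailing blank line
        events.append("\n".join(data_lines))
    return events


raw_stream = 'data: {"text": "Hel"}\n\ndata: {"text": "lo"}\n\n'
print(parse_sse(raw_stream))
```

Even in this tiny example the answer arrives in two fragments, and a real response can span hundreds of them. That is the reassembly work a specialized tool takes over.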

Apidog is a comprehensive, all-in-one API development platform designed to handle the entire API lifecycle, from design and documentation to debugging, automated testing, and mocking. While powerful across the board, Apidog particularly excels in handling modern API protocols, making it an exceptional API testing tool for developers working with LLMs. Its SSE debugging features are specifically built to address the challenges of streaming responses.

Master Real-Time LLM Responses

Working with LLMs like Gemini 2.5 Pro often involves Server-Sent Events (SSE) for streaming results. This technology allows the AI model to push data to your client in real-time, showing generated text or even reasoning steps as they happen. While great for user experience, debugging raw SSE streams in basic tools can be a nightmare of fragmented messages.

Apidog, as a leading API testing tool, transforms SSE debugging from a chore into a clear process. Here's how Apidog helps you tame LLM streams:

- Automatic SSE Detection: When you send a request to an LLM endpoint using Apidog, if the response header Content-Type is text/event-stream, Apidog automatically recognizes it as an SSE stream.

- Real-Time Timeline View: Instead of just dumping raw data: lines, Apidog presents the incoming messages chronologically in a dedicated "Timeline" view. This allows you to see the flow of information exactly as the server sends it.

- Intelligent Auto-Merging: This is a game-changer for LLM SSE debugging. Apidog has built-in intelligence to recognize common streaming formats used by major AI providers, including:

- OpenAI API Compatible Format (used by many)

- Gemini API Compatible Format

- Claude API Compatible Format

- Ollama API Compatible Format (for locally run models, often JSON Streaming/NDJSON)

If the stream matches these formats, Apidog automatically merges the individual message fragments (data: chunks) into the complete, coherent final response or intermediate steps. No more manually copying and pasting chunks! You see the full picture as the AI intended.

- Visualizing Thought Process: For models that stream their reasoning or "thoughts" alongside the main content (like certain configurations of DeepSeek), Apidog can often display this meta-information clearly within the timeline, providing invaluable insight into the model's process.
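To make the auto-merging idea from the list above concrete, here is a minimal sketch of merging fragments by hand for the OpenAI-compatible format. It assumes each payload is a JSON object carrying text under choices[].delta.content, that the stream ends with a [DONE] sentinel, and that only one choice is streamed. This is not Apidog's implementation, and merge_openai_chunks is a hypothetical helper name.

```python
import json


def merge_openai_chunks(payloads):
    """Concatenate delta.content across OpenAI-style streaming payloads."""
    parts = []
    for payload in payloads:
        if payload.strip() == "[DONE]":
            break  # sentinel that ends the stream
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            # Some chunks carry only metadata (e.g. the role) and no content
            content = choice.get("delta", {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)


payloads = [
    '{"choices": [{"delta": {"role": "assistant"}}]}',
    '{"choices": [{"delta": {"content": "Hello"}}]}',
    '{"choices": [{"delta": {"content": ", world"}}]}',
    "[DONE]",
]
print(merge_openai_chunks(payloads))  # prints: Hello, world
```

Other providers nest the text under different keys, so a hand-written merger needs one such function per format. That is the bookkeeping that built-in format recognition saves.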

Using Apidog for SSE debugging means you get a clear, real-time, and often automatically structured view of how your LLM endpoint is responding. This makes identifying issues, understanding the generation process, and ensuring the final output is correct significantly easier compared to generic HTTP clients or traditional API testing tools that lack specialized SSE handling.

Conclusion: Accessing Gemini 2.5 Pro for Free

You can explore Gemini 2.5 Pro for free through its official web interface. Developers looking for API-like access without fees can try community-built tools, though these come with risks like instability and potential terms-of-service issues.

For working with LLMs like Gemini, especially when dealing with streaming responses, traditional tools fall short. That's where Apidog shines. With features like automatic SSE detection, timeline views, and smart merging of fragmented data, Apidog makes debugging real-time responses much easier. Its custom parsing support further streamlines complex workflows, making it a must-have for modern API and LLM development.