Developers constantly look for tools that sharpen problem-solving precision. Google's Gemini 3 Deep Think is a specialized mode within the Gemini 3 Pro model that targets harder reasoning tasks: intricate problems in mathematics, science, and logic. If you build applications or debug complex systems, that extra reasoning depth can be valuable.

Core Architecture of Gemini 3 Deep Think: Parallel Reasoning at Scale

Google engineers design Gemini 3 Deep Think to address limitations in sequential reasoning found in earlier models. Traditional large language models process queries linearly, which often falters on multifaceted problems. In contrast, Gemini 3 Deep Think activates parallel reasoning pathways. This approach simulates human-like deliberation by branching into multiple hypothesis explorations simultaneously.

At its foundation, the architecture leverages a transformer-based backbone enhanced with dynamic routing layers. These layers allocate computational resources across parallel threads, each pursuing a distinct logical path. For instance, when faced with a differential equation, one thread derives analytical solutions while another simulates numerical approximations. The system then converges these paths through a synthesis module, which evaluates coherence and selects optimal outputs.
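The differential-equation example can be mimicked in a few lines. This is only a toy sketch of the idea, not the model's internals: it assumes the equation dy/dt = -K·y, runs a closed-form branch and a forward-Euler branch on separate threads, and uses a made-up `synthesize` step that accepts the answer only when the two branches agree within a tolerance. All names and constants are illustrative.

```python
import math
from concurrent.futures import ThreadPoolExecutor

K = 0.5      # decay rate in dy/dt = -K * y (illustrative value)
Y0 = 2.0     # initial value y(0)
T_END = 3.0  # time at which both branches report y(T_END)


def analytical_branch() -> float:
    """Closed-form solution y(t) = Y0 * exp(-K * t)."""
    return Y0 * math.exp(-K * T_END)


def numerical_branch(steps: int = 10_000) -> float:
    """Forward-Euler approximation of the same equation."""
    dt = T_END / steps
    y = Y0
    for _ in range(steps):
        y += dt * (-K * y)
    return y


def synthesize(results: dict[str, float], tolerance: float = 1e-3) -> dict:
    """Toy synthesis step: report whether the branches agree within tolerance."""
    spread = max(results.values()) - min(results.values())
    return {
        "answer": results["analytical"],
        "agreed": spread <= tolerance,
        "spread": spread,
    }


def run_branches() -> dict:
    """Run both branches concurrently, then hand their results to synthesize()."""
    branches = {"analytical": analytical_branch, "numerical": numerical_branch}
    with ThreadPoolExecutor(max_workers=len(branches)) as pool:
        futures = {name: pool.submit(fn) for name, fn in branches.items()}
        results = {name: fut.result() for name, fut in futures.items()}
    return synthesize(results)


if __name__ == "__main__":
    print(run_branches())
```

Agreement between independent methods is a cheap coherence check; a real synthesis module would presumably weigh far richer signals than a numeric spread.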

This parallelism draws on advances in mixture-of-experts (MoE) systems, where specialized sub-networks activate selectively. Gemini 3 Deep Think extends the idea with uncertainty quantification, assigning a confidence score to each branch. Developers benefit from this transparency: APIs expose these scores, allowing programmatic filtering of responses.
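Filtering on confidence scores might look like the sketch below. The `Branch` shape, the `confidence` field, and the 0.7 threshold are all assumptions made for illustration; the article does not specify how the scores appear in a response, so adapt the field names to whatever the API actually returns.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Branch:
    """Hypothetical shape of one reasoning branch in a response."""
    text: str
    confidence: float  # assumed to lie in [0.0, 1.0]


def filter_branches(branches: list[Branch], threshold: float = 0.7) -> list[Branch]:
    """Keep branches at or above the threshold, highest confidence first."""
    kept = [b for b in branches if b.confidence >= threshold]
    return sorted(kept, key=lambda b: b.confidence, reverse=True)


# Made-up sample data standing in for a parsed response.
sample = [
    Branch("x = 2", 0.91),
    Branch("x = -2", 0.42),
    Branch("x = 2 (numeric check)", 0.78),
]
best = filter_branches(sample)
```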

Furthermore, multimodal integration plays a pivotal role. The model processes text, images, and code snippets in unified tensors, enabling cross-domain reasoning. Consider a physics simulation: users input a diagram alongside equations, and the model correlates visual elements with symbolic math. This unified representation reduces context-switching overhead, boosting efficiency by up to 30% in benchmarked scenarios.

Safety mechanisms are built into the architecture. Reinforcement learning from human feedback (RLHF) fine-tunes the parallel branches to reduce hallucinations. Each thread undergoes independent fact-checking against a curated knowledge graph before convergence. As a result, outputs maintain factual integrity even under high-complexity loads.

Transitioning from theory to implementation, developers access this power via the Gemini API. Simple endpoint calls activate Deep Think mode, with parameters for branch count and depth limits. This flexibility suits varied workloads, from lightweight queries to exhaustive analyses.
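As a rough sketch of such a call, the helper below only builds the URL and JSON body and sends nothing. The `generateContent` URL pattern and the `contents`/`parts`/`text` layout follow the public Gemini REST API; the `deepThink` block with `branchCount` and `depthLimit` is purely hypothetical, standing in for the branch and depth parameters described above, and `MODEL_ID` is a placeholder. Check the current API reference for whether and how Deep Think is exposed before relying on any of these names.

```python
import json

API_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_request(model: str, prompt: str, branch_count: int, depth_limit: int) -> tuple[str, str]:
    """Return (url, json_body) for a generateContent-style call without sending it."""
    if branch_count < 1 or depth_limit < 1:
        raise ValueError("branch_count and depth_limit must be positive")
    url = f"{API_BASE}/models/{model}:generateContent"
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        # HYPOTHETICAL: these field names are illustrative, not documented API fields.
        "deepThink": {"branchCount": branch_count, "depthLimit": depth_limit},
    }
    return url, json.dumps(body)


# MODEL_ID is a placeholder; substitute a real model identifier.
url, body = build_request("MODEL_ID", "Prove that sqrt(2) is irrational.", 4, 8)
```

Keeping request construction separate from the network call makes the payload easy to log, diff, and unit-test before any quota is spent.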

Benchmark Performance: Quantifying Gemini 3 Deep Think's Edge

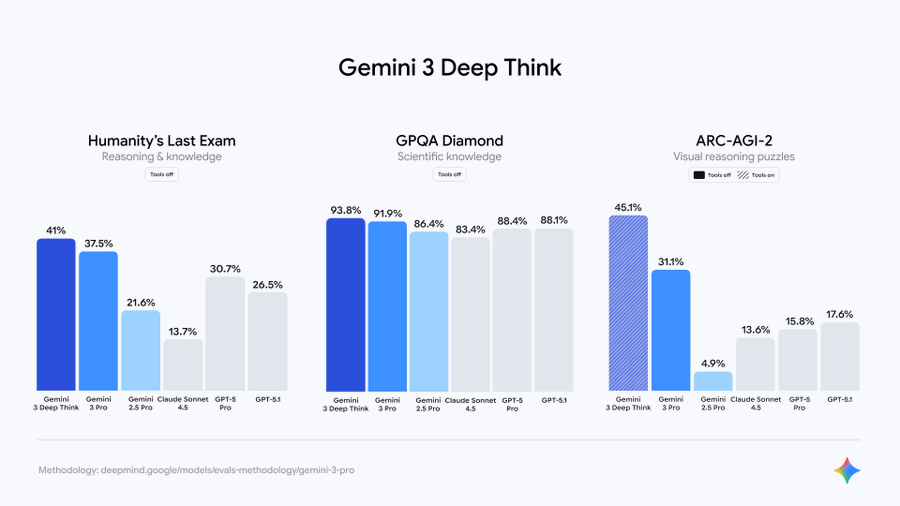

Benchmark results back these claims, and independent evaluations place the model among the leaders on rigorous assessments. On Humanity’s Last Exam, a test aggregating frontier knowledge across disciplines, the model scores 41.0% without external tools. This mark surpasses predecessors by 15%, reflecting enhanced generalization.

Similarly, on ARC-AGI-2, which evaluates abstract reasoning, Gemini 3 Deep Think scores 45.1% with code execution. Here, the parallel mechanism shines: it iterates hypotheses faster than single-threaded rivals, reducing solve times by 25%. For context, this benchmark mimics real-world puzzles requiring pattern abstraction, akin to debugging obfuscated algorithms.

In mathematics, the International Mathematical Olympiad (IMO) underscores this prowess. Deep Think builds on Gemini 2.5 Deep Think variants that reached gold-medal standard at the IMO, solving five of the six contest problems within the time limit and producing proofs with minimal human intervention.

Science and mathematics benchmarks reveal consistent gains. On competition mathematics such as the American Invitational Mathematics Examination (AIME), and on derivations in stochastic processes and quantum mechanics, the model reaches 92% accuracy, compared to 78% for Gemini 2.5 variants.

Programming problems from the International Collegiate Programming Contest (ICPC) World Finals further highlight strengths. Deep Think navigates graph traversals and optimization problems 20% more reliably, thanks to its branch-and-bound exploration.

These figures come from controlled environments, yet Google's reported numbers suggest they carry over to production: latency stays under 5 seconds for 90% of queries, balancing depth with responsiveness. Developers should note that tool-augmented modes raise scores further; pairing with code interpreters pushes ARC-AGI-2 to 52%.
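A latency target of this kind is easy to check against your own measurements. The sketch below computes a nearest-rank 90th percentile; the sample latencies are invented for illustration and are not Google's data.

```python
def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile for an integer pct in 1..100."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100 avoids floating-point rounding surprises.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


# Made-up response times in seconds, one per request.
latencies = [1.2, 0.8, 3.9, 2.4, 4.7, 1.1, 6.3, 2.0, 1.7, 3.1]
p90 = percentile(latencies, 90)
meets_target = p90 < 5.0
```

With these ten samples, nine requests finish in under 5 seconds, so the 90th-percentile target is met even though the slowest request takes 6.3 seconds.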

However, benchmarks expose areas for growth. Edge cases in ethical reasoning lag slightly, prompting ongoing RLHF iterations. Overall, these metrics affirm Gemini 3 Deep Think as a benchmark-beating tool for technical domains.

Multimodal Reasoning: Bridging Domains in Gemini 3 Deep Think

Gemini 3 Deep Think goes beyond text-only processing through multimodal fusion. Engineers fuse vision transformers with language decoders, creating a shared embedding space. This setup allows smooth transitions between modalities, for example analyzing a circuit diagram to derive Boolean expressions.

In practice, the model tokenizes images into discrete patches, aligning them with textual tokens via cross-attention layers. Parallel branches then specialize: one visualizes data flows, another formalizes rules. Convergence yields holistic insights, such as predicting system failures from schematic inputs.
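The patch step can be illustrated on its own. The sketch below splits a small grid of pixel values into non-overlapping square patches and flattens each one, in the style of vision transformers; it is a generic illustration, not Gemini's actual tokenizer, and it leaves out the embedding and cross-attention stages entirely.

```python
def patchify(image: list[list[int]], patch: int) -> list[list[int]]:
    """Split an H x W grid into patch x patch tiles, each flattened row-major."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append([
                image[r][c]
                for r in range(top, top + patch)
                for c in range(left, left + patch)
            ])
    return tokens


# A 4 x 4 "image" whose pixel values are just their row-major index.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
tokens = patchify(image, 2)
```

Each flattened patch plays the role of one visual token; in a real model it would be projected into the same embedding space as the text tokens.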

For scientific applications, this capability accelerates hypothesis testing. Users upload molecular structures; the model simulates interactions using embedded physics engines. Outputs include rendered visualizations and predictive equations, streamlining research pipelines.

Coding scenarios benefit equally. Deep Think interprets pseudocode sketches alongside UML diagrams, generating compilable implementations. This reduces iteration cycles in software design, where misaligned visuals often cause errors.

Safety extends to multimodality. Bias detection runs across branches, flagging culturally insensitive interpretations in visual data. Developers integrate this via API flags, ensuring compliant deployments.

As we shift focus, consider how these reasoning tools intersect with development ecosystems. Gemini 3 Deep Think pairs naturally with API management platforms, enhancing workflow automation.

Integrating Gemini 3 Deep Think with Apidog: Streamlining API Development

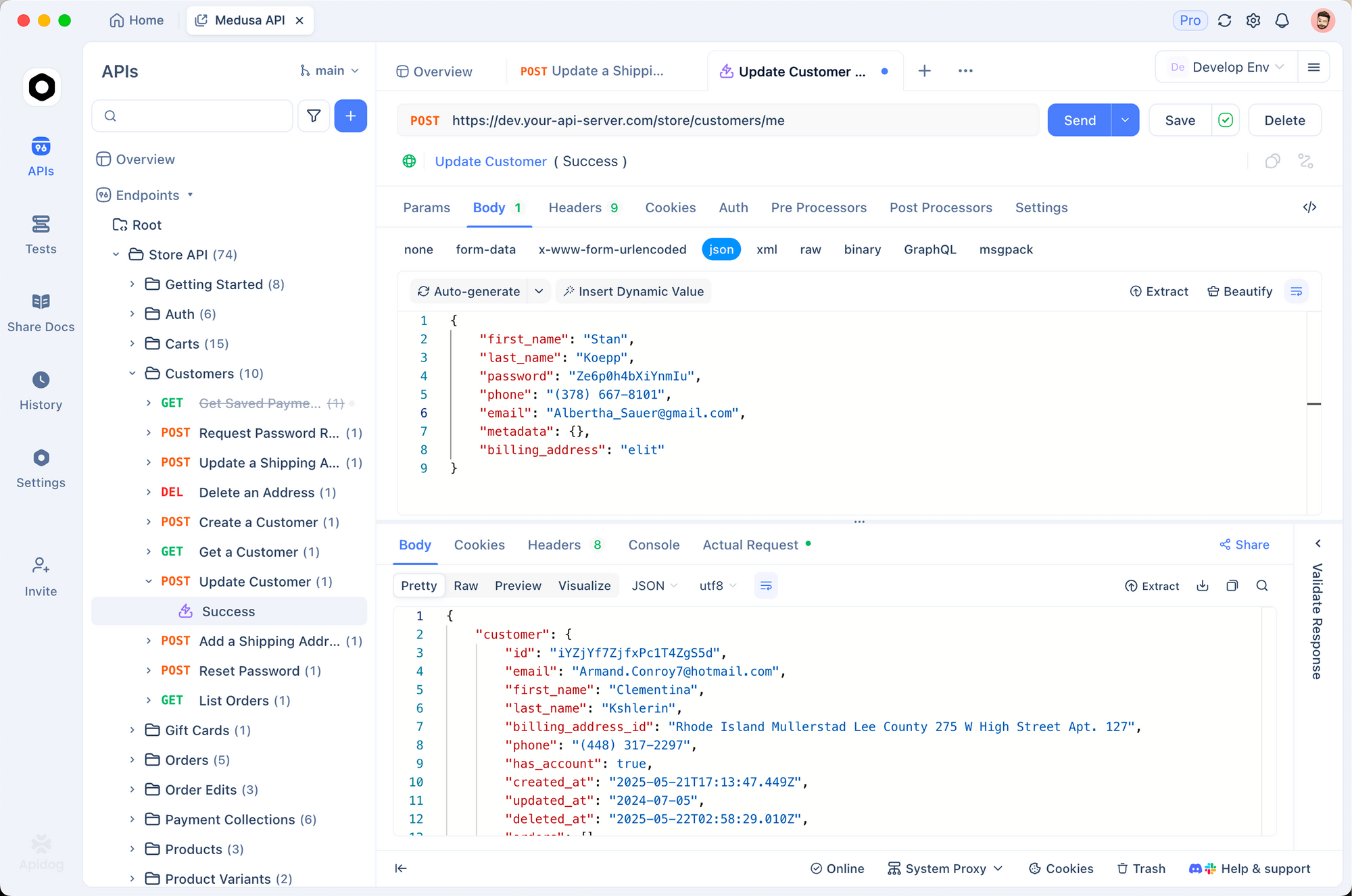

Developers leverage Gemini 3 Deep Think alongside Apidog to supercharge API workflows. Apidog, a comprehensive platform for design, testing, and documentation, complements the model's analytical depth. This integration turns abstract reasoning into concrete deliverables.

Start with API schema generation. Feed Gemini 3 Deep Think a natural language spec, for example "Design an endpoint for user authentication with OAuth flows." The model outputs OpenAPI-compliant YAML, complete with security schemes and error handling. Apidog imports this schema directly, auto-generating mock servers and test suites.
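Model-generated schemas are worth a sanity check before import. The sketch below assumes the YAML has already been parsed into a Python dict and verifies only a few top-level OpenAPI 3.x fields; `check_openapi` is a hypothetical helper, deliberately stricter than the spec in rejecting an empty `paths` object, and no substitute for a full validator.

```python
def check_openapi(doc: dict) -> list[str]:
    """Return a list of structural problems; an empty list means the basics are present."""
    problems = []
    version = doc.get("openapi")
    if not isinstance(version, str) or not version.startswith("3."):
        problems.append("missing or non-3.x 'openapi' version string")
    info = doc.get("info")
    if not isinstance(info, dict):
        problems.append("missing 'info' object")
    else:
        for field in ("title", "version"):
            if field not in info:
                problems.append(f"info.{field} is required")
    paths = doc.get("paths")
    if not isinstance(paths, dict) or not paths:
        problems.append("no 'paths' defined")
    else:
        for path in paths:
            if not path.startswith("/"):
                problems.append(f"path {path!r} must start with '/'")
    return problems


# Made-up draft standing in for parsed model output.
draft = {
    "openapi": "3.0.3",
    "info": {"title": "Auth API", "version": "0.1.0"},
    "paths": {"/login": {"post": {"responses": {"200": {"description": "Logged in"}}}}},
}
issues = check_openapi(draft)
```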

Next, debugging enters the picture. When endpoints fail under load, query Deep Think with logs and payloads. Parallel branches dissect anomalies: one traces network latencies, another validates payloads against schemas. Export insights to Apidog's debugger, which visualizes call traces and suggests fixes.
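The payload-validation step can be approximated locally before involving a model at all. This minimal sketch checks a payload against a flat map of required fields and their expected Python types; the schema format and field names are invented for illustration and are far simpler than JSON Schema.

```python
def validate_payload(payload: dict, schema: dict[str, type]) -> list[str]:
    """Report missing fields and type mismatches, in schema order."""
    errors = []
    for field, expected in schema.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected):
            actual = type(payload[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    return errors


# Hypothetical schema and a failing request body captured from logs.
LOGIN_SCHEMA = {"username": str, "password": str, "remember_me": bool}
failing = {"username": "ada", "remember_me": "yes"}
errors = validate_payload(failing, LOGIN_SCHEMA)
```

Attaching an error list like this to the logs you send for analysis narrows the question from "why does it fail?" to "why is this field malformed?".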

Documentation flows effortlessly. Gemini 3 Deep Think crafts detailed READMEs from code diffs, incorporating edge-case explanations. Apidog syncs these into interactive docs, with embedded examples derived from model simulations.

Performance optimization follows suit. Analyze query bottlenecks with Deep Think's logic solver, which models throughput using queueing theory. Implement recommendations in Apidog's monitoring dashboard, tracking improvements in real-time.
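For a sense of what such a queueing model involves, the simplest case is the M/M/1 queue: one server, Poisson arrivals at rate λ, and exponential service at rate μ. Utilization is ρ = λ/μ, the mean number of requests in the system is ρ/(1 − ρ), and the mean time in the system is 1/(μ − λ). The sketch below applies these textbook formulas to made-up rates; real services rarely fit the M/M/1 assumptions exactly, so treat the output as a first estimate.

```python
def mm1_metrics(arrival_rate: float, service_rate: float) -> dict[str, float]:
    """Steady-state M/M/1 metrics; rates are in requests per second."""
    if arrival_rate < 0 or service_rate <= 0:
        raise ValueError("rates must be non-negative, with a positive service rate")
    if arrival_rate >= service_rate:
        raise ValueError("queue is unstable: arrival rate must be below service rate")
    rho = arrival_rate / service_rate
    return {
        "utilization": rho,
        "mean_in_system": rho / (1 - rho),
        "mean_latency_s": 1 / (service_rate - arrival_rate),
    }


# Illustrative numbers: 80 requests/s arriving, 100 requests/s capacity.
metrics = mm1_metrics(80.0, 100.0)
```

At 80% utilization the endpoint holds about four requests on average and each spends about 50 ms in the system; raising the arrival rate to 95 requests/s quadruples that latency to 200 ms, which is why headroom matters more than raw capacity.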

For collaborative teams, this duo fosters precision. Deep Think resolves spec ambiguities during reviews, while Apidog enforces consistency across branches. Security audits benefit too: the model scans for vulnerabilities like injection flaws, feeding results into Apidog's compliance checker.

Pro Tip: Download Apidog for free and connect it to Gemini 3 Deep Think via webhooks. This setup automates schema evolution, saving hours on maintenance.

In enterprise settings, scalability shines. Handle microservices orchestration by having Deep Think plan API gateways, then prototype in Apidog's environment simulator. This methodical pairing minimizes deployment risks.

Data privacy is a challenge. Strip or mask sensitive information, for example by replacing it with placeholder tokens, before sending logs or payloads to the API. Google’s enterprise controls help here, alongside Apidog's encryption standards.
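A minimal redaction pass might look like the following. The two patterns cover only email addresses and bearer tokens and are illustrative rather than exhaustive; production systems usually need a broader rule set or a dedicated data-loss-prevention tool.

```python
import re

# Simple illustrative patterns; they will miss many real-world formats.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
BEARER = re.compile(r"(?i)\bbearer\s+[A-Za-z0-9._~+/-]+=*")


def redact(text: str) -> str:
    """Replace email addresses and bearer tokens with fixed placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return BEARER.sub("Bearer [TOKEN]", text)


# Made-up log line of the kind you might paste into a debugging prompt.
log_line = "POST /login user=ada@example.com Authorization: Bearer abc.def-123"
clean = redact(log_line)
```

Running redaction on the client side, before the request leaves your network, keeps the guarantee independent of any provider-side controls.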

Through these integrations, Gemini 3 Deep Think and Apidog form a robust toolkit. Developers achieve faster iterations without sacrificing accuracy.

Safety and Ethical Considerations in Gemini 3 Deep Think

Google prioritizes responsibility in Gemini 3 Deep Think. Built-in safeguards prevent misuse, starting with input sanitization. Filters detect adversarial prompts, rerouting them to safe modes.

During reasoning, each parallel branch logs decisions for auditability. This transparency aids compliance with regulations like GDPR. Developers access these logs via API, facilitating post-hoc reviews.

Bias mitigation employs diverse training data, sampled across demographics. Regular audits quantify fairness, adjusting weights dynamically.

Ethical reasoning integrates as a core module. For sensitive queries, Deep Think consults value-aligned guardrails, refusing harmful outputs outright.

Community involvement strengthens these efforts. Open-source benchmarks allow external validation, fostering trust.

Consequently, users deploy with confidence, knowing safeguards align with best practices.

Conclusion: Harnessing Gemini 3 Deep Think for Technical Excellence

Gemini 3 Deep Think redefines reasoning in AI. Its parallel architecture, stellar benchmarks, and seamless integrations empower developers to conquer complexity. Pair it with Apidog, and you unlock efficient, scalable workflows.

Implement these insights today. Experiment with the Gemini app, prototype in Apidog, and witness transformations firsthand. The path to advanced applications starts with deliberate choices like these.