Google DeepMind recently unveiled Gemini 2.5 Pro, an AI model that pushes the boundaries of reasoning, coding, and problem-solving. Google reports that this experimental release is state-of-the-art across numerous benchmarks, which makes it a powerful tool for developers and enterprises. Whether you’re building intelligent applications or solving complex problems, the Gemini 2.5 Pro API lets you integrate these reasoning and coding capabilities directly into your projects.

Why Use the Gemini 2.5 Pro API?

Gemini 2.5 Pro is a "thinking model," meaning it reasons through a problem step by step before producing its final answer, loosely mimicking human thought processes. This tends to produce more accurate, context-aware responses, especially for complex tasks in math, science, and coding.

According to Google’s published results, Gemini 2.5 Pro scores 18.8% on Humanity’s Last Exam (without tool use) and 63.8% on SWE-Bench Verified (with a custom agent setup). Its 1-million-token context window (with 2 million announced as coming soon) lets it process very large inputs in a single request, including long documents, images, and entire code repositories.

Now that we’ve established its capabilities, let’s explore how to integrate the Gemini 2.5 Pro API into your applications.

Prerequisites for Using the Gemini 2.5 Pro API

Before you can start using the Gemini 2.5 Pro API, you need to prepare your development environment. Follow these steps to ensure a smooth setup:

Obtain an API Key: First, visit the Google AI Studio API Key page to generate your API key. You’ll need a Google account to access this.

Once logged in, click “Get API key,” create a key, and store it securely. Treat it like a password and keep it out of source control.

Install Python: This guide calls the Gemini API through Google’s Python SDK. The API itself is a REST service that any language can reach. Recent releases of the google-generativeai library require Python 3.9 or higher. You can download Python from the official Python website if needed.

Set Up a Project Environment: Create a virtual environment to manage dependencies. Run the following commands in your terminal:

python -m venv gemini_env

source gemini_env/bin/activate # On Windows, use `gemini_env\Scripts\activate`

Install Required Libraries: Install the google-generativeai library, which provides the interface to interact with the Gemini API. Use this command:

pip install google-generativeai

Install Apidog: To test and manage your API requests, download and install Apidog. This tool will help you debug and optimize your API calls efficiently.
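Before moving on, you can confirm that the active interpreter meets the version requirement and that the SDK is importable. This is a minimal sketch. The 3.9 minimum is an assumption based on recent google-generativeai releases, so adjust `MIN_PYTHON` if the package metadata for your installed version says otherwise.

```python
import importlib.util
import sys

# Assumed minimum version; check the google-generativeai page on PyPI
# for the exact requirement of the release you installed.
MIN_PYTHON = (3, 9)


def check_environment() -> dict:
    """Report whether this interpreter looks ready for the Gemini Python SDK."""
    python_ok = sys.version_info[:2] >= MIN_PYTHON
    try:
        # find_spec imports the parent package "google" first and raises
        # ModuleNotFoundError if that package is missing entirely.
        sdk_installed = importlib.util.find_spec("google.generativeai") is not None
    except ModuleNotFoundError:
        sdk_installed = False
    return {"python_ok": python_ok, "sdk_installed": sdk_installed}


if __name__ == "__main__":
    status = check_environment()
    print(f"Python {sys.version.split()[0]} meets minimum: {status['python_ok']}")
    print(f"google-generativeai importable: {status['sdk_installed']}")
```

Run it inside the activated gemini_env so that it inspects the same interpreter that pip installed into.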

With these prerequisites in place, you’re ready to start coding. Let’s move on to configuring the API.

Configuring the Gemini 2.5 Pro API in Your Project

To use the Gemini 2.5 Pro API, you need to configure your project to authenticate with Google’s servers. Here’s how to do it:

Import the Library: Start by importing the google.generativeai library in your Python script. Add this line at the top:

import google.generativeai as genai

Set Up Your API Key: Configure the library with your API key. Replace "YOUR_API_KEY" with the key you obtained from Google AI Studio:

genai.configure(api_key="YOUR_API_KEY")
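Hard-coding the key works for a quick test, but it is easy to leak by accident. A common alternative is to read the key from an environment variable at startup. In this sketch, the variable name `GEMINI_API_KEY` and both helper names are illustrative assumptions. They are not part of the SDK.

```python
import os
from typing import Mapping, Optional

# Illustrative variable name (assumption); use whatever your deployment defines.
API_KEY_ENV_VAR = "GEMINI_API_KEY"


def get_api_key(environ: Optional[Mapping[str, str]] = None) -> str:
    """Return the API key from the environment, failing loudly if it is missing."""
    env = os.environ if environ is None else environ
    key = env.get(API_KEY_ENV_VAR, "").strip()
    if not key:
        raise RuntimeError(f"Set {API_KEY_ENV_VAR} to your Gemini API key.")
    return key


def configure_from_env() -> None:
    """Hypothetical helper: configure google-generativeai with the key from the environment."""
    # Imported here so get_api_key() can be used (and tested) without the SDK installed.
    import google.generativeai as genai

    genai.configure(api_key=get_api_key())
```

You then call `configure_from_env()` once at startup in place of the hard-coded `genai.configure(...)` line.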

Select the Model: Specify that you want to use the Gemini 2.5 Pro model. The model ID for the experimental version is gemini-2.5-pro-exp-03-25. You can set it like this:

model = genai.GenerativeModel("gemini-2.5-pro-exp-03-25")
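`GenerativeModel` also accepts a `generation_config` argument for sampling settings. The sketch below keeps the model ID and those settings in one place. This helps because experimental model IDs can be renamed or retired. The `temperature` and `max_output_tokens` values shown are placeholder assumptions, not recommendations.

```python
# Model ID used throughout this guide; keep it in one constant so it is easy to update.
MODEL_ID = "gemini-2.5-pro-exp-03-25"

# Placeholder sampling settings (assumed values; tune them for your use case).
GENERATION_CONFIG = {
    "temperature": 0.4,         # lower values give more deterministic output
    "max_output_tokens": 8192,  # upper bound on the number of generated tokens
}


def make_model():
    """Create the model with the shared settings (requires google-generativeai)."""
    import google.generativeai as genai

    return genai.GenerativeModel(MODEL_ID, generation_config=GENERATION_CONFIG)
```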

Now that your project is configured, let’s explore how to make your first API request.

Making Your First Request with the Gemini 2.5 Pro API

The Gemini 2.5 Pro API supports various types of requests, including text generation, multimodal inputs, and streaming responses. Let’s start with a simple text-based request to understand how the API works.

Step 1: Create a Text Prompt

Define a prompt that you want the model to respond to. For example, let’s ask the model to explain a technical concept:

prompt = "Explain how neural networks work in simple terms."

Step 2: Send the Request

Use the generate_content method to send the prompt to the API and get a response:

response = model.generate_content(prompt)

print(response.text)
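For long answers, you may prefer to print the reply as it arrives. Passing `stream=True` to `generate_content` makes it return the response in chunks, and the sketch below wraps that in a generator. The `stream_text` helper is a hypothetical name, and `model` is assumed to be the `GenerativeModel` created earlier.

```python
from typing import Iterator


def stream_text(model, prompt: str) -> Iterator[str]:
    """Yield the reply piece by piece instead of waiting for the full text.

    `model` is a genai.GenerativeModel, or anything exposing the same
    generate_content(prompt, stream=True) interface.
    """
    response = model.generate_content(prompt, stream=True)
    for chunk in response:
        # Each chunk carries the next slice of the generated text.
        yield chunk.text


# Usage (requires the SDK and a configured API key):
#   for piece in stream_text(model, "Explain how neural networks work in simple terms."):
#       print(piece, end="", flush=True)
```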

Step 3: Handle the Response

generate_content returns a response object, and its text attribute holds the generated reply. A sample output might look like this:

Neural networks are like a brain for computers. They’re made of layers of "neurons" that process data. First, you feed the network some input, like a picture of a cat. Each neuron in the first layer looks at a small part of the input and passes its findings to the next layer. As the data moves through the layers, the network learns patterns—like the shape of a cat’s ears or whiskers. By the final layer, the network decides, "This is a cat!" It learns by adjusting connections between neurons using math, based on examples you give it.
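Note that `response.text` is a convenience accessor. In google-generativeai, it raises a `ValueError` when the reply contains no text part, for example when safety filters block the prompt or the answer. A minimal defensive wrapper might look like this. `safe_text` is a hypothetical helper, not an SDK function.

```python
from typing import Optional


def safe_text(response) -> Optional[str]:
    """Return the reply text, or None if the response carries no text part."""
    try:
        return response.text
    except ValueError:
        # prompt_feedback explains why a prompt was blocked, when that is the cause.
        feedback = getattr(response, "prompt_feedback", None)
        print(f"No text in response; prompt feedback: {feedback}")
        return None
```

In your script, you would then write `text = safe_text(model.generate_content(prompt))` and check for `None` before using the result.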

This simple example shows how well Gemini 2.5 Pro can explain complex topics. Next, let’s look at how to test and debug your requests with Apidog.

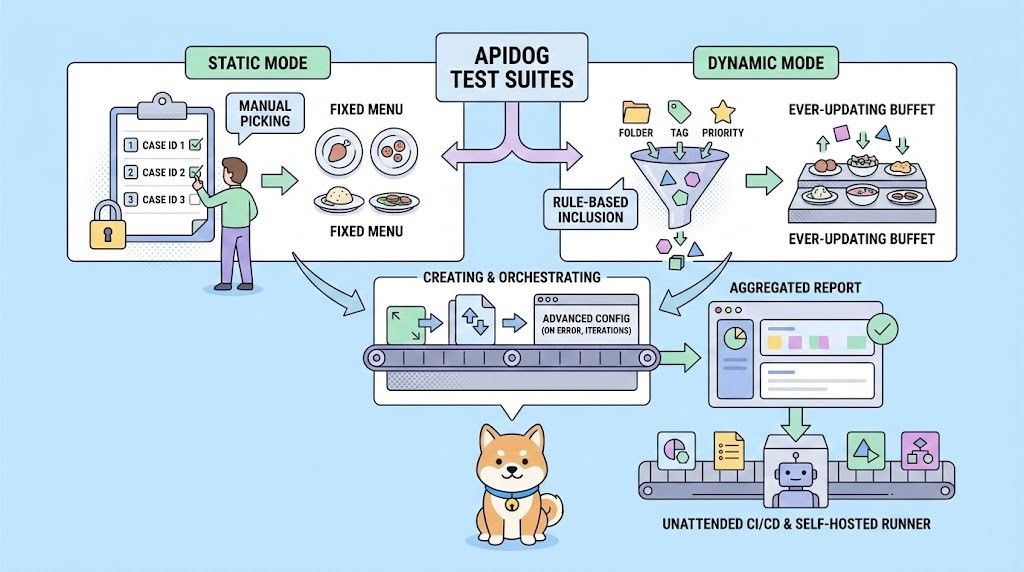

Testing and Optimizing API Requests with Apidog

When working with the Gemini 2.5 Pro API, testing and debugging your requests is crucial to ensure they work as expected. This is where Apidog comes in. Apidog is a powerful API management tool that simplifies the process of sending, testing, and analyzing API requests.

Step 1: Set Up Apidog

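After downloading and installing Apidog, create a new project. Add a new API request, select the POST method, and enter the Gemini API endpoint URL. For this model, the URL is https://generativelanguage.googleapis.com/v1beta/models/gemini-2.5-pro-exp-03-25:generateContent (see the Gemini API reference in the Google AI documentation).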

Step 2: Configure the Request

In Apidog, set the following:

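- Headers: Add `x-goog-api-key: YOUR_API_KEY` and `Content-Type: application/json`. Gemini API keys are not sent as `Authorization: Bearer` tokens. Alternatively, you can append `?key=YOUR_API_KEY` to the URL.
- Body: Use a JSON structure that lists your prompt under `contents`, for example `{"contents": [{"parts": [{"text": "Explain how neural networks work in simple terms."}]}]}`.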
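To see exactly what Apidog will send, it can help to build the same request in plain Python. This sketch uses only the standard library and does not send anything. The endpoint follows the Gemini API’s v1beta `generateContent` pattern, and `build_request` is a hypothetical helper name.

```python
import json
import urllib.request

# REST endpoint pattern for the Gemini API (v1beta); verify against the current docs.
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def build_request(api_key: str, prompt: str,
                  model: str = "gemini-2.5-pro-exp-03-25") -> urllib.request.Request:
    """Build (but do not send) the POST request you would configure in Apidog."""
    url = f"{BASE_URL}/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,  # API keys go in this header, not in a Bearer token
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("YOUR_API_KEY", "Explain how neural networks work in simple terms.")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```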

Step 3: Send and Analyze the Request

Click the “Send” button in Apidog to execute the request. Apidog will display the response, including the status code, response time, and the actual response body. This allows you to quickly identify any issues, such as authentication errors or incorrect payloads.
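Outside Apidog, you can script the same check (status code, response time, and response body). The sketch below assumes the standard generateContent JSON shape, where the reply text lives under `candidates[0].content.parts[*].text`. Both helper names are hypothetical.

```python
import json
import time
import urllib.error
import urllib.request
from typing import Tuple


def parse_generated_text(body: dict) -> str:
    """Concatenate the text parts of the first candidate in a generateContent reply."""
    candidates = body.get("candidates") or []
    if not candidates:
        # A blocked prompt returns promptFeedback instead of candidates.
        raise ValueError(f"No candidates in response: {body.get('promptFeedback')}")
    parts = candidates[0].get("content", {}).get("parts", [])
    return "".join(part.get("text", "") for part in parts)


def send_and_time(req: urllib.request.Request) -> Tuple[int, float, dict]:
    """Send a request and return (status code, seconds elapsed, parsed JSON body)."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(req, timeout=120) as resp:
            status, raw = resp.status, resp.read()
    except urllib.error.HTTPError as err:
        # 4xx/5xx replies (e.g. a bad key or malformed payload) still carry a JSON body.
        status, raw = err.code, err.read()
    elapsed = time.perf_counter() - start
    return status, elapsed, json.loads(raw or b"{}")


# Usage, given a urllib.request.Request for the generateContent endpoint:
#   status, elapsed, body = send_and_time(req)
#   print(f"HTTP {status} in {elapsed:.2f}s")
#   print(parse_generated_text(body) if status == 200 else body)
```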

Step 4: Optimize Your Requests

Apidog also provides tools to save and reuse requests, set up automated tests, and monitor API performance. For example, you can create a test case to verify that the API returns a valid response for different prompts, ensuring your integration is robust.
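An Apidog test case like this one, which checks that every prompt in a list gets a non-empty reply, can also live in your own test suite. In this sketch, `generate` is any callable that maps a prompt to reply text, for example `lambda p: model.generate_content(p).text`. `check_prompts` is a hypothetical helper.

```python
from typing import Callable, Iterable, List


def check_prompts(generate: Callable[[str], str], prompts: Iterable[str]) -> List[str]:
    """Run every prompt and collect failures instead of stopping at the first one."""
    failures = []
    for prompt in prompts:
        try:
            reply = generate(prompt)
        except Exception as exc:  # record API or network errors as failures
            failures.append(f"{prompt!r}: raised {type(exc).__name__}: {exc}")
            continue
        if not reply or not reply.strip():
            failures.append(f"{prompt!r}: empty reply")
    return failures
```

Collecting all failures, rather than asserting on the first reply, gives a fuller picture when several prompts misbehave at once.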

By using Apidog, you can streamline your development workflow and catch errors in your Gemini 2.5 Pro API integration before they reach production.

Conclusion

The Gemini 2.5 Pro API gives developers a straightforward way to add advanced reasoning and coding capabilities to their applications. This guide covered text generation, and the API also supports multimodal inputs and streaming responses. By following the steps in this guide, you can set up, configure, and call the API to build AI-driven solutions. Tools like Apidog also make it easier to test and optimize your API requests.

Start experimenting with the Gemini 2.5 Pro API today and explore what Google describes as its most intelligent AI model yet. Whether you’re building a game, solving complex problems, or analyzing multimodal data, this API gives you a capable foundation.