What if you could ship AI-powered features without burning through your runway? OpenAI offers up to $100,000 in free API credits to early-stage startups through its Startup Credits Program. No revenue requirements, just a real product with traction! For developers building on GPT-4, computer vision, or embeddings, this transforms infrastructure costs from a monthly liability into a competitive advantage.

Bootstrapped startups and pre-revenue companies face a brutal reality: OpenAI's standard pricing consumes runway fast. At roughly $0.005 per 1K input tokens for GPT-4o (the rate used in the routing example later in this guide; confirm it against the current pricing page), costs add up quickly when you're processing thousands of user requests daily. Most developers either throttle their AI features or burn cash they don't have. The Startup Credits Program eases this trade-off, letting you build ambitious AI features with far less worry about the next API bill.

Understanding the OpenAI Startup Credits Program

OpenAI's Startup Credits Program provides tiered credit allocations based on company stage and VC backing. The structure works as follows:

Tier 1: $2,500 Credits

- Available to all eligible startups

- No VC referral required

- Valid for 12 months from approval

Tier 2: $15,000-$25,000 Credits

- Requires affiliation with a program partner

- Partners include accelerators (Y Combinator, Techstars) and VCs (a16z, Sequoia, Thrive Capital)

- 12-month validity period

Tier 3: $50,000-$100,000+ Credits

- Reserved for highly competitive applications

- Typically requires top-tier VC backing or exceptional traction metrics

- Extended validity and priority support included

Credits apply to all OpenAI API services: GPT-4o, GPT-4o mini, DALL-E 3, Whisper, embeddings, and fine-tuning. You cannot use credits for ChatGPT Plus subscriptions or enterprise contracts—they're strictly for API consumption.
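To judge how far a tier stretches, a rough runway calculation helps. The sketch below is plain arithmetic: `credit_runway` is a hypothetical helper, the monthly spend figures are made-up inputs, and the 12-month default comes from the validity period listed for the tiers above.

```python
def credit_runway(credits_usd: float, monthly_spend_usd: float,
                  validity_months: int = 12) -> dict:
    """Estimate how many months a grant covers and how much would expire unused."""
    if monthly_spend_usd <= 0:
        raise ValueError("monthly_spend_usd must be positive")
    # Months the credits would last if they never expired
    months_of_spend = credits_usd / monthly_spend_usd
    # Credits stop being usable once the validity window closes
    months_covered = min(months_of_spend, validity_months)
    expires_unused = max(0.0, credits_usd - monthly_spend_usd * validity_months)
    return {
        "months_covered": round(months_covered, 1),
        "expires_unused_usd": round(expires_unused, 2),
    }


# Hypothetical scenarios for a $2,500 grant
print(credit_runway(2500, 500))
print(credit_runway(2500, 100))
```

If the second number is large, your usage is too low to consume the grant before it expires, which is a hint to ship the AI features you have been holding back.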

The program partners with over 200 VCs and accelerators globally. Notable partners include Lightspeed Venture Partners, Jason Calacanis's LAUNCH Fund, Floodgate, and Bloomberg Beta. Each partner receives referral codes they distribute to portfolio companies.

Application Requirements and Eligibility

OpenAI maintains specific criteria to qualify for startup credits. Understanding these requirements before applying saves time and increases approval odds.

Company Stage Requirements

Your startup must be less than 5 years old from incorporation date. Pre-revenue companies qualify—OpenAI explicitly states "no revenue requirement, just a real product & traction." However, you need demonstrable user engagement: active beta users, waitlist signups, or validated market demand.

Product Requirements

You must have a functional product or prototype. Ideas without implementation don't qualify. The product should meaningfully integrate OpenAI's API—not just wrap ChatGPT in a different interface. Ideal candidates use GPT-4 for core functionality: document analysis, code generation, conversational agents, or multimodal processing.

Legal Structure

Your company needs formal incorporation. Sole proprietorships and unregistered businesses are ineligible. You must provide:

- Certificate of incorporation

- Tax ID/EIN documentation

- Cap table showing ownership structure

VC Referral Requirement (Tier 2+)

For credits above $2,500, you need a referral code from a program partner. This creates a gatekeeping mechanism—OpenAI relies on VC partners to pre-filter applications. If you're not in an accelerator or VC portfolio, you have three options:

- Apply to partner accelerators—Y Combinator, Techstars, and 500 Startups all provide referral codes to accepted companies

- Network with partner VCs—Attend demo days, pitch events, or warm introductions to secure codes

- Start with Tier 1—Build traction with $2,500 credits, then reapply with stronger metrics

Geographic Restrictions

The program is currently limited to startups in supported OpenAI countries. Sanctioned regions and certain jurisdictions are excluded. Check OpenAI's geographic availability before applying.

Step-by-Step Application Process

The application process varies by tier. Here's how to navigate each path:

Tier 1 ($2,500) Application

Navigate to openai.com/startups. Click "Apply Now" and select "Apply directly to OpenAI."

Complete the application form:

- Company Information: Legal name, incorporation date, website, employee count

- Product Description: What you built, what problem it solves, how you use OpenAI's API

- Traction Metrics: Active users, revenue (if any), growth rate, funding raised

- Use Case Details: Specific API endpoints you plan to use, expected monthly token consumption

Upload required documents:

- Certificate of incorporation

- Cap table

- Product demo or screenshots

Submit and wait for review. OpenAI typically responds within 2-4 weeks. Approved startups receive credits within 48 hours of acceptance.

Tier 2+ Application (With VC Referral)

Obtain a referral code from your accelerator or VC partner. The code format is typically PARTNER-XXXX-XXXX.

Visit openai.com/startups and click "Apply with referral."

The referral application is shorter:

- Referral Code: Enter your partner-provided code

- Company Verification: Confirm legal name and incorporation match partner records

- Use Case Description: Brief explanation of how you'll use the credits

Partner-referred applications process faster—usually within 1 week—because the VC has already vetted your company.

Application Tips

Be specific about API usage. Instead of "we use GPT-4," write "we process 50,000 customer service tickets monthly using GPT-4o for intent classification and response generation, consuming approximately 15M input tokens and 5M output tokens."

Show traction with metrics. "1,000 MAU growing 20% month-over-month" beats "we have users."

Demonstrate technical competence. Mention specific models (GPT-4o, text-embedding-3-large), architectures (RAG pipelines, agent frameworks), and infrastructure (vector databases, caching layers).
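The token figures in the first tip translate directly into a dollar estimate you can put in the application. A minimal sketch, assuming per-1K pricing: `monthly_cost_usd` is a hypothetical helper, and both prices passed in are placeholder assumptions rather than quoted rates, so substitute the numbers from the current pricing page.

```python
def monthly_cost_usd(input_tokens: int, output_tokens: int,
                     input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Token volume times per-1K price, summed over input and output."""
    input_cost = input_tokens / 1000 * input_price_per_1k
    output_cost = output_tokens / 1000 * output_price_per_1k
    return round(input_cost + output_cost, 2)


# Volumes from the example tip: 15M input and 5M output tokens per month.
# Both prices below are placeholder assumptions.
estimate = monthly_cost_usd(
    15_000_000, 5_000_000,
    input_price_per_1k=0.005, output_price_per_1k=0.015,
)
print(f"Estimated monthly spend: ${estimate:,.2f}")
```

Quoting a figure like this alongside your token volumes shows reviewers you understand your own unit economics.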

Alternative Credit Programs

If you don't qualify for OpenAI's Startup Credits or need additional resources, several alternatives provide free or subsidized API access.

Microsoft for Startups Founders Hub

Microsoft offers $2,500 in Azure OpenAI Service credits plus $1,000 in Azure OpenAI Service Standard Inference credits (limited time). The program has broader eligibility—no VC referral required.

Apply at microsoft.com/startups. Requirements:

- Less than $10M total funding raised

- Less than $1M annual revenue

- Less than 5 years old
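The three thresholds above are easy to check before you spend time on the form. This is only a sketch of the criteria as listed in this article: the `Startup` type and `founders_hub_blockers` function are hypothetical, and Microsoft may apply further conditions that are not modeled here.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Startup:
    total_funding_usd: float
    annual_revenue_usd: float
    incorporated_on: date


def founders_hub_blockers(startup: Startup, today: date) -> list[str]:
    """Return the listed criteria the startup fails; an empty list means none."""
    blockers = []
    if startup.total_funding_usd >= 10_000_000:
        blockers.append("funding must be under $10M")
    if startup.annual_revenue_usd >= 1_000_000:
        blockers.append("revenue must be under $1M")
    # Approximate age in years; 365.25 smooths over leap years
    age_years = (today - startup.incorporated_on).days / 365.25
    if age_years >= 5:
        blockers.append("company must be under 5 years old")
    return blockers


example = Startup(2_000_000, 0, date(2022, 1, 1))
print(founders_hub_blockers(example, today=date(2024, 6, 1)))
```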

Benefits include technical support, Azure infrastructure credits up to $150,000, and access to OpenAI models through Azure's API. The models match those on the direct OpenAI API, though Azure uses its own endpoints and deployment names and adds enterprise-grade SLAs.

Azure OpenAI Service

If you're already using Microsoft Azure, apply for Azure OpenAI Service access. Microsoft occasionally offers promotional credits for new AI workloads. The service provides the same GPT-4, DALL-E, and Whisper models as direct OpenAI, plus enterprise features like private endpoints and compliance certifications.

Poe Developer Platform

Quora's Poe offers free API access to multiple models including GPT-4o, Claude, and Gemini through a unified interface. While not a direct OpenAI credit, it lets you prototype and test AI features without API costs. Limited to 100 requests/day on the free tier, but sufficient for development and small-scale deployments.

Community and Research Grants

OpenAI sporadically offers research credits for academic institutions and nonprofit organizations working on AI safety, alignment, or beneficial applications. These require detailed proposals and are highly competitive, but provide substantial uncapped credits for qualified projects.

Startup-Specific Promotions

Monitor OpenAI's blog and Twitter/X account for limited-time promotions. During major product launches (GPT-4o, o1), OpenAI sometimes offers bonus credits to active developers. The research preview program for o1-preview and o1-mini provided free access to new models before general pricing.

Maximizing Your Credits

Once approved, strategic credit usage extends your runway significantly.

Track Consumption Programmatically

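Every API response reports the tokens it consumed. Log those counts as requests complete so spend never surprises you:

    import os

    from openai import OpenAI

    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": "ping"}],
    )

    # Each response carries a usage object with its token counts
    usage = response.usage
    print(f"Input tokens: {usage.prompt_tokens}")
    print(f"Output tokens: {usage.completion_tokens}")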

Set up alerts when you hit 50%, 75%, and 90% of your credit allocation. Use the Usage Dashboard at platform.openai.com/usage for visual tracking.

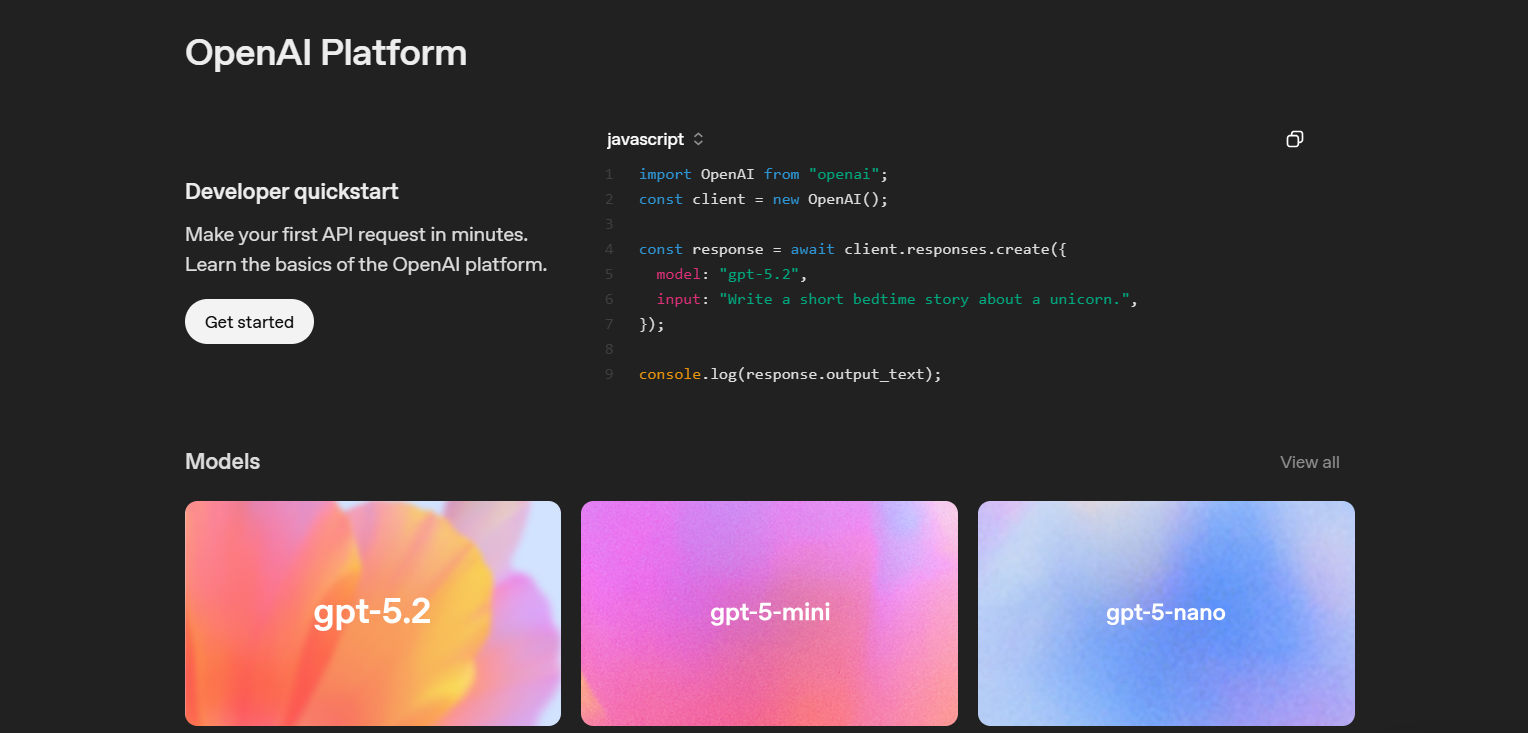
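The 50%, 75%, and 90% alerts can be sketched as a small check that runs each time you record spend. This assumes you track cumulative spend yourself; `crossed_thresholds` and the sample numbers are hypothetical, and how you deliver the alert (email, Slack, pager) is left out.

```python
ALERT_THRESHOLDS = (0.50, 0.75, 0.90)


def crossed_thresholds(previous_spend: float, current_spend: float,
                       credit_total: float,
                       thresholds=ALERT_THRESHOLDS) -> list[float]:
    """Return the thresholds crossed between two readings, so each fires once."""
    if credit_total <= 0:
        raise ValueError("credit_total must be positive")
    before = previous_spend / credit_total
    after = current_spend / credit_total
    # A threshold fires only when spend moves from below it to at or above it
    return [t for t in thresholds if before < t <= after]


# Hypothetical readings against a $2,500 grant
for fraction in crossed_thresholds(1000, 1300, 2500):
    print(f"Alert: {fraction:.0%} of credits used")
```

Comparing against the previous reading, rather than only the current one, keeps the same alert from firing on every request once a threshold has been passed.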

Optimize Model Selection

Use GPT-4o mini for the bulk of routine tasks. At the per-token prices shown below it is roughly 33x cheaper than GPT-4o on input tokens, and it handles most queries adequately. Reserve GPT-4o for complex reasoning, code generation, or when users specifically request premium quality.

Implement model routing logic:

    def select_model(prompt_complexity):
        # Estimate complexity based on token count, presence of code, or user tier
        if prompt_complexity < 0.7:
            return "gpt-4o-mini"  # $0.00015 per 1K input tokens
        else:
            return "gpt-4o"  # $0.005 per 1K input tokens
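The routing function needs a complexity score from somewhere. One possible heuristic, using the three signals named above (token count, presence of code, user tier), is sketched below. The function name `estimate_complexity`, the weights, the 4-characters-per-token approximation, and the `"premium"` tier label are all assumptions to tune against your own traffic.

```python
import re

# Crude signals that a prompt contains source code
CODE_HINTS = re.compile(r"\bdef \w+\(|\bclass \w+|\bfunction \w+\(")


def estimate_complexity(prompt: str, user_tier: str = "free") -> float:
    """Return a score in [0, 1]; the weights are arbitrary starting points."""
    # Rough token estimate: about 4 characters per token for English text
    approx_tokens = len(prompt) / 4
    score = min(approx_tokens / 2000, 0.6)  # long prompts push the score up
    if CODE_HINTS.search(prompt):
        score += 0.3  # code usually benefits from the stronger model
    if user_tier == "premium":
        score += 0.2  # paying users get the benefit of the doubt
    return min(score, 1.0)


print(estimate_complexity("What is your refund policy?"))
```

Pass the result to the routing function and log which model each request used, so you can check whether the cheap path is actually handling the share of traffic you expect.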

Implement Caching

Cache embeddings and completions to reduce redundant API calls:

    import hashlib
    import json
    from functools import lru_cache

    import redis

    cache = redis.Redis()  # assumes a Redis server on localhost:6379

    @lru_cache(maxsize=10000)  # in-process layer in front of Redis
    def get_embedding(text):
        # `client` is the OpenAI client created earlier
        text_hash = hashlib.md5(text.encode()).hexdigest()
        key = f"embed:{text_hash}"

        # Check the shared cache first
        cached = cache.get(key)
        if cached:
            return json.loads(cached)

        # Call the API only on a cache miss
        response = client.embeddings.create(
            model="text-embedding-3-small",
            input=text,
        )
        embedding = response.data[0].embedding

        # Cache for 30 days (2,592,000 seconds)
        cache.setex(key, 2592000, json.dumps(embedding))
        return embedding
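Completions can be cached the same way when the prompt is deterministic and not user-specific (FAQ answers, fixed classification prompts). A minimal in-memory sketch follows. `CompletionCache` is a hypothetical class, and `call_model` stands in for whatever wrapper you write around the chat API, so nothing here touches the network.

```python
import hashlib
import json
from typing import Callable


class CompletionCache:
    """In-memory cache keyed on model + messages; swap the dict for Redis in production."""

    def __init__(self, call_model: Callable[[str, list], str]):
        self._call_model = call_model  # hypothetical wrapper around the chat API
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, messages: list) -> str:
        # sort_keys makes the key stable regardless of dict ordering
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def complete(self, model: str, messages: list) -> str:
        key = self._key(model, messages)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        text = self._call_model(model, messages)
        self._store[key] = text
        return text
```

Including the model name in the key prevents a cached GPT-4o mini answer from being served where GPT-4o was requested. Skip caching for prompts sampled at a nonzero temperature if you want varied responses.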

Batch Processing

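Use the Batch API for non-real-time workloads. Batched requests cost 50% less than synchronous calls:

    import json

    prompts = ["Summarize ticket 1", "Summarize ticket 2"]  # placeholder inputs

    # Build one request per prompt
    batch_requests = [
        {
            "custom_id": f"req-{i}",
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": "gpt-4o-mini",
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        for i, prompt in enumerate(prompts)
    ]

    # The Batch API expects JSONL: one JSON object per line, not a JSON array
    jsonl = "\n".join(json.dumps(request) for request in batch_requests)

    # Upload and submit the batch (`client` is the OpenAI client created earlier)
    batch_file = client.files.create(
        file=("batch.jsonl", jsonl.encode()),
        purpose="batch",
    )
    batch = client.batches.create(
        input_file_id=batch_file.id,
        endpoint="/v1/chat/completions",
        completion_window="24h",
    )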
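Once a batch completes, the results come back as a JSONL file whose lines are not guaranteed to follow input order, so match them on `custom_id`. The sketch below assumes the output line shape described in the Batch API documentation (`custom_id`, `response.status_code`, `response.body`, `error`); verify it against the current docs. `parse_batch_output` is a hypothetical helper that works on text you have already downloaded.

```python
import json


def parse_batch_output(jsonl_text: str) -> tuple[dict, dict]:
    """Split batch results into {custom_id: reply_text} and {custom_id: error}."""
    replies, failures = {}, {}
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines
        record = json.loads(line)
        custom_id = record["custom_id"]
        response = record.get("response") or {}
        if record.get("error") or response.get("status_code") != 200:
            failures[custom_id] = record.get("error") or response.get("body")
            continue
        replies[custom_id] = response["body"]["choices"][0]["message"]["content"]
    return replies, failures
```

Anything that lands in `failures` can be collected into a new, smaller batch and resubmitted, which keeps retries on the discounted path as well.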

Structured Outputs

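Use function calling with a strict JSON schema so the model returns fields you can parse directly, instead of free text you have to clean up:

    import json

    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": "Extract invoice data"}],
        tools=[{
            "type": "function",
            "function": {
                "name": "extract_invoice",
                "strict": True,
                "parameters": {
                    "type": "object",
                    "properties": {
                        "invoice_number": {"type": "string"},
                        "total": {"type": "number"},
                    },
                    "required": ["invoice_number", "total"],
                    "additionalProperties": False,
                },
            },
        }],
        # Force the model to call extract_invoice
        tool_choice={"type": "function", "function": {"name": "extract_invoice"}},
    )

    # The arguments arrive as a JSON string on the tool call
    arguments = response.choices[0].message.tool_calls[0].function.arguments
    invoice = json.loads(arguments)

With strict mode enabled, the arguments follow the schema, so you can drop regex parsing. Still handle refusals and responses cut off by the token limit.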
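Even with a schema in place, a cheap check before the data reaches your database is worth having. A minimal sketch, assuming the function-call arguments arrive as a JSON string containing the `invoice_number` and `total` fields from the example above; `parse_invoice_arguments` is a hypothetical helper.

```python
import json


def parse_invoice_arguments(arguments: str) -> dict:
    """Parse function-call arguments and check the two fields used in the example."""
    data = json.loads(arguments)  # raises json.JSONDecodeError on truncated output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("invoice_number"), str):
        raise ValueError("invoice_number missing or not a string")
    total = data.get("total")
    # bool is a subclass of int in Python, so reject it explicitly
    if isinstance(total, bool) or not isinstance(total, (int, float)):
        raise ValueError("total missing or not a number")
    return {"invoice_number": data["invoice_number"], "total": float(total)}


print(parse_invoice_arguments('{"invoice_number": "INV-42", "total": 199.5}'))
```

Catching the failure here costs nothing, whereas a silent bad record often means a second API call later to re-extract the same document.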

Conclusion

OpenAI's Startup Credits Program removes the financial barrier to building AI-powered products. With up to $100,000 available through partner referrals—or $2,500 direct to OpenAI—you can ship ambitious features, acquire users, and prove traction before spending a dollar on API costs. The program's structure rewards real products with demonstrated usage, not just pitch decks and promises.

Apply with specific use cases, clear traction metrics, and technical competence. If you lack a VC referral, start with Tier 1 credits, build measurable growth, and leverage that momentum to secure partner introductions. Alternative programs like Microsoft Founders Hub provide parallel paths to free credits.

When building on OpenAI's API—whether consuming startup credits or managing production workloads—streamline your development workflow with Apidog. It handles API testing, documentation generation, and team collaboration so you can focus on shipping features instead of debugging HTTP requests.