Let’s face it: AI coding assistants like GitHub Copilot are fantastic, but their subscription costs can burn a hole in your wallet. Enter DeepSeek R1, a free, open-source language model that rivals GPT-4 and Claude 3.5 in reasoning and coding tasks. Pair it with Cline, a VS Code plugin that turns this AI into a full-fledged coding agent, and you’ve got a powerhouse setup that writes, debugs, and even executes code (with your approval), all without spending a dime when you run the model locally.

In this guide, I’ll walk you through everything you need to know, from installing Cline to optimizing DeepSeek R1 for your projects. Let’s get started!

What Makes DeepSeek R1 Special?

1. It’s Free (Yes, Really!)

Unlike proprietary models, DeepSeek R1 is open-source (MIT-licensed) and commercially usable. Run it on your own hardware and there are no token limits and no fees, just raw AI power.

2. Performance That Rivals Paid Models

DeepSeek R1 excels in coding, math, and logical reasoning. For example, its distilled 32B-parameter variant outperforms OpenAI’s o1-mini on code-generation benchmarks, and its distilled 70B variant matches Claude 3.5 Sonnet on complex tasks.

3. Flexible Deployment Options

Run it locally for privacy and zero per-token cost, or use DeepSeek’s low-cost API for cloud-based access. API rates change over time, so check DeepSeek’s pricing page for current figures.

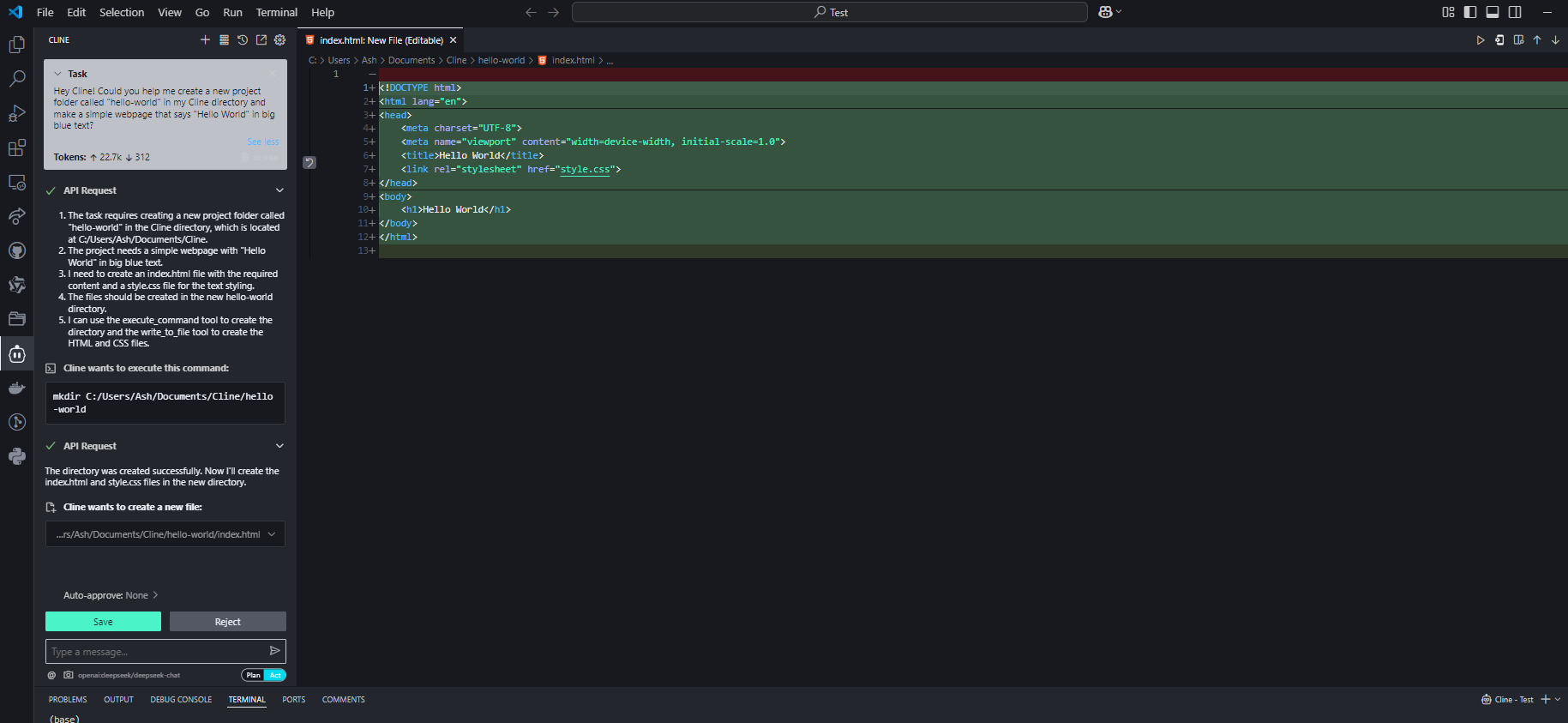

Setting Up DeepSeek R1 in VS Code with Cline

Step 1: Install the Cline Plugin

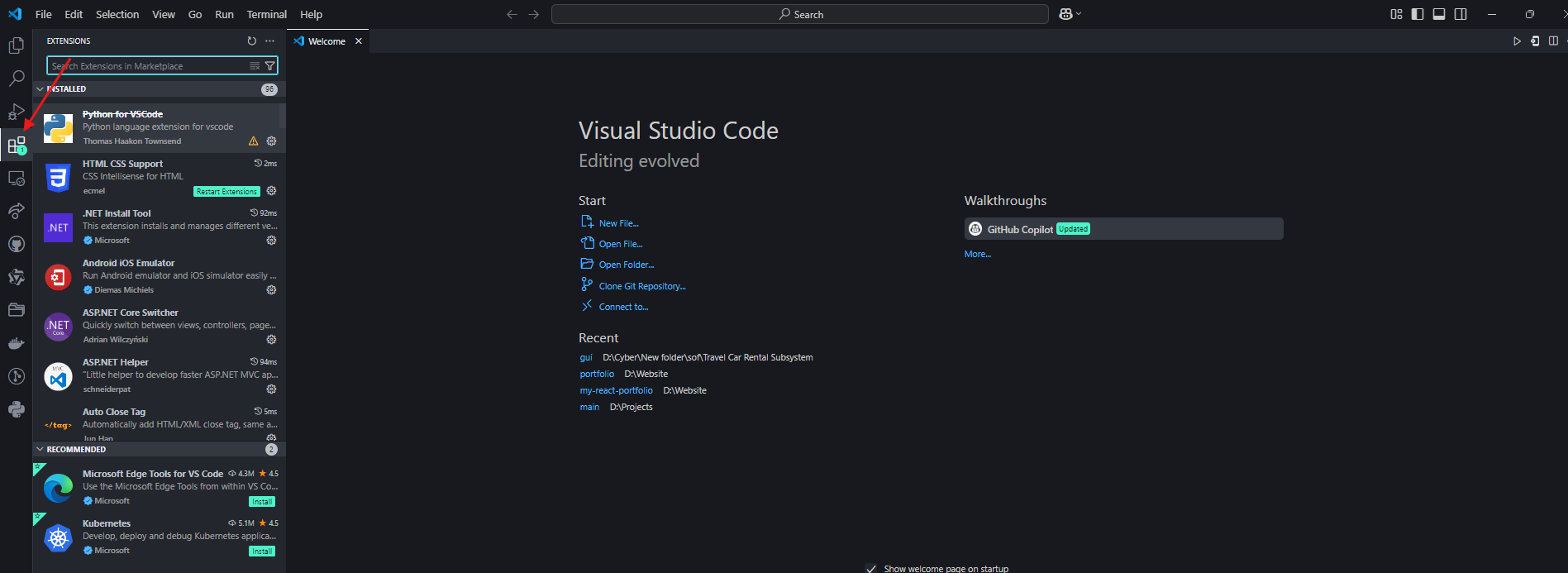

1. Open VS Code and navigate to the Extensions tab.

2. Search for “Cline” and install it.

3. Click the robot icon in the left sidebar to activate Cline.

Step 2: Choose Your DeepSeek R1 Workflow

Option A: Local Setup (Free, Privacy-First)

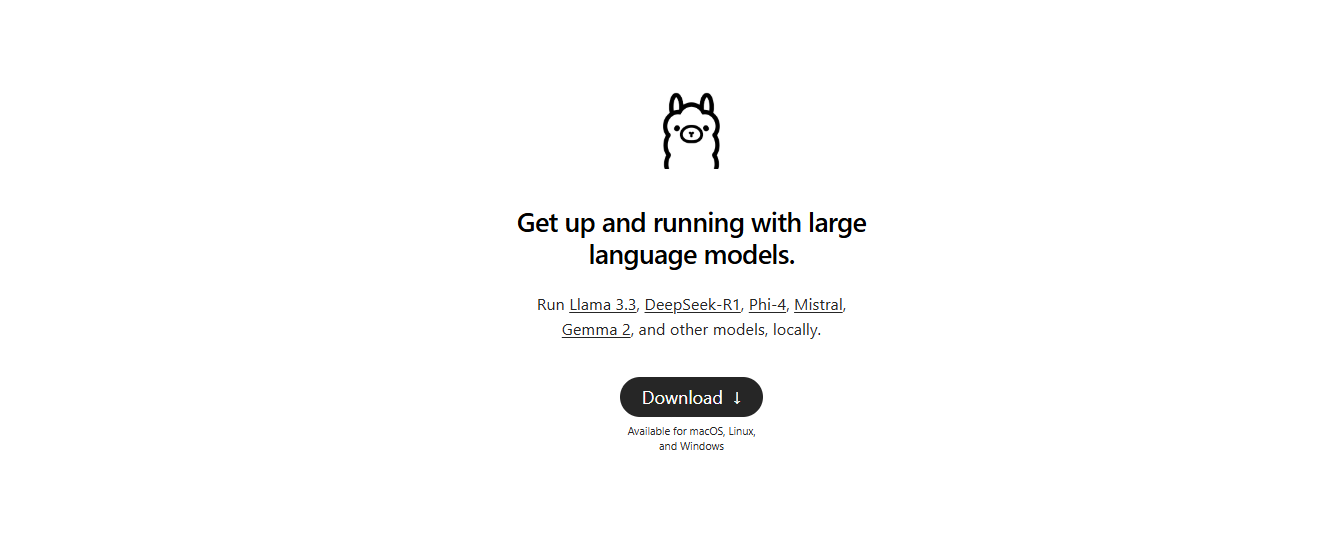

1. Install Ollama: Download it from ollama.com to manage local AI models.

2. Pull the Model: In your terminal, run:

```shell
ollama pull deepseek-r1:14b  # For mid-tier hardware (e.g., RTX 3060)
```

Smaller models like 1.5B work for basic tasks, but 14B or larger is recommended for coding.
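Before pointing Cline at Ollama, it’s worth confirming the download worked. `ollama list` in a terminal shows installed models; here is the same check in Python against Ollama’s local REST API. This sketch assumes Ollama is listening on its default address, `http://localhost:11434`, and that its `/api/tags` endpoint returns installed models under a `models` key, each with a `name`. The helper names are my own, not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def installed_models(tags_json: dict) -> list[str]:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in tags_json.get("models", [])]


def model_available(tags_json: dict, wanted: str) -> bool:
    """True if the wanted tag (e.g. 'deepseek-r1:14b') is installed."""
    return wanted in installed_models(tags_json)


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Ask the running Ollama server which models are installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)
```

With Ollama running, `model_available(fetch_tags(), "deepseek-r1:14b")` should return `True` once the pull has finished.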

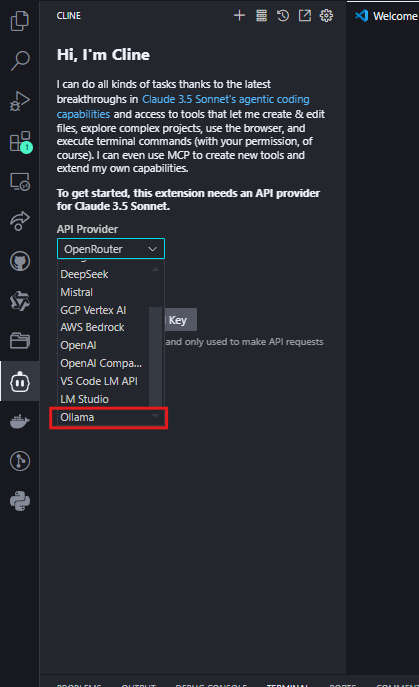

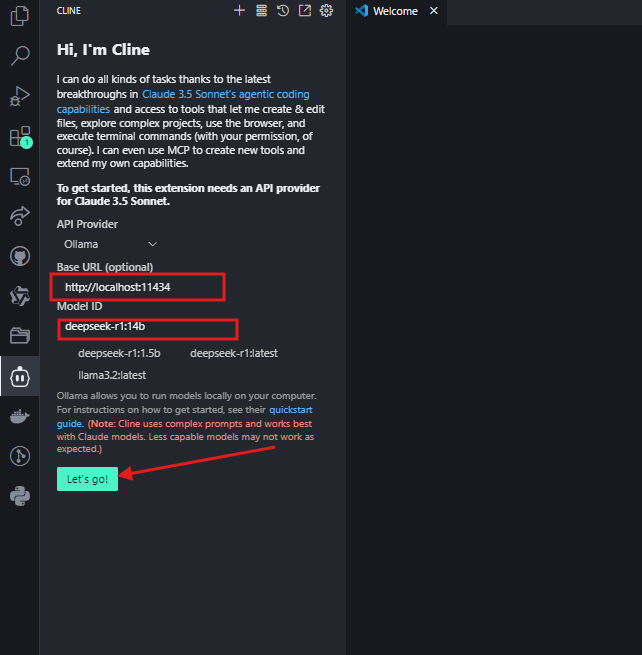

3. Configure Cline:

   - Set the API Provider to “Ollama”.

   - Enter `http://localhost:11434` as the Base URL and select your model (e.g., `deepseek-r1:14b`).

Click “Let’s go” and Cline is ready to use.
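If Cline misbehaves, it helps to rule out the model server by sending it a prompt directly at the same `http://localhost:11434` address. This is a minimal sketch assuming Ollama’s `/api/chat` endpoint with streaming turned off, where the reply text arrives under `message.content`. The `<think>`-stripping step assumes your Ollama version returns R1’s reasoning inline between `<think>` tags (newer versions may return it separately, in which case the regex simply matches nothing). Function names are illustrative.

```python
import json
import re
import urllib.request

BASE_URL = "http://localhost:11434"  # same Base URL entered in Cline


def build_chat_request(prompt: str, model: str = "deepseek-r1:14b",
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/chat endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def answer_text(reply: dict) -> str:
    """Return the assistant's text, dropping any inline <think>...</think> block."""
    content = reply["message"]["content"]
    return re.sub(r"<think>.*?</think>", "", content, flags=re.DOTALL).strip()


def ask(prompt: str) -> str:
    """Send the prompt to the local server and return the cleaned answer."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=300) as resp:
        return answer_text(json.load(resp))
```

A long timeout is deliberate: R1 “thinks” before answering, so a 14B model on mid-tier hardware can take a while on its first response.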

Option B: OpenRouter Integration (Flexible Model Switching)

For developers who want access to multiple AI models (including DeepSeek R1) through a single API key, OpenRouter offers a streamlined solution. This is ideal if you occasionally need to compare outputs with models like GPT-4 or Claude but want DeepSeek R1 as your default.

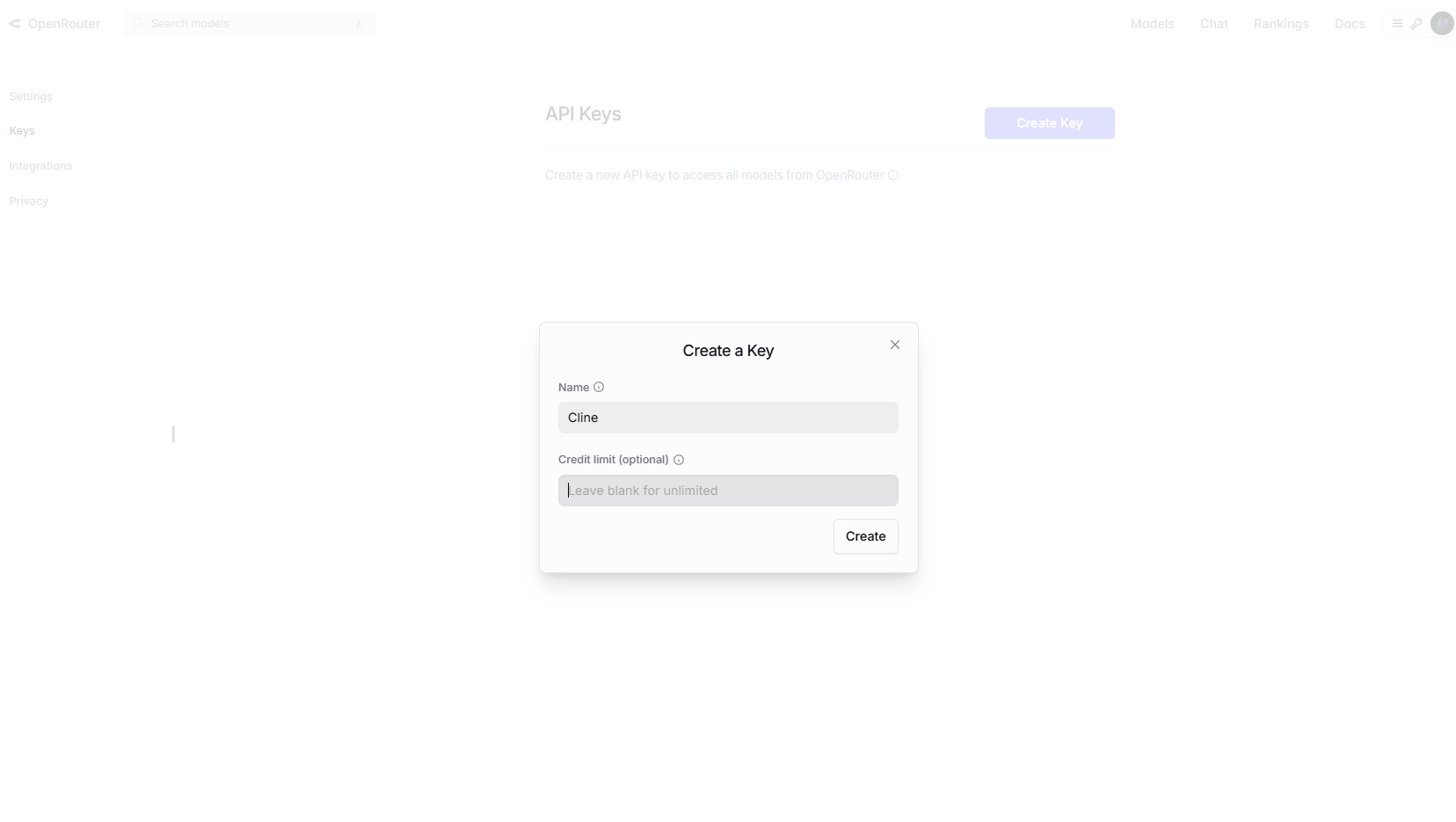

Step 1: Get Your OpenRouter API Key

- Visit OpenRouter.ai and sign up.

- Navigate to API Keys and create a new key.

Optional: Enable spending limits in account settings for cost control.

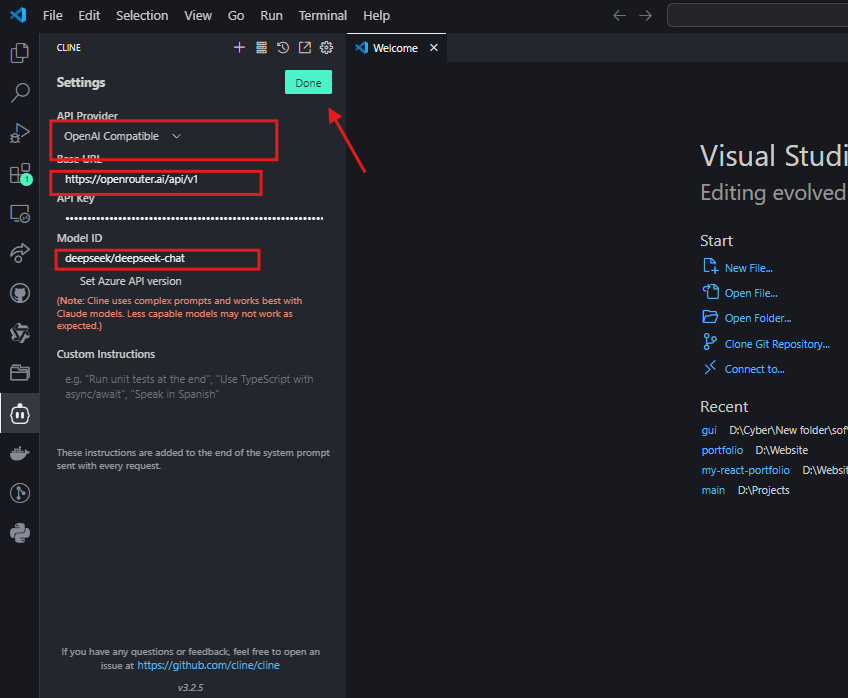

Step 2: Configure Cline for OpenRouter

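- In VS Code, open Cline’s settings.

- Select “OpenAI-Compatible” as the API provider.

- Set the Base URL to `https://openrouter.ai/api/v1`.

- Paste your OpenRouter API key.

- In the Model ID field, enter `deepseek/deepseek-r1`. (The similar-looking `deepseek/deepseek-chat` ID points to DeepSeek V3, DeepSeek’s general chat model, not R1.)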
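Because OpenRouter speaks the OpenAI-compatible protocol, the same Base URL works from any HTTP client, which is handy for checking your key outside VS Code. This sketch assumes the standard `/chat/completions` route under `https://openrouter.ai/api/v1`, a bearer-token `Authorization` header, the model ID `deepseek/deepseek-r1`, and a key stored in a hypothetical `OPENROUTER_API_KEY` environment variable. It reads the answer from `choices[0].message.content`, the usual OpenAI-compatible response shape.

```python
import json
import os
import urllib.request

BASE_URL = "https://openrouter.ai/api/v1"
MODEL_ID = "deepseek/deepseek-r1"  # OpenRouter's ID for DeepSeek R1


def build_openrouter_request(prompt: str, api_key: str,
                             model: str = MODEL_ID) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for OpenRouter."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def reply_text(response: dict) -> str:
    """Pull the assistant message out of an OpenAI-compatible response."""
    return response["choices"][0]["message"]["content"]


def ask_openrouter(prompt: str) -> str:
    # Hypothetical env var name; keep the key wherever suits your setup.
    key = os.environ["OPENROUTER_API_KEY"]
    request = build_openrouter_request(prompt, key)
    with urllib.request.urlopen(request, timeout=300) as resp:
        return reply_text(json.load(resp))
```

If `ask_openrouter("Say hello")` returns text here but Cline still fails, the problem is in Cline’s settings rather than your key or model ID.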

Step 3: Test the Integration

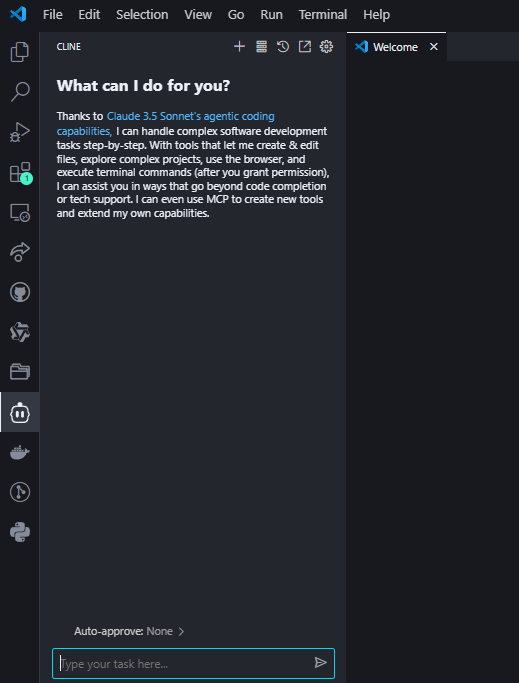

Ask Cline a coding question to confirm the setup.

If configured correctly, DeepSeek R1 will generate code with explanations in Cline’s interface.

Why Choose OpenRouter?

- Multi-Model Access: Easily switch between DeepSeek R1 and 50+ other models (e.g., GPT-4, Claude) without reconfiguring APIs.

- Cost Transparency: Track token usage across all models in one dashboard.

- Fallback Support: Automatically route requests to backup models if DeepSeek R1’s API is overloaded.
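OpenRouter handles fallback on its side, so you don’t need to write this yourself. To make the idea concrete, here is a client-side sketch of the same pattern (not OpenRouter’s implementation): try model IDs in order and move to the next one when a call fails. The `send` callable and the backup model ID in the usage note are hypothetical placeholders for whatever request function and models you use.

```python
from typing import Callable, Sequence


def complete_with_fallback(prompt: str, models: Sequence[str],
                           send: Callable[[str, str], str]) -> tuple[str, str]:
    """Try each model in order; return (model_used, reply) from the first success.

    `send(model, prompt)` is any function that performs the API call and
    raises an exception on failure (e.g. overload or rate limiting).
    """
    errors: list[str] = []
    for model in models:
        try:
            return model, send(model, prompt)
        except Exception as exc:  # record the failure and try the next model
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

For example, `complete_with_fallback(prompt, ["deepseek/deepseek-r1", "some/backup-model"], my_send)` returns the first model that answered along with its reply, so you can log when a fallback kicked in.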

Cost Considerations

While OpenRouter’s pricing for DeepSeek R1 aligns closely with DeepSeek’s direct API costs, rates vary by provider and change over time, so always check OpenRouter’s pricing page for current figures. For heavy users, OpenRouter’s unified billing can simplify expense management.
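Per-token pricing is easy to sanity-check with a little arithmetic. This sketch takes per-million-token prices as inputs instead of hard-coding any rates, since those change; the prices in the example call are made-up placeholders, not real DeepSeek or OpenRouter rates. One thing to keep in mind: a reasoning model like R1 typically bills its reasoning tokens as output, so output counts can be much larger than the visible answer suggests.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimate request cost in dollars from token counts and per-million-token prices."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# Placeholder prices, not real rates: $0.50/M input, $2.00/M output.
print(round(estimate_cost(200_000, 50_000, 0.50, 2.00), 4))  # → 0.2
```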

Pro Tips for Optimizing Performance

1. Model Size vs. Hardware

| Model | Approx. RAM Needed | Recommended GPU |
|---|---|---|
| 1.5B | 4GB | Integrated |
| 7B | 8–10GB | NVIDIA GTX 1660 |
| 14B | 16GB+ | RTX 3060/3080 |
| 70B | 40GB+ | RTX 4090/A100 |
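A rough rule of thumb for sizing: weight memory in GB ≈ parameters (in billions) × bits per weight ÷ 8. The sketch below applies it. It covers the weights only and ignores the KV cache, context length, and runtime overhead, so treat its output as a lower bound. The ~4.5 bits per weight used for 4-bit quantization is an approximation I’m assuming, not an exact figure for any specific file.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Excludes the KV cache, context buffers, and runtime overhead, so real
    usage is higher.
    """
    return params_billion * bits_per_weight / 8


for size in (1.5, 7, 14, 70):
    fp16 = weight_memory_gb(size, 16)
    q4 = weight_memory_gb(size, 4.5)  # rough average for 4-bit quants (assumption)
    print(f"{size}B: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at ~4-bit")
```

This is why a 70B model is out of reach for most single consumer GPUs even when quantized: its weights alone need roughly 40 GB at ~4.5 bits.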

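Tip: Quantized models (e.g., Q4_K_M GGUF builds) shrink weight memory to roughly 30% of the full 16-bit size, usually without major quality loss. Ollama’s default model tags are typically already 4-bit quantized.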

2. Prompt Engineering

- For Code: Include explicit instructions like “Use Python 3.11 and type hints”.

- For Debugging: Paste error logs and ask “Explain this error and fix it”.
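If you find yourself typing the same constraints over and over, a small template keeps them consistent. The helper below is purely illustrative (its name and wording are mine, not part of Cline or DeepSeek); it combines an explicit language instruction, a full file path, and the raw error log into one prompt you can paste into Cline.

```python
def build_debug_prompt(error_log: str, file_path: str,
                       language: str = "Python 3.11") -> str:
    """Assemble a debugging prompt with explicit constraints and context."""
    return "\n".join([
        f"Use {language} and type hints.",
        f"The error occurs in {file_path}.",
        "Explain this error and fix it:",
        "",
        error_log.strip(),
    ])


# Hypothetical file path and error, for illustration only.
print(build_debug_prompt("KeyError: 'user_id'\n", "/src/app/handlers.py"))
```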

Troubleshooting Common Issues

1. Slow Responses

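- Fix: Switch to a smaller model, or make sure Ollama is actually using your GPU. Ollama uses a supported GPU automatically, and `ollama ps` shows whether a loaded model is running on GPU or CPU. To control how many layers are offloaded to the GPU, set the `num_gpu` option (for example, `PARAMETER num_gpu 12` in a Modelfile).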
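When calling Ollama’s API directly, per-request settings go in an `options` object. This sketch builds a `/api/generate` body that optionally sets `num_gpu` (the number of layers offloaded to the GPU). The value 12 in the usage note is only an example, and I’m assuming your Ollama release still honors this option, so check its parameter docs if the setting seems to have no effect.

```python
import json
from typing import Optional


def generate_payload(prompt: str, model: str = "deepseek-r1:14b",
                     gpu_layers: Optional[int] = None) -> str:
    """Build a JSON body for Ollama's /api/generate endpoint.

    gpu_layers maps to the `num_gpu` option (layers offloaded to the GPU);
    leave it as None to let Ollama decide automatically.
    """
    body: dict = {"model": model, "prompt": prompt, "stream": False}
    if gpu_layers is not None:
        body["options"] = {"num_gpu": gpu_layers}
    return json.dumps(body)
```

POST `generate_payload("Explain recursion", gpu_layers=12)` to `http://localhost:11434/api/generate`; leaving `gpu_layers` unset keeps Ollama’s automatic placement, which is the right default for most machines.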

2. Hallucinations or Off-Track Answers

- Fix: Use stricter prompts (e.g., “Answer using only the provided context”) or upgrade to larger models like 32B.

3. Cline Ignoring File Context

- Fix: Always provide full file paths (e.g., `/src/components/Login.jsx`) instead of vague references.

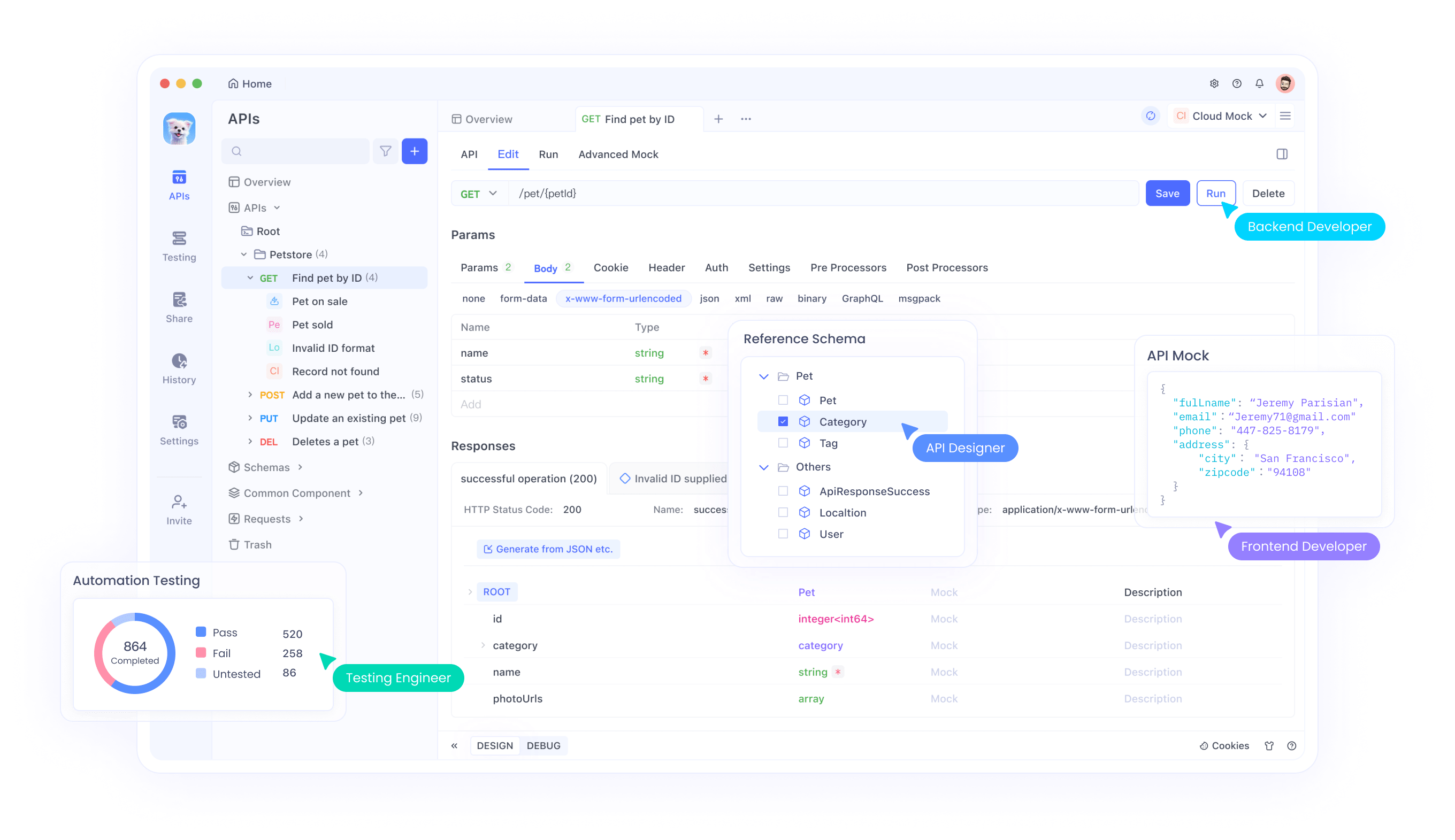

Advanced Use Cases with Apidog Integration

Once your DeepSeek R1 + Cline setup is running, use Apidog to:

- Test API Endpoints: Validate DeepSeek’s responses programmatically.

- Automate Workflows: Chain Cline’s code generation with API calls (e.g., deploy a generated script to AWS).

- Monitor Performance: Track latency and accuracy over time.
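Apidog does this kind of checking through assertions in its own interface. As a plain-Python illustration of the same idea (not Apidog’s API), the sketch below checks that a reply in the OpenAI-compatible shape actually contains a non-empty answer and wasn’t cut off by the token limit before anything downstream consumes it. The field names (`choices`, `message.content`, `finish_reason`) assume that response shape.

```python
def validate_chat_response(resp: dict) -> list[str]:
    """Return a list of problems found in an OpenAI-style chat response (empty if OK)."""
    problems: list[str] = []
    choices = resp.get("choices")
    if not isinstance(choices, list) or not choices:
        return ["missing or empty 'choices'"]
    content = choices[0].get("message", {}).get("content")
    if not isinstance(content, str) or not content.strip():
        problems.append("empty assistant content")
    if choices[0].get("finish_reason") == "length":
        problems.append("answer was cut off by the token limit")
    return problems
```

The truncation check matters more than usual with R1: long reasoning can eat the output budget before the final answer is written.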

Wrapping Up: Why This Combo Wins

DeepSeek R1 and Cline aren’t just tools—they’re a paradigm shift. You get GPT-4-level smarts without the cost, full control over privacy, and a workflow that feels like pairing with a senior developer.

Ready to supercharge your coding?

- Install Cline and Ollama.

- Choose your DeepSeek R1 model.

- Build something amazing—and let me know how it goes!

Don’t forget to download Apidog to streamline API testing and automation. It’s the perfect sidekick for your AI-powered coding journey!