Hey there, fellow developer! If you’ve ever worked on automated testing, you know the sinking feeling of seeing a test fail even though nothing has changed in the code. Let's set a scene that I bet is all too familiar. You push your beautifully crafted code, confident it's your best work yet. You trigger the continuous integration (CI) pipeline and wait for that satisfying green checkmark. But instead, you get a big, angry red X. Your heart sinks. "What did I break?!" You frantically check the logs, only to find... a random test failure. You rerun it: sometimes it passes, sometimes it doesn't.

Sound familiar? You, my friend, have just been victimized by a flaky test.

And here’s the truth: flaky tests are the haunting poltergeists of software development. They fail unpredictably and seemingly at random, eroding trust in your entire testing process, wasting countless hours of investigation, clogging CI/CD pipelines, and frustrating whole teams. In fact, they're such a universal pain point that industry leaders like Google have published extensive research on detecting and mitigating them.

But here's the good news: flaky tests aren't magic. They have specific, identifiable causes, and what can be identified can be fixed.

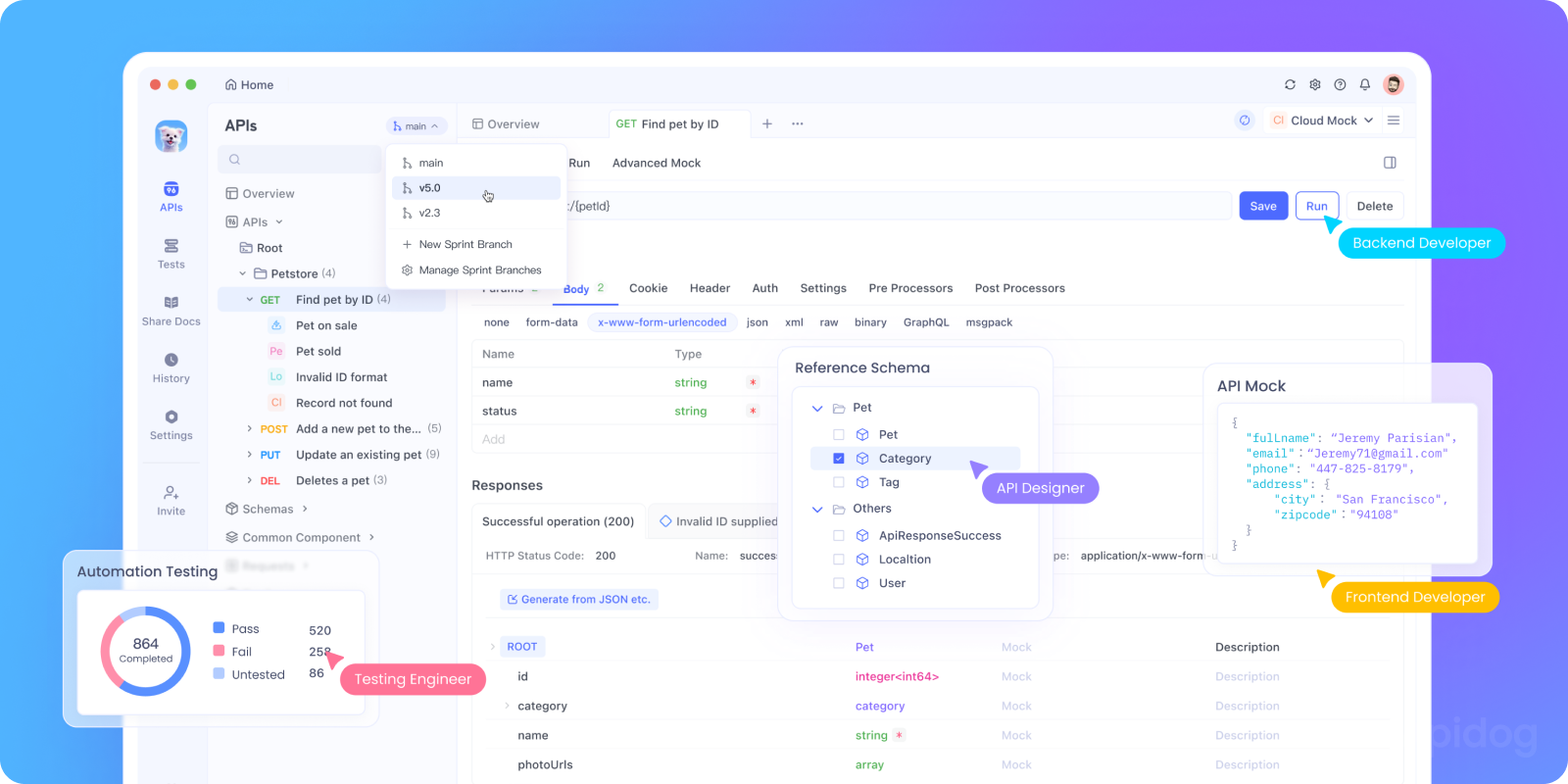


What Exactly Is a Flaky Test, Anyway?

Before we list the culprits, let's define our nemesis. A flaky test is a test that exhibits both passing and failing behavior when run multiple times on the same, identical version of the code. It's not a test that fails consistently because of a bug. It's a test that fails inconsistently, making it a noisy and unreliable indicator of code health.

For example:

- Run #1 → ✅ Pass

- Run #2 → ❌ Fail

- Run #3 → ✅ Pass again

The cost of these tests is immense. They lead to:

- The "Re-Run and Pray" Cycle: Wasting developer and CI resources.

- Alert Fatigue: When tests fail often for no reason, teams start ignoring failures, which means real bugs slip through.

- Slower Development Velocity: Broken builds and investigation time slow down the entire team.

Why Flaky Tests Are Dangerous for Teams

You might think, "It’s just one test failing, I’ll rerun it." But here's the problem:

- Lost Trust → Developers stop trusting test results.

- Slower CI/CD → Pipelines get clogged with retries.

- Hidden Bugs → Real issues get ignored because people assume "oh, it’s just flaky."

- Increased Costs → More reruns mean more time, resources, and infrastructure.

According to industry studies, some companies spend up to 40% of testing time dealing with flakiness. That’s huge!

Now, let's meet the usual suspects.

The Causes and Fixes of Flaky Tests

1. Asynchronous Operations and Race Conditions

This is arguably the king of flaky test causes. In modern applications, almost everything is asynchronous: API calls, database operations, UI updates. If your test doesn't wait properly for these operations to complete, it's essentially guessing. Sometimes it guesses right (the operation finishes fast), and sometimes it guesses wrong (it's slow), leading to a failure.

Why it happens: Your test code executes synchronously, but the application code it's testing does not.

Example: A test that clicks a "Save" button and immediately checks the database for the new record without waiting for the save operation's network request to complete.

The Fix:

- Use Explicit Waits: Never use static sleep() or setTimeout() calls. These are a primary source of flakiness because you're either waiting too long (slowing down tests) or not long enough (causing failures).

- Employ Waiting Strategies: Use tools that allow you to wait for a specific condition. For example:

- UI Tests: Wait for an element to be visible, clickable, or to contain specific text.

- API Tests: Wait for a specific HTTP response status or for a payload to appear in the database.

- Selenium/WebDriver: Use WebDriverWait with expected_conditions.

- Cypress: Cypress has automatic waiting built in for most commands, which is fantastic for avoiding this issue.
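To make the idea of waiting for a condition concrete, here is a minimal sketch of a polling helper in plain Python. The names wait_until and saved are made up for this illustration, and the timer simply stands in for an asynchronous save; real suites would normally reach for the waiting utilities their test framework already provides.

```python
import threading
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0, interval: float = 0.05) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    # One last check so a condition that turned true during the final sleep still counts.
    return condition()


# Hypothetical stand-in for an asynchronous save: the flag is set about 0.2 s from now.
saved = threading.Event()
threading.Timer(0.2, saved.set).start()

# The test proceeds as soon as the save lands, instead of sleeping for a fixed guess.
save_completed = wait_until(saved.is_set, timeout=2.0)
```

The test waits only as long as it has to, and a genuinely stuck operation still fails after the timeout instead of hanging forever.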

2. Test Isolation Issues

Tests should be like polite strangers: they shouldn't leave a mess for the next person. When tests share state and don't clean up after themselves, they can easily interfere with each other. Test A creates a user "test@example.com", passes, but doesn't delete it. Test B then tries to create the same user and fails because of a unique constraint violation.

Why it happens: Shared resources like databases, caches, or file systems are modified by one test, altering the starting state for the next test.

The Fix:

- Ensure Full Isolation: Each test should set up its own required data and tear it down completely afterward. This is the golden rule.

- Use Transactions: A powerful pattern is to run each test inside a database transaction and then roll it back at the end. This leaves the database completely pristine.

- Generate Unique Data: Use unique identifiers (like UUIDs or timestamps) in test data to avoid conflicts. For example, test.user.<timestamp>@example.com.

3. Dependencies on External Services

Does your test suite call a third-party API for payment processing, weather data, or email validation? If so, you've introduced a massive point of failure that is entirely outside your control. That API could be slow, rate-limiting you, down for maintenance, or have changed its response format slightly, all of which will fail your tests through no fault of your own.

Why it happens: The test's success is coupled to the health and performance of an external system.

The Fix:

- Mock and Stub External Services: This is the most important strategy. Instead of making a real HTTP call, intercept the request and return a fake, predetermined response that simulates a success or error case.

- Use Tools for Mocking: This is where Apidog shines. Apidog allows you to create powerful mocks for your APIs. You can define exactly what response an API should return for a given request, completely eliminating reliance on the real, flaky external service. Your tests become fast, reliable, and predictable.

- Use Service Virtualization: For more complex scenarios, tools that simulate the behavior of entire external systems can be used.
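As a rough illustration of stubbing an external call, the sketch below uses unittest.mock from the Python standard library. The function is_freezing and the payload shape are invented for the example; the point is only that the test supplies the response instead of the network.

```python
from unittest.mock import Mock


def is_freezing(city: str, fetch_weather) -> bool:
    """Hypothetical code under test: `fetch_weather` normally performs a real HTTP call."""
    payload = fetch_weather(city)
    return payload["temp_c"] <= 0


# Stub for the success case: a fixed payload, no network involved.
fake_fetch = Mock(return_value={"temp_c": -3})
result = is_freezing("Oslo", fake_fetch)

# Stub for the failure case: simulate the provider timing out.
failing_fetch = Mock(side_effect=TimeoutError("simulated provider timeout"))
```

Because the stub also records its calls, the test can check that the code asked for the right city and can exercise the timeout path on demand rather than waiting for the real service to misbehave.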

4. Unmanaged Test Data

Similar to isolation issues, but broader. If your tests assume a specific state of the database (e.g., "there must be exactly 5 users" or "a product with ID 123 must exist"), they will fail the moment that assumption is false. This often happens with tests run against a shared development or staging database that is constantly changing.

Why it happens: Tests make implicit assumptions about the environment's data state.

The Fix:

- Explicitly Manage All Data: A test should never assume anything about the world. It should create all the data it needs to run.

- Use Factories and Fixtures: Libraries like factory_bot (Ruby) or similar patterns in other languages help you easily generate the precise data needed for each test.

- Avoid Hard-Coded IDs: Never rely on a specific record ID existing. Create the record and use its generated ID in your test assertions.
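A factory does not need a library to be useful. Here is a small hand-rolled sketch in Python; create_product and the products table are hypothetical names chosen for the example.

```python
import itertools
import sqlite3

_sequence = itertools.count(1)


def create_product(conn: sqlite3.Connection, **overrides) -> int:
    """Insert a product with sensible defaults and return its generated ID."""
    n = next(_sequence)
    fields = {"name": f"Product {n}", "price_cents": 1000}
    fields.update(overrides)
    cursor = conn.execute(
        "INSERT INTO products (name, price_cents) VALUES (:name, :price_cents)", fields
    )
    return cursor.lastrowid


# Hypothetical schema for the example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price_cents INTEGER)")

# The test creates what it needs and uses the ID it got back, not a hard-coded 123.
product_id = create_product(conn, price_cents=2500)
row = conn.execute(
    "SELECT name, price_cents FROM products WHERE id = ?", (product_id,)
).fetchone()
```

Each test states only the fields it cares about (here, the price) and lets the factory fill in the rest.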

5. Concurrency and Parallel Test Execution

Running tests in parallel is essential for speed. However, if your tests aren't designed for it, they will stomp on each other. Two tests running at the same time might try to access the same file, use the same port on a local server, or modify the same database record.

Why it happens: Tests are executed simultaneously but were written with the assumption they would run alone.

The Fix:

- Design for Parallelism from the Start: Assume tests will run in parallel.

- Isolate Resources: Ensure each parallel test runner has its own isolated environment: a unique database schema, a unique port for local servers, etc.

- Use Thread-Safe Operations: Be mindful of any shared in-memory state.
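One way to picture resource isolation is to give every parallel worker its own scratch directory and database file. This is a minimal sketch using only the standard library; the worker IDs "w0" and "w1" and the jobs table are placeholders, since the way a runner identifies its workers varies from tool to tool.

```python
import shutil
import sqlite3
import tempfile
from pathlib import Path


def worker_workspace(worker_id: str) -> Path:
    """Create a private scratch directory for one parallel worker."""
    return Path(tempfile.mkdtemp(prefix=f"tests-{worker_id}-"))


def worker_database(workspace: Path) -> sqlite3.Connection:
    """Open a database file that lives inside the worker's own directory."""
    conn = sqlite3.connect(workspace / "test.db")
    conn.execute("CREATE TABLE IF NOT EXISTS jobs (name TEXT)")
    conn.commit()
    return conn


workspace_a = worker_workspace("w0")
workspace_b = worker_workspace("w1")
db_a = worker_database(workspace_a)
db_b = worker_database(workspace_b)

# A write made by one worker is invisible to the other.
db_a.execute("INSERT INTO jobs VALUES ('written by worker 0')")
db_a.commit()
rows_seen_by_b = db_b.execute("SELECT COUNT(*) FROM jobs").fetchone()[0]

# Teardown: close connections and remove each worker's directory.
for conn, workspace in ((db_a, workspace_a), (db_b, workspace_b)):
    conn.close()
    shutil.rmtree(workspace)
```

The same pattern extends to ports, cache prefixes, or database schemas: derive the resource name from the worker's identity so two workers can never pick the same one.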

6. Reliance on System Time

"Does this test fail after 5 PM?" It sounds silly, but it happens. Tests that use the real system time (new Date(), DateTime.Now) can behave differently depending on when they are run. A test checking if a "daily report" was generated might pass when run once at 11:59 PM and then fail when run again two minutes later at 12:01 AM.

Why it happens: The system clock is an external, changing input.

The Fix:

- Mock the Time: Use libraries that allow you to "freeze" or "travel" in time. Libraries like timecop (Ruby), freezegun (Python), or Mockito's mockStatic for java.time (Java) let you set a specific time for your test, making it completely deterministic.
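If pulling in a time-freezing library isn't an option, a related technique is to pass the current time into the code instead of letting it read the system clock. The sketch below shows that alternative in plain Python; report_is_current is a made-up rule used only to illustrate the midnight problem described above.

```python
from datetime import date, datetime


def report_is_current(report_date: date, now: datetime) -> bool:
    """Hypothetical rule under test: a daily report is current if it is dated today."""
    return report_date == now.date()


# The test chooses the clock, so the result no longer depends on when CI happens to run.
report_date = date(2024, 3, 14)
before_midnight = datetime(2024, 3, 14, 23, 59)
after_midnight = datetime(2024, 3, 15, 0, 1)

current_at_2359 = report_is_current(report_date, before_midnight)
current_at_0001 = report_is_current(report_date, after_midnight)
```

Both sides of midnight become ordinary, repeatable test cases instead of a failure that only shows up on late-night builds.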

7. Non-Deterministic Code in Tests

This one is subtle. If the code under test is non-deterministic (e.g., it uses a random number generator or shuffles a list), your test can't make a consistent assertion on its output.

Why it happens: The application logic itself has randomness.

The Fix:

- Seed Random Number Generators: Most random number generators can be seeded with a fixed value. This makes the sequence of "random" numbers identical every time, making the test deterministic.

- Test Behavior, Not Implementation: Instead of asserting on the exact output of a shuffle() function (which is, by definition, random), assert on the behavior. For example, assert that the output list contains exactly the same elements as the input list, whatever their order. Don't assert that the order changed, because a fair shuffle will occasionally return the original order, and that assertion would itself be flaky. Or, mock the shuffle function to return a fixed order during the test.
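Both fixes can be sketched with Python's random module. The helper shuffled and the seed value 1234 are arbitrary choices for the example; the key is that the generator is passed in, so the test controls it.

```python
import random


def shuffled(items, rng: random.Random):
    """Return a shuffled copy of `items` using the supplied generator."""
    result = list(items)
    rng.shuffle(result)
    return result


deck = ["a", "b", "c", "d", "e"]

# Same seed, same sequence: the "random" result is reproducible run after run.
first = shuffled(deck, random.Random(1234))
second = shuffled(deck, random.Random(1234))

# Behavioral check that holds for any seed: nothing was lost or duplicated.
same_elements = sorted(first) == sorted(deck)
```

The seeded comparison pins down reproducibility, while the sorted comparison checks the property that actually matters without caring which order came out.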

8. Brittle UI Selectors

This is the classic front-end test flakiness. Your test finds an element on the page using a CSS selector like #main > div > div > div:nth-child(3) > button. A developer then slightly adjusts the HTML structure, adding a new div for styling, and boom, your selector is broken, even though the functionality is perfectly fine.

Why it happens: Selectors are too tightly coupled to the DOM structure, which is volatile.

The Fix:

- Use Robust Locators: Prioritize selectors that are less likely to change.

- Best: Use a dedicated data-testid attribute (e.g., <button data-testid="sign-up-button">). This decouples testing from styling and structure.

- Good: Use IDs (#submit-button), but only if they are stable and not used for CSS.

- Okay: Use ARIA roles or text content, but be wary of internationalization and text changes.

- Avoid: Complex, nested CSS/XPath paths based on structure.
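To see why a data-testid lookup survives markup changes, here is a toy sketch that uses Python's built-in HTML parser in place of a real browser driver. ElementFinder and find_by_test_id are names invented for this illustration; a real UI suite would use its driver's own attribute selector instead.

```python
from html.parser import HTMLParser


class ElementFinder(HTMLParser):
    """Collect the tag name of every element carrying a given data-testid."""

    def __init__(self, test_id: str):
        super().__init__()
        self.test_id = test_id
        self.matches = []

    def handle_starttag(self, tag, attrs):
        if dict(attrs).get("data-testid") == self.test_id:
            self.matches.append(tag)


def find_by_test_id(html: str, test_id: str):
    finder = ElementFinder(test_id)
    finder.feed(html)
    return finder.matches


before = '<div id="main"><button data-testid="sign-up-button">Sign up</button></div>'
# The same page after a developer wraps the button in an extra div for styling.
after = (
    '<div id="main"><div class="row">'
    '<button data-testid="sign-up-button">Sign up</button>'
    "</div></div>"
)

found_before = find_by_test_id(before, "sign-up-button")
found_after = find_by_test_id(after, "sign-up-button")
```

The lookup finds the button in both versions of the page, whereas a structural path such as "first child of #main" would have broken the moment the wrapper div appeared.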

9. Resource Leaks and Cleanup Failures

Tests that don't properly close resources can cause subsequent tests to fail in weird ways. This could be leaving open database connections, not closing browser instances, or not deleting temporary files. Eventually, the system runs out of resources, causing timeouts or crashes.

Why it happens: The test code doesn't have proper teardown/cleanup logic.

The Fix:

- Use beforeEach/afterEach Hooks: Structure your tests to always clean up in a dedicated teardown phase, even if the test fails. Most test frameworks provide hooks for this.

- Employ the Right Patterns: Use patterns like the using statement (C#) or try-with-resources (Java) to ensure resources are automatically closed.
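Python's counterpart to those patterns is the with statement. The sketch below wraps a temporary file in a context manager so that cleanup runs even when the test body fails; scratch_file and failing_test are hypothetical names for the example.

```python
import os
import tempfile
from contextlib import contextmanager


@contextmanager
def scratch_file(contents: str):
    """Yield the path of a temporary file and delete it afterwards, even on failure."""
    fd, path = tempfile.mkstemp(suffix=".txt")
    try:
        with os.fdopen(fd, "w") as handle:
            handle.write(contents)
        yield path
    finally:
        if os.path.exists(path):
            os.remove(path)


seen_paths = []


def failing_test():
    """Hypothetical test that fails halfway through while holding a resource."""
    with scratch_file("fixture data") as path:
        seen_paths.append(path)
        raise AssertionError("simulated test failure")


try:
    failing_test()
except AssertionError:
    pass

file_survived = os.path.exists(seen_paths[0])
```

Because the cleanup lives in a finally block, the file is gone whether the test passes, fails, or raises something unexpected.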

10. Environment Inconsistencies

"The test works on my machine!" This classic cry is often caused by environment flakiness. Differences in operating systems, browser versions, Node.js versions, installed libraries, or environment variables between a developer's local machine and the CI server can cause tests to fail unpredictably.

Why it happens: The test environment is not reproducible.

The Fix:

- Containerize Everything: Use Docker to define your test environment. A Dockerfile ensures that every test run, local and CI, happens in an identical, controlled environment.

- Version-Pin Everything: Use package-lock.json, Gemfile.lock, Pipfile.lock, etc., to lock down the exact versions of all your dependencies.

- Manage Configuration Securely: Use a consistent and secure method for handling environment variables and secrets needed for testing.

How to Detect Flaky Tests in Your Pipeline

Catching flaky tests early is key. Here are strategies:

- Rerun tests automatically → If a test fails and then passes on a rerun with no code change, mark it as flaky.

- Track failure patterns → CI/CD logs often reveal recurring flaky tests.

- Isolate flaky tests → Tag them and run separately until fixed.

- Use monitoring tools → CI platforms like Jenkins, CircleCI, and GitHub Actions can surface test flakiness, in some cases through plugins or add-ons rather than out of the box.
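The rerun strategy boils down to a simple rule: run the same test several times against unchanged code and look for mixed results. Here is a minimal sketch of that rule; classify and the three sample tests are invented for the illustration, and the flaky one fails on every third call purely so the example is repeatable.

```python
from typing import Callable


def classify(test: Callable[[], None], runs: int = 10) -> str:
    """Run `test` repeatedly on unchanged code and label it by its outcomes."""
    failures = 0
    for _ in range(runs):
        try:
            test()
        except AssertionError:
            failures += 1
    if failures == 0:
        return "stable-pass"
    if failures == runs:
        return "consistent-fail"
    return "flaky"


# Hypothetical tests. The flaky one fails on every third call, standing in for a timing issue.
calls = {"count": 0}


def sometimes_fails():
    calls["count"] += 1
    if calls["count"] % 3 == 0:
        raise AssertionError("intermittent failure")


def always_passes():
    pass


def always_fails():
    raise AssertionError("genuine bug")
```

Separating "flaky" from "consistent-fail" matters: the first goes to quarantine, while the second is a real bug that should block the build.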

Reducing Flaky Tests with Apidog

Since many flaky tests are related to APIs and external dependencies, Apidog helps you:

- Create mock servers so you don’t rely on unstable real APIs.

- Automate test scenarios with predictable results.

- Run performance tests to see how APIs behave under stress.

- Centralize all your API tests so you can detect flaky behavior early.

Instead of debugging random failures at 2 AM, you’ll know exactly whether it’s your code or a flaky external dependency.

Best Practices for Avoiding Flaky Tests

Here’s a quick checklist to reduce test flakiness:

- Write deterministic tests with predictable results.

- Use mocks/stubs for APIs and networks.

- Avoid hardcoded delays; use event-driven waits.

- Reset test environments between runs.

- Monitor tests over time to spot flaky patterns.

- Document known flaky tests so the team is aware.

Building a Culture Against Flakiness

Fixing individual tests is one thing; preventing them is another. It requires a team culture that values test reliability.

- Don't Tolerate Flakiness: If a test is flaky, quarantine it immediately. Move it to a separate, non-blocking suite so it doesn't block deployments, but schedule time to fix it ASAP.

- Track Flaky Tests: Keep a visible list of known flaky tests and prioritize fixing them.

- Review Tests in Code Reviews: Treat test code with the same seriousness as production code. Look for the anti-patterns we've discussed during reviews.

Conclusion: From Flaky to Robust

Flaky tests are one of the most frustrating problems in software development, but they are a solvable one. They waste time, create mistrust, and slow down releases. By understanding these top 10 causes, from async waits and test isolation to external mocks and brittle selectors, you gain the power not only to fix them systematically but also to write more robust, reliable tests from the beginning.

Remember, a test suite is a critical early warning system for your application's health. Its value is directly proportional to the trust the development team has in it. By ruthlessly eliminating flakiness, you rebuild that trust and create a faster, more confident development workflow. The best strategy? Design deterministic, isolated, and well-structured tests.

And for those particularly tricky API-related flakes, remember that a tool like Apidog can be your strongest ally. Its mocking and testing capabilities are designed to create the stable, predictable environment your tests need to thrive. Now go forth and make your test suite unbreakable.