In the rapidly evolving world of AI-powered coding, speed is everything. Cursor, a popular AI coding IDE, promises to boost productivity with code generation, context awareness, and agentic tools. But lately, a growing chorus of users is asking: Why is Cursor so slow?

If you’re frustrated by laggy responses, slow code application, or a sluggish UI, you’re not alone. This article delves into real user concerns, explores the root causes of Cursor’s performance issues, and—most importantly—shows you how to fix them. We’ll also introduce a powerful tool that can be integrated into your Cursor workflow to help you code faster and smarter.

Why Is Cursor Slow? Real User Experiences and Concerns

Cursor’s promise is simple: pair-program with AI, edit code, and use agents with codebase-wide understanding. But for many, the reality has been a frustrating slowdown.

What are users experiencing?

- Laggy AI responses: Even on paid plans, users report 20–60 second delays for simple code generation.

- Slow UI: Typing, clicking, and even quitting the app can become painfully slow.

- High resource usage: Some users see their GPU spike to 90% during code application, with minimal VRAM or CUDA involvement.

- Crashes and freezes: Cursor sometimes hangs, restarts, or becomes unresponsive, especially with large codebases or long chat histories.

- Agent delays: AI agents hang mid-task or take 10+ minutes for simple actions.

- Memory leaks: Error logs show thousands of event listeners, suggesting possible memory leaks.

User Quotes:

“Cursor is extremely slow for you since the 0.49 update?” (See original source)

“I’m on the Pro plan, but it still takes 20–30 seconds just to generate a simple snippet.” (See original source)

“My laptop is not the best so that might be an issue, but it is a bigger problem now than it was before.” (See original source)

“Cursor AI runs extremely slow and hangs after a certain amount of usage. There is no problem when starting a project from scratch, but as it progresses, it uses a lot of memory.” (See original source)

Common triggers:

- Large codebases

- Long chat histories

- Recent updates (especially v0.49+)

- Extensions or plugins

- High system resource usage

What Causes Cursor to Be Slow?

Several factors can contribute to Cursor’s slow performance:

1. Large Codebases and Context Windows

- Cursor’s AI thrives on context, but loading thousands of files or a massive codebase can overwhelm the system.

- The more files and history loaded, the slower the response — especially when the context window is full.

2. Long Chat Histories

- Referencing past chats or keeping long chat windows open can cause significant lag.

- Possible Solution: Some users report that starting a new chat or project directory instantly restores speed.

3. Extensions and Plugins

- Extensions can consume resources or conflict with Cursor’s core processes.

- Possible Solution: Disabling all extensions often resolves performance issues.

4. Memory Leaks and Resource Management

- Error logs show thousands of event listeners, indicating possible memory leaks.

- High GPU usage with minimal VRAM/CUDA involvement suggests inefficient resource allocation.

5. Recent Updates and Bugs

- Many users noticed slowdowns after updating to v0.49 or later.

- Sometimes, rolling back to an earlier version or waiting for a patch helps.

6. System Limitations

- Older hardware, limited RAM, or running multiple heavy apps can exacerbate Cursor’s slowness.

Table: Common Causes of Cursor Slowness

| Cause | Symptom | Solution |
|---|---|---|
| Large codebase | Slow load, laggy AI | Limit files, split projects |
| Long chat history | UI lag, slow responses | Start new chat/project |
| Extensions | Crashes, high resource usage | Disable all extensions |
| Memory leaks | Increasing lag, errors | Restart app, report bug |
| Recent updates | New lag after update | Roll back, check for patches |
| System limitations | General slowness | Close other apps, upgrade RAM |

How to Fix a Slow Cursor: Actionable Solutions

If you’re struggling with a slow Cursor experience, try these solutions—many are recommended by both users and Cursor’s official troubleshooting guides.

1. Start Fresh: New Chat or Project

- Open a new chat window and avoid referencing old chats.

- Move your project to a new directory to clear context.

- If possible, split large codebases into smaller projects.
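Before splitting a project, it can help to see where the files actually are. The following is a minimal sketch, not a Cursor feature: `count_files_by_top_level` and the `SKIP_DIRS` set are hypothetical names chosen for illustration, and the list of skipped directories is an assumption you should adjust for your own project.

```python
import os
from collections import Counter

# Directories that usually hold generated or vendored files.
# This list is an assumption; adjust it for your project.
SKIP_DIRS = {".git", "node_modules", "dist", "build", "__pycache__", ".venv"}


def count_files_by_top_level(root: str) -> Counter:
    """Count files under each top-level entry of root, skipping SKIP_DIRS."""
    counts: Counter = Counter()
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune skipped directories in place so os.walk does not descend into them.
        dirnames[:] = [d for d in dirnames if d not in SKIP_DIRS]
        rel = os.path.relpath(dirpath, root)
        top = "." if rel == "." else rel.split(os.sep)[0]
        counts[top] += len(filenames)
    return counts
```

Calling `count_files_by_top_level(".").most_common(10)` from the project root lists the ten largest top-level directories by file count, which are the natural candidates for moving into a separate project.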

2. Disable Extensions

- Launch Cursor with `cursor --disable-extensions` from the command line.

- If performance improves, re-enable extensions one by one to find the culprit.

3. Clear Cache and Reinstall

- Sometimes, clearing Cursor’s cache or reinstalling the app resolves persistent lag.

- Note: There’s currently no “clear chat history” button—reinstalling is the only way to fully reset.

4. Monitor System Resources

- Use Task Manager (Windows) or Activity Monitor (Mac) to check CPU, GPU, and RAM usage.

- Close other heavy applications to free up resources.
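If you prefer a number you can track over time, a small script can total the memory used by Cursor's processes. This is a rough sketch for macOS and Linux only, assuming the standard `ps -A -o pid,rss,comm` output (resident memory in kilobytes). The function names are hypothetical, and matching on the substring "cursor" is a simplification that may also catch unrelated processes with that word in their name.

```python
import subprocess


def cursor_memory_mb(ps_output: str, needle: str = "cursor") -> float:
    """Sum resident memory (MB) of processes whose command contains needle.

    Expects lines of "<pid> <rss_kb> <command...>" as printed by
    `ps -A -o pid,rss,comm`; the header line is skipped automatically.
    """
    total_kb = 0
    for line in ps_output.splitlines():
        parts = line.split(None, 2)
        if len(parts) < 3 or not parts[1].isdigit():
            continue  # header, blank, or malformed line
        if needle.lower() in parts[2].lower():
            total_kb += int(parts[1])
    return total_kb / 1024


def read_ps_output() -> str:
    """Run ps and return its text output (macOS/Linux only)."""
    result = subprocess.run(
        ["ps", "-A", "-o", "pid,rss,comm"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

Running `cursor_memory_mb(read_ps_output())` every few minutes during a long session shows whether memory keeps climbing, which is the pattern users describe above. On Windows, Task Manager remains the simpler option.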

5. Update or Roll Back

- Check for the latest Cursor updates—performance bugs are often fixed quickly.

- If a new update causes issues, consider rolling back to a previous version.

6. Check for Memory Leaks

- Review error logs for “potential listener LEAK detected.”

- Report persistent leaks to Cursor’s support team for investigation.
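Searching the logs by hand gets tedious, so here is a minimal sketch that counts the warning across a folder of log files. The log folder location is not specified in this article, so the script takes it as an argument. `count_leak_warnings` is a hypothetical helper, and the assumption that logs end in `.log` may not hold for every installation.

```python
from pathlib import Path

# The warning text quoted in this article, matched case-insensitively.
LEAK_MARKER = "potential listener leak detected"


def count_leak_warnings(log_dir: str) -> dict[str, int]:
    """Return {log file path: number of lines containing the leak warning}."""
    hits: dict[str, int] = {}
    for path in sorted(Path(log_dir).rglob("*.log")):
        try:
            text = path.read_text(encoding="utf-8", errors="replace")
        except OSError:
            continue  # unreadable file; skip it
        n = sum(1 for line in text.splitlines() if LEAK_MARKER in line.lower())
        if n:
            hits[str(path)] = n
    return hits
```

A non-empty result gives you concrete file names and counts to attach to a bug report.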

7. Optimize Chat and Context

- Avoid loading unnecessary files or keeping too many tabs open.

- Export important chat history and start fresh when needed.

While these issues can be daunting, there's a proactive step you can take to optimize a crucial part of your AI-assisted development, especially when working with APIs. This is where the Apidog MCP Server comes into play. Instead of Cursor potentially struggling to parse or access API specifications scattered across various formats or complex online documentation, the free Apidog MCP Server provides a streamlined, cached, and AI-friendly data source directly from your Apidog projects or OpenAPI files. This can significantly reduce the load on Cursor when it needs to understand and generate code based on API contracts, leading to a faster, more reliable Cursor coding workflow.

Supercharge Your Cursor Workflow with Apidog MCP Server (Free)

When your Cursor is slow, particularly during tasks involving API specifications, the root cause might be how Cursor accesses and processes this API data. Traditional API documentation, while human-readable, can be challenging for AI tools to parse efficiently. Apidog MCP Server offers a free and powerful way to enhance your Cursor coding workflow.

What is Apidog MCP Server?

Apidog is an all-in-one API development platform, and its Apidog MCP Server is a brilliant extension of its capabilities, designed specifically for AI-powered IDEs like Cursor. It allows your AI assistant to directly and efficiently access API specifications from your Apidog projects or local/online OpenAPI/Swagger files. This integration is not just a minor tweak; it's a fundamental improvement in how AI interacts with your API designs. Here are the main benefits of Apidog MCP Server:

- Faster, more reliable code generation by letting the AI access your API specs directly

- Local caching for speed and privacy—no more waiting for remote lookups

- Seamless integration with Cursor, VS Code, and other IDEs

- Support for multiple data sources: Apidog projects, public API docs, Swagger/OpenAPI files

How Does It Help with Cursor's Slowness?

- Reduces context overload: By letting the AI fetch only relevant API data, you avoid loading massive codebases into Cursor’s context window.

- Minimizes lag: Local caching means less waiting for remote responses.

- Streamlines workflow: Generate, update, and document code faster—without hitting Cursor’s performance bottlenecks.

How to Integrate Apidog MCP Server with Cursor

Integrating the Apidog MCP Server with Cursor allows your AI assistant to tap directly into your API specifications. Here’s how to set it up:

Prerequisites:

Before you begin, ensure the following:

✅ Node.js is installed (version 18+; latest LTS recommended)

✅ You're using an IDE that supports MCP, such as Cursor

Step 1: Prepare Your OpenAPI File

You'll need access to your API definition:

- A URL (e.g., `https://petstore.swagger.io/v2/swagger.json`)

- Or a local file path (e.g., `~/projects/api-docs/openapi.yaml`)

- Supported formats: `.json` or `.yaml` (OpenAPI 3.x recommended)

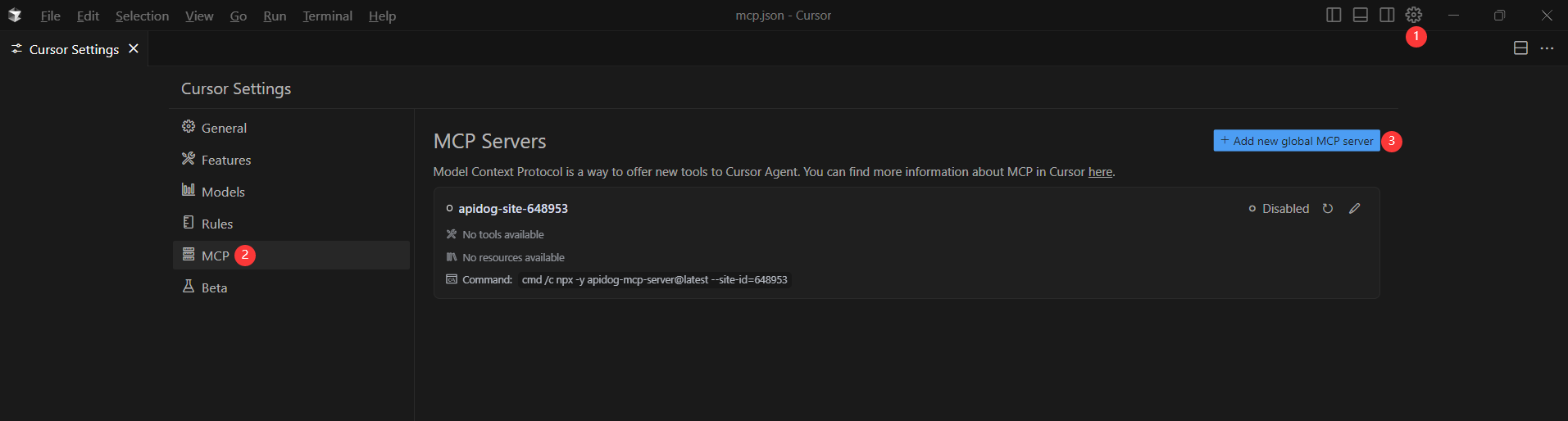

Step 2: Add MCP Configuration to Cursor

You'll now add the configuration to Cursor's mcp.json file.

Remember to replace the example Petstore URL in the `--oas` argument with your actual OpenAPI URL or local path.
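The macOS/Linux and Windows entries in this step differ only in how `npx` is launched, so the entry can also be generated rather than typed by hand. The sketch below is one way to do that. `build_mcp_config` is a hypothetical helper, and it only prints JSON for you to paste into `mcp.json` instead of writing the file itself.

```python
import json
import platform


def build_mcp_config(oas: str, system: str = "") -> dict:
    """Build the mcpServers entry from this step for the given OS.

    oas is an OpenAPI URL or local path; system defaults to platform.system().
    """
    system = system or platform.system()
    npx_args = ["-y", "apidog-mcp-server@latest", f"--oas={oas}"]
    if system == "Windows":
        # On Windows, npx is launched through cmd /c.
        command, args = "cmd", ["/c", "npx", *npx_args]
    else:
        command, args = "npx", npx_args
    return {"mcpServers": {"API specification": {"command": command, "args": args}}}


if __name__ == "__main__":
    config = build_mcp_config("https://petstore.swagger.io/v2/swagger.json")
    print(json.dumps(config, indent=2))
```

Generating the entry this way avoids quoting and comma mistakes when you switch between several OpenAPI files.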

- For macOS/Linux:

      {
        "mcpServers": {
          "API specification": {
            "command": "npx",
            "args": [
              "-y",
              "apidog-mcp-server@latest",
              "--oas=https://petstore.swagger.io/v2/swagger.json"
            ]
          }
        }
      }

- For Windows:

      {
        "mcpServers": {
          "API specification": {
            "command": "cmd",
            "args": [
              "/c",
              "npx",
              "-y",
              "apidog-mcp-server@latest",
              "--oas=https://petstore.swagger.io/v2/swagger.json"
            ]
          }
        }
      }

Step 3: Verify the Connection

After saving the config, test it in the IDE by typing the following command in Agent mode:

    Please fetch API documentation via MCP and tell me how many endpoints exist in the project.

If it works, you’ll see a structured response that lists endpoints and their details. If it doesn’t, double-check the path to your OpenAPI file and ensure Node.js is installed properly.
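To sanity-check the number the agent reports, you can count the endpoints in the spec yourself. This is a minimal sketch that assumes a JSON spec (a YAML file would need a YAML parser, which is not in Python's standard library) and treats each path and HTTP method pair as one endpoint. `count_endpoints` and `count_endpoints_in_file` are hypothetical helper names.

```python
import json

# HTTP methods that can appear as keys of an OpenAPI path item.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def count_endpoints(spec: dict) -> int:
    """Count (path, method) pairs in a parsed OpenAPI/Swagger document."""
    total = 0
    for path_item in spec.get("paths", {}).values():
        if isinstance(path_item, dict):
            # Keys such as "parameters" or "summary" are not operations.
            total += sum(1 for key in path_item if key.lower() in HTTP_METHODS)
    return total


def count_endpoints_in_file(path: str) -> int:
    """Load a JSON OpenAPI file from disk and count its endpoints."""
    with open(path, encoding="utf-8") as f:
        return count_endpoints(json.load(f))
```

If the agent's answer differs from this count, the MCP server may be reading a different file than you expect, or the agent may be counting paths rather than operations.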

Conclusion

Navigating the complexities of AI-assisted coding in Cursor requires both understanding its potential pitfalls and leveraging tools that enhance its capabilities. While issues like slow performance, resource hogging, and context limitations can be frustrating, many can be mitigated through proactive troubleshooting, such as starting fresh projects, managing extensions, and monitoring system resources.

Furthermore, integrating innovative solutions like the Apidog MCP Server can significantly streamline your workflow, especially when dealing with APIs. By providing Cursor with direct, optimized access to API specifications, you reduce its processing load and unlock faster, more reliable code generation.