Cursor has recently announced support for Anthropic's new Claude 4.0 models, including the highly anticipated Claude 4.0 Sonnet. This development has generated considerable excitement among developers looking to leverage its advanced coding capabilities. However, the rollout hasn't been without its hitches. Many Cursor users are encountering a frustrating roadblock: the "model claude-4-sonnet does not work with your current plan or api key" error, or similar messages indicating that Claude 4.0 Sonnet is not available.

This article will delve into the common issues preventing access to Claude 4.0 Sonnet in Cursor and provide community-tested solutions to get you up and running.

As a BONUS, we'll introduce a game-changing tool, the Apidog MCP Server, and guide you through integrating it with Cursor to seamlessly connect with your API specifications, thereby significantly enhancing your API development and debugging workflow.

Understanding the "Claude 4.0 Sonnet Not Available" Error in Cursor

The introduction of Claude 4.0 Sonnet into Cursor promised enhanced coding assistance, but for many, the initial experience has been marred by accessibility issues. Users frequently report errors such as:

- "The model claude-4-sonnet does not work with your current plan or api key"

- "We encountered an issue when using your API key: Provider was unable to process your request"

- "The model claude-4-sonnet-thinking does not work with your current plan or api key"

- A general inability to use Claude 4.0 Sonnet, even if other models work.

Based on Cursor's official documentation and user reports on the Cursor forums, the main causes are:

- Paid Plan Requirement: Claude 4 models are exclusively available to paid Cursor users. Free tier users cannot access them.

- Capacity Limitations: Due to high demand, Anthropic has temporarily restricted access to these models.

- Usage-Based Pricing: Pro plan users may need to enable usage-based pricing to access Claude 4 Sonnet.
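The three causes above can be read as a small decision table. The sketch below is only an illustration of that reasoning: the `Account` class and its field names are hypothetical and do not correspond to any Cursor API.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical summary of a Cursor account; field names are illustrative."""
    paid_plan: bool
    usage_based_pricing: bool


def likely_causes(account: Account) -> list[str]:
    """Return the possible causes listed in the article, most specific first."""
    causes = []
    if not account.paid_plan:
        causes.append("Free tier: Claude 4 models require a paid Cursor plan.")
    elif not account.usage_based_pricing:
        causes.append("Pro plan without usage-based pricing enabled.")
    # Capacity limits can affect any account, so they always stay on the list.
    causes.append("Temporary capacity limits due to high demand.")
    return causes
```

Calling `likely_causes(Account(paid_plan=True, usage_based_pricing=False))` would point at the usage-based pricing setting first, with capacity limits as the fallback explanation.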

5 Proven Fixes for the "The model claude-4-sonnet does not work with your current plan or api key" Error in Cursor

If you're grappling with the "Claude 4.0 Sonnet is not available" message or similar errors in Cursor, try these troubleshooting steps:

1. Restart Cursor: This is the simplest yet often effective solution. A full restart of the Cursor application can refresh its connection and model availability.

2. Start a New Chat: If the issue persists in existing chats even after restarting Cursor, start an entirely new chat session. This is sometimes enough to enable access to Claude 4.0 Sonnet.

3. Verify Your Plan and Enable Usage-Based Pricing: Access to Claude 4.0 Sonnet is often tied to paid plans.

- Ensure you are on a Pro plan or a similar tier that explicitly includes access to newer Anthropic models.

- Some users on Pro plans reported needing to enable usage-based pricing to get Claude 4.0 Sonnet working, even if they had available fast requests. This seems to be a common workaround.

4. Free Users with Own API Keys: If you're a free Cursor user attempting to use your personal Anthropic API key, you might still face limitations. Anthropic itself may restrict access to its newest models like Claude 4.0 Sonnet via API keys that are not associated with a paid Anthropic plan or sufficient credits. The message "Anthropic’s latest models are currently only available to paid users" often appears in this context.

5. Patience During High Demand: For models like Claude 4.0 Opus, and potentially Sonnet during peak times, Cursor has indicated high demand. They suggest switching to 'auto-select' or trying again later. While this is more for Opus, it's a factor to consider for any newly launched, popular model.
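For solution 4, one way to tell whether the restriction comes from Anthropic rather than from Cursor is to probe your key against Anthropic's Messages API directly. The following is a minimal sketch using only the Python standard library. The model ID `claude-sonnet-4-20250514` is an assumption and should be checked against Anthropic's current model list, and the function names are illustrative.

```python
import json
import os
import urllib.error
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
# Assumed model ID; confirm the current identifier in Anthropic's model list.
MODEL_ID = "claude-sonnet-4-20250514"


def build_probe(api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build a minimal Messages API request that asks for a single token."""
    body = {
        "model": model,
        "max_tokens": 1,
        "messages": [{"role": "user", "content": "ping"}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def key_can_use_model(api_key: str, model: str = MODEL_ID) -> bool:
    """Return True if the probe succeeds; print the API's HTTP error otherwise."""
    try:
        with urllib.request.urlopen(build_probe(api_key, model), timeout=30) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        print(f"HTTP {err.code}: {err.read().decode('utf-8', 'replace')}")
        return False


# Example (sends a real request and may incur a tiny charge):
# key_can_use_model(os.environ["ANTHROPIC_API_KEY"])
```

If the probe fails with an authentication, permission, or billing error, the limitation is likely on the Anthropic account rather than in Cursor.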

Working through these steps in order should resolve most cases of Claude 4.0 Sonnet not being available in Cursor. Keep in mind that model availability and plan requirements change quickly, so the fixes that apply may change as well.

Enhancing Your Cursor Workflow with Apidog MCP Server

While resolving access to Claude 4.0 Sonnet in Cursor is a significant step, developers, especially those working extensively with APIs, can further elevate their productivity by integrating their API specifications directly into their AI-powered IDE. This is where Apidog MCP Server can help.

What is Apidog MCP Server?

Apidog MCP Server allows your API specification to become a direct data source for AI assistants like the one in Cursor. Imagine your AI not just understanding natural language but also having an intricate, up-to-date knowledge of your project's API contracts. This integration empowers Cursor to:

- Generate or modify code (e.g., DTOs, client libraries, server stubs) accurately based on your API schema.

- Search through API specification content directly within the IDE.

- Understand API endpoints, request/response structures, and data models for more context-aware assistance.

- Add comments to code based on API field descriptions.

- Create MVC code or other structural components related to specific API endpoints.

In essence, the Apidog MCP Server bridges the gap between your API specifications and your AI assistant. This is particularly beneficial when you need to ensure that the code generated or modified by Claude 4.0 Sonnet (or any other model in Cursor) aligns perfectly with your API definitions. It reduces errors, speeds up development cycles, and ensures consistency between your API documentation and its implementation.

Connecting Apidog MCP Server to Cursor for Enhanced API Workflows

Integrating the Apidog MCP Server with Cursor allows your AI assistant to tap directly into your API specifications. Here’s how to set it up:

Prerequisites:

Before you begin, ensure the following:

✅ Node.js is installed (version 18+; latest LTS recommended)

✅ You're using an IDE that supports MCP, such as Cursor
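The Node.js prerequisite can be checked from a script. The sketch below parses the output of `node --version` and compares the major version against 18. The helper names are illustrative, and it assumes `node` prints a version of the form `vMAJOR.MINOR.PATCH`.

```python
import re
import shutil
import subprocess

MIN_NODE_MAJOR = 18  # minimum version stated in the prerequisites


def parse_node_version(output: str) -> tuple[int, int, int]:
    """Parse the output of `node --version`, e.g. 'v20.11.1'."""
    match = re.match(r"v(\d+)\.(\d+)\.(\d+)", output.strip())
    if match is None:
        raise ValueError(f"unexpected version string: {output!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def node_is_recent_enough() -> bool:
    """Return True if `node` is on PATH and its major version is 18 or newer."""
    node = shutil.which("node")
    if node is None:
        return False
    result = subprocess.run(
        [node, "--version"], capture_output=True, text=True, check=True
    )
    return parse_node_version(result.stdout)[0] >= MIN_NODE_MAJOR
```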

Step 1: Prepare Your OpenAPI File

You'll need access to your API definition:

- A URL (e.g., https://petstore.swagger.io/v2/swagger.json)

- Or a local file path (e.g., ~/projects/api-docs/openapi.yaml)

- Supported formats: .json or .yaml (OpenAPI 3.x recommended)

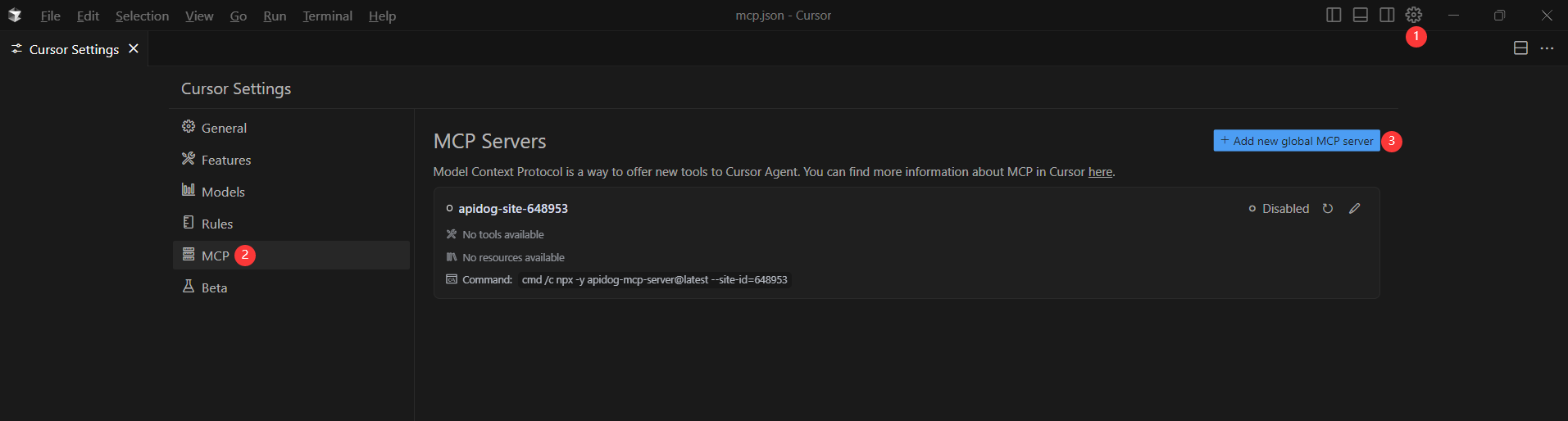
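Before wiring the source into Cursor, it can help to confirm that it is either a reachable-looking URL or an existing local file in one of the supported formats. The sketch below is a rough pre-check under those assumptions. `describe_oas_source` is a hypothetical helper, not part of Apidog, and it does not validate the contents of the specification.

```python
from pathlib import Path
from urllib.parse import urlparse

SUPPORTED_SUFFIXES = {".json", ".yaml"}  # formats listed in this step


def describe_oas_source(source: str) -> str:
    """Classify an OpenAPI source as 'url' or 'file', or raise ValueError."""
    parsed = urlparse(source)
    if parsed.scheme in ("http", "https") and parsed.netloc:
        return "url"
    # Anything else is treated as a local path; expand a leading "~".
    path = Path(source).expanduser()
    if path.suffix.lower() not in SUPPORTED_SUFFIXES:
        raise ValueError(f"unsupported file type: {path.suffix or '(none)'}")
    if not path.is_file():
        raise ValueError(f"file not found: {path}")
    return "file"
```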

Step 2: Add MCP Configuration to Cursor

You'll now add the configuration to Cursor's mcp.json file.

Remember to replace the example value after --oas= (https://petstore.swagger.io/v2/swagger.json) with your actual OpenAPI URL or local file path.
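The macOS/Linux and Windows variants in this step differ only in how `npx` is launched, so the configuration can also be generated rather than edited by hand. The sketch below mirrors the structure used in this article. The function names are illustrative, and writing the result into Cursor's mcp.json is left to you.

```python
import json
import platform


def build_mcp_config(oas_source: str, windows: bool) -> dict:
    """Build the mcpServers entry from this step for the given platform."""
    npx_args = ["-y", "apidog-mcp-server@latest", f"--oas={oas_source}"]
    if windows:
        # On Windows the article launches npx through `cmd /c`.
        command, args = "cmd", ["/c", "npx", *npx_args]
    else:
        command, args = "npx", npx_args
    return {"mcpServers": {"API specification": {"command": command, "args": args}}}


def render_mcp_config(oas_source: str) -> str:
    """Render the config for the current platform as JSON text."""
    is_windows = platform.system() == "Windows"
    return json.dumps(build_mcp_config(oas_source, is_windows), indent=2)
```

Printing `render_mcp_config("~/projects/api-docs/openapi.yaml")` gives JSON text that can be pasted into mcp.json, or merged into it if other servers are already configured.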

- For macOS/Linux:

      {
        "mcpServers": {
          "API specification": {
            "command": "npx",
            "args": [
              "-y",
              "apidog-mcp-server@latest",
              "--oas=https://petstore.swagger.io/v2/swagger.json"
            ]
          }
        }
      }

- For Windows:

      {
        "mcpServers": {
          "API specification": {
            "command": "cmd",
            "args": [
              "/c",
              "npx",
              "-y",
              "apidog-mcp-server@latest",
              "--oas=https://petstore.swagger.io/v2/swagger.json"
            ]
          }
        }
      }

Step 3: Verify the Connection

After saving the config, test it in the IDE by typing the following command in Agent mode:

    Please fetch API documentation via MCP and tell me how many endpoints exist in the project.

If it works, you’ll see a structured response that lists endpoints and their details. If it doesn’t, double-check the path to your OpenAPI file and ensure Node.js is installed properly.
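To cross-check the number the assistant reports, you can count the operations in the specification yourself. The sketch below counts each path and HTTP method pair in a parsed OpenAPI or Swagger document. It assumes a JSON source, and the assistant may count paths rather than operations, so treat the result as a rough comparison.

```python
import json
import urllib.request

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def count_endpoints(spec: dict) -> int:
    """Count path + HTTP method pairs in a parsed OpenAPI/Swagger document."""
    total = 0
    for path_item in spec.get("paths", {}).values():
        # Skip non-operation keys such as "parameters" or "summary".
        total += sum(1 for key in path_item if key.lower() in HTTP_METHODS)
    return total


def load_json_spec(url: str) -> dict:
    """Download and parse a JSON spec; YAML files would need a YAML parser."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)


# Example (performs a network request):
# count_endpoints(load_json_spec("https://petstore.swagger.io/v2/swagger.json"))
```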

Conclusion

Claude 4 Sonnet access issues in Cursor can be frustrating, but most of them trace back to plan, pricing, or capacity restrictions and can be worked around with the steps above. Beyond model access, adding Apidog MCP Server gives the assistant direct access to your API specification, which helps with both immediate workflow problems and longer-term API development.

The combination of Cursor's AI capabilities with Apidog's API specification integration creates a powerful environment that:

- Reduces context switching between tools

- Minimizes manual coding errors

- Accelerates development cycles

- Ensures consistency between API contracts and implementation

For teams working with APIs, this integration is becoming essential rather than optional. As AI-assisted development evolves, tools like Apidog MCP Server that bridge the gap between specifications and code will define the next generation of developer productivity.