Are you looking to automate web data extraction for AI model training or analysis? Integrating AI-powered tools like Cline and Firecrawl MCP inside VS Code enables you to rapidly convert websites into LLM-ready text files—streamlining workflows for API developers, backend engineers, and anyone building with large language models.

This guide walks you through setting up and using Cline with Firecrawl MCP to generate LLMs.txt files, plus how Apidog can further enhance your API-driven workflow.

💡 Want to connect your AI coding workflow with API documentation? Apidog MCP server lets you feed your API specs directly into popular IDEs like Cursor for a seamless coding experience. Import your spec and let your tools handle the rest!

While working in AI-powered IDEs such as Cursor, you can further streamline your API lifecycle with Apidog. This free, integrated platform lets you design, test, mock, and document APIs — all in one place.

What Are Cline and Firecrawl MCP?

Cline: AI Coding Assistant for VS Code

Cline is an AI assistant extension for VS Code that leverages the Model Context Protocol (MCP) to automate complex developer tasks. It supports multiple AI models and APIs, and can create or manage custom tools—including MCP servers—for activities like web scraping and data extraction.

Firecrawl MCP Server: Advanced Web Scraping for LLMs

Firecrawl MCP Server brings robust web scraping to your LLM projects with:

- JavaScript rendering for dynamic pages

- Automatic retries and batch processing

- Structured data extraction optimized for LLM input

It’s especially suited for turning websites into structured, LLM-ready data.


Prerequisites

Before you start, make sure you have:

- VS Code (version 1.60 or higher)

- Node.js (version 14.x or higher)

- Cline Extension (latest version)

- Firecrawl API key

Step-by-Step: Setting Up Cline and Firecrawl MCP in VS Code

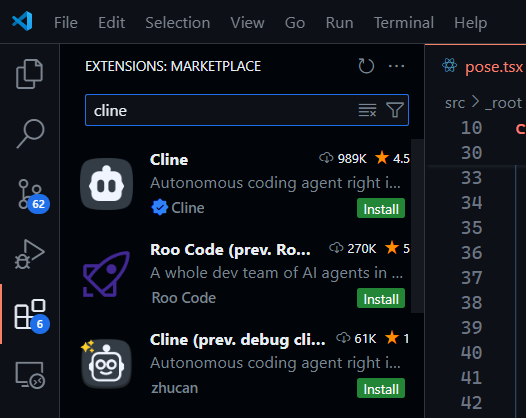

1. Install Cline Extension

- Open the Extensions Marketplace in VS Code.

- Search for "Cline" and install it.

2. Configure Cline

- Interact with Cline via the VS Code terminal or chat interface.

- Use it to automate tasks like file creation or command execution.

3. Enable MCP Capabilities

- Cline can add and manage MCP servers directly.

- Ask Cline to "add a tool" related to Firecrawl MCP; it will handle setup for you.

Setting Up Firecrawl MCP Server in Cline

Cline’s MCP marketplace simplifies adding and configuring servers like Firecrawl MCP—no manual steps required.

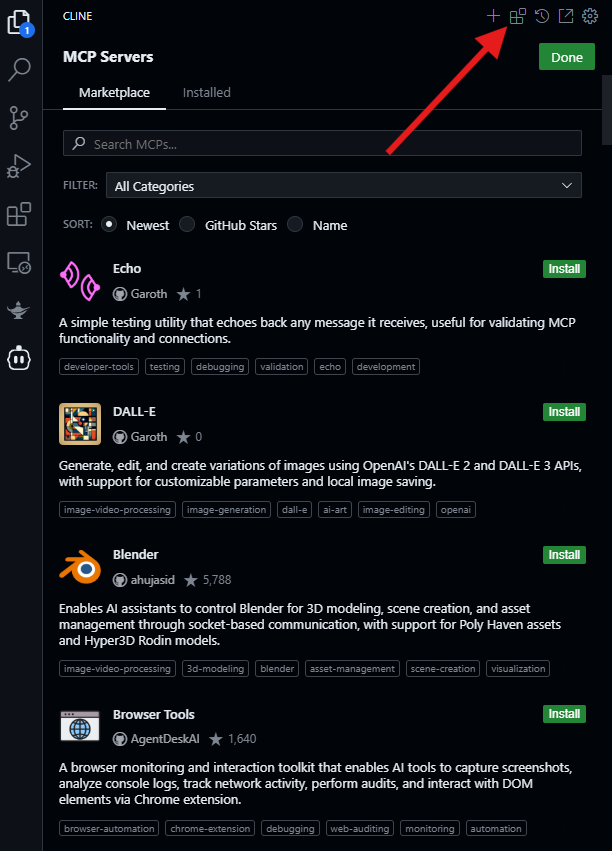

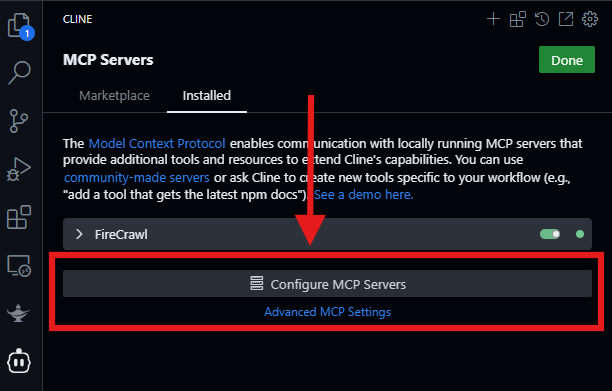

Step 1: Access the MCP Marketplace

- Open Cline in VS Code.

- Navigate to the MCP Servers Marketplace within Cline (similar to browsing VS Code extensions).

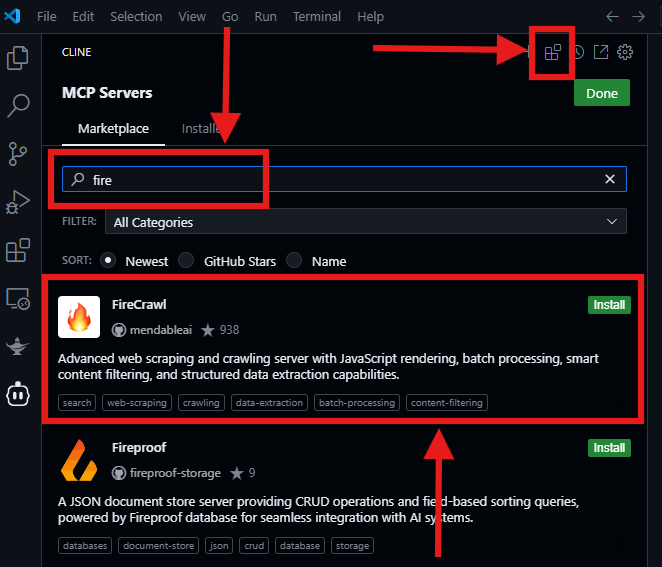

Step 2: Install Firecrawl MCP Server

- Search for "Firecrawl MCP" in the marketplace.

- Click to install it.

- After installation, check the "Installed" section to confirm.

Step 3: Configure Firecrawl MCP Server

- Get Your API Key:

  - Visit the Firecrawl website, sign up, and obtain your API key.

  - Keep the key secure.

- Update Cline Configuration:

  - In Cline, select "Configure MCP Servers."

  - Add your Firecrawl API key to the JSON config as shown below:

    {
      "mcpServers": {
        "github.com/mendableai/firecrawl-mcp-server": {
          "command": "cmd",
          "args": [
            "/c",
            "set FIRECRAWL_API_KEY=fc-YOUR_API_KEY && npx -y firecrawl-mcp"
          ],
          "env": {
            "FIRECRAWL_API_KEY": "fc-YOUR_API_KEY"
          },
          "disabled": false,
          "autoApprove": []
        }
      }
    }

Replace fc-YOUR_API_KEY with your own Firecrawl API key in both places. The key must be a quoted JSON string.

- Verify Installation:

  - Refresh the MCP servers.

  - A green dot indicates successful configuration.
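The configuration above launches the server through cmd, which only exists on Windows. As a rough sketch of how the same entry could be generated per platform, the Python helper below builds one mcpServers entry and prints it as JSON. The function name build_firecrawl_entry is hypothetical, the plain npx form for macOS and Linux is an assumption based on how npx-launched MCP servers are commonly configured, and the "fc-" prefix check simply mirrors the placeholder in the config above. Treat it as a starting point rather than Cline's or Firecrawl's official method.

```python
import json
import platform
from typing import Optional


def build_firecrawl_entry(api_key: str, system: Optional[str] = None) -> dict:
    """Build one mcpServers entry for Firecrawl MCP (illustrative helper)."""
    system = system or platform.system()

    # Assumption: Firecrawl keys start with "fc-", as the placeholder suggests.
    if not api_key.startswith("fc-"):
        raise ValueError("expected an API key starting with 'fc-'")

    if system == "Windows":
        # Windows: run npx through cmd, as in the article's config.
        command, args = "cmd", ["/c", "npx", "-y", "firecrawl-mcp"]
    else:
        # Assumption: on macOS/Linux, npx can be launched directly.
        command, args = "npx", ["-y", "firecrawl-mcp"]

    return {
        "command": command,
        "args": args,
        # The key is passed through the env block only.
        "env": {"FIRECRAWL_API_KEY": api_key},
        "disabled": False,
        "autoApprove": [],
    }


if __name__ == "__main__":
    config = {
        "mcpServers": {
            "github.com/mendableai/firecrawl-mcp-server": build_firecrawl_entry(
                "fc-YOUR_API_KEY"
            )
        }
    }
    # json.dumps guarantees the output is valid JSON (quoted strings, no stray commas).
    print(json.dumps(config, indent=2))
```

Generating the entry with json.dumps avoids the most common hand-editing mistakes, such as an unquoted key value or a trailing comma.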

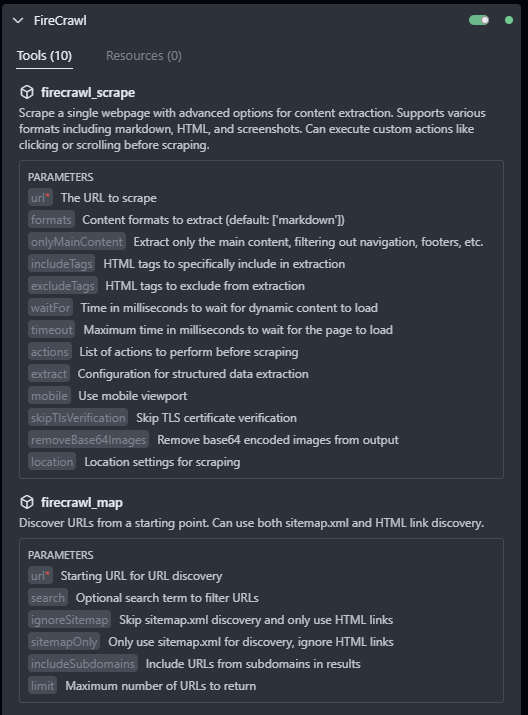

Step 4: Explore Available Firecrawl MCP Tools

- Click the dropdown beside Firecrawl MCP in Cline to see all available tools and their descriptions.

- Other installed MCP servers will be listed below.

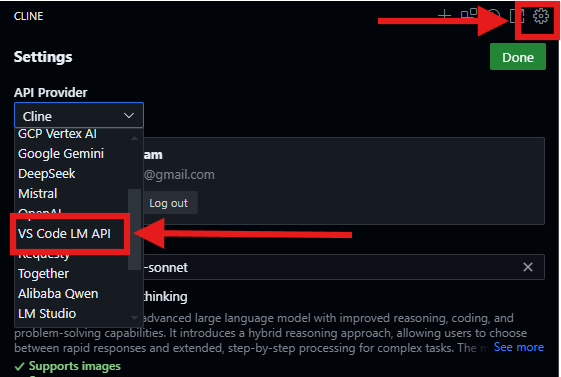

Managing API Providers in Cline

If you run out of free Cline API credits:

- Go to Cline’s settings.

- Change the API provider to "VS Code LM API" for free Claude 3.5 access (note: monthly usage limits apply).

- Ensure you have Copilot installed in VS Code for this feature.

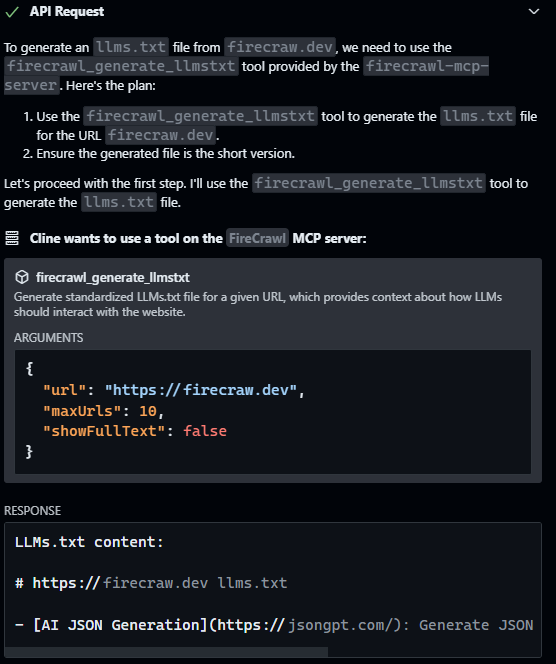

How to Generate LLMs.txt Files with Cline + Firecrawl MCP

- Command Cline to Generate Files:

  - Ask Cline, for example:

    generate an llms.txt from firecrawl.dev --short version

  - Cline will execute the command, triggering Firecrawl MCP Server to scrape the site and generate your LLMs.txt files.

- Monitor Progress:

  - Check Cline’s output or Firecrawl MCP logs for status.

- Retrieve Your Files:

  - Once complete, access your generated llms.txt and (optionally) llms-full.txt files, ready for LLM training or content analysis.
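Once the files exist, a quick sanity check can confirm they contain what you expect. The sketch below assumes the generated llms.txt follows the common llms.txt convention: an H1 title, an optional blockquote summary, and H2 sections containing markdown link lists. The actual Firecrawl output may differ, and the function name parse_llms_txt and the sample content are illustrative only.

```python
import re
from typing import Dict, List, Tuple

# Matches list items such as "- [Title](https://example.com/page): optional notes".
LINK_RE = re.compile(r"^\s*[-*]\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)\s]+)\)")


def parse_llms_txt(text: str) -> Dict[str, List[Tuple[str, str]]]:
    """Group the (title, url) links of an llms.txt file by their H2 section."""
    sections: Dict[str, List[Tuple[str, str]]] = {}
    current = "(no section)"  # bucket for links that appear before any H2
    for line in text.splitlines():
        if line.startswith("## "):
            current = line[3:].strip()
            sections.setdefault(current, [])
            continue
        match = LINK_RE.match(line)
        if match:
            sections.setdefault(current, []).append(
                (match["title"], match["url"])
            )
    return sections


# Hypothetical sample content, not real Firecrawl output.
SAMPLE = """# Example Site
> A short summary of the site.

## Docs
- [Quickstart](https://example.com/quickstart): Getting started
- [API](https://example.com/api)
"""

if __name__ == "__main__":
    for section, links in parse_llms_txt(SAMPLE).items():
        print(section, len(links))
```

To check a real file, read it with open("llms.txt", encoding="utf-8").read() and pass the text to parse_llms_txt. An empty result suggests the file uses a different layout or the scrape returned little content.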

Key Features & Benefits

- Efficient Web Scraping: Firecrawl MCP handles JavaScript-heavy, dynamic sites and supports batch processing.

- Customizable: Configure rate limiting and batch settings to stay compliant and efficient.

- Seamless AI Integration: Cline automates data prep for LLMs, saving time on manual steps.

Practical Use Cases for LLMs.txt Files

- Data Analysis: Extract and analyze web content for model training or reporting.

- Research Automation: Collect structured data across many sites for research or competitive analysis.

- Content Summarization: Use concise summaries from llms.txt to quickly understand website content at scale.

Best Practices for Using Firecrawl MCP with Cline

- Validate URLs: Always check URLs for accessibility and proper formatting before scraping.

- Implement Rate Limiting: Configure limits in Cline or Firecrawl MCP to avoid overloading target sites or exceeding API quotas.

- Backup Generated Data: Regularly back up your LLMs.txt files to prevent data loss.

- Monitor API Usage: Track your Firecrawl API consumption and set alerts to avoid service interruptions.
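The first two practices can also be applied on the client side before a URL ever reaches Cline. The following is a minimal sketch under stated assumptions: is_valid_url checks formatting only (scheme and host) and does not test whether the site is reachable, and Throttle is a hypothetical helper that spaces out calls in your own script. It is not a Cline or Firecrawl MCP setting.

```python
import time
from urllib.parse import urlparse


def is_valid_url(url: str) -> bool:
    """Return True if the URL has an http(s) scheme and a host part."""
    parts = urlparse(url.strip())
    return parts.scheme in ("http", "https") and bool(parts.netloc)


class Throttle:
    """Enforce a minimum interval (in seconds) between consecutive calls."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock    # injectable so the class can be tested
        self._sleep = sleep
        self._last = None      # time of the previous call, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


if __name__ == "__main__":
    throttle = Throttle(min_interval=2.0)
    for url in ["https://firecrawl.dev", "firecrawl.dev"]:
        if not is_valid_url(url):
            print("skipping malformed URL:", url)
            continue
        throttle.wait()  # pause if the previous request was under 2 s ago
        print("ready to request:", url)
```

Note that a bare domain such as firecrawl.dev fails the check because it has no scheme, so prepend https:// before validating if your input list omits it.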

Conclusion

Combining Cline and Firecrawl MCP in VS Code provides a powerful, automated workflow for generating LLMs.txt files from web data—ideal for developers working with LLMs, data extraction, or research automation.

As you build smarter workflows, explore how Apidog fits into your stack. Apidog streamlines the API lifecycle—from design to testing to documentation—making it a top choice for modern development teams seeking a robust alternative to Postman.