FastAPI MCP Server is a tool that connects FastAPI applications to AI agents through the Model Context Protocol (MCP). With almost no configuration, it exposes your existing FastAPI endpoints as tools that AI models and agents can discover and call.

Introduction to FastAPI MCP

The Model Context Protocol (MCP) is an emerging standard that enables AI models to interact with external tools and resources. FastAPI MCP Server serves as a bridge between your existing FastAPI applications and MCP-compatible AI agents like Anthropic's Claude, Cursor IDE, and others, without requiring you to rewrite your API or learn complex new frameworks.

The main appeal of FastAPI MCP is its zero-configuration approach. It automatically converts your existing FastAPI endpoints into MCP-compatible tools, preserving your endpoint schemas, documentation, and functionality. AI agents can then discover and call your APIs with very little setup on your side.

You can find the FastAPI MCP server repository at: https://github.com/tadata-org/fastapi_mcp

Getting Started with FastAPI MCP

Installation

Before you can use FastAPI MCP, you'll need to install it. The developers recommend using uv, a fast Python package installer, but you can also use the traditional pip method:

Using uv:

uv add fastapi-mcp

Using pip:

pip install fastapi-mcp

Basic Implementation

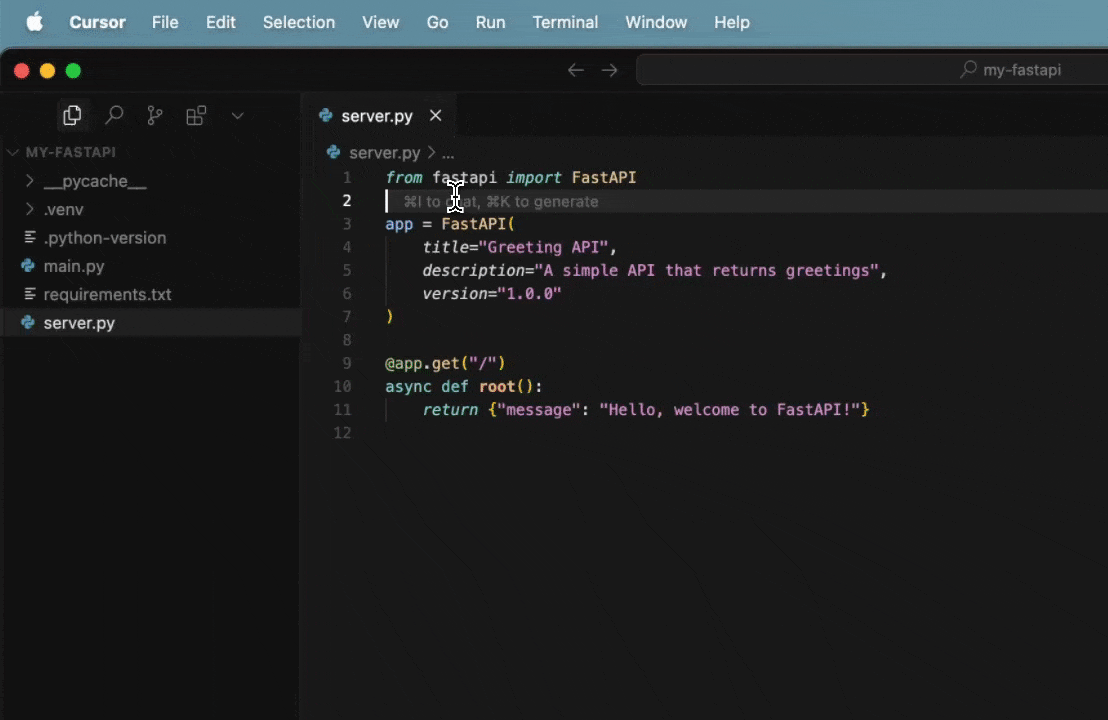

Integrating FastAPI MCP with your existing FastAPI application couldn't be simpler. Here's the most basic implementation:

from fastapi import FastAPI
from fastapi_mcp import FastApiMCP

# Your existing FastAPI application
app = FastAPI()

# Create the MCP server
mcp = FastApiMCP(
    app,
    name="My API MCP",
    description="My API description",
    base_url="http://localhost:8000"  # Important for routing requests
)

# Mount the MCP server to your FastAPI app
mcp.mount()

That's it! With this code, your MCP server is available at the /mcp path under your app's base URL (http://localhost:8000/mcp in this example). When an MCP-compatible client connects to this endpoint, it automatically discovers your FastAPI routes as available tools.

Understanding MCP Tool Names and Operation IDs

When FastAPI MCP exposes your endpoints as tools, it uses the operation_id from your FastAPI routes as the MCP tool names. FastAPI automatically generates these IDs when not explicitly provided, but they can be cryptic and less user-friendly.

Compare these two approaches:

# Auto-generated operation_id (less user-friendly)
@app.get("/users/{user_id}")
async def read_user(user_id: int):
    return {"user_id": user_id}

# Explicit operation_id (more user-friendly)
@app.get("/users/{user_id}", operation_id="get_user_info")
async def read_user(user_id: int):
    return {"user_id": user_id}

In the first example, the tool might be named something like "read_user_users__user_id__get", while the second example would simply be "get_user_info". For better usability, especially when AI agents interact with your tools, it's recommended to explicitly define operation IDs that are clear and descriptive.
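To see where a name like read_user_users__user_id__get comes from, here is a minimal sketch of the naming rule. It assumes FastAPI's default behavior of joining the function name and the path, replacing every non-word character with an underscore, and appending the lowercased HTTP method. The helper auto_operation_id is hypothetical and only mirrors that rule for illustration; it is not part of FastAPI or FastAPI MCP.

```python
import re


def auto_operation_id(func_name: str, path: str, method: str) -> str:
    """Approximate FastAPI's default operation ID (assumed rule, illustration only)."""
    # Join the function name and the path, then replace every non-word
    # character ("/", "{", "}", "-", ...) with an underscore.
    raw = f"{func_name}{path}"
    sanitized = re.sub(r"\W", "_", raw)
    # Append the lowercased HTTP method.
    return f"{sanitized}_{method.lower()}"


if __name__ == "__main__":
    # Prints: read_user_users__user_id__get
    print(auto_operation_id("read_user", "/users/{user_id}", "GET"))
```

The doubled underscores come from the slash and the braces around the path parameter, which is why an explicit operation_id usually reads better to both people and agents.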

Advanced Configuration Options

FastAPI MCP provides several customization options to tailor how your API is exposed to MCP clients.

Customizing Schema Description

You can control how much information is included in the tool descriptions:

mcp = FastApiMCP(
    app,
    name="My API MCP",
    base_url="http://localhost:8000",
    describe_all_responses=True,  # Include all possible response schemas
    describe_full_response_schema=True  # Include full JSON schema details
)

Filtering Endpoints

You might want to control which endpoints are exposed as MCP tools. FastAPI MCP offers several filtering mechanisms:

# Only include specific operations by operation ID
mcp = FastApiMCP(
    app,
    include_operations=["get_user", "create_user"]
)

# Exclude specific operations
mcp = FastApiMCP(
    app,
    exclude_operations=["delete_user"]
)

# Filter by tags
mcp = FastApiMCP(
    app,
    include_tags=["users", "public"]
)

# Exclude by tags
mcp = FastApiMCP(
    app,
    exclude_tags=["admin", "internal"]
)

# Combine filtering strategies
mcp = FastApiMCP(
    app,
    include_operations=["user_login"],
    include_tags=["public"]
)

Note that you cannot combine inclusion and exclusion filters of the same type (operations or tags), but you can use operation filters together with tag filters.
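The combination rule above can be sketched as a small validation helper. This is an illustration of the rule as the article states it, not FastAPI MCP's own implementation. The function names check_filters and is_valid are hypothetical; only the four parameter names come from the examples above.

```python
from typing import Optional, Sequence


def check_filters(
    include_operations: Optional[Sequence[str]] = None,
    exclude_operations: Optional[Sequence[str]] = None,
    include_tags: Optional[Sequence[str]] = None,
    exclude_tags: Optional[Sequence[str]] = None,
) -> None:
    """Raise ValueError for filter combinations described as invalid."""
    # Inclusion and exclusion of the same type (operations) cannot be mixed.
    if include_operations is not None and exclude_operations is not None:
        raise ValueError("Use include_operations or exclude_operations, not both")
    # The same restriction applies to tags.
    if include_tags is not None and exclude_tags is not None:
        raise ValueError("Use include_tags or exclude_tags, not both")
    # Mixing an operation filter with a tag filter is allowed.


def is_valid(**filters) -> bool:
    """Return True when the given filter combination passes check_filters."""
    try:
        check_filters(**filters)
    except ValueError:
        return False
    return True


if __name__ == "__main__":
    print(is_valid(include_operations=["user_login"], include_tags=["public"]))
    print(is_valid(include_tags=["public"], exclude_tags=["admin"]))
```

Running a check like this in your own setup code can surface a bad filter combination early, before the MCP server is constructed.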

Deployment Strategies

FastAPI MCP offers flexibility in how you deploy your MCP server.

Mounting to the Original Application

The simplest approach is mounting the MCP server directly to your original FastAPI app as shown in the basic example. This creates an endpoint at /mcp in your existing application.

Deploying as a Separate Service

You can also create an MCP server from one FastAPI app and mount it to a different app, allowing you to deploy your API and its MCP interface separately:

from fastapi import FastAPI
from fastapi_mcp import FastApiMCP

# Your original API app
api_app = FastAPI()

# Define your endpoints here...

# A separate app for the MCP server
mcp_app = FastAPI()

# Create MCP server from the API app
mcp = FastApiMCP(
    api_app,
    base_url="http://api-host:8001"  # URL where the API app will be running
)

# Mount the MCP server to the separate app
mcp.mount(mcp_app)

# Now run both apps separately:
# uvicorn main:api_app --host api-host --port 8001
# uvicorn main:mcp_app --host mcp-host --port 8000

This separation can be beneficial for managing resources, security, and scaling.

Updating MCP After Adding New Endpoints

If you add endpoints to your FastAPI app after creating the MCP server, you'll need to refresh the server to include them:

from fastapi import FastAPI
from fastapi_mcp import FastApiMCP

# Initial setup
app = FastAPI()
mcp = FastApiMCP(app)
mcp.mount()

# Later, add a new endpoint
@app.get("/new/endpoint/", operation_id="new_endpoint")
async def new_endpoint():
    return {"message": "Hello, world!"}

# Refresh the MCP server to include the new endpoint
mcp.setup_server()

Connecting to Your MCP Server

Once your FastAPI app with MCP integration is running, clients can connect to it in various ways.

Using Server-Sent Events (SSE)

Many MCP clients, like Cursor IDE, support SSE connections:

- Run your application

- In Cursor → Settings → MCP, use the URL of your MCP server endpoint (e.g., http://localhost:8000/mcp) as the SSE URL

- Cursor will automatically discover all available tools and resources

Using MCP Proxy for Clients Without SSE Support

For clients that don't support SSE directly (like Claude Desktop):

- Run your application

- Install the MCP proxy tool: uv tool install mcp-proxy

- Configure your client to use mcp-proxy

For Claude Desktop on Windows, create a configuration file (claude_desktop_config.json):

{
  "mcpServers": {
    "my-api-mcp-proxy": {
      "command": "mcp-proxy",
      "args": ["http://127.0.0.1:8000/mcp"]
    }
  }
}

For macOS, you'll need to specify the full path to the mcp-proxy executable, which you can find using which mcp-proxy.
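If you would rather generate this configuration than write it by hand, the following sketch builds the same JSON structure and resolves the full path to mcp-proxy, much as which mcp-proxy would. The function name build_claude_config is hypothetical, and the sketch only prints the result; where Claude Desktop expects the file is left to its own documentation.

```python
import json
import shutil
from typing import Optional


def build_claude_config(mcp_url: str, proxy_path: Optional[str] = None) -> dict:
    """Build a claude_desktop_config.json structure for an mcp-proxy entry."""
    # Prefer an explicit path, then whatever is found on PATH,
    # and fall back to the bare command name.
    command = proxy_path or shutil.which("mcp-proxy") or "mcp-proxy"
    return {
        "mcpServers": {
            "my-api-mcp-proxy": {
                "command": command,
                "args": [mcp_url],
            }
        }
    }


if __name__ == "__main__":
    config = build_claude_config("http://127.0.0.1:8000/mcp")
    print(json.dumps(config, indent=2))
```

Passing proxy_path explicitly is useful when the client is launched with a different PATH than your shell, which is the situation the macOS note describes.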

Real-World Use Cases

FastAPI MCP opens up numerous possibilities for AI-powered applications:

- Data Analysis Tools: Expose data processing endpoints that AI agents can use to analyze user data without requiring custom integrations for each AI model.

- Content Management Systems: Allow AI tools to fetch and update content via your existing CMS API, making content creation and management more efficient.

- Custom Search Engines: Enable AI assistants to search your specialized databases or content repositories through a simple API interface.

- E-commerce Operations: Let AI agents check inventory, product information, or place orders through your existing e-commerce API endpoints.

- Document Processing: Provide AI tools with the ability to create, convert, or analyze documents using your backend document processing capabilities.

Best Practices

To get the most out of FastAPI MCP, consider these best practices:

- Use explicit operation IDs: Define clear, descriptive operation IDs for all your endpoints to make them more usable by AI agents.

- Provide comprehensive documentation: Include detailed descriptions for each endpoint and parameter to help AI models understand how to use your tools effectively.

- Use consistent parameter naming: Adopt consistent naming conventions for similar parameters across different endpoints.

- Consider security implications: Be mindful of what endpoints you expose via MCP and implement proper authentication where needed.

- Monitor usage: Track how AI agents are using your MCP tools to identify patterns, errors, or areas for improvement.
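The first two practices can be checked mechanically. The sketch below walks an OpenAPI schema dictionary, such as the one returned by app.openapi() in FastAPI, and flags operations that lack a description or whose operation ID looks auto-generated. The helper audit_openapi and the sample schema are hypothetical, and the check for an ID ending in the HTTP method is only a heuristic.

```python
from typing import Dict, List

HTTP_METHODS = {"get", "post", "put", "patch", "delete", "options", "head"}


def audit_openapi(schema: Dict) -> List[str]:
    """Return warnings for operations that may be hard for an agent to use."""
    warnings = []
    for path, item in schema.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            label = f"{method.upper()} {path}"
            op_id = op.get("operationId", "")
            # Heuristic (assumption): auto-generated IDs end with "_<method>".
            if not op_id or op_id.endswith(f"_{method}"):
                warnings.append(f"{label}: set an explicit operation_id")
            if not op.get("description"):
                warnings.append(f"{label}: add a description")
    return warnings


if __name__ == "__main__":
    # Hypothetical schema fragment for illustration.
    sample = {
        "paths": {
            "/users/{user_id}": {
                "get": {"operationId": "read_user_users__user_id__get"}
            },
            "/items": {
                "get": {
                    "operationId": "list_items",
                    "description": "List all items in stock.",
                }
            },
        }
    }
    for warning in audit_openapi(sample):
        print(warning)
```

A check like this could run in a test suite so that new endpoints without clear names or descriptions are caught before they are exposed as MCP tools.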

Conclusion

FastAPI MCP Server makes backend services accessible to AI agents by automatically converting your existing FastAPI endpoints into MCP-compatible tools. Because it needs almost no configuration, you can avoid writing custom integrations or reworking your API design.

As the MCP ecosystem continues to grow and more AI models adopt this standard, having your APIs exposed through FastAPI MCP will position your services to be readily accessible to a wide range of AI agents and tools. Whether you're building internal tooling, developer services, or public APIs, FastAPI MCP offers a straightforward path to making your services AI-accessible.

By following the guidance in this article, you can quickly integrate FastAPI MCP into your existing FastAPI applications and start exploring the possibilities of AI-powered automation and interaction with your services.