Developers constantly seek robust AI models that deliver reliable results without excessive costs or complexity. Baidu addresses this need with ERNIE X1.1, a cutting-edge reasoning model that enhances factual accuracy, instruction following, and agentic capabilities. Launched at Wave Summit 2025, this model builds on ERNIE 4.5 foundations, incorporating end-to-end reinforcement learning for superior performance. Users access it through the ERNIE Bot, Wenxiaoyan app, or the Qianfan Model-as-a-Service (MaaS) platform via API, making it versatile for both individual and enterprise applications.

This article guides you through every aspect of using the ERNIE X1.1 API. We start with an overview of the model, then move to setup procedures, and finally explore advanced usage scenarios. By following these steps, you can integrate ERNIE X1.1 efficiently into your workflows.

What Is ERNIE X1.1? Key Features and Capabilities

Baidu designs ERNIE X1.1 as a multimodal deep-thinking reasoning model that tackles complex tasks involving logical planning, reflection, and evolution. It reduces hallucinations significantly, improves instruction adherence by 12.5%, and boosts agentic functions by 9.6% compared to its predecessor, ERNIE X1. Additionally, it achieves 34.8% higher factual accuracy, making it ideal for applications like knowledge Q&A, content generation, and tool calling.

The model supports extensive context windows, up to 32K tokens in some variants, allowing it to process long-form inputs without losing coherence. Developers leverage its multimodal capabilities to handle text, images, and even video analysis, expanding use cases beyond traditional language models. For instance, ERNIE X1.1 identifies image content accurately and simulates physical scenarios, such as particles in a rotating 3D container.

Moreover, ERNIE X1.1 prioritizes reliability: it favors accurate information even when a prompt contains misleading instructions, which sets it apart in safety-critical environments. Baidu deploys it on the Qianfan platform, ensuring scalability for high-volume API calls. This setup enables seamless integration with existing systems, whether you build chatbots, recommendation engines, or data analysis tools.

On performance, Baidu's launch evaluations show ERNIE X1.1 leading on several benchmarks.

ERNIE X1.1 Benchmark Performance: Outshining Competitors

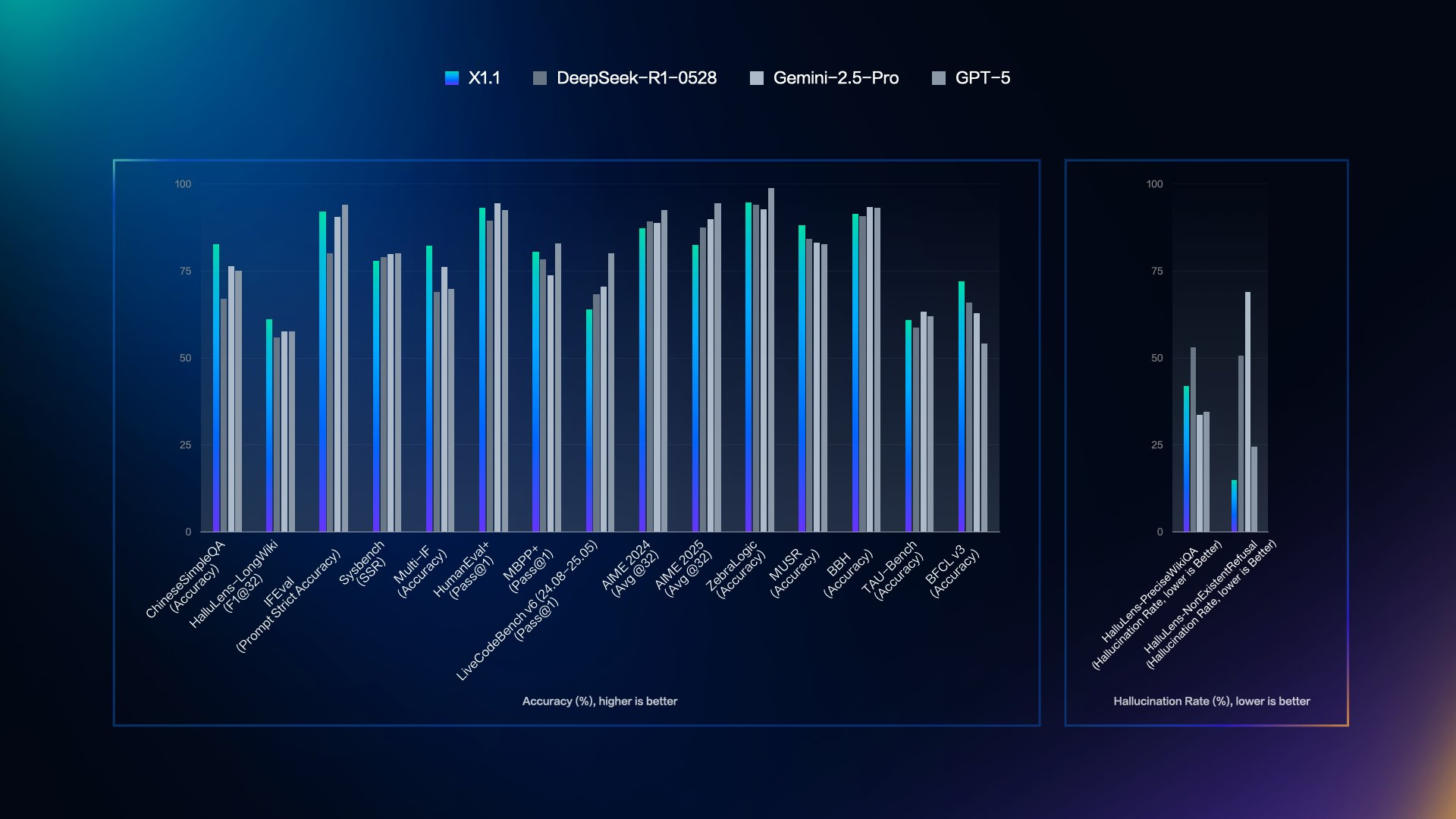

Baidu evaluates ERNIE X1.1 against top models like DeepSeek R1-0528, Gemini 2.5 Pro, and GPT-5, revealing its strengths in accuracy and low hallucination rates. A key visualization from the launch illustrates these comparisons across multiple benchmarks.

The hallucination-rate panel covers HalluQA, Hallu Lens, and other benchmarks on which lower values are better. Here, ERNIE X1.1 shows the lowest bars, indicating fewer errors, for example under 25% in HalluQA.

This data underscores how ERNIE X1.1 surpasses DeepSeek in overall performance while staying on par with GPT-5. Developers benefit from these metrics when selecting models for precision-demanding tasks. Now, let's shift to practical implementation by setting up access.

Getting Started with ERNIE X1.1 API on Qianfan Platform

You begin by registering on Baidu AI Cloud's Wenxin Qianfan platform. Visit the official site and create a developer account using your email or phone number. Once verified, apply for API access to ERNIE models. Baidu reviews applications quickly, often within hours, and then issues you a client ID and a secret key.

Next, install the necessary SDKs. Python users employ the Qianfan SDK via pip: pip install qianfan. This library handles authentication and requests efficiently. For other languages like Java or Go, Baidu provides equivalent SDKs with similar interfaces.

After installation, configure your environment variables. Set QIANFAN_AK to your access key and QIANFAN_SK to your secret key. This step secures your credentials without hardcoding them in scripts. With setup complete, you proceed to authentication.
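A script can fail fast when either variable is missing instead of surfacing a confusing authentication error later. The following is a minimal sketch: `load_qianfan_credentials` is a hypothetical helper name (it is not part of the Qianfan SDK), and only the variable names QIANFAN_AK and QIANFAN_SK come from the setup described above.

```python
import os


def load_qianfan_credentials(env=None):
    """Return (access_key, secret_key) from an environment-style mapping.

    Hypothetical helper for illustration; defaults to os.environ.
    """
    env = os.environ if env is None else env
    # Collect every missing name so the error message lists all of them.
    missing = [name for name in ("QIANFAN_AK", "QIANFAN_SK") if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return env["QIANFAN_AK"], env["QIANFAN_SK"]
```

Passing a plain dictionary as `env` makes the check easy to exercise in tests without touching the real environment.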

Authenticating Requests to ERNIE X1.1 API

The Qianfan platform uses OAuth 2.0 for authentication. You generate an access token by sending a POST request to the token endpoint. In Python, the Qianfan SDK automates this process. For manual implementation, construct a request like this:

    import requests

    # OAuth 2.0 token endpoint for Baidu AI Cloud
    url = "https://aip.baidubce.com/oauth/2.0/token"
    params = {
        "grant_type": "client_credentials",
        "client_id": "YOUR_CLIENT_ID",
        "client_secret": "YOUR_CLIENT_SECRET",
    }

    response = requests.post(url, params=params)
    response.raise_for_status()  # stop early on HTTP-level failures
    access_token = response.json()["access_token"]

Store the token, which expires after 30 days, and refresh it before it lapses. Attach it to API calls via the access_token query parameter. Because the token travels in the URL, keep it out of logs, screenshots, and shared links.
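Refreshing the token only when it is close to expiry avoids an extra round trip on every call. Here is a minimal sketch of such a cache. `TokenCache` and `fetch_token` are hypothetical names: `fetch_token` stands for any function that performs the token request and returns the token together with its lifetime in seconds (this assumes the token response reports a lifetime, which should be confirmed against the actual response).

```python
import time


class TokenCache:
    """Caches an access token and refetches it shortly before it expires."""

    def __init__(self, fetch_token, refresh_margin=60.0, clock=time.time):
        # fetch_token() must return (token, lifetime_in_seconds).
        self._fetch_token = fetch_token
        self._refresh_margin = refresh_margin
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def get(self):
        """Return a valid token, fetching a new one when needed."""
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._refresh_margin:
            token, lifetime = self._fetch_token()
            self._token = token
            self._expires_at = now + lifetime
        return self._token
```

The injectable `clock` exists only so the expiry logic can be tested without waiting; production code can rely on the default.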

Once authenticated, you target the appropriate endpoints for ERNIE X1.1.

ERNIE X1.1 API Endpoints and Request Structure

Baidu structures ERNIE APIs under the /chat path. For ERNIE X1.1, use the endpoint /chat/ernie-x1.1 or /chat/ernie-x1.1-32k for extended context, based on available variants. Confirm the exact model name in your Qianfan dashboard, as it may appear as "ernie-x1.1-preview" during initial rollout.

Send POST requests with JSON bodies. Key parameters include:

messages: A list of dictionaries with "role" (user or assistant) and "content".

temperature: Controls randomness (0.0-1.0, default 0.8).

top_p: Nucleus sampling threshold (default 0.8).

stream: Boolean for streaming responses.

An example request body:

    {
      "messages": [
        {"role": "user", "content": "Explain quantum computing."}
      ],
      "temperature": 0.7,
      "top_p": 0.9,
      "stream": false
    }

Set the Content-Type: application/json header and pass the access token as a query parameter in the URL: https://aip.baidubce.com/rpc/2.0/ai_custom/v1/wenxinworkshop/chat/ernie-x1.1?access_token=YOUR_TOKEN.
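The URL, header, and body can be assembled in one place before anything is sent. The sketch below only builds the pieces and performs no network call. `build_chat_request` is a hypothetical helper; the base URL and the `ernie-x1.1` path segment are copied from the example URL in this article and should be checked against the model name shown in your Qianfan dashboard.

```python
import json
from urllib.parse import urlencode

# Base path taken from the example URL in this article.
BASE_URL = "https://aip.baidubce.com/rpc/2.0/ai_custom/v1/wenxinworkshop/chat"


def build_chat_request(access_token, messages, model_path="ernie-x1.1",
                       temperature=0.7, top_p=0.9, stream=False):
    """Return (url, headers, body) for a chat call; nothing is sent here."""
    if not messages:
        raise ValueError("messages must contain at least one entry")
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be within 0.0-1.0")
    # urlencode escapes the token so special characters stay URL-safe.
    url = f"{BASE_URL}/{model_path}?" + urlencode({"access_token": access_token})
    headers = {"Content-Type": "application/json"}
    body = json.dumps({
        "messages": messages,
        "temperature": temperature,
        "top_p": top_p,
        "stream": stream,
    })
    return url, headers, body
```

The returned values can be handed to any HTTP client, for example `requests.post(url, headers=headers, data=body)`.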

This format supports single-turn and multi-turn conversations. For multimodal inputs, add content entries with a "type" field, such as "image_url", carrying a URL or base64 data.

Handling Responses from ERNIE X1.1 API

Responses arrive in JSON format. Key fields:

result: The generated text.

usage: Token counts for prompt, completion, and total.

id: Unique response identifier.
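Pulling these fields into a small typed object keeps the rest of the application independent of the raw JSON. The following is a sketch under the assumption that the decoded response is a dictionary shaped as described above; `ChatResult` and `parse_chat_response` are hypothetical names, and `total_tokens` is the usage key this article refers to elsewhere.

```python
from dataclasses import dataclass


@dataclass
class ChatResult:
    text: str
    total_tokens: int
    response_id: str


def parse_chat_response(payload):
    """Extract result, usage total, and id from a decoded JSON response."""
    usage = payload.get("usage", {})
    return ChatResult(
        text=payload.get("result", ""),
        total_tokens=usage.get("total_tokens", 0),
        response_id=payload.get("id", ""),
    )
```

Missing fields fall back to empty defaults here; stricter code might raise instead, depending on how much you trust the upstream response.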

In streaming mode, process chunks via Server-Sent Events (SSE), where each event contains partial results. Parse them like this in Python:

    import qianfan

    chat_comp = qianfan.ChatCompletion()
    resp = chat_comp.do(
        model="ERNIE-X1.1",
        messages=[{"role": "user", "content": "Hello"}],
        stream=True,
    )

    # Each chunk carries a partial "result" string; print them as they arrive
    for chunk in resp:
        print(chunk["result"], end="", flush=True)
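To keep the full reply rather than only printing it, the partial results can be joined as they arrive. This is a minimal sketch that assumes each chunk exposes a "result" string, as in the loop above; `collect_stream` is a hypothetical helper, not an SDK function.

```python
def collect_stream(chunks):
    """Join the partial "result" strings from an iterable of stream chunks."""
    parts = []
    for chunk in chunks:
        piece = chunk["result"]
        if piece:  # ignore chunks whose result is empty
            parts.append(piece)
    return "".join(parts)
```

Because the function accepts any iterable of mappings, it can be tested with plain dictionaries before being pointed at a live stream.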

Check for errors in the "error_code" field. Common issues include invalid tokens (code 110) and rate limits (code 18). Monitor usage to stay within quotas, which vary by subscription: free tiers are limited to 100 QPS, while paid plans scale higher.
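Turning an error payload into an exception keeps error handling in one place. The sketch below uses the two codes mentioned above; `QianfanAPIError` and `raise_for_error` are hypothetical names, and the `error_msg` key is an assumption about the error payload that should be verified against a real failed response.

```python
RATE_LIMIT_CODES = {18}      # rate limit reached, per the codes listed above
INVALID_TOKEN_CODES = {110}  # invalid or expired access token


class QianfanAPIError(Exception):
    """Raised when a response carries an "error_code" field."""

    def __init__(self, code, message):
        super().__init__(f"[{code}] {message}")
        self.code = code
        self.retryable = code in RATE_LIMIT_CODES
        self.needs_new_token = code in INVALID_TOKEN_CODES


def raise_for_error(payload):
    """Return the payload unchanged, or raise if it reports an error."""
    code = payload.get("error_code")
    if code is None:
        return payload
    # "error_msg" is an assumed field name; fall back to an empty message.
    raise QianfanAPIError(code, payload.get("error_msg", ""))
```

Callers can then branch on `retryable` to back off and try again, or on `needs_new_token` to refresh credentials.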

With basics covered, developers often turn to tools like Apidog for streamlined testing.

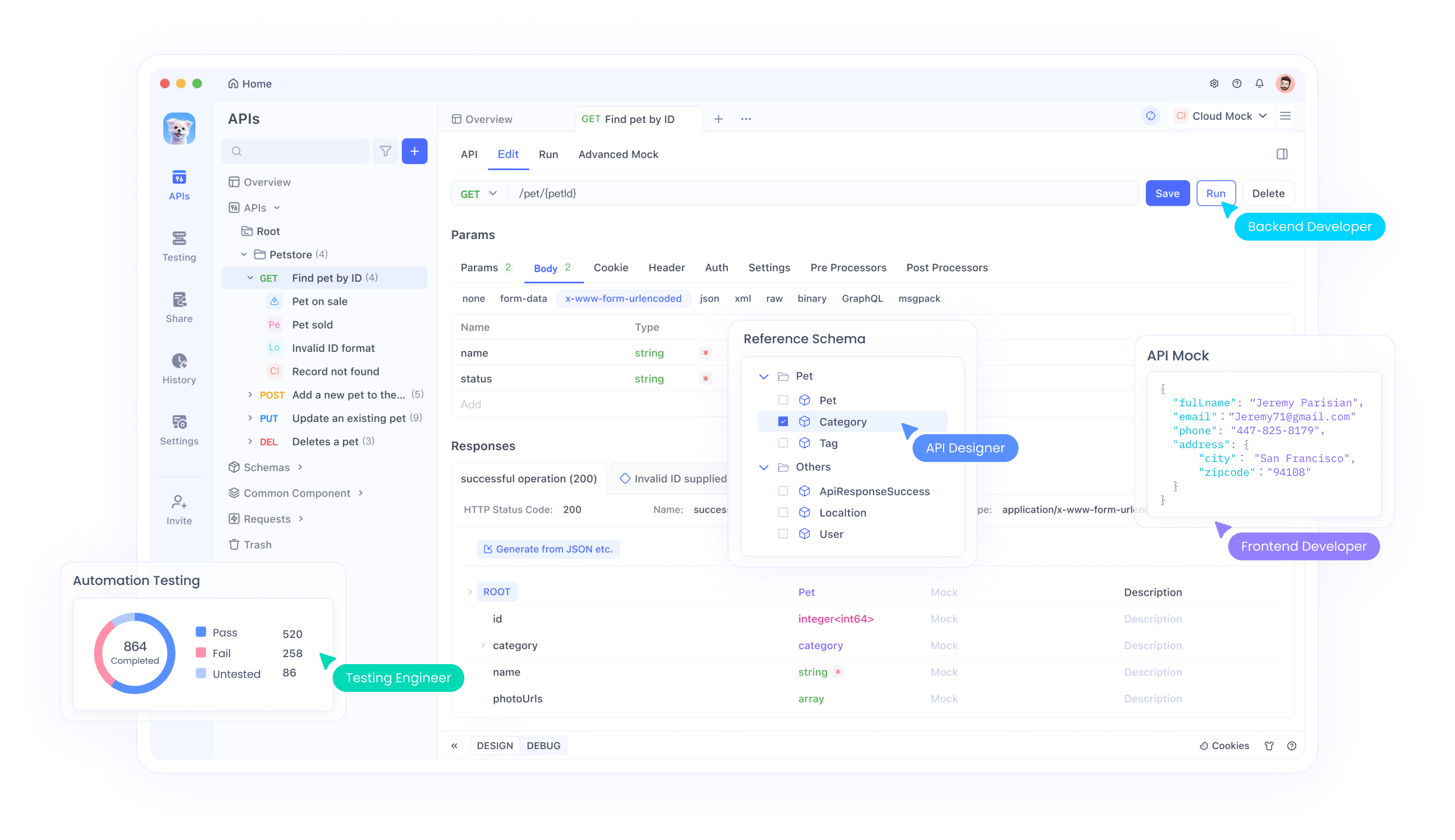

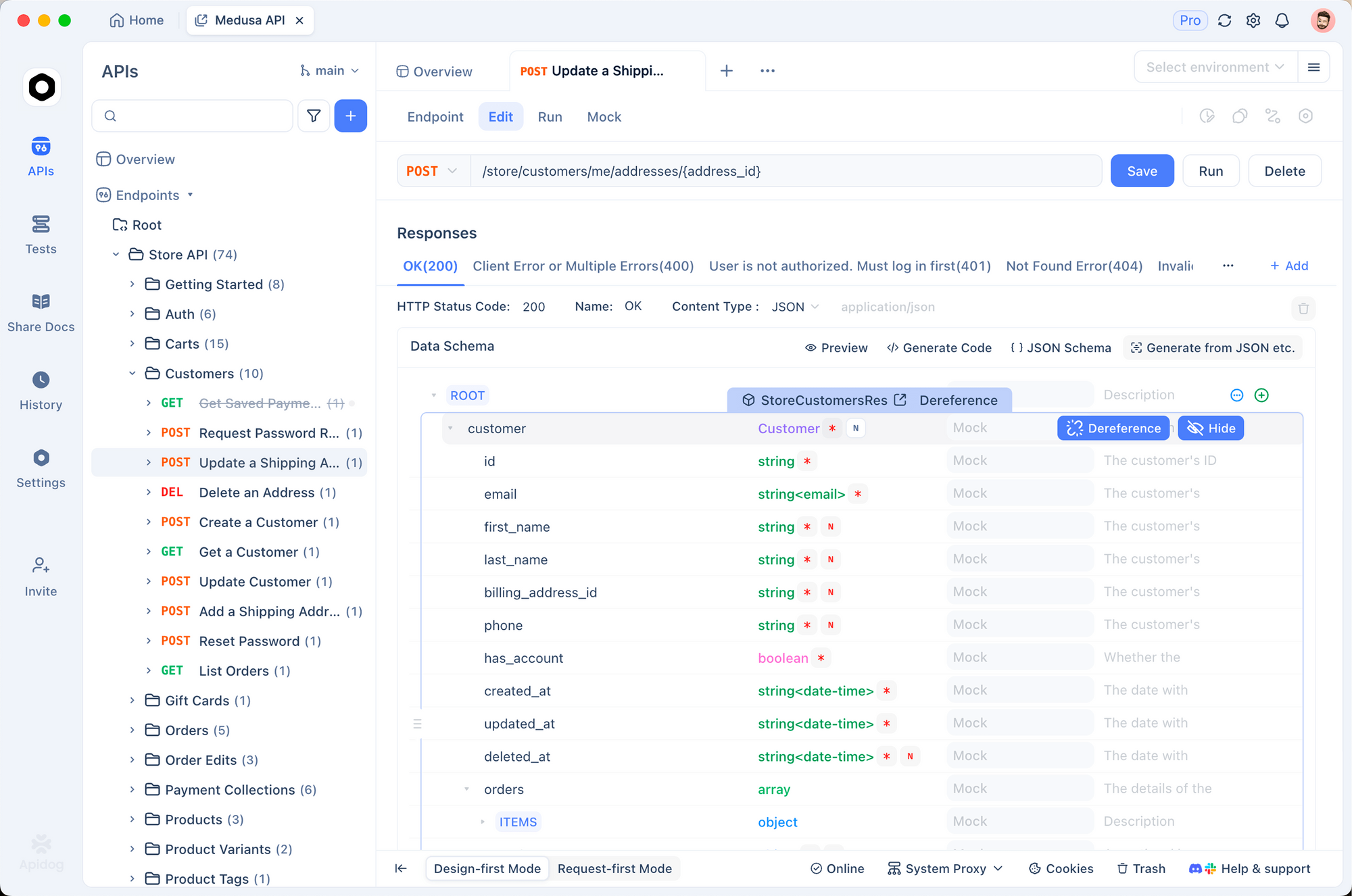

Using Apidog for ERNIE X1.1 API Testing

Apidog is a convenient platform for testing ERNIE X1.1 API endpoints. It offers intuitive interfaces for designing, debugging, and automating requests, which suits collaborative development.

First, download and install Apidog from their official site. Create a new project and import the ERNIE API specification. Apidog supports OpenAPI imports, so download Baidu's spec if available, or manually add endpoints.

To set up a request, navigate to the API section and create a new POST method for /chat/ernie-x1.1. Input your access token in the query parameters. In the body tab, build the JSON structure with messages and parameters. Apidog's variable system lets you parameterize tokens or prompts for reuse.

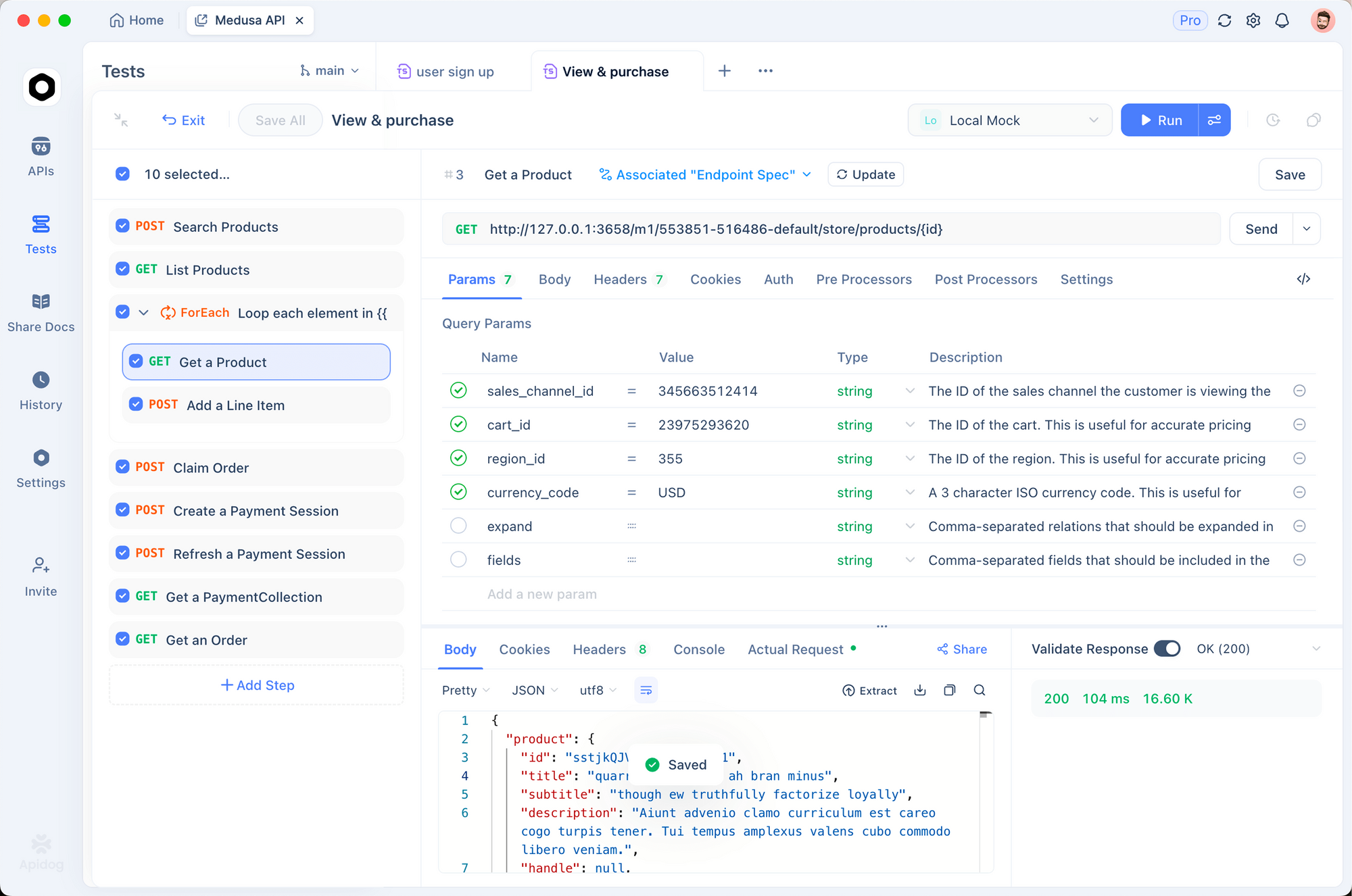

Send the request and inspect responses in real-time. Apidog highlights JSON structures, tracks timings, and logs errors. For example, test a simple query: "Generate a Python script for data analysis." Analyze the "result" field for accuracy.

Additionally, automate tests by creating scenarios. Link multiple requests to simulate multi-turn chats – one for initial prompt, another for follow-up. Use assertions to validate response keys, like ensuring "usage.total_tokens" < 1000.

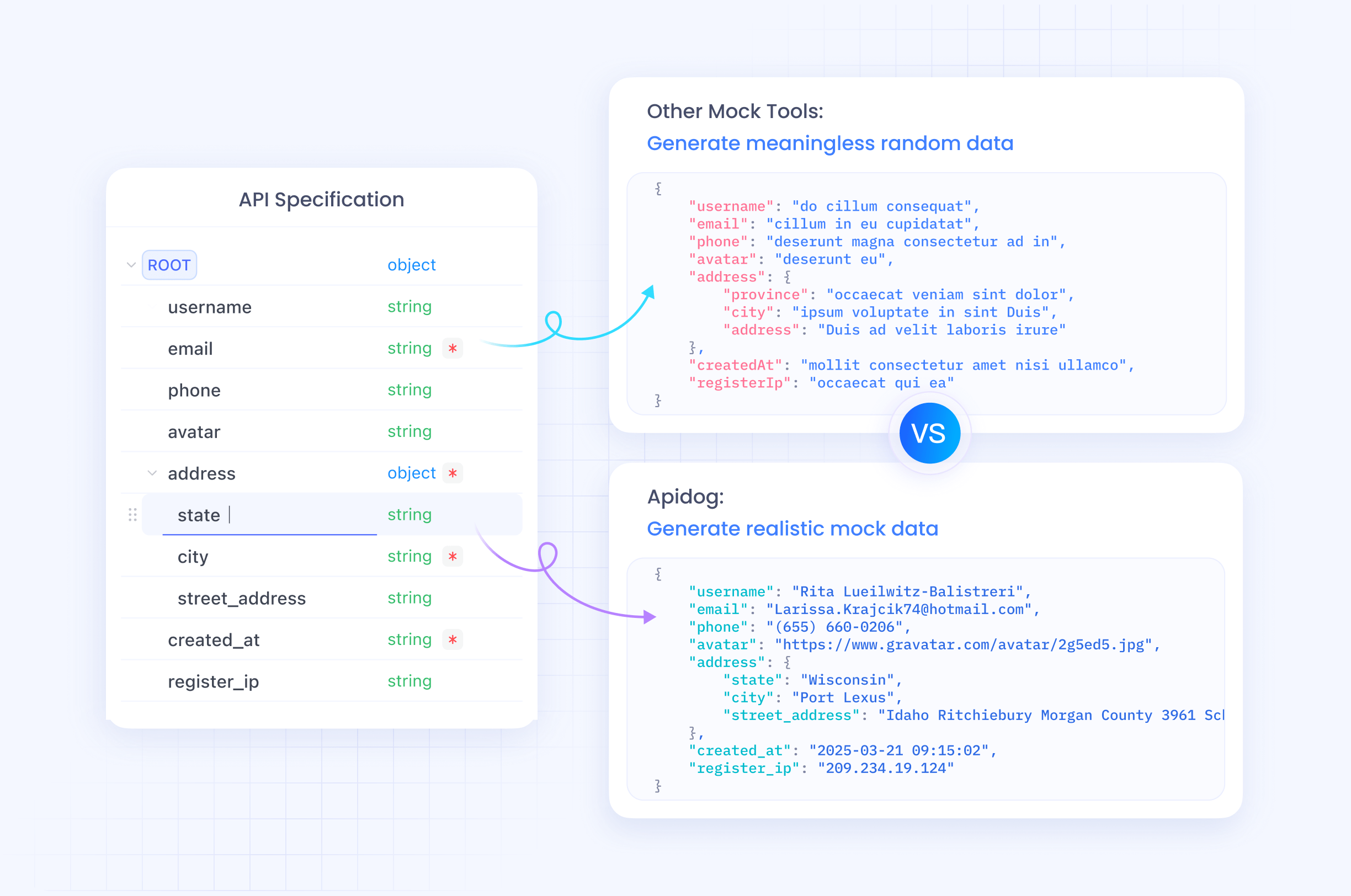

Apidog excels in mocking for offline development. Create mock servers mimicking ERNIE X1.1 responses, allowing team collaboration without live API calls. Share projects via links for feedback.

For advanced testing, integrate data sets. Upload CSV files with varied prompts and run batch tests to evaluate consistency. This approach reveals edge cases, such as long inputs triggering context limits.

By incorporating Apidog, you accelerate development cycles. However, remember to comply with Baidu's rate limits during extensive testing.

Advanced Features of ERNIE X1.1 API

ERNIE X1.1 shines in agentic tasks, where it calls external tools. Enable this by including "tools" in requests – arrays of function definitions with names, descriptions, and parameters. The model responds with tool calls if needed, which you execute and feed back.

For instance, define a weather tool:

    "tools": [
      {
        "type": "function",
        "function": {
          "name": "get_weather",
          "description": "Get current weather",
          "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string"}}
          }
        }
      }
    ]

ERNIE X1.1 processes the prompt, outputs a tool_call, and you respond with the result in the next message.
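On the client side, executing a tool call comes down to looking up the named function and passing it the decoded arguments. The sketch below illustrates that dispatch step only. The shape of the tool call (a dictionary with "name" and a JSON string under "arguments") is an assumption modeled on common function-calling APIs, not a confirmed ERNIE X1.1 response format, and `get_weather` is a stand-in that returns canned data.

```python
import json


def get_weather(location):
    """Stand-in tool; a real one would query a weather service."""
    return {"location": location, "forecast": "sunny"}


# Maps tool names declared in the request to local Python functions.
TOOL_REGISTRY = {"get_weather": get_weather}


def run_tool_call(tool_call, registry=TOOL_REGISTRY):
    """Execute one tool call and return its result as a JSON string.

    Assumes tool_call looks like {"name": ..., "arguments": "<json>"}.
    """
    name = tool_call["name"]
    if name not in registry:
        raise KeyError(f"unknown tool: {name}")
    arguments = json.loads(tool_call["arguments"])
    return json.dumps(registry[name](**arguments))
```

The returned string is what you would place in the follow-up message so the model can continue with the tool's output.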

Furthermore, leverage multimodal inputs. Upload images via base64 in messages: {"role": "user", "content": [{"type": "image_url", "image_url": {"url": "data:image/jpeg;base64,..."}}]}. This enables vision-based reasoning, like describing scenes or analyzing charts.
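Building the data URL by hand is error-prone, so a small helper is useful. This is a sketch: `image_message` is a hypothetical name, the `image_url` entry mirrors the structure shown above, and the accompanying `{"type": "text", ...}` entry for the prompt is an assumption about how text and images are combined in one message.

```python
import base64


def image_message(image_bytes, prompt, mime_type="image/jpeg"):
    """Return a user message that pairs a text prompt with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            # Assumed text entry; verify the expected shape in the API docs.
            {"type": "text", "text": prompt},
            {"type": "image_url",
             "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }
```

In practice `image_bytes` would come from reading a file in binary mode, for example `open("chart.png", "rb").read()` with `mime_type="image/png"`.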

Integrate with frameworks like LangChain for chained operations. Use QianfanChatEndpoint:

    from langchain_community.chat_models import QianfanChatEndpoint

    llm = QianfanChatEndpoint(model="ernie-x1.1")
    response = llm.invoke("Summarize this text...")

This abstraction simplifies complex workflows. Rate limits typically cap input at 2000 tokens per minute, but enterprise plans offer custom scaling. Pricing is around $0.28 per million input tokens for X1 variants.

Best Practices for ERNIE X1.1 API Integration

Engineers optimize performance by crafting precise prompts. Use system messages to set roles: {"role": "system", "content": "You are a helpful assistant."}. This guides behavior effectively.

Monitor token usage to control costs. Trim prompts and use truncation parameters. Additionally, implement retries for transient errors, like network issues, with exponential backoff.
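Retries with exponential backoff can be wrapped around any call. The following is a minimal sketch; `call_with_retries` is a hypothetical helper, and the exception types it retries on are the Python built-ins by default, so they need adjusting to whatever your HTTP client raises.

```python
import time


def call_with_retries(func, max_attempts=4, base_delay=0.5, sleep=time.sleep,
                      retry_on=(ConnectionError, TimeoutError)):
    """Call func(), retrying transient failures with doubling delays."""
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Delays grow as base_delay, 2x, 4x, ...
            sleep(base_delay * (2 ** attempt))
```

With the requests library, pass `retry_on=(requests.ConnectionError, requests.Timeout)`, since those do not derive from the built-in exceptions of the same name.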

Secure your API keys in environment variables or vaults. Avoid logging sensitive data in responses. For production, employ caching for frequent queries to reduce calls.
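Caching for frequent queries can start as an in-memory dictionary keyed by a hash of the messages. This is a sketch only: `ResponseCache` is a hypothetical class, and `ask` stands for whatever function actually sends the messages to the API and returns the reply text.

```python
import hashlib
import json


class ResponseCache:
    """Memoizes replies so identical message lists trigger one API call."""

    def __init__(self, ask):
        self._ask = ask  # ask(messages) -> reply text
        self._store = {}

    def get(self, messages):
        # Serialize deterministically so equal messages share a key.
        raw = json.dumps(messages, sort_keys=True, ensure_ascii=False)
        key = hashlib.sha256(raw.encode("utf-8")).hexdigest()
        if key not in self._store:
            self._store[key] = self._ask(messages)
        return self._store[key]
```

This fits queries where a repeated answer is acceptable; with a high temperature the model would otherwise vary its replies, so caching changes behavior in that case.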

Test across languages, as ERNIE X1.1 excels in Chinese but handles English well. Benchmark your integrations against the model's strengths, such as low hallucinations in factual Q&A.

Finally, stay updated via Baidu's developer portal for new features or model updates.

Troubleshooting Common ERNIE X1.1 API Issues

Users encounter authentication errors if tokens expire. Refresh them promptly. Invalid model names trigger 404 responses, so verify the exact model name, such as "ernie-x1.1", in your dashboard.

Exceeding the rate limit causes 429 errors. Implement queuing or upgrade your plan. For incomplete responses, increase the max_tokens parameter, up to the model's limit.
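A simple form of client-side queuing is to space requests out so they never exceed a chosen rate. The sketch below shows one way to do that; `Throttle` is a hypothetical class, and the rate you pass in should come from your own plan's quota.

```python
import time


class Throttle:
    """Spaces calls so that at most max_per_second start each second."""

    def __init__(self, max_per_second, clock=time.monotonic, sleep=time.sleep):
        self._interval = 1.0 / max_per_second
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = 0.0

    def wait(self):
        """Block until the next call is allowed, then reserve a slot."""
        now = self._clock()
        if now < self._next_allowed:
            self._sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self._interval
```

Calling `throttle.wait()` immediately before each API request is enough for a single-threaded script; concurrent callers would additionally need a lock around `wait`.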

Debug with Apidog's logs, which capture full request/response cycles. If a multimodal request fails, ensure the image format is one of the supported types (JPEG, PNG).

Contact Baidu support for persistent issues, providing error codes and timestamps.

Conclusion: Elevate Your AI with ERNIE X1.1 API

ERNIE X1.1 empowers developers to build intelligent, reliable applications. From setup to advanced integrations, this guide equips you with the knowledge to harness its full potential. Incorporate Apidog for efficient testing, and watch your projects thrive.

As AI evolves, models like ERNIE X1.1 lead the way. Start implementing today, and experience the difference in accuracy and efficiency.