Engineers and developers constantly seek powerful language models that balance performance, efficiency, and accessibility. DeepSeek-V3.2-Exp emerges as a significant advancement in this domain, offering a robust solution for handling complex AI tasks. This experimental model, developed by DeepSeek-AI, builds directly on the foundation of DeepSeek-V3.1-Terminus. It incorporates innovative features that address key challenges in large-scale language processing, particularly in long-context scenarios.

DeepSeek-V3.2-Exp has 685 billion parameters, placing it among the largest open-weight models available today. At its core lies the DeepSeek Sparse Attention (DSA) mechanism, which enables fine-grained sparse attention computations. This innovation reduces computational overhead while preserving output quality, allowing the model to process extended contexts more efficiently than its predecessors. Benchmarks show that DeepSeek-V3.2-Exp performs on par with DeepSeek-V3.1-Terminus across diverse tasks, including reasoning, coding, and agentic tool use.

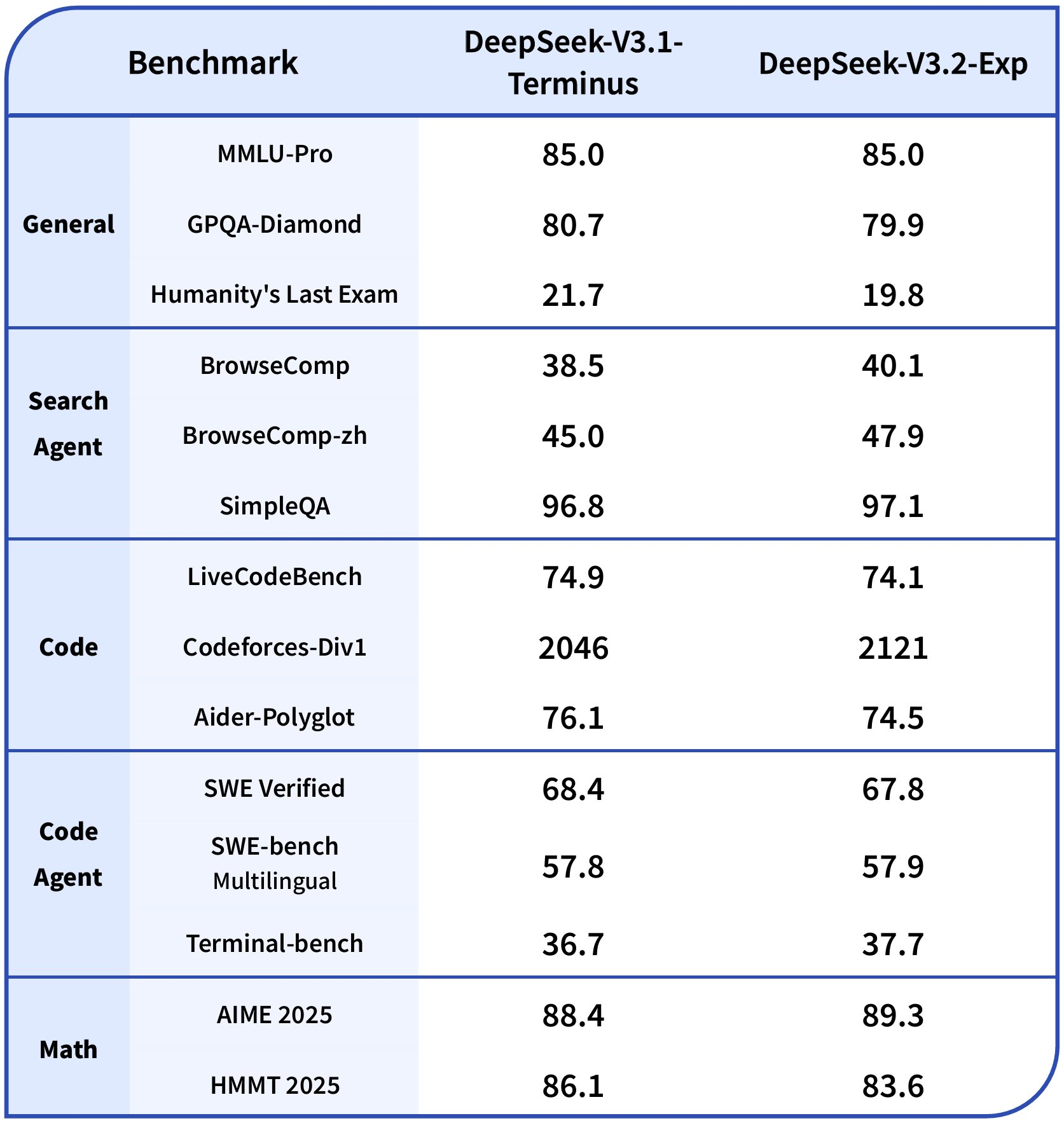

For instance, in reasoning benchmarks without tool use, DeepSeek-V3.2-Exp scores 85.0 on MMLU-Pro and 89.3 on AIME 2025. In agentic scenarios, it scores 40.1 on BrowseComp and 67.8 on SWE Verified. The comparison is meaningful because DeepSeek deliberately aligned the training configuration with that of DeepSeek-V3.1-Terminus, so differences in results can be attributed to sparse attention. Moreover, the model weights are openly available on Hugging Face, which encourages community contributions and local deployments.

Transitioning from model overview to practical implementation, the next step involves accessing the DeepSeek-V3.2-Exp API itself.

Accessing the DeepSeek-V3.2-Exp API

With the model's capabilities in view, the next step is accessing its API for real-world applications. DeepSeek provides a straightforward API that follows familiar industry conventions, which makes integration into existing systems quick.

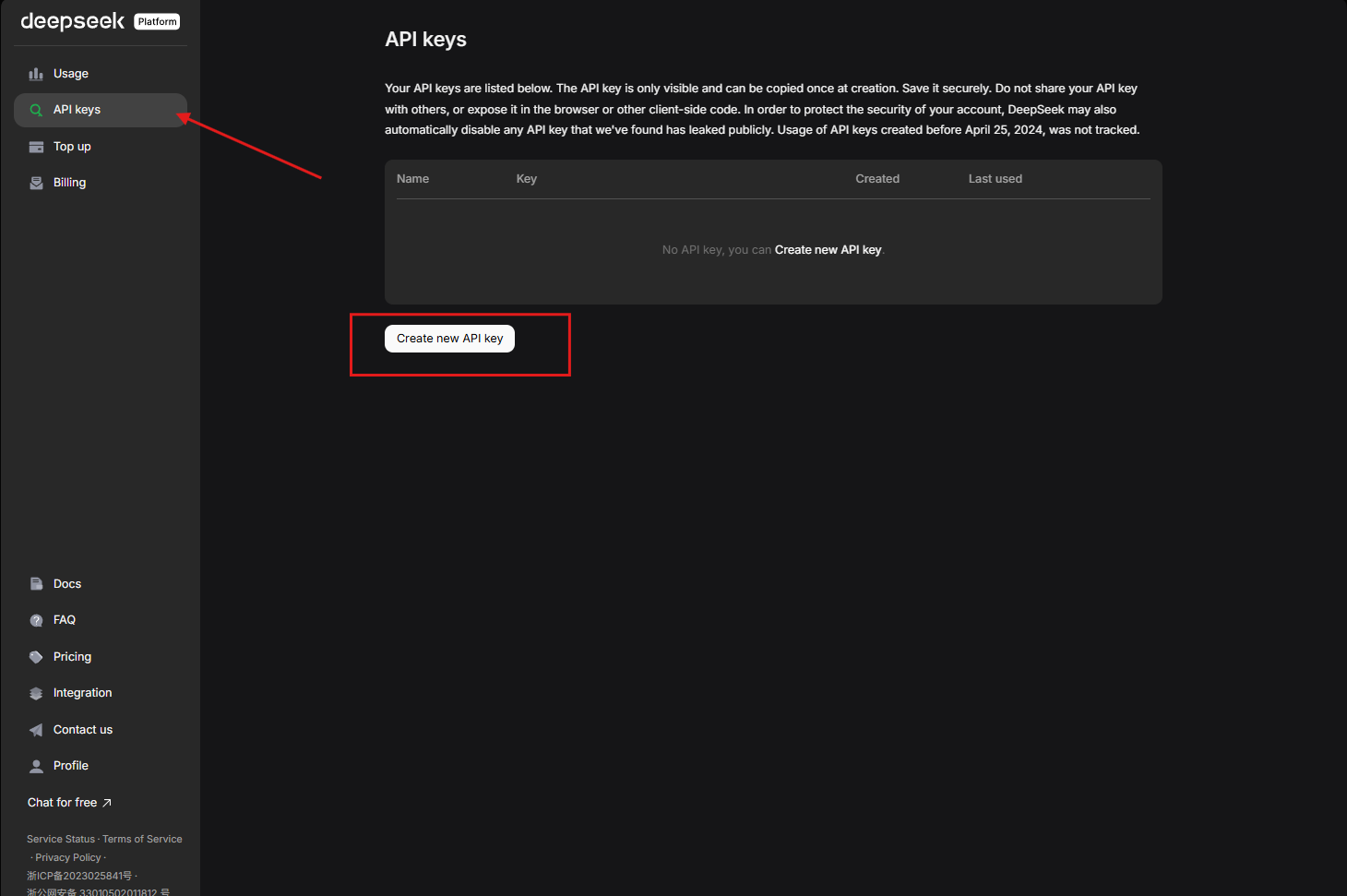

First, register on the DeepSeek platform to obtain credentials.

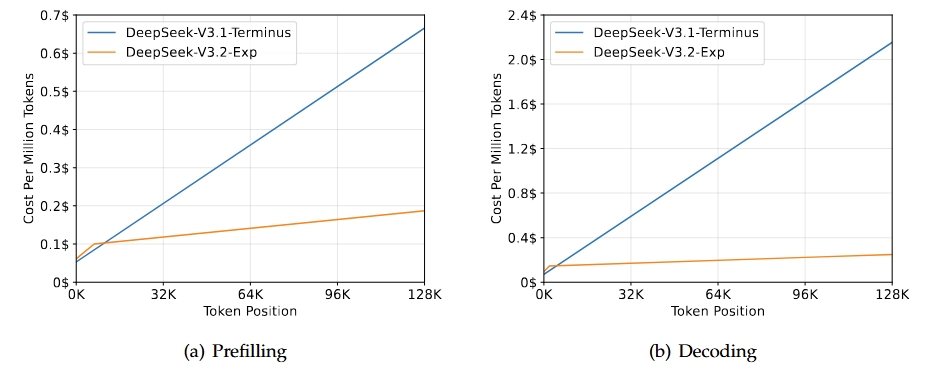

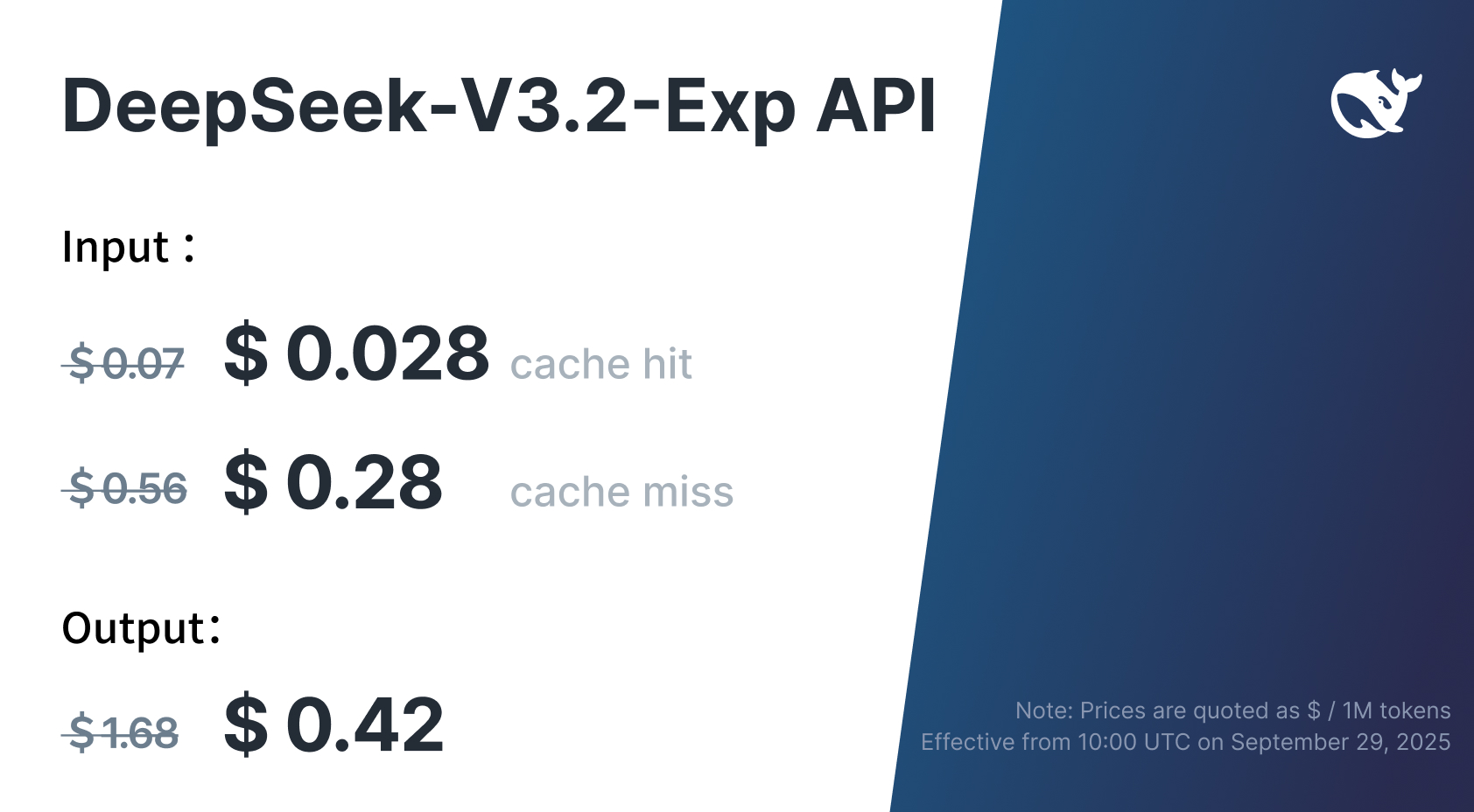

The API is compatible with OpenAI's SDK, which simplifies adoption for teams already familiar with that interface. Set the base URL to https://api.deepseek.com for standard access, which serves DeepSeek-V3.2-Exp by default. This setup gives you the model's improved efficiency, including the 50%+ reduction in API pricing announced alongside the release.

For comparative purposes, DeepSeek temporarily maintains access to DeepSeek-V3.1-Terminus via a specialized endpoint: https://api.deepseek.com/v3.1_terminus_expires_on_20251015. This allows engineers to benchmark performance differences, such as inference speed improvements from DSA. However, note that this endpoint expires on October 15, 2025, at 15:59 UTC, so plan your tests accordingly.

In addition, the API is compatible with Anthropic's API format. Set the base URL to https://api.deepseek.com/anthropic for clients built on the Anthropic SDK, or to https://api.deepseek.com/v3.1_terminus_expires_on_20251015/anthropic for the prior version. This flexibility supports diverse development environments, from web apps to command-line tools.
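To keep these base URLs from being scattered through a codebase, one option is a small lookup like the sketch below. The labels `v3.2-exp`, `v3.1-terminus`, `openai`, and `anthropic`, and the helper name `base_url_for`, are illustrative assumptions; only the URLs and the expiry time come from the text above.

```python
from datetime import datetime, timezone

# Base URLs quoted in the article; the dictionary keys are illustrative labels only.
BASE_URLS = {
    ("v3.2-exp", "openai"): "https://api.deepseek.com",
    ("v3.2-exp", "anthropic"): "https://api.deepseek.com/anthropic",
    ("v3.1-terminus", "openai"): "https://api.deepseek.com/v3.1_terminus_expires_on_20251015",
    ("v3.1-terminus", "anthropic"): "https://api.deepseek.com/v3.1_terminus_expires_on_20251015/anthropic",
}

# Expiry of the temporary V3.1-Terminus endpoints, as stated above.
TERMINUS_EXPIRY = datetime(2025, 10, 15, 15, 59, tzinfo=timezone.utc)


def base_url_for(version, api_style="openai", now=None):
    """Return the base URL for a model version and API style (hypothetical helper)."""
    now = now or datetime.now(timezone.utc)
    if version == "v3.1-terminus" and now >= TERMINUS_EXPIRY:
        raise ValueError("The temporary V3.1-Terminus endpoint has expired")
    try:
        return BASE_URLS[(version, api_style)]
    except KeyError:
        raise ValueError(f"Unknown combination: {version!r}, {api_style!r}") from None
```

Failing loudly after the expiry date makes a stale benchmark script easier to diagnose than a generic HTTP error would.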

With access established, authentication forms the critical next layer to secure your interactions.

Authentication and API Key Management

Security underpins reliable API usage, so you authenticate requests using API keys. DeepSeek requires you to generate a key from the platform dashboard. This key serves as your unique identifier, granting access to models like DeepSeek-V3.2-Exp.

Include the key in the Authorization header of each request: Bearer ${DEEPSEEK_API_KEY}. This is the standard bearer-token scheme; the key stays confidential in transit only because requests travel over HTTPS, so never send it over plain HTTP. Always store keys securely: use environment variables in code or a secret management service such as AWS Secrets Manager to avoid exposure.
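As a minimal sketch of that advice, the helper below reads the key from the environment and builds the request headers. The function name `build_headers` is hypothetical; the `DEEPSEEK_API_KEY` variable name follows the header example above.

```python
import os
from typing import Dict, Optional


def build_headers(api_key: Optional[str] = None) -> Dict[str, str]:
    """Build request headers, preferring an explicit key over the environment."""
    key = api_key or os.environ.get("DEEPSEEK_API_KEY")
    if not key:
        # Fail early instead of sending an unauthenticated request.
        raise RuntimeError("Set DEEPSEEK_API_KEY or pass api_key explicitly")
    return {
        "Authorization": f"Bearer {key}",
        "Content-Type": "application/json",
    }
```

Keeping the key out of source files means it never lands in version control by accident.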

Furthermore, monitor usage through the platform's dashboard, which tracks token consumption and billing. Given the price reductions, DeepSeek-V3.2-Exp offers cost-effective scaling; however, implement rate limiting in your applications to prevent unexpected charges. For teams, rotate keys periodically and revoke compromised ones immediately.
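One way to add client-side rate limiting is a sliding-window counter, sketched below. The class name, the limits, and the injectable `clock` parameter are assumptions made for illustration; they are not values published by DeepSeek.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most max_calls within any period_seconds window (illustrative)."""

    def __init__(self, max_calls, period_seconds, clock=time.monotonic):
        self._max_calls = max_calls
        self._period = period_seconds
        self._clock = clock  # injectable so the limiter can be tested without sleeping
        self._calls = deque()

    def allow(self):
        """Record a call and return True if it fits the window, else return False."""
        now = self._clock()
        # Drop timestamps that have aged out of the window.
        while self._calls and now - self._calls[0] >= self._period:
            self._calls.popleft()
        if len(self._calls) < self._max_calls:
            self._calls.append(now)
            return True
        return False
```

Call `allow()` before each request and skip or delay the request when it returns False.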

Building on authentication, you now explore the core endpoints that power interactions with DeepSeek-V3.2-Exp.

Key Endpoints and Request Formats for DeepSeek-V3.2-Exp API

For most work, the DeepSeek-V3.2-Exp API centers on a single endpoint, /chat/completions, which processes conversational inputs and also covers reasoning mode and function calling.

Construct POST requests to https://api.deepseek.com/chat/completions with JSON bodies. Specify the model as "deepseek-chat" for standard mode or "deepseek-reasoner" for enhanced thinking capabilities. The messages array holds the conversation history: system messages define behavior, while user messages carry the queries.

For example, a basic request body looks like this:

    {
      "model": "deepseek-chat",
      "messages": [
        {
          "role": "system",
          "content": "You are a technical expert."
        },
        {
          "role": "user",
          "content": "Explain sparse attention."
        }
      ],
      "stream": false
    }

Set "stream" to true for real-time responses, ideal for interactive applications. Headers must include Content-Type: application/json and the Authorization bearer token.

Additionally, the API supports multi-round conversations by appending assistant responses to the messages array for subsequent calls. This maintains context across interactions, leveraging DeepSeek-V3.2-Exp's long-context strengths.
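The appending step can be sketched as a small pure function. The helper name `append_turn` is hypothetical; the role names follow the request format shown earlier.

```python
def append_turn(messages, assistant_reply, next_user_message):
    """Return a new history with the assistant's reply and the next user turn added."""
    # Build a new list so the caller's original history is left untouched.
    return messages + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": next_user_message},
    ]


history = [
    {"role": "system", "content": "You are a technical expert."},
    {"role": "user", "content": "Explain sparse attention."},
]
history = append_turn(
    history,
    "Sparse attention restricts each token to a subset of the context.",
    "How does that help with long inputs?",
)
```

The resulting list is what you send as `messages` on the next call, since the API itself keeps no state between requests.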

Moreover, incorporate function calling for tool integrations. Define tools in the request, and the model selects appropriate ones based on the query. This capability supports agentic workflows, such as data retrieval or code execution.

Shifting focus to outputs, understanding response structures ensures effective parsing in your code.

Response Structures and Handling in DeepSeek-V3.2-Exp API

Responses from the DeepSeek-V3.2-Exp API follow a predictable JSON format, enabling straightforward integration. A non-stream response includes fields like id, object, created, model, choices, and usage.

The choices array contains the generated content: each choice has a message with role "assistant" and the response text. Usage details track prompt_tokens, completion_tokens, and total_tokens, aiding cost monitoring.
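A short sketch of pulling those fields out of a parsed response follows. The sample payload is hand-written for illustration (the id, timestamp, and token counts are made up), and `summarize_response` is a hypothetical helper name.

```python
def summarize_response(response):
    """Extract the reply text and token usage from a parsed non-stream response."""
    choice = response["choices"][0]
    usage = response.get("usage", {})
    return {
        "text": choice["message"]["content"],
        "prompt_tokens": usage.get("prompt_tokens"),
        "completion_tokens": usage.get("completion_tokens"),
        "total_tokens": usage.get("total_tokens"),
    }


# Illustrative payload shaped like the fields described above; values are invented.
sample = {
    "id": "example-id",
    "object": "chat.completion",
    "created": 0,
    "model": "deepseek-chat",
    "choices": [
        {"index": 0, "message": {"role": "assistant", "content": "Hello!"}}
    ],
    "usage": {"prompt_tokens": 12, "completion_tokens": 3, "total_tokens": 15},
}

summary = summarize_response(sample)
```

Logging the usage numbers per request gives you a running view of cost alongside the dashboard figures.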

For stream responses, the API sends Server-Sent Events (SSE). Each chunk arrives as a data event, with JSON objects containing delta updates to the content. Parse these incrementally to build the full response, which suits live chat interfaces.
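If you read the stream without an SDK, incremental parsing might look like the sketch below. It assumes the OpenAI-style wire format, where each event line starts with `data:` and the stream ends with a `data: [DONE]` sentinel; verify that against the responses you actually receive. The function name is hypothetical.

```python
import json


def iter_content_deltas(lines):
    """Yield text fragments from an iterable of SSE lines (assumed OpenAI-style)."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and comment lines
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # assumed end-of-stream sentinel
        chunk = json.loads(payload)
        choices = chunk.get("choices") or []
        if not choices:
            continue
        text = choices[0].get("delta", {}).get("content")
        if text:
            yield text
```

Joining the yielded fragments reconstructs the full reply, while printing them as they arrive gives the live-typing effect.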

Handle errors gracefully—common codes include 401 for authentication failures and 429 for rate limits. Implement exponential backoff retries to maintain reliability.
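Exponential backoff can be sketched independently of any HTTP library. Here `send` is a hypothetical callable returning a `(status_code, body)` tuple, and the retry count and delays are illustrative defaults rather than recommended values.

```python
import random
import time


def call_with_backoff(send, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Call send() and retry on HTTP 429 with exponentially growing delays."""
    for attempt in range(max_retries + 1):
        status, body = send()
        # Return on success, on non-retryable errors (such as 401), or when out of retries.
        if status != 429 or attempt == max_retries:
            return status, body
        # Delay doubles on each attempt; jitter avoids synchronized retries across clients.
        delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
        sleep(delay)
```

A 401 is returned immediately because retrying with the same invalid key cannot succeed.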

With requests and responses covered, practical code examples illustrate implementation.

Python Code Examples for Integrating DeepSeek-V3.2-Exp API

Developers often start with Python due to its simplicity and rich libraries. Leverage the OpenAI SDK for compatibility:

    import os

    from openai import OpenAI  # requires openai>=1.0

    # Read the key from the environment instead of hard-coding it.
    client = OpenAI(
        api_key=os.environ["DEEPSEEK_API_KEY"],
        base_url="https://api.deepseek.com",
    )

    response = client.chat.completions.create(
        model="deepseek-chat",
        messages=[
            {"role": "system", "content": "You are a coding assistant."},
            {"role": "user", "content": "Write a Python function to calculate Fibonacci numbers."},
        ],
        stream=False,
    )

    print(response.choices[0].message.content)

This code generates a complete response. For streaming:

    def stream_response(messages):
        # Request incremental chunks instead of one complete response.
        stream = client.chat.completions.create(
            model="deepseek-chat",
            messages=messages,
            stream=True,
        )
        for chunk in stream:
            # Some chunks carry no text, so check before printing.
            if chunk.choices and chunk.choices[0].delta.content is not None:
                print(chunk.choices[0].delta.content, end="")

Extend this to multi-turn chats by storing and appending messages. For function calling:

    tools = [
        {
            "type": "function",
            "function": {
                "name": "get_weather",
                "description": "Get current weather",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {"type": "string"}
                    },
                    "required": ["location"]
                }
            }
        }
    ]

Include tools in the create call, then execute the selected function based on the response.
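Executing the selected function can be sketched as a dispatch over the assistant message. This assumes the OpenAI-compatible shape, in which the message carries a `tool_calls` list whose entries hold an `id` and a `function` with a `name` and JSON-encoded `arguments`. The local `get_weather` implementation and the `TOOL_REGISTRY` name are placeholders.

```python
import json


def get_weather(location):
    """Placeholder implementation; a real version would query a weather service."""
    return f"Sunny in {location} (placeholder)"


# Maps tool names declared in the request to local Python callables.
TOOL_REGISTRY = {"get_weather": get_weather}


def run_tool_calls(assistant_message):
    """Execute each requested tool and return 'tool' messages for the next request."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        function = TOOL_REGISTRY[call["function"]["name"]]
        arguments = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append(
            {
                "role": "tool",
                "tool_call_id": call["id"],
                "content": function(**arguments),
            }
        )
    return results
```

Append the assistant message and the returned tool messages to the history, then call the API again so the model can compose its final answer.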

Beyond basic examples, advanced use cases involve JSON mode for structured outputs. Set response_format to {"type": "json_object"} to enforce JSON responses, useful for data extraction tasks.
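A request body for JSON mode might be assembled as in the sketch below. The prompt wording, the example schema, and both helper names are illustrative assumptions. JSON modes generally behave best when the prompt itself mentions JSON and shows the desired shape, so the system message does both; treat that as something to confirm against DeepSeek's documentation.

```python
import json


def build_json_mode_request(user_text):
    """Build a chat request body that asks for a JSON object reply."""
    return {
        "model": "deepseek-chat",
        "messages": [
            {
                "role": "system",
                # Hypothetical schema used only for illustration.
                "content": 'Extract the fields and reply in json, for example {"name": "...", "year": 0}.',
            },
            {"role": "user", "content": user_text},
        ],
        "response_format": {"type": "json_object"},
    }


def parse_json_reply(content):
    """Parse the assistant's reply text; raises json.JSONDecodeError if it is not valid JSON."""
    return json.loads(content)
```

Parsing with `json.loads` rather than trusting the string keeps malformed replies from propagating further into your pipeline.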

Continuing from code, integrating with specialized tools like Apidog elevates your development process.

Integrating DeepSeek-V3.2-Exp API with Apidog

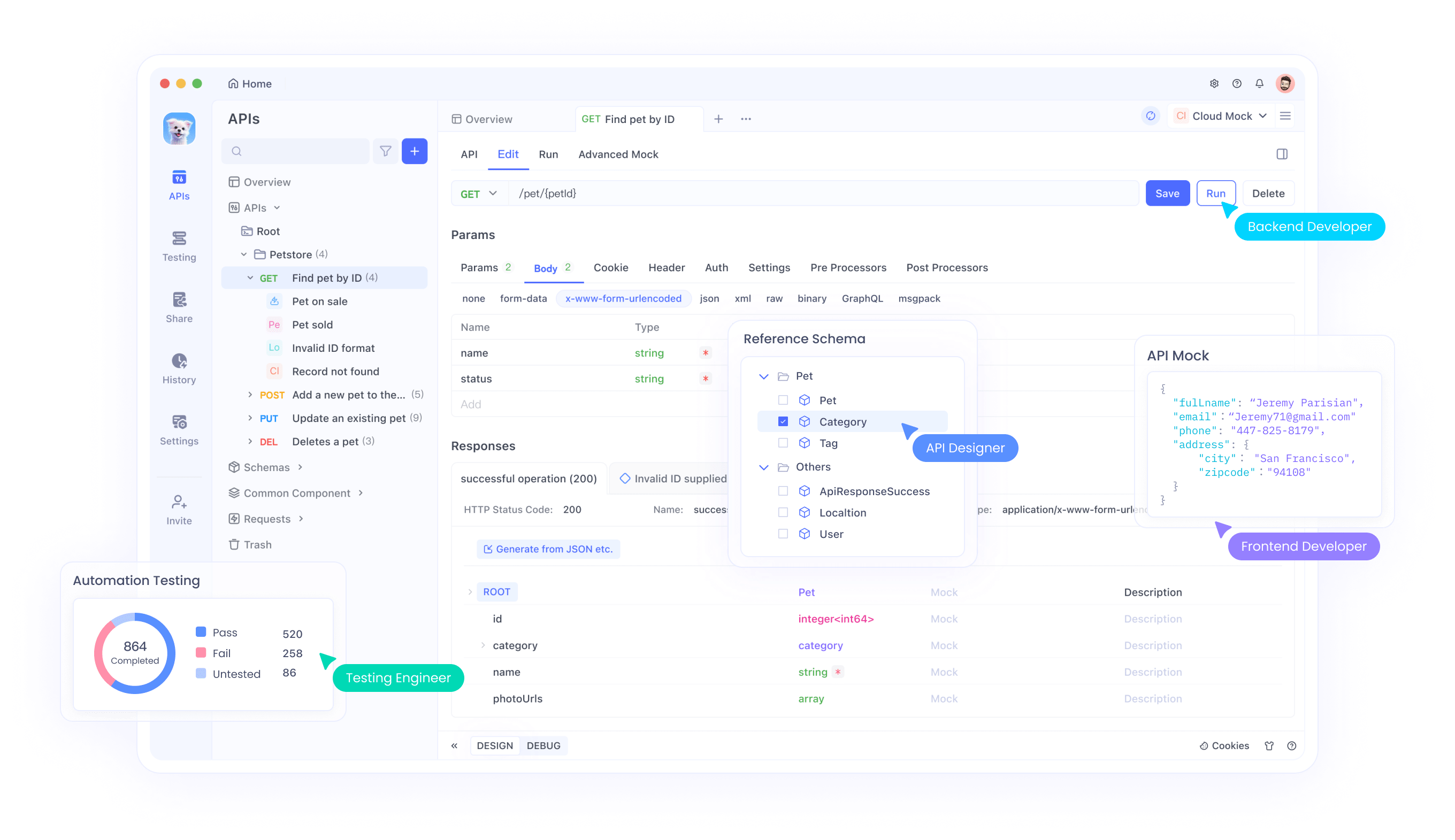

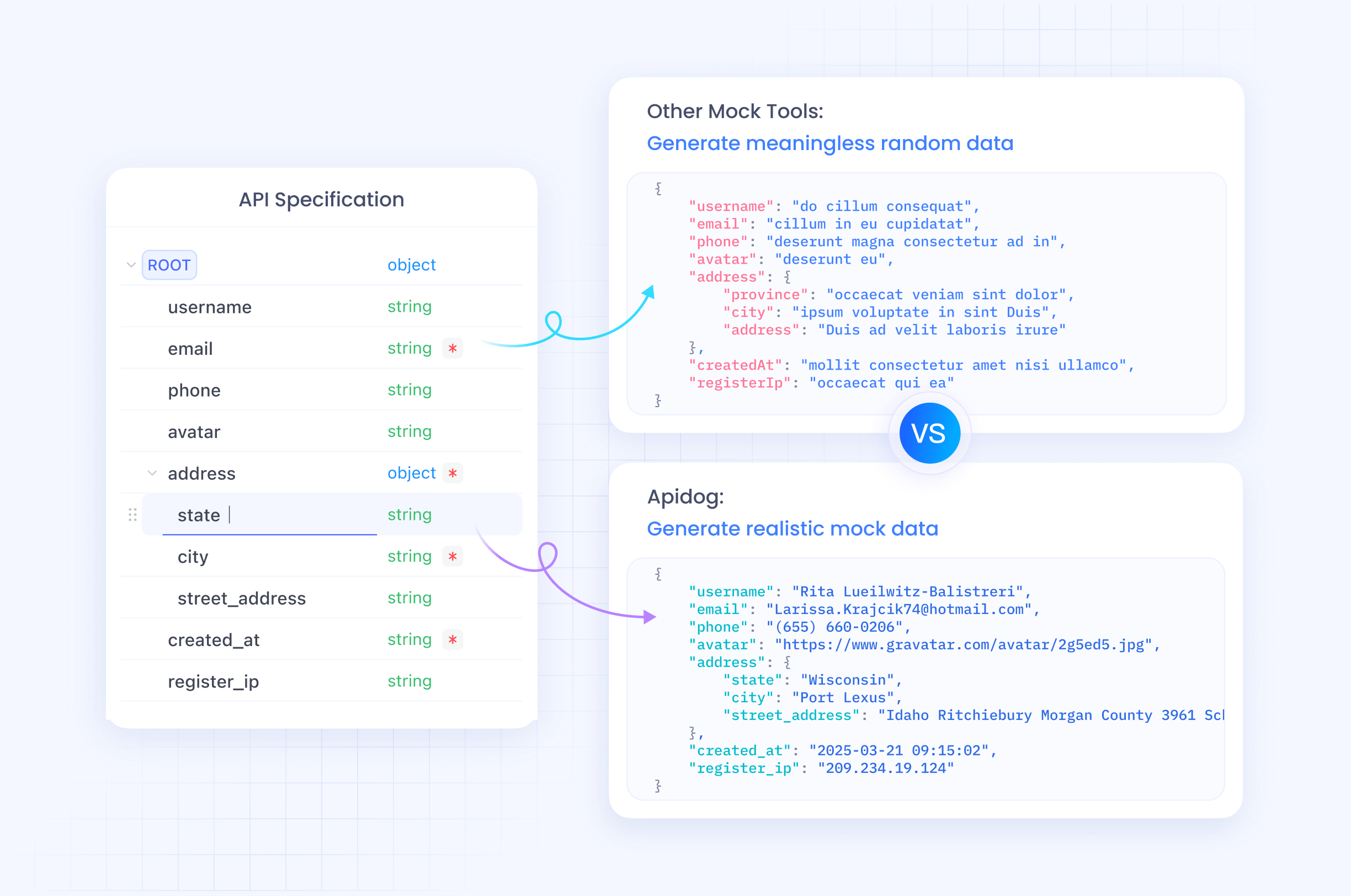

Apidog stands out as a versatile API management tool that accelerates testing and integration. You import DeepSeek-V3.2-Exp API specifications directly into Apidog, creating collections for endpoints like chat completions.

Start by generating an API key in DeepSeek, then configure Apidog's environment variables to store it securely. Use Apidog's request builder to craft POST calls: set the URL, headers, and body, then send to receive responses instantly.

Apidog excels in mocking responses for offline development—simulate DeepSeek-V3.2-Exp outputs to test edge cases without incurring API costs. Additionally, generate code snippets in languages like Python or JavaScript from successful requests, speeding up implementation.

For debugging, Apidog's timeline view traces request histories, identifying issues in authentication or parameters. Since DeepSeek-V3.2-Exp supports long contexts, test extended prompts in Apidog to verify performance.

Moreover, collaborate with teams by sharing Apidog projects, ensuring consistent API usage across developers. This integration not only saves time but also enhances reliability when deploying AI features.

As you scale, best practices ensure optimal results from DeepSeek-V3.2-Exp API.

Best Practices for Using DeepSeek-V3.2-Exp API

Optimize prompts to maximize DeepSeek-V3.2-Exp's strengths. Use clear, concise system prompts to guide behavior, and chain-of-thought techniques in reasoner mode for complex problems.

Monitor token usage—DeepSeek-V3.2-Exp handles up to 128K contexts, but efficiency drops with excessive length. Truncate histories intelligently to stay within limits.
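One simple truncation policy keeps the system prompt and as many of the most recent messages as fit a budget. The sketch below assumes a crude estimate of four characters per token, which is not the model's real tokenizer, and the function names are hypothetical.

```python
def estimate_tokens(text):
    """Rough heuristic (about 4 characters per token); NOT the model's tokenizer."""
    return max(1, len(text) // 4)


def truncate_history(messages, max_tokens):
    """Keep system messages plus the newest messages that fit within max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    # Walk backwards so the most recent turns are retained first.
    for message in reversed(rest):
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + kept[::-1]
```

For tighter control, swap `estimate_tokens` for a real tokenizer count and leave headroom for the completion itself.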

Implement caching for frequent queries to reduce calls, and batch requests where possible for high-throughput scenarios.
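A minimal in-memory cache for repeated queries could look like the sketch below. `fetch` stands in for whatever function performs the real API call, and the class name is hypothetical. Caching like this makes the most sense when you want identical answers for identical inputs.

```python
import hashlib
import json


class ResponseCache:
    """Cache replies keyed by a hash of the model name and message list."""

    def __init__(self, fetch):
        self._fetch = fetch  # callable(model, messages) -> reply; performs the real request
        self._store = {}
        self.hits = 0

    def get(self, model, messages):
        """Return a cached reply when available, otherwise fetch and store it."""
        # sort_keys makes the serialized form independent of dict key order.
        raw = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        key = hashlib.sha256(raw.encode("utf-8")).hexdigest()
        if key in self._store:
            self.hits += 1
            return self._store[key]
        reply = self._fetch(model, messages)
        self._store[key] = reply
        return reply
```

In production you would likely add an expiry policy and a size limit, or move the store to a shared service.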

Security-wise, sanitize user inputs to prevent prompt injections, and log interactions for auditing.

For performance tuning, experiment with the temperature and top_p parameters: lower values yield more deterministic outputs, while higher ones foster creativity.

Furthermore, conduct A/B testing between deepseek-chat and deepseek-reasoner modes to select the best fit for your application.

Transitioning to comparisons, evaluate DeepSeek-V3.2-Exp against predecessors.

Comparing DeepSeek-V3.2-Exp with Previous Models

DeepSeek-V3.2-Exp advances beyond DeepSeek-V3.1-Terminus primarily through DSA, which boosts inference speed by 3x in some cases while maintaining benchmark parity.

In coding tasks, it scores 2121 on Codeforces versus 2046 for DeepSeek-V3.1-Terminus, a slight improvement. However, minor dips occur on some benchmarks, such as 19.8 versus 21.7 on Humanity's Last Exam, highlighting areas for refinement.

Access the prior model temporarily for direct comparisons, adjusting base URLs as noted. This reveals DSA's efficiency gains in long-context processing, crucial for applications like document summarization.

Use tools like Apidog to run parallel tests, logging metrics for informed decisions.

Before going further, review the issues that most often surface when calling the API.

Troubleshooting Common Issues with DeepSeek-V3.2-Exp API

Encounter 401 errors? Verify your API key and header format.

Rate limits hit? Implement backoff logic: wait progressively longer between retries.

Unexpected outputs? Refine prompts or adjust parameters like max_tokens.

For stream issues, ensure your client handles SSE correctly, parsing chunks without buffering delays.

If contexts exceed limits, summarize prior messages before appending.

Report persistent problems via DeepSeek's feedback form, contributing to model improvements.

Finally, consider local deployments for enhanced control.

Local Deployment and Advanced Configurations

Beyond the API, run DeepSeek-V3.2-Exp locally using the Hugging Face weights. Convert checkpoints with the provided scripts, specifying the expert count (256) and a model-parallelism setting that matches your number of GPUs.

Launch inference demos for interactive testing, utilizing TileLang or CUDA kernels for optimized performance.

This setup suits privacy-sensitive applications or offline environments.

In summary, DeepSeek-V3.2-Exp API empowers developers with cutting-edge AI capabilities.

Conclusion: Harnessing DeepSeek-V3.2-Exp for Future Innovations

DeepSeek-V3.2-Exp represents a leap in efficient AI modeling, with its API providing accessible entry points. From authentication to advanced integrations, this guide equips you to build robust applications. Experiment, iterate, and push boundaries—small refinements in prompts or setups often yield substantial gains.