DeepSeek engineers release DeepSeek-V3.1-Terminus as an iterative enhancement to their V3.1 model, addressing user-reported issues while amplifying core strengths. This version focuses on practical improvements that developers value in real-world applications, such as consistent language outputs and robust agent functionalities. As AI models evolve, teams like DeepSeek prioritize refinements that boost reliability without overhauling the foundation. Consequently, DeepSeek-V3.1-Terminus emerges as a polished tool for tasks ranging from code generation to complex reasoning.

This release underscores DeepSeek's commitment to open-source innovation. The model now resides on Hugging Face, allowing immediate access for experimentation. Engineers build upon the V3.1 base, introducing tweaks that enhance performance across benchmarks. As a result, users experience fewer frustrations, such as mixed Chinese-English responses or erratic characters, which previously hindered seamless interactions.

Understanding the Architecture of DeepSeek-V3.1-Terminus

DeepSeek architects design DeepSeek-V3.1-Terminus with a hybrid Mixture of Experts (MoE) framework, mirroring the structure of its predecessor, DeepSeek-V3. This approach combines dense and sparse components: a router sends each token to a small subset of expert sub-networks, so only a fraction of the total parameters is active for any given token. Consequently, it achieves high efficiency, processing queries with less computation per token than a fully dense model of the same size.
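To make the routing idea concrete, here is a minimal sketch of generic top-k expert selection. It is an illustration only, not DeepSeek's actual router: the softmax gate, the four toy experts, the router scores, and the function names are all assumptions introduced for this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in chosen)
    return {i: probs[i] / kept for i in chosen}

def moe_layer(x, experts, router_scores, k=2):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    weights = route_top_k(router_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Four toy "experts"; each is just a scalar function (hypothetical).
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
scores = [0.1, 2.0, 1.5, -1.0]  # hypothetical router logits for one token

print(route_top_k(scores, k=2))       # experts 1 and 2 are selected
print(moe_layer(3.0, experts, scores))
```

With k=2, only two of the four experts are evaluated for this token, which is the source of the efficiency gain described above.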

At its core, the model boasts 685 billion parameters, distributed across expert modules. Engineers employ BF16, F8_E4M3, and F32 tensor types for these parameters, optimizing for both precision and speed. However, a noted issue involves the self-attention output projection not fully adhering to the UE8M0 FP8 scale format, which DeepSeek plans to resolve in upcoming iterations. This minor flaw does not detract significantly from overall functionality but highlights the iterative nature of model development.
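A rough back-of-the-envelope calculation shows why the tensor type matters at this scale. The sketch below assumes, hypothetically, that every weight is stored in a single format; the real checkpoint mixes the three types, so these figures are bounds rather than the actual download size.

```python
PARAMS = 685e9  # parameter count quoted above

# Storage width of each tensor type, in bytes per parameter.
BYTES_PER_PARAM = {"F8_E4M3": 1, "BF16": 2, "F32": 4}

def weight_size_gb(num_params, dtype):
    """Size of the weights in gigabytes (10^9 bytes) if stored entirely as dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_size_gb(PARAMS, dtype):,.0f} GB")
# F8_E4M3: 685 GB
# BF16: 1,370 GB
# F32: 2,740 GB
```

Storing most weights in 8-bit floating point therefore roughly halves the footprint relative to BF16, before counting activations or the KV cache.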

Furthermore, DeepSeek-V3.1-Terminus supports both thinking and non-thinking modes. In thinking mode, the model engages in multi-step reasoning before answering, which suits complex problems. Non-thinking mode, by contrast, prioritizes rapid responses for straightforward queries. Both modes come from post-training on an extended V3.1-Base checkpoint, which was built with a two-phase long-context extension method. For that extension, the DeepSeek team collected additional long documents and lengthened both training phases to improve context handling.

Key Improvements in DeepSeek-V3.1-Terminus Over Previous Versions

DeepSeek engineers refine DeepSeek-V3.1-Terminus by tackling feedback from the V3.1 release, resulting in tangible enhancements. Primarily, they improve language consistency, cutting down the Chinese-English mix-ups and random characters that plagued earlier outputs. This change ensures cleaner, more professional responses, especially in multilingual environments.

Additionally, agent upgrades stand out as a major advancement. Code Agents now handle programming tasks with heightened accuracy, while Search Agents improve retrieval efficiency. These improvements stem from refined training data and updated templates, allowing the model to integrate tools more seamlessly.

Benchmark comparisons reveal these gains quantitatively. For instance, in reasoning mode without tool use, MMLU-Pro scores rise from 84.8 to 85.0, and GPQA-Diamond improves from 80.1 to 80.7. Humanity's Last Exam sees a substantial jump from 15.9 to 21.7, demonstrating stronger performance on challenging evaluations. LiveCodeBench remains nearly stable at 74.9, with minor fluctuations in Codeforces and Aider-Polyglot.

Shifting to agentic tool use, the model excels further. BrowseComp increases from 30.0 to 38.5, and SimpleQA climbs from 93.4 to 96.8. SWE Verified advances to 68.4 from 66.0, SWE-bench Multilingual to 57.8 from 54.5, and Terminal-bench to 36.7 from 31.3. Although BrowseComp-zh dips slightly, overall trends indicate superior reliability.
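The before-and-after pairs quoted in the two paragraphs above can be tabulated to see where the gains concentrate. This sketch uses only the scores given in this article; benchmarks without a stated V3.1 value are left out.

```python
# (V3.1 score, V3.1-Terminus score), as quoted in the text above.
scores = {
    "MMLU-Pro": (84.8, 85.0),
    "GPQA-Diamond": (80.1, 80.7),
    "Humanity's Last Exam": (15.9, 21.7),
    "BrowseComp": (30.0, 38.5),
    "SimpleQA": (93.4, 96.8),
    "SWE Verified": (66.0, 68.4),
    "SWE-bench Multilingual": (54.5, 57.8),
    "Terminal-bench": (31.3, 36.7),
}

def deltas(table):
    """Point gain per benchmark, rounded to one decimal place."""
    return {name: round(new - old, 1) for name, (old, new) in table.items()}

gains = deltas(scores)
for name in sorted(gains, key=gains.get, reverse=True):
    print(f"{name:<24} +{gains[name]}")
```

Sorted this way, the largest improvements fall on BrowseComp, Humanity's Last Exam, and Terminal-bench, while the knowledge benchmarks move by less than a point.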

Moreover, DeepSeek-V3.1-Terminus achieves these without sacrificing speed. It responds faster than some competitors while maintaining quality comparable to DeepSeek-R1 on difficult benchmarks. This balance arises from optimized post-training, incorporating long-context data for better generalization.

Performance Benchmarks and Evaluations for DeepSeek-V3.1-Terminus

Evaluators assess DeepSeek-V3.1-Terminus across diverse benchmarks, revealing its strengths in reasoning and tool integration. In non-tool reasoning, the model scores 85.0 on MMLU-Pro, showcasing broad knowledge retention. GPQA-Diamond reaches 80.7, indicating proficiency in graduate-level questions.

Furthermore, Humanity's Last Exam at 21.7 highlights improved handling of esoteric topics. Coding benchmarks like LiveCodeBench (74.9) and Aider-Polyglot (76.1) demonstrate practical utility, though the Codeforces rating dips to 2046, suggesting areas for further tuning.

Transitioning to agentic scenarios, BrowseComp's 38.5 score reflects enhanced web navigation capabilities. SimpleQA's near-perfect 96.8 underscores accuracy in query resolution. SWE-bench suites, including Verified (68.4) and Multilingual (57.8), affirm its software engineering prowess. Terminal-bench at 36.7 shows competence in command-line interactions.

Comparatively, DeepSeek-V3.1-Terminus outperforms V3.1 on most of these metrics. It also rivals closed-source models in efficiency, with a cost advantage cited as high as 68x (presumably relative to those closed-source models) and minimal performance trade-offs, making it attractive for business applications.

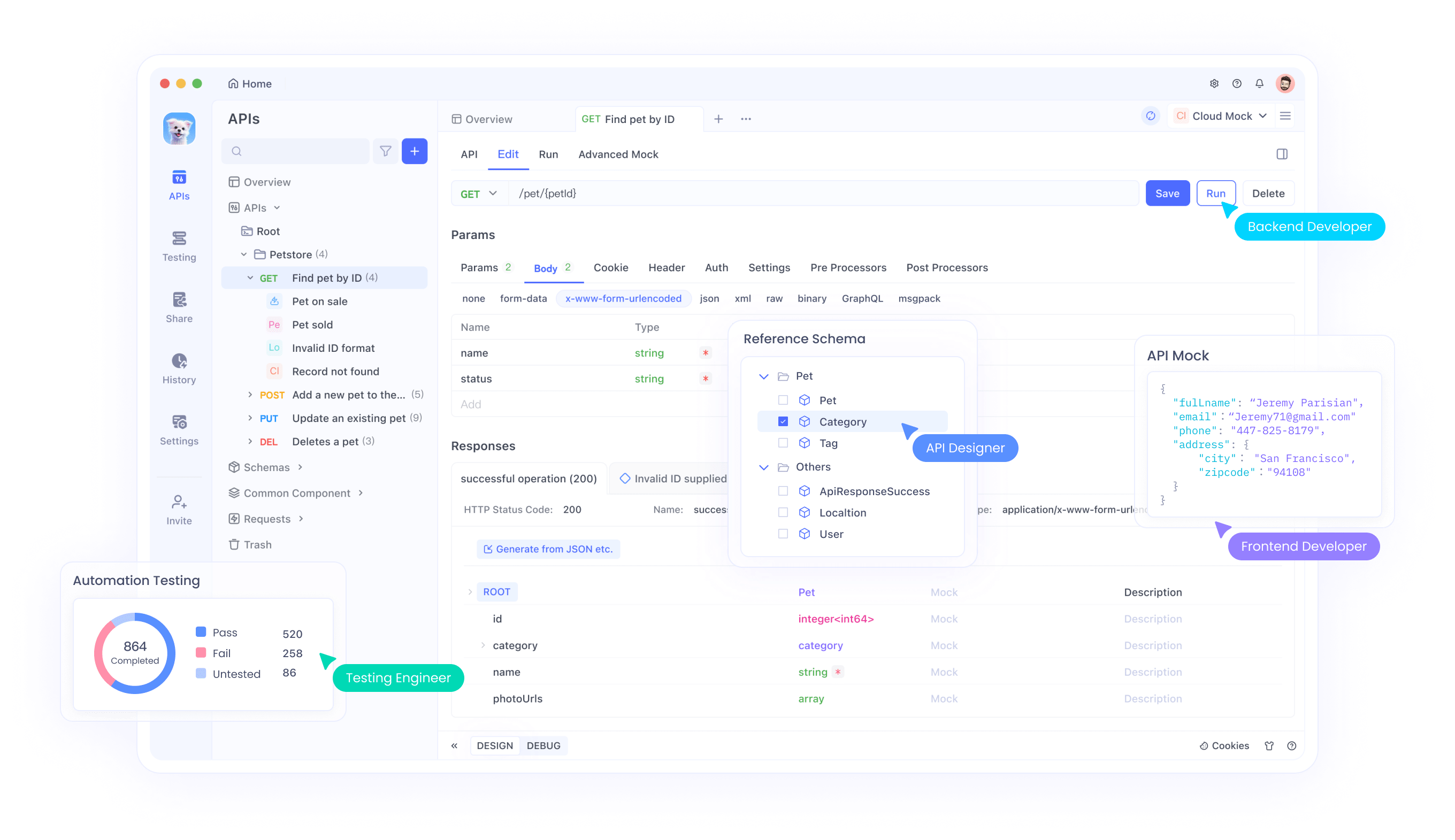

Integrating DeepSeek-V3.1-Terminus with APIs and Tools Like Apidog

Developers integrate DeepSeek-V3.1-Terminus via its OpenAI-compatible API, simplifying adoption. They specify 'deepseek-chat' for non-thinking mode or 'deepseek-reasoner' for thinking mode.

To begin, users generate an API key on the DeepSeek platform. With Apidog, they set up environments by entering the base URL (https://api.deepseek.com) and storing the key as a variable. This setup facilitates testing chat completions and function calls.
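The same request that Apidog sends can be sketched in a few lines of standard-library Python. This is a minimal example under stated assumptions: the `/chat/completions` path follows the OpenAI-compatible convention, and the `DEEPSEEK_API_KEY` environment variable name and the helper names are hypothetical choices made for illustration.

```python
import json
import os
import urllib.request

BASE_URL = "https://api.deepseek.com"  # base URL given above

def build_chat_request(prompt, thinking=False, api_key=None):
    """Build (but do not send) a chat completion request."""
    # Model names as given above: one per mode.
    model = "deepseek-reasoner" if thinking else "deepseek-chat"
    # DEEPSEEK_API_KEY is an assumed variable name, not an official one.
    key = api_key or os.environ.get("DEEPSEEK_API_KEY", "")
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",  # path assumed from the OpenAI convention
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {key}", "Content-Type": "application/json"},
        method="POST",
    )

def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]

if __name__ == "__main__":
    req = build_chat_request("Summarize MoE routing in one sentence.")
    print(req.full_url, json.loads(req.data)["model"])
    # print(send(req))  # uncomment once a valid API key is set
```

Keeping request construction separate from sending makes it easy to inspect the payload, in Apidog or in a unit test, before spending any tokens.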

Moreover, Apidog supports debugging, allowing developers to verify responses efficiently. For function calling, they define tools in requests, enabling the model to invoke external functions dynamically.

Pricing remains competitive at $1.68 per million output tokens, encouraging widespread use. Integrations extend to frameworks like Geneplore AI or AI/ML API, supporting multi-agent systems.

Comparisons with Competing AI Models

DeepSeek-V3.1-Terminus competes effectively against models like DeepSeek-R1, matching quality in reasoning while responding faster. It surpasses V3.1 in tool use, with BrowseComp gains of 8.5 points.

Against proprietary options, it offers open-source accessibility and cost efficiency. For example, it approaches Sonnet-level performance in benchmarks.

Moreover, its hybrid modes provide versatility absent in some competitors. Therefore, it appeals to budget-conscious developers seeking robust features.

Deployment Strategies for DeepSeek-V3.1-Terminus

Engineers deploy the model locally using the DeepSeek-V3 repo. For cloud, platforms like AWS Bedrock host it.

The repository's optimized inference code simplifies setup, so the same model can run anywhere from a self-hosted cluster to a managed cloud service.

Advanced Features: Function Calling and Tool Integration

Developers implement function calling by defining schemas in API requests. This enables dynamic interactions, like querying databases.
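As an illustration, the sketch below defines one tool in the OpenAI-style schema and shows how a returned tool call might be dispatched to local code. The `lookup_order` function, its in-memory "database", and the simulated tool call are hypothetical; a real response would come back from the API rather than being constructed by hand.

```python
import json

def lookup_order(order_id):
    """Hypothetical local function standing in for a database query."""
    fake_db = {"A100": "shipped", "B200": "processing"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "unknown")}

# Tool schema passed in the request's "tools" field (OpenAI-compatible format).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "lookup_order",
        "description": "Return the shipping status for an order ID.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]

REGISTRY = {"lookup_order": lookup_order}

def run_tool_call(tool_call):
    """Execute one tool call and build the 'tool' message to send back."""
    fn = REGISTRY[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(fn(**args)),
    }

# Simulated tool call, shaped like an entry in message["tool_calls"].
simulated_call = {
    "id": "call_0",
    "type": "function",
    "function": {"name": "lookup_order", "arguments": '{"order_id": "A100"}'},
}
print(run_tool_call(simulated_call))
```

The resulting message is appended to the conversation and sent back so the model can phrase its final answer using the tool's output.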

Apidog assists in testing these features, ensuring robust integrations.

Cost Analysis and Optimization Tips

DeepSeek-V3.1-Terminus is inexpensive per token, and mode selection is the main lever for lowering costs further: use non-thinking mode for simple tasks and reserve thinking mode for problems that need multi-step reasoning.
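A quick estimate shows how much mode selection can matter. The sketch below uses the $1.68 per million output tokens quoted earlier; the request volume and the tokens-per-reply figures are hypothetical, and input-token charges are ignored.

```python
OUTPUT_PRICE_PER_MILLION = 1.68  # USD, output-token price quoted in this article

def output_cost(output_tokens, price_per_million=OUTPUT_PRICE_PER_MILLION):
    """Cost in USD for a given number of output tokens."""
    return output_tokens / 1_000_000 * price_per_million

# Hypothetical workload: these numbers are assumptions, not measurements.
requests_per_day = 10_000
avg_output_tokens = {"non-thinking": 300, "thinking": 2_000}

for mode, tokens in avg_output_tokens.items():
    daily = output_cost(requests_per_day * tokens)
    print(f"{mode}: ${daily:.2f} per day")
# non-thinking: $5.04 per day
# thinking: $33.60 per day
```

Under these assumed token counts, routing simple queries to non-thinking mode cuts the output bill by more than a factor of six.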

Monitor usage via Apidog to manage expenses effectively.

User Feedback and Community Reception

Users celebrate the release, noting stability gains. Some anticipate V4, reflecting high expectations.

Forums like Reddit buzz with discussions on its agentic strengths.

Conclusion: Embracing DeepSeek-V3.1-Terminus in AI Development

DeepSeek-V3.1-Terminus refines AI capabilities, offering developers a powerful, efficient tool. Its improvements in agents and language pave the way for innovative applications. As teams adopt it, the model continues to evolve, driven by community input.