Developers often seek robust APIs to power AI applications. The DeepSeek-V3.1 API is a versatile option: it offers advanced language modeling, with features such as chat completions and tool integrations. This post walks through how to use it step by step.

First, obtain an API key from the DeepSeek platform. Sign up on their site and generate the key. With that, you start making requests.

Next, understand the core components. DeepSeek-V3.1 is a large-scale model that supports contexts of up to 128K tokens, so it can take long, complex queries in a single request. It also includes a thinking mode for deeper reasoning. As you proceed, note how these elements fit together.

What Is DeepSeek-V3.1 and Why Choose It?

DeepSeek-V3.1 is an evolution of DeepSeek's earlier models. Engineers at DeepSeek-AI built it as a hybrid model that supports both thinking and non-thinking modes. The model has 671 billion parameters in total but activates only 37 billion per token during inference. This design reduces computational demands while maintaining high performance.

There are two main variants: DeepSeek-V3.1-Base and the full DeepSeek-V3.1. The base version serves as a foundation for further training. It underwent a two-phase long context extension. In the first phase, training expanded to 630 billion tokens for 32K context. The second phase then added 209 billion tokens for 128K context. Additional long documents enriched the dataset.

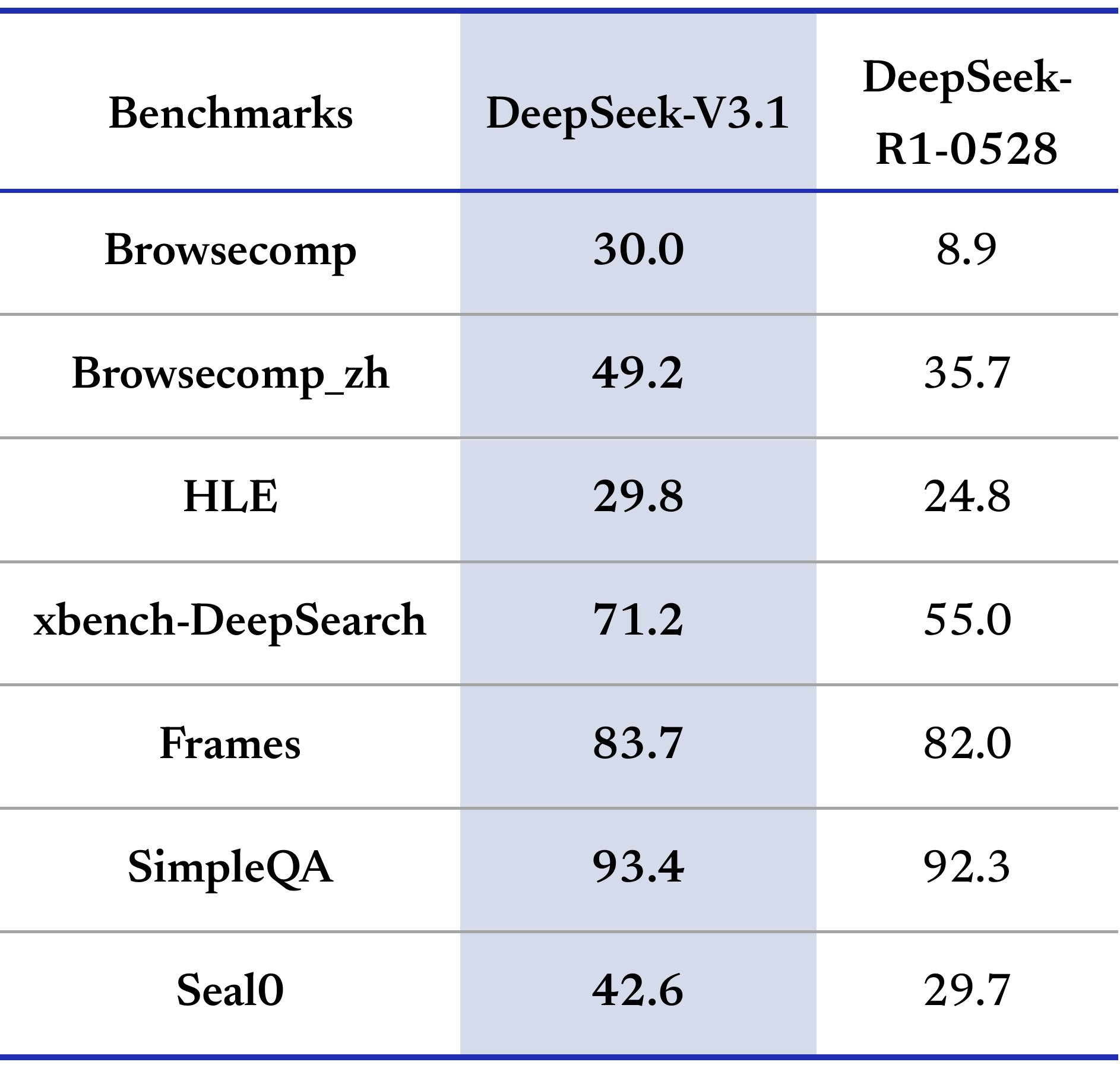

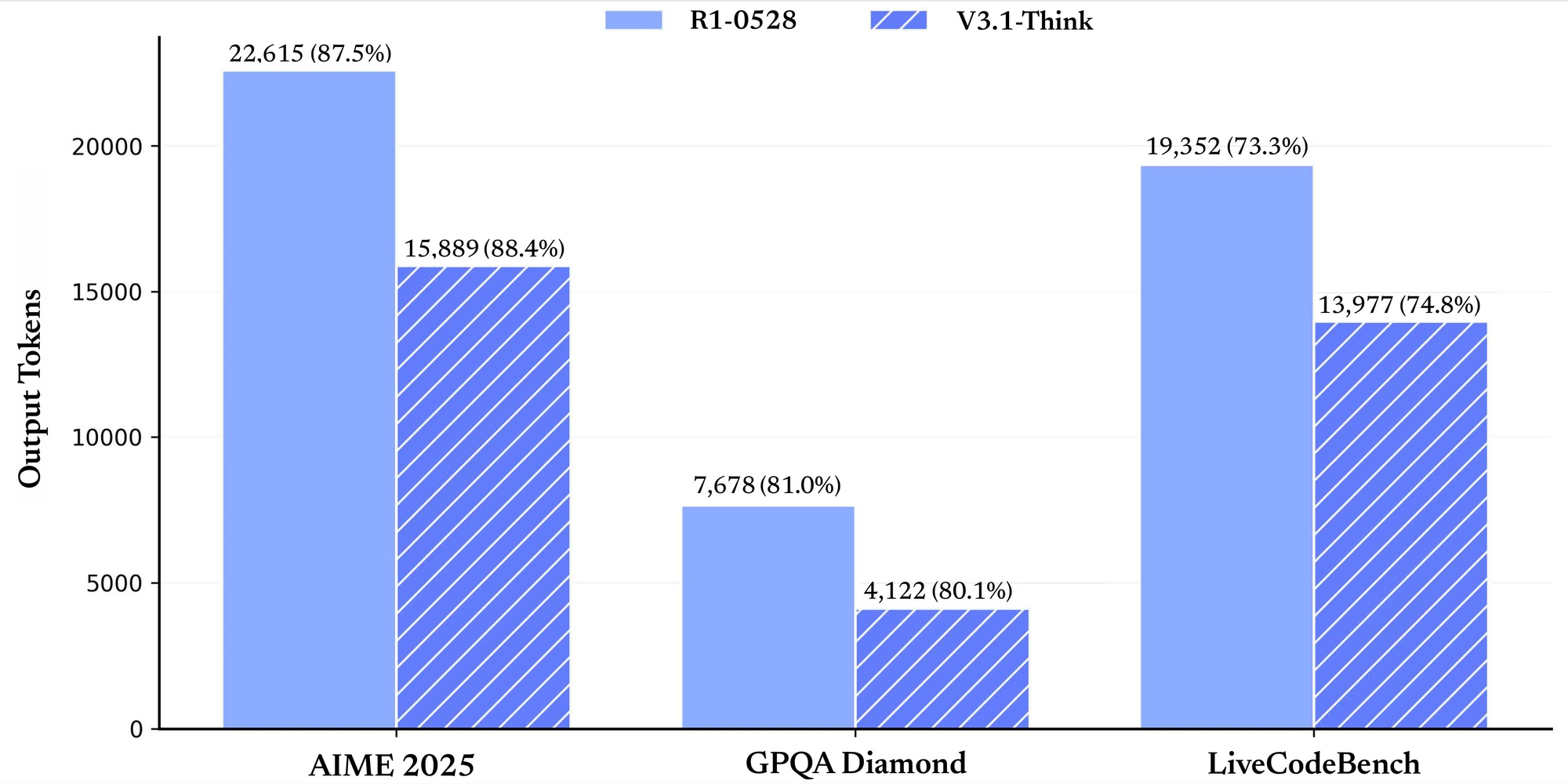

Performance benchmarks highlight its strengths. For general tasks, it scores 91.8 on MMLU-Redux in non-thinking mode and 93.7 in thinking mode. On GPQA-Diamond, it reaches 74.9 and 80.1 respectively. In code-related evaluations, LiveCodeBench yields 56.4 in non-thinking and 74.8 in thinking. Math benchmarks like AIME 2024 show 66.3 and 93.1. These numbers demonstrate reliability across domains.

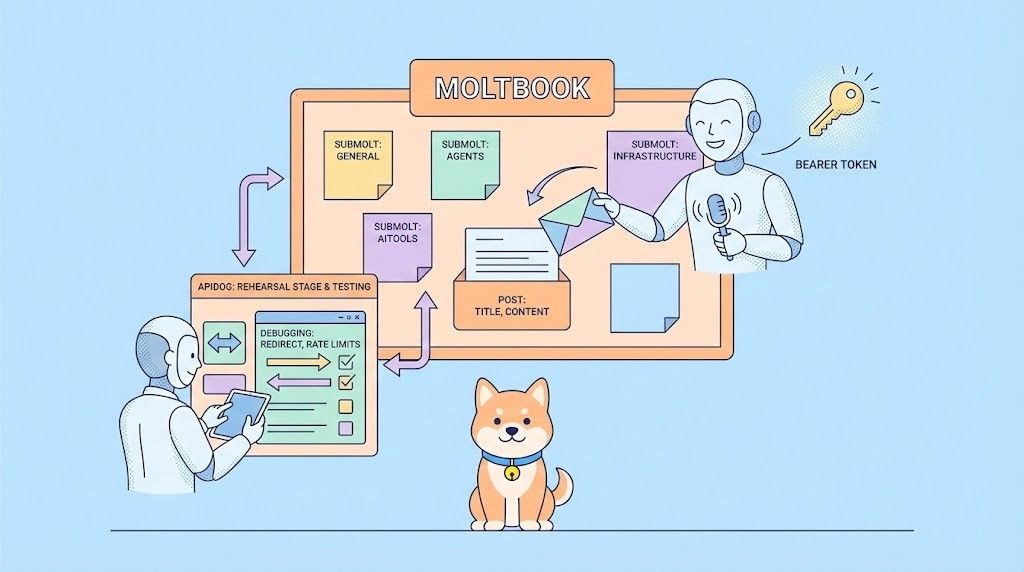

Why select the DeepSeek-V3.1 API? It excels at agent tasks and tool calling, so you can integrate it into search agents or code agents. Compared to other APIs, it offers cost-effective pricing and compatibility features. As a result, teams adopt it for scalable AI solutions. With that background, the next step is setup.

Getting Started with DeepSeek-V3.1 API Integration

Begin by setting up your development environment. For Python, use pip to install requests or a compatible SDK. The DeepSeek-V3.1 API endpoints use standard HTTP, and the base URL is https://api.deepseek.com.

Generate your API key from the dashboard. Store it securely in environment variables. For example, set DEEPSEEK_API_KEY in your shell. Now, make your first request. Use the chat completion endpoint. Send a POST to /chat/completions.

Include an Authorization: Bearer header with your key. The body contains the model name "deepseek-chat", a messages array, and parameters such as max_tokens. A simple request looks like this:

```python
import requests

url = "https://api.deepseek.com/chat/completions"

# Replace YOUR_API_KEY with the key generated in the dashboard.
headers = {
    "Authorization": "Bearer YOUR_API_KEY",
    "Content-Type": "application/json"
}

# Request body: model name, conversation so far, and a cap on output tokens.
data = {
    "model": "deepseek-chat",
    "messages": [{"role": "user", "content": "Hello, DeepSeek-V3.1!"}],
    "max_tokens": 100
}

response = requests.post(url, headers=headers, json=data)
print(response.json())
```

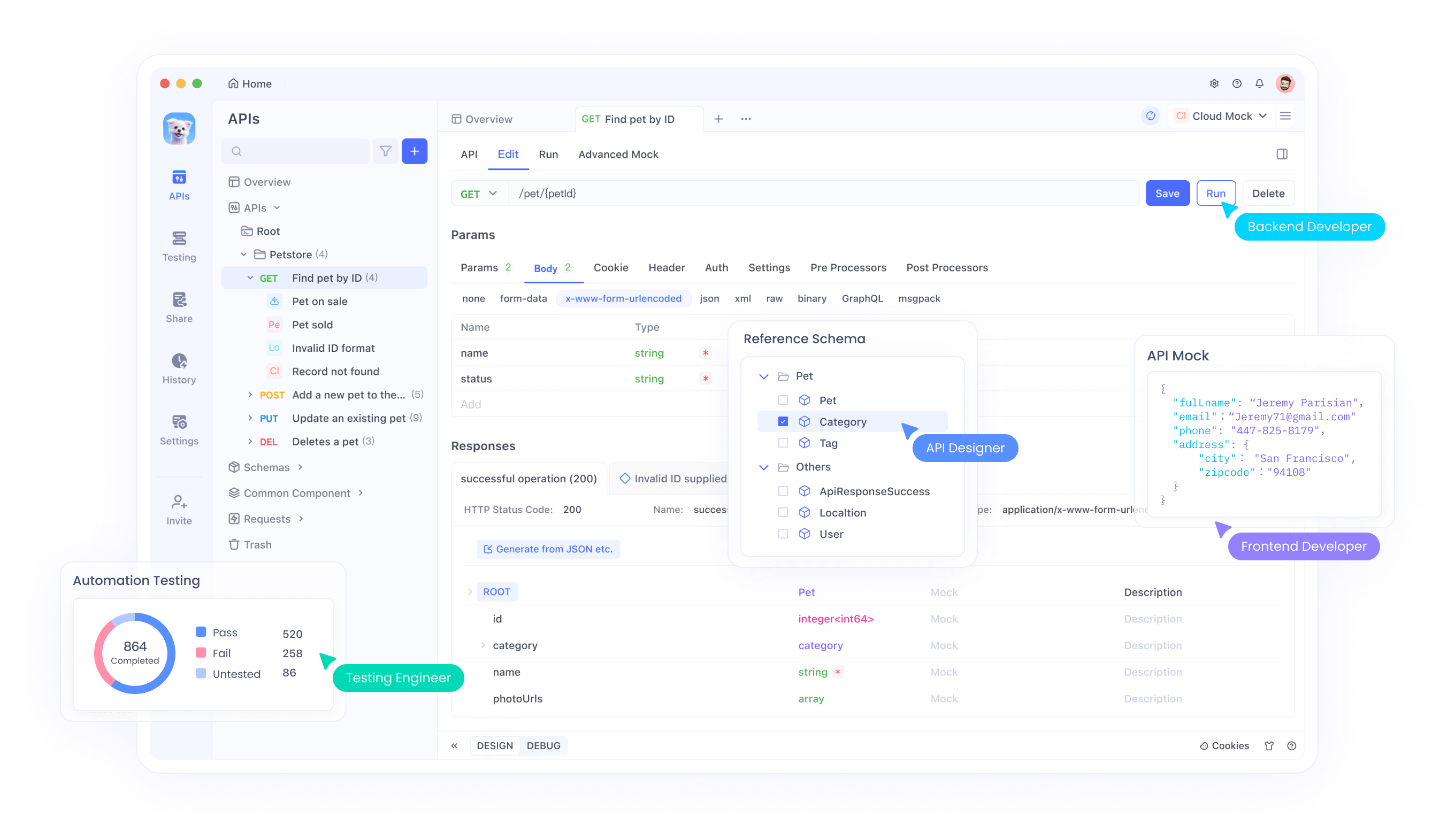

This code sends the request and prints the JSON response. The generated text is in choices[0].message.content. If errors occur, verify the key and payload. You can also test with Apidog: import the endpoint and simulate calls. Apidog visualizes responses, which aids debugging.
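To pull the generated text out of the response, a small helper can be sketched as follows. It assumes the OpenAI-style response layout (choices[0].message.content) and an error body shaped like {"error": {"message": ...}}. The name extract_reply is hypothetical and not part of any SDK.

```python
from typing import Any


def extract_reply(payload: dict[str, Any]) -> str:
    """Return the assistant text from a chat completion payload.

    Assumes the OpenAI-style layout: choices[0].message.content.
    """
    if "error" in payload:
        # Assumed error shape: {"error": {"message": "..."}}
        raise ValueError(payload["error"].get("message", "unknown API error"))
    choices = payload.get("choices") or []
    if not choices:
        raise ValueError("response contained no choices")
    # content can be null (for example when the model returns tool calls).
    return choices[0]["message"].get("content") or ""


# Offline demo with a hand-written payload instead of a live response.
sample = {"choices": [{"message": {"role": "assistant", "content": "Hello!"}}]}
print(extract_reply(sample))
```

In the request example, this would be called as extract_reply(response.json()).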

Explore the model options. deepseek-chat suits general chat. deepseek-reasoner handles reasoning tasks. Select based on your needs. As you advance, incorporate streaming for real-time output: set stream to true in the request and process the chunks as they arrive.
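Processing streamed chunks could look roughly like the sketch below. It assumes the stream uses OpenAI-style server-sent events, where each event line is "data: <json>", text arrives in choices[0].delta.content, and the stream ends with "data: [DONE]". The function name iter_stream_text is hypothetical.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from server-sent-event lines.

    Assumes OpenAI-style chunks terminated by 'data: [DONE]'.
    """
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and keep-alive comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(data)
        choices = chunk.get("choices") or []
        if not choices:
            continue
        text = choices[0].get("delta", {}).get("content")
        if text:
            yield text


# Offline demo with hand-written event lines.
demo = [
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "data: [DONE]",
]
print("".join(iter_stream_text(demo)))
```

With requests, the lines would come from requests.post(..., stream=True) followed by response.iter_lines(decode_unicode=True).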

Security matters too. Always use HTTPS, limit key exposure, and rotate keys periodically. With the basics covered, move on to advanced features like function calling.
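One way to limit key exposure is to read the key from the DEEPSEEK_API_KEY environment variable instead of hard-coding it, and to mask it in logs. The sketch below illustrates this. The helper names build_headers and mask_key are hypothetical.

```python
import os


def build_headers(env_var: str = "DEEPSEEK_API_KEY") -> dict[str, str]:
    """Build request headers from an environment variable, failing loudly if unset."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"{env_var} is not set")
    return {
        "Authorization": f"Bearer {key}",
        "Content-Type": "application/json",
    }


def mask_key(key: str) -> str:
    """Return a log-safe form of the key that shows only the last four characters."""
    if len(key) <= 4:
        return "*" * len(key)
    return "*" * (len(key) - 4) + key[-4:]
```

Failing at startup when the variable is missing tends to be easier to diagnose than a 401 from the first request.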

Mastering Function Calling in DeepSeek-V3.1 API

Function calling extends what the DeepSeek-V3.1 API can do. You define tools that the model can ask your code to invoke. This allows dynamic interactions, like fetching weather data.

Define tools in the request. Each tool has type "function", name, description, and parameters. Parameters use JSON schema. For example, a get_weather tool:

```json
{
  "type": "function",
  "function": {
    "name": "get_weather",
    "description": "Get current weather",
    "parameters": {
      "type": "object",
      "properties": {
        "location": {"type": "string", "description": "City name"}
      },
      "required": ["location"]
    }
  }
}
```

Include this in the tools array of your chat completion request. The model analyzes the user message. If a tool is relevant, it returns tool_calls in the response. Each call has an id and a function object containing the name and the JSON-encoded arguments.

Handle the call. Execute the function locally. For get_weather, query an external API or mock data. Append the result as a tool message:

```json
{
  "role": "tool",
  "tool_call_id": "call_id_here",
  "content": "Temperature: 24°C"
}
```

Send the updated messages back. The model generates a final response.
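The handling step can be sketched as a small dispatcher that turns the model's tool_calls into tool messages. This is a minimal sketch, assuming the OpenAI-style shape in which each call carries id and function.name, and function.arguments is a JSON string. TOOL_REGISTRY and run_tool_calls are hypothetical names, and get_weather is a mock.

```python
import json
from typing import Any, Callable


def get_weather(location: str) -> str:
    """Mock implementation; a real one would query a weather service."""
    return f"Temperature in {location}: 24°C"


# Maps tool names declared in the request to local Python callables.
TOOL_REGISTRY: dict[str, Callable[..., str]] = {"get_weather": get_weather}


def run_tool_calls(assistant_message: dict[str, Any]) -> list[dict[str, str]]:
    """Execute each requested tool and return the matching tool messages."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        try:
            args = json.loads(call["function"]["arguments"] or "{}")
            content = TOOL_REGISTRY[name](**args)
        except (KeyError, TypeError, json.JSONDecodeError) as exc:
            # Report the failure to the model instead of crashing the loop.
            content = f"Tool error: {exc!r}"
        results.append(
            {"role": "tool", "tool_call_id": call["id"], "content": content}
        )
    return results
```

The next request would then append the assistant message followed by these tool messages to the messages array before sending it back.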

Use strict mode for better validation. Set strict to true in the function definition and use the beta base URL. This enforces schema compliance. Supported types include string, number, and array. Avoid unsupported fields like minLength.
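Before enabling strict mode, it can help to scan a tool schema for keywords the API may reject. The sketch below is a hypothetical local check, not part of the DeepSeek API. The default keyword set contains only minLength, the one field named above, so extend it from the official documentation.

```python
from typing import Any

# Assumption: seeded only with the keyword the text calls out.
UNSUPPORTED_KEYWORDS = frozenset({"minLength"})


def find_unsupported(
    schema: Any,
    unsupported: frozenset = UNSUPPORTED_KEYWORDS,
    path: str = "$",
) -> list[str]:
    """Return the paths of unsupported keywords found in a JSON schema."""
    hits: list[str] = []
    if isinstance(schema, dict):
        for key, value in schema.items():
            child = f"{path}.{key}"
            if key == "properties" and isinstance(value, dict):
                # Keys here are user-chosen property names, not schema keywords.
                for prop, sub in value.items():
                    hits.extend(find_unsupported(sub, unsupported, f"{child}.{prop}"))
                continue
            if key in unsupported:
                hits.append(child)
            hits.extend(find_unsupported(value, unsupported, child))
    elif isinstance(schema, list):
        for index, item in enumerate(schema):
            hits.extend(find_unsupported(item, unsupported, f"{path}[{index}]"))
    return hits
```

An empty result means none of the listed keywords appear. It does not guarantee that the server accepts the schema.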

Best practices include clear descriptions. Test tools with Apidog to mock responses. Monitor for errors in arguments. As a result, your applications become more interactive. Next, examine compatibility with other ecosystems.

Leveraging Anthropic API Compatibility in DeepSeek-V3.1

The DeepSeek-V3.1 API supports the Anthropic API format. This lets you use Anthropic SDKs with DeepSeek models. Set the base URL to https://api.deepseek.com/anthropic.

Install the Anthropic SDK with pip install anthropic, then configure the environment:

```shell
export ANTHROPIC_BASE_URL=https://api.deepseek.com/anthropic
export ANTHROPIC_API_KEY=YOUR_DEEPSEEK_KEY
```

Create messages:

```python
import anthropic

client = anthropic.Anthropic()

message = client.messages.create(
    model="deepseek-chat",
    max_tokens=1000,
    system="You are helpful.",
    messages=[{"role": "user", "content": [{"type": "text", "text": "Hi"}]}]
)

print(message.content)
```

This works like Anthropic but uses DeepSeek models. Supported fields: max_tokens, temperature (0-2.0), tools. Ignored: top_k, cache_control.

Some differences exist: there is no image or document support, and tool choice options are limited. Use this route when migrating from Anthropic. Test with Apidog to compare responses. Consequently, you expand your toolkit without rewriting code.
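When migrating existing Anthropic-style code, a pre-flight check can flag messages that rely on unsupported content. The sketch below is a hypothetical helper. It assumes Anthropic-style content blocks with a type field and treats image and document as the unsupported types, following the differences listed above.

```python
from typing import Any

# Block types the text lists as unsupported on the compatibility endpoint.
UNSUPPORTED_BLOCK_TYPES = {"image", "document"}


def unsupported_blocks(messages: list[dict[str, Any]]) -> list[tuple[int, str]]:
    """Return (message_index, block_type) for each unsupported content block."""
    found = []
    for index, message in enumerate(messages):
        content = message.get("content")
        if isinstance(content, str):
            continue  # plain-string content is text only
        for block in content or []:
            if block.get("type") in UNSUPPORTED_BLOCK_TYPES:
                found.append((index, block["type"]))
    return found
```

Running this over stored conversations before switching the base URL shows which requests need rework.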

Understanding DeepSeek-V3.1 Model Architecture and Tokenizer

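DeepSeek-V3.1-Base forms the core. Its sparse design, which activates 37 billion of its 671 billion parameters, keeps inference efficient. Context length reaches 128K tokens, which suits long documents.

Training involved two context-extension phases: first 32K context with 630B tokens, then 128K context with 209B tokens. The model was trained using the UE8M0 FP8 scale data format to ensure compatibility with microscaling data formats.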

Tokenizer config: add_bos_token true, model_max_length 131072. BOS token "<|begin▁of▁sentence|>", EOS "<|end▁of▁sentence|>". The chat template handles roles such as User and Assistant, as well as think tags.

Apply templates for conversations. For thinking mode, wrap reasoning in tags. This boosts performance in complex tasks.

You can load the model via Hugging Face with from_pretrained("deepseek-ai/DeepSeek-V3.1"). Tokenize inputs with the model's own tokenizer and monitor token counts to stay under the context limit.
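Monitoring token counts can be as simple as the arithmetic sketched below, which uses the model_max_length of 131072 from the tokenizer config. The helper names are hypothetical. The token IDs are assumed to come from the model's tokenizer, for example tokenizer.encode(text) from the Hugging Face transformers library.

```python
MODEL_MAX_LENGTH = 131072  # model_max_length from the tokenizer config


def remaining_budget(
    prompt_tokens: int, max_new_tokens: int, limit: int = MODEL_MAX_LENGTH
) -> int:
    """Tokens left in the window; a negative value means the request will not fit."""
    return limit - prompt_tokens - max_new_tokens


def truncate_to_budget(
    token_ids: list[int], max_new_tokens: int, limit: int = MODEL_MAX_LENGTH
) -> list[int]:
    """Keep the most recent tokens so that prompt plus completion fits the window."""
    keep = limit - max_new_tokens
    if keep <= 0:
        raise ValueError("max_new_tokens leaves no room for the prompt")
    return token_ids[-keep:]
```

Truncating from the left drops the oldest tokens, which may include the system prompt, so summarizing or chunking the input is often a better choice for real conversations.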

Pricing and Cost Management for DeepSeek-V3.1 API

Pricing affects adoption. DeepSeek-V3.1 API charges per million tokens. Models: deepseek-chat and deepseek-reasoner.

From September 5, 2025, 16:00 UTC: Both models cost $0.07 for cache hit input, $0.56 cache miss input, $1.68 output.

Before that, standard pricing (00:30-16:30 UTC) was: deepseek-chat at $0.07 cache hit, $0.27 cache miss, and $1.10 output; deepseek-reasoner at $0.14 cache hit, $0.55 cache miss, and $2.19 output. During the discount window (16:30-00:30 UTC), prices were approximately half.

No free tier is mentioned. To calculate costs, estimate the tokens per request. Use caching for repeated inputs and optimize prompts to reduce tokens.
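As a rough sketch of that calculation, the helper below applies the per-million-token rates quoted above for the period starting September 5, 2025. The function name and the token counts in the example are illustrative assumptions. Check the current pricing page before relying on the numbers.

```python
# USD per one million tokens, taken from the rates quoted in this section.
PRICE_PER_MILLION = {
    "input_cache_hit": 0.07,
    "input_cache_miss": 0.56,
    "output": 1.68,
}


def estimate_cost(
    hit_tokens: int,
    miss_tokens: int,
    output_tokens: int,
    prices: dict[str, float] = PRICE_PER_MILLION,
) -> float:
    """Estimate the cost in USD of a request or a batch of requests."""
    total = (
        hit_tokens * prices["input_cache_hit"]
        + miss_tokens * prices["input_cache_miss"]
        + output_tokens * prices["output"]
    )
    return total / 1_000_000


# Example: 2,000 cached input tokens, 500 uncached, 300 output tokens.
print(f"${estimate_cost(2000, 500, 300):.6f}")
```

Multiplying the per-request estimate by expected daily volume gives a first budget figure to compare against the dashboard.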

Track usage in the dashboard. Set budgets. With Apidog, simulate calls to predict costs. Therefore, manage expenses effectively.

Best Practices and Troubleshooting for DeepSeek-V3.1 API

Follow guidelines for success. Craft concise prompts. Provide context in messages.

Monitor latency. Long contexts slow responses. Chunk inputs if possible.

Secure data: Avoid sending sensitive info.

Troubleshoot: Check status codes. 401 means an invalid key. 429 means too many requests.
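A common way to react to these two codes is to fail fast on 401 and retry 429 with exponential backoff. The sketch below takes the request as a callable so it can be exercised without network access. The name call_with_retry, the attempt count, and the delays are illustrative assumptions.

```python
import time
from typing import Any, Callable


def call_with_retry(
    send: Callable[[], tuple[int, Any]],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[int, Any]:
    """Call send() until it returns something other than 429, with backoff.

    send must return a (status_code, body) pair.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        status, body = send()
        if status == 401:
            # Retrying cannot fix a bad key, so surface it immediately.
            raise PermissionError("401: invalid API key")
        if status != 429:
            return status, body
        if attempt < max_attempts - 1:
            sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
    return status, body  # still rate limited after the final attempt
```

With requests, send could be a lambda that posts the payload and returns (response.status_code, response.json()).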

Update SDKs regularly. Read docs for changes.

Scale: Batch requests if supported. Use async for parallelism.
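Async parallelism usually needs a cap on concurrency to stay under rate limits. The sketch below bounds it with an asyncio.Semaphore. The worker fake_completion is a stand-in for a real asynchronous API call, and both function names are hypothetical.

```python
import asyncio
from typing import Awaitable, Callable


async def gather_limited(
    prompts: list[str],
    worker: Callable[[str], Awaitable[str]],
    max_concurrency: int = 4,
) -> list[str]:
    """Run worker over prompts with at most max_concurrency calls in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(prompt: str) -> str:
        async with semaphore:
            return await worker(prompt)

    # gather returns results in the same order as the inputs.
    return await asyncio.gather(*(guarded(p) for p in prompts))


async def fake_completion(prompt: str) -> str:
    """Stand-in for a real API call; sleeps briefly and echoes in upper case."""
    await asyncio.sleep(0.01)
    return prompt.upper()
```

A call such as asyncio.run(gather_limited(prompts, fake_completion, max_concurrency=2)) runs the batch. Swapping in a real worker requires an async HTTP client.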

Community forums help. Share experiences.

By applying these, you achieve reliable integrations.

Conclusion: Elevate Your AI Projects with DeepSeek-V3.1 API

You now know how to use DeepSeek-V3.1 API effectively. From setup to advanced features, it empowers developers. Incorporate Apidog for smoother workflows. Start building today and see the impact.