DeepSeek Open Source Week showcased a new wave of open-source AI infrastructure tools that bring advanced model training, efficient parallelism, and high-performance data handling within reach of engineering teams. If you work with large-scale APIs, backend systems, or data pipelines, these releases target bottlenecks you are likely to hit as you scale: GPU utilization, inter-GPU communication, and data loading.

This guide summarizes the week’s most impactful releases, each designed to remove a real bottleneck in modern AI and data workflows. It also explains how pairing them with an API development tool such as Apidog can further boost productivity.

Why DeepSeek’s Open Source Projects Matter to API and Backend Developers

Modern AI and API-driven applications face challenges around speed, scalability, and efficient resource usage. DeepSeek’s open-source repositories tackle these issues head-on:

- Faster model inference and training for large language models (LLMs) and Mixture-of-Experts (MoE) architectures

- Better hardware and memory utilization on the latest GPUs

- Streamlined data access across distributed systems

- Easier integration and customization for teams of all sizes

Below, we break down each tool—complete with practical takeaways for backend and API-focused teams.

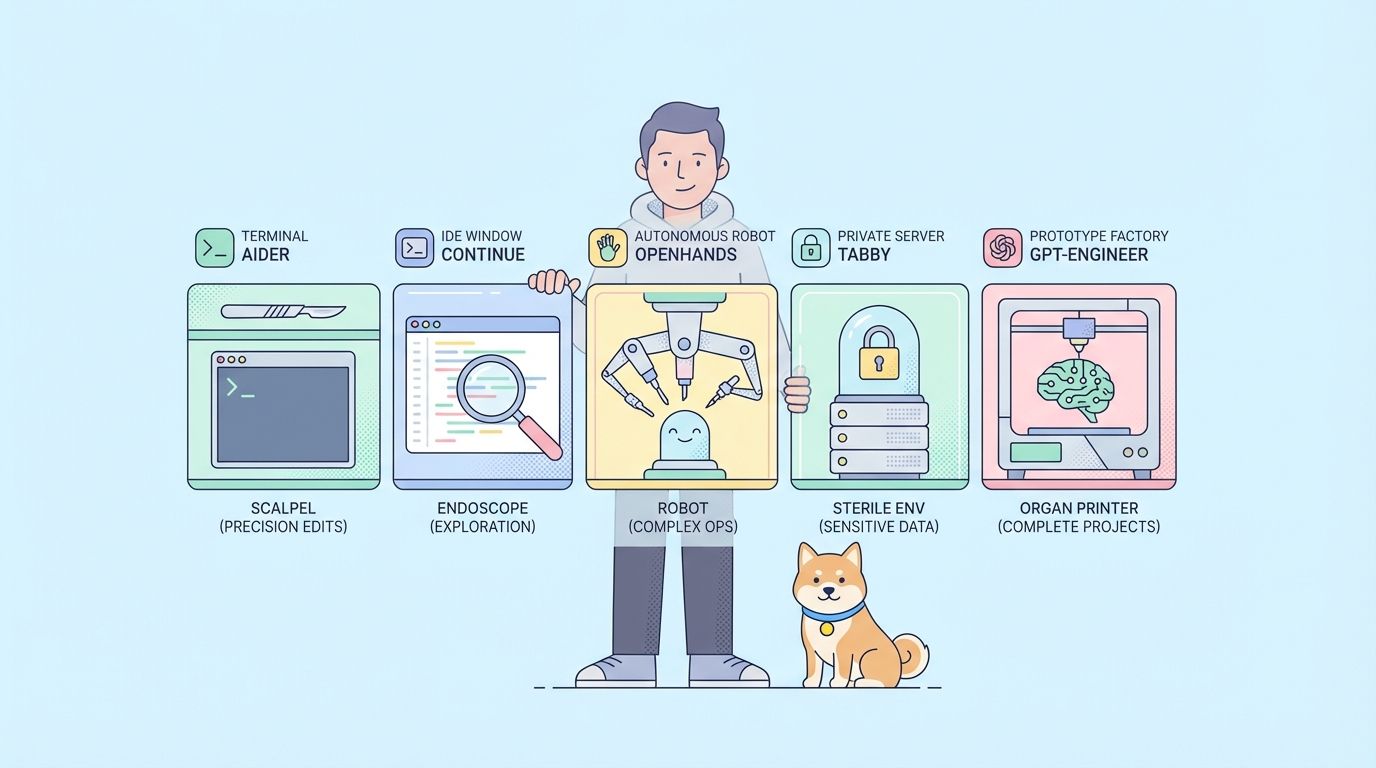

DeepSeek Open Source Week Releases: Quick Reference

| Repository Name | Description | GitHub Link |
|---|---|---|
| FlashMLA | Efficient MLA decoding kernel for Hopper GPUs | FlashMLA |
| DeepEP | Communication library for Mixture-of-Experts models | DeepEP |
| DeepGEMM | Optimized General Matrix Multiplication library | DeepGEMM |
| DualPipe: Optimized Parallelism Strategies | Framework for optimizing parallelism in distributed deep learning | Optimized Parallelism Strategies |
| 3FS: Fire-Flyer File System | Distributed file system optimized for ML workflows | Fire-Flyer File System |
| DeepSeek-V3/R1 Inference System | Large-scale inference system using cross-node Expert Parallelism | DeepSeek-V3/R1 Inference System |

API Development Tip: Accelerate Your Workflow with Apidog

Optimizing parallelism and data access is only part of the equation for high-performance systems. Efficient API development and testing are just as crucial—especially when connecting microservices, ML models, or data pipelines.

Apidog offers a unified platform to design, document, test, and mock APIs. For backend and AI engineers, this means:

- Automated testing for RESTful and GraphQL endpoints

- Seamless integration with CI/CD and data pipelines

- Rapid debugging and documentation, reducing manual overhead

By pairing DeepSeek’s open-source tools (like DualPipe and 3FS) with a robust API workflow in Apidog, you can build, test, and scale high-performance systems with fewer bottlenecks.


Day 1: FlashMLA — High-Performance Decoding for Hopper GPUs

FlashMLA is an open-source decoding kernel designed for NVIDIA Hopper GPUs, built to maximize throughput and minimize latency in AI workloads. Here’s why it’s relevant for backend teams:

- Extreme Performance: Reaches up to 3000 GB/s of memory bandwidth in memory-bound configurations and 580 TFLOPS in compute-bound configurations on H800 SXM5 GPUs, enabling low-latency inference for demanding applications (e.g., LLM APIs, real-time analytics).

- Efficient Sequence Handling: Optimized for variable-length sequences—ideal for use cases like streaming text generation or adaptive dialog systems.

- Advanced Memory Management: BF16 support and a paged KV cache (block size 64) reduce memory overhead for variable-length sequences while keeping precision high.

- Open Collaboration: Built on lessons from FlashAttention and CUTLASS, FlashMLA invites community contributions for rapid evolution.
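To make the paged KV cache idea concrete, here is a minimal pure-Python sketch of the bookkeeping only: each sequence's cache lives in fixed-size blocks drawn from a shared pool, and a per-sequence block table maps logical token positions to physical blocks. The class and method names (`PagedKVCache`, `append`, `lookup`, `release`) are hypothetical and are not FlashMLA's interface; the real kernel reads BF16 key/value tensors through such a block table on the GPU, which this sketch does not model.

```python
from collections import deque

BLOCK_SIZE = 64  # tokens per cache block (illustrative choice)


class PagedKVCache:
    """Toy bookkeeping for a paged KV cache (block indices only, no tensors)."""

    def __init__(self, num_blocks: int):
        self.free_blocks = deque(range(num_blocks))  # pool of unused physical blocks
        self.block_tables = {}  # seq_id -> physical block ids, in logical order
        self.lengths = {}       # seq_id -> number of tokens cached so far

    def append(self, seq_id: int, num_tokens: int = 1) -> None:
        """Reserve slots for new tokens, allocating blocks only as they are needed."""
        table = self.block_tables.setdefault(seq_id, [])
        new_length = self.lengths.get(seq_id, 0) + num_tokens
        blocks_needed = -(-new_length // BLOCK_SIZE) - len(table)  # ceiling division
        if blocks_needed > len(self.free_blocks):
            raise MemoryError("KV cache pool exhausted")
        for _ in range(blocks_needed):
            table.append(self.free_blocks.popleft())
        self.lengths[seq_id] = new_length

    def lookup(self, seq_id: int, position: int) -> tuple:
        """Map a logical token position to (physical block id, offset within the block)."""
        if not 0 <= position < self.lengths.get(seq_id, 0):
            raise IndexError("token position is not cached")
        return self.block_tables[seq_id][position // BLOCK_SIZE], position % BLOCK_SIZE

    def release(self, seq_id: int) -> None:
        """Return a finished sequence's blocks to the pool for reuse."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=8)
cache.append(seq_id=0, num_tokens=100)  # a long request spans two blocks
cache.append(seq_id=1, num_tokens=10)   # a short request uses a single block
print(cache.lookup(0, 99))  # (1, 35)
```

Because blocks are allocated on demand, a 10-token request holds one block instead of a slot sized for the longest possible sequence, which is what lets variable-length requests share GPU memory efficiently.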

Example: API developers exposing LLM endpoints can use FlashMLA to reduce latency, supporting faster user-facing applications with fewer hardware resources.

Day 2: DeepEP — Scaling Mixture-of-Experts Model Communication

DeepEP solves a core challenge in scaling Mixture-of-Experts (MoE) models: efficient, low-latency GPU communication.

Key Benefits for Engineering Teams

- Optimized all-to-all communication—crucial for large MoE or distributed models

- Seamless integration with NVLink and RDMA for both intra- and inter-node use

- Two kernel families: high-throughput kernels for training and inference prefilling, and low-latency kernels for inference decoding

- Native FP8 dispatch for memory and compute efficiency
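DeepEP's kernels move data between GPUs over NVLink and RDMA, but the communication pattern they accelerate is easy to model. This single-process sketch assumes top-k routing and experts assigned to ranks in contiguous groups. "Dispatch" sends each token to the ranks hosting its selected experts, and "combine" brings the expert outputs back and mixes them with the router weights. The functions below are hypothetical illustrations and do not mirror DeepEP's signatures.

```python
from collections import defaultdict


def dispatch(topk_experts, experts_per_rank):
    """Group (token, expert) pairs by the rank (GPU) that hosts each expert.

    topk_experts[t] lists the expert ids the router picked for token t. The
    result is what each rank would receive in the dispatch all-to-all.
    """
    send = defaultdict(list)
    for token_id, experts in enumerate(topk_experts):
        for expert_id in experts:
            send[expert_id // experts_per_rank].append((token_id, expert_id))
    return dict(send)


def combine(expert_outputs, topk_experts, topk_weights):
    """Weighted sum of each token's expert outputs (the combine all-to-all).

    expert_outputs[(token_id, expert_id)] is a scalar here; real models
    exchange hidden-state vectors.
    """
    return [
        sum(w * expert_outputs[(t, e)] for e, w in zip(experts, weights))
        for t, (experts, weights) in enumerate(zip(topk_experts, topk_weights))
    ]


# 3 tokens, 4 experts spread over 2 ranks, top-2 routing.
tokens = [1.0, 2.0, 3.0]
topk_experts = [[0, 3], [1, 2], [2, 3]]
topk_weights = [[0.5, 0.5], [0.25, 0.75], [1.0, 0.0]]

send = dispatch(topk_experts, experts_per_rank=2)
print({rank: len(pairs) for rank, pairs in send.items()})  # {0: 2, 1: 4}

# Toy experts: expert e scales its input by (e + 1).
outputs = {(t, e): tokens[t] * (e + 1) for pairs in send.values() for t, e in pairs}
print(combine(outputs, topk_experts, topk_weights))  # [2.5, 5.5, 9.0]
```

Note that rank 1 receives twice as many token-expert pairs as rank 0. That kind of skew is why fast all-to-all kernels and expert load balancing matter together.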

Practical Use Case: Running large recommendation systems or real-time analytics on MoE models? DeepEP is designed to keep expert communication from becoming the bottleneck, so you can scale out across GPUs and nodes without giving up throughput.

Day 3: DeepGEMM — FP8 Matrix Multiplication for Modern AI

DeepGEMM is an ultra-lightweight, FP8-optimized GEMM library for fast, efficient matrix computations—a core operation in AI and data science.

Why Backend and API Developers Should Care

- FP8 Precision: Reduces memory and compute requirements, ideal for cost-effective AI deployments

- Minimal Dependencies: ~300 lines of core logic—easy to integrate, audit, and customize

- JIT Compilation: Kernels are compiled at runtime by a lightweight just-in-time module, so there is no kernel compilation step at install time

- Versatility: Supports both dense and MoE (grouped) GEMM layouts, with performance that matches or exceeds expert-tuned kernels across many matrix shapes
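The FP8 trade-off can be illustrated without a GPU. This sketch emulates FP8 E4M3 rounding (3 mantissa bits, largest finite value 448) in pure Python, gives each block of K values its own scale factor, and accumulates the products in full precision before rescaling, which is the general idea behind fine-grained scaling. The helper names, the rounding emulation, and the block size of 4 (chosen only so the tiny example has more than one block) are illustrative assumptions; DeepGEMM itself runs CUDA kernels on Hopper tensor cores and exposes a different interface.

```python
import math

E4M3_MAX = 448.0  # largest finite FP8 E4M3 value


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest FP8 E4M3 value (3 mantissa bits), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    _, e = math.frexp(abs(x))       # abs(x) = m * 2**e with 0.5 <= m < 1
    exponent = max(e - 1, -6)       # clamp to the smallest normal exponent (subnormals below it)
    step = 2.0 ** (exponent - 3)    # spacing between adjacent E4M3 values at this exponent
    return math.copysign(min(round(abs(x) / step) * step, E4M3_MAX), x)


def quantize_blocks(vec, block):
    """Scale each block so its largest magnitude maps to 448, then round to E4M3."""
    blocks = []
    for i in range(0, len(vec), block):
        chunk = vec[i:i + block]
        scale = (max(abs(v) for v in chunk) or 1.0) / E4M3_MAX  # guard all-zero blocks
        blocks.append((scale, [round_to_e4m3(v / scale) for v in chunk]))
    return blocks


def fp8_matmul(a, b_t, block=4):
    """Approximate A @ B with block-scaled FP8 inputs; b_t holds B's columns as rows.

    Each block's FP8 dot product is accumulated in a Python float (standing in
    for higher-precision accumulation) and multiplied by both blocks' scales.
    """
    qa = [quantize_blocks(row, block) for row in a]
    qb = [quantize_blocks(col, block) for col in b_t]
    return [
        [
            sum(sa * sb * sum(p * q for p, q in zip(xa, xb))
                for (sa, xa), (sb, xb) in zip(ra, cb))
            for cb in qb
        ]
        for ra in qa
    ]


a = [[0.1 * (i + j) for j in range(8)] for i in range(2)]        # A is 2 x 8
b_t = [[1.0 / (1 + i + j) for j in range(8)] for i in range(3)]  # B is 8 x 3
exact = [[sum(x * y for x, y in zip(row, col)) for col in b_t] for row in a]
approx = fp8_matmul(a, b_t)
worst = max(abs(p - q) / abs(q) for rp, rq in zip(approx, exact) for p, q in zip(rp, rq))
print(f"worst relative error: {worst:.3%}")
```

Per-block scales keep each block's values inside the narrow FP8 range, so precision loss stays bounded by the 3-bit mantissa rather than by outliers elsewhere in the matrix.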

Use Case: If your backend triggers large AI workloads (like batched inference or training), DeepGEMM can help you achieve higher throughput and lower costs.

Day 4: DualPipe — Advanced Pipeline Parallelism & Load Balancing

DualPipe introduces a bidirectional pipeline parallelism algorithm that overlaps computation and communication, keeping GPUs busy and cutting down on a common source of wasted GPU time.

Highlights for Technical Teams

- Reduces pipeline bubbles (GPU idle time) during model training

- Solves cross-node communication bottlenecks for LLMs and MoE architectures

- Integrates with EPLB (Expert-Parallel Load Balancer) to dynamically balance workloads across GPUs

- Open-source and modular for easy adoption and community-driven upgrades
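The load-balancing idea behind EPLB can be sketched with a simple greedy heuristic: replicate the most heavily loaded experts so their traffic can be split, then assign expert replicas to GPUs, always placing the heaviest remaining replica on the least-loaded GPU. This is an illustrative simplification under assumed inputs (a list of per-expert load estimates), not EPLB's actual algorithm or interface. It ignores constraints such as node topology and equal replica counts per GPU.

```python
import heapq


def replicate_experts(loads, num_replicas):
    """Add extra replicas one at a time to the expert with the highest load per replica."""
    replicas = [1] * len(loads)
    for _ in range(num_replicas):
        hottest = max(range(len(loads)), key=lambda e: loads[e] / replicas[e])
        replicas[hottest] += 1
    return replicas


def pack_onto_gpus(loads, replicas, num_gpus):
    """Greedy packing: the heaviest remaining replica goes to the least-loaded GPU."""
    items = []
    for expert, (load, count) in enumerate(zip(loads, replicas)):
        items += [(load / count, expert)] * count  # each replica serves an equal share
    items.sort(reverse=True)
    heap = [(0.0, gpu) for gpu in range(num_gpus)]  # (current load, gpu id)
    placement = {gpu: [] for gpu in range(num_gpus)}
    for share, expert in items:
        gpu_load, gpu = heapq.heappop(heap)
        placement[gpu].append(expert)
        heapq.heappush(heap, (gpu_load + share, gpu))
    gpu_loads = {gpu: load for load, gpu in sorted(heap, key=lambda item: item[1])}
    return placement, gpu_loads


loads = [90, 30, 20, 20, 10, 10]  # estimated tokens per expert (made-up numbers)
replicas = replicate_experts(loads, num_replicas=2)
placement, gpu_loads = pack_onto_gpus(loads, replicas, num_gpus=4)
print(replicas)   # [3, 1, 1, 1, 1, 1]
print(gpu_loads)  # {0: 50.0, 1: 50.0, 2: 40.0, 3: 40.0}
```

Without the two extra replicas, expert 0 alone would put 90 units of work on a single GPU; with them, the busiest GPU carries 50 against an ideal average of 45.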

Example: Teams struggling with slow model training cycles can use DualPipe and EPLB to shorten training times, freeing up resources for experimentation and faster iteration.

Day 5: 3FS (Fire-Flyer File System) — High-Speed Distributed Data Access

3FS is a distributed, parallel file system built for high-speed access to massive datasets—solving data bottlenecks in AI and analytics pipelines.

Key Features

- Parallel data access across nodes, minimizing latency and maximizing throughput

- Designed for SSDs and RDMA networks for optimal hardware utilization

- Proven scalability: Aggregate read throughput of up to 6.6 TiB/s in a 180-node cluster

- Fully open-source and customizable for unique workflows
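The core idea behind parallel data access is striping. A file is split into fixed-size chunks spread across storage nodes, so a client can fetch many chunks at once and aggregate the bandwidth of every node. For scale, the 180-node figure above works out to roughly 6.6 TiB/s ÷ 180 ≈ 37.5 GiB/s per node. The sketch below models striping in-process with a thread pool. The chunk size, the round-robin placement, and the `StorageNode` class are hypothetical stand-ins rather than 3FS's client API, and the real system adds replication and an RDMA transport that this sketch does not attempt.

```python
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 4  # bytes per chunk; tiny so the example is easy to follow


class StorageNode:
    """Hypothetical storage node holding chunks keyed by (file name, chunk index)."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.chunks = {}

    def read_chunk(self, name, index):
        return self.chunks[(name, index)]


def write_striped(nodes, name, data):
    """Split data into chunks and place chunk i on node i % len(nodes)."""
    num_chunks = (len(data) + CHUNK_SIZE - 1) // CHUNK_SIZE
    for i in range(num_chunks):
        nodes[i % len(nodes)].chunks[(name, i)] = data[i * CHUNK_SIZE:(i + 1) * CHUNK_SIZE]
    return num_chunks


def read_striped(nodes, name, num_chunks, max_workers=8):
    """Fetch all chunks concurrently and reassemble them in order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, so the join restores the file.
        parts = pool.map(lambda i: nodes[i % len(nodes)].read_chunk(name, i), range(num_chunks))
        return b"".join(parts)


nodes = [StorageNode(n) for n in range(3)]
data = b"training-shard-0001: tokens..."
num_chunks = write_striped(nodes, "shard-0001", data)
print(num_chunks, [len(node.chunks) for node in nodes])  # 8 [3, 3, 2]
print(read_striped(nodes, "shard-0001", num_chunks) == data)  # True
```

Because consecutive chunks live on different nodes, a large sequential read turns into many independent requests, which is what lets throughput grow with the number of nodes.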

Practical Example: Backend teams managing large training corpora or streaming data can adopt 3FS to reduce data loading times, speeding up both AI training and analytics.

Day 6: DeepSeek-V3/R1 Inference System — Efficient Large-Scale AI Inference

DeepSeek-V3/R1 Inference System brings a holistic approach to large-scale AI inference using cross-node Expert Parallelism. It optimizes both throughput and latency for serving advanced models in production.

How It Works

- Dynamic parallelism strategies: Adapts batch sizes and expert allocation between prefilling and decoding phases for maximum GPU efficiency.

- Dual-batch overlap: Splits each batch into two micro-batches so that the communication of one micro-batch is hidden behind the computation of the other.

- Load balancers: Actively distribute computation and memory usage across GPUs for optimal performance.
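Dual-batch overlap can be reasoned about with a simple timing model. If a batch is split into two micro-batches, one micro-batch's communication can run while the other computes, so each layer costs roughly the longer of the two phases rather than their sum. The sketch below simulates that schedule with one compute stream and one communication stream. The per-layer timings are made-up inputs, and the model ignores real-world effects such as kernel launch overhead and contention between overlapped work; DeepSeek's production schedule also differs between prefilling and decoding.

```python
def sequential_time(layers):
    """Whole batch, no overlap: every layer computes, then communicates."""
    return sum(compute + comm for compute, comm in layers)


def overlapped_time(layers):
    """Two micro-batches (A and B), each with half the compute and half the communication.

    A micro-batch's compute for layer l+1 must wait for its own layer-l
    communication; otherwise the two streams run independently.
    """
    compute_free = 0.0  # time the compute stream becomes idle
    comm_free = 0.0     # time the communication stream becomes idle
    ready = {"A": 0.0, "B": 0.0}  # time each micro-batch's next input is available
    for compute, comm in layers:
        for mb in ("A", "B"):
            start = max(compute_free, ready[mb])
            compute_free = start + compute / 2
            comm_start = max(comm_free, compute_free)
            comm_free = comm_start + comm / 2
            ready[mb] = comm_free
    return max(ready.values())


layers = [(4.0, 4.0)] * 3  # (compute, communication) time per layer for the full batch
print(sequential_time(layers), overlapped_time(layers))  # 24.0 14.0
```

With equal compute and communication costs, the overlapped schedule approaches three layers × 4.0 = 12.0; the remaining 2.0 is the pipeline fill and drain at the start and end.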

Operational Insights:

- Peak usage reached 278 nodes (8 GPUs each).

- Reports a theoretical cost-profit margin above 500%, assuming every token were billed at DeepSeek-R1 pricing (actual revenue is lower), which illustrates cost efficiency at scale.
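Two of these figures are easy to unpack. The peak footprint is 278 nodes of 8 GPUs each, and a cost-profit margin is (revenue − cost) / cost. With R as theoretical revenue and C as serving cost over the same period, a margin above 500% means theoretical revenue exceeds six times the serving cost:

$$
\begin{aligned}
\text{peak GPUs} &= 278 \times 8 = 2224 \\
\text{margin} &= \frac{R - C}{C} > 5 \iff R > 6C \quad (C > 0)
\end{aligned}
$$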

Engineering teams can draw inspiration from these strategies to architect their own scalable inference systems or optimize existing ones.

Takeaway: Building Efficient AI & API Systems with DeepSeek and Apidog

DeepSeek’s open-source week has armed backend, API, and AI engineers with a new suite of powerful, modular tools—each addressing a real-world bottleneck in modern development. By adopting these solutions and combining them with effective API design and testing workflows in Apidog, technical teams can achieve:

- Faster AI model development and deployment

- Reduced operational costs and resource waste

- Scalable, maintainable systems ready for production workloads

Unlock the full potential of your data and AI pipelines—start exploring these tools, and streamline your API development process with Apidog.