Cursor is emerging as a leading AI-powered coding IDE, seamlessly integrating large language models (LLMs) to accelerate development. One of Cursor’s standout features is its intelligent "Agent," which uses various tools—like web search, file reading, and code execution—to enhance coding productivity. But these powerful AI capabilities come with usage limits that every developer and technical lead should understand to avoid workflow interruptions and manage costs effectively.

This guide breaks down Cursor’s tool call limits, what happens when you reach them, how different modes like MAX impact your workflow, and actionable strategies for efficient usage—so engineering teams and API developers can get the most from AI-driven development.

💡 Looking for an API platform that streamlines teamwork, documentation, and testing? Apidog creates beautiful API documentation, boosts developer productivity, and offers a powerful alternative to Postman at a more accessible price.

What Are Tool Calls in Cursor? Understanding the Limits

When you interact with Cursor’s Agent, the AI doesn’t just answer from its internal knowledge. Instead, it may perform external actions, known as "tool calls," to fetch real-time data or analyze your codebase.

Common tool call examples:

- Web Search: Fetches the latest documentation, library updates, or examples

- File Reading: Analyzes your current project files for context, definitions, or dependencies

- Code Execution: (Where supported) Runs snippets to test or verify behavior

How limits work:

In standard Agent mode, Cursor allows up to 25 tool calls per interaction. For each prompt or command, the Agent can make up to 25 tool calls (searches, file reads, and possibly code execution) before it needs further authorization from you.

What Happens When You Hit the 25 Tool Call Limit?

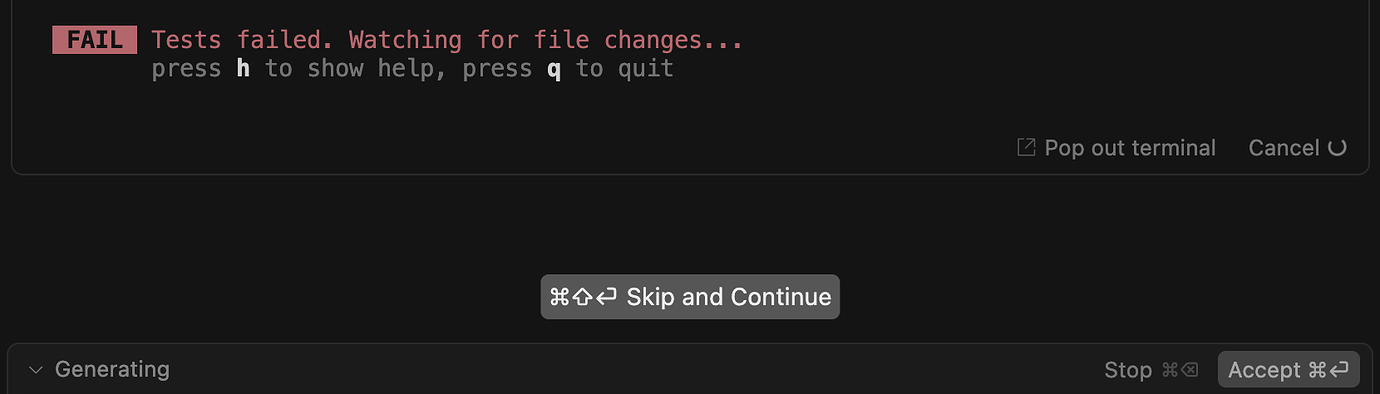

If the AI reaches the 25 tool call ceiling during a single interaction, Cursor will prompt you—typically with a "Continue" or "Skip and Continue" button. This is a checkpoint, not a failure: you’re given the choice to authorize additional actions.

- Pressing "Continue" lets the Agent resume the task with a fresh allowance of tool calls. If the task is complex, you may be prompted to continue multiple times.

- Billing impact: Each "Continue" click counts as a new request, affecting your usage quota—critical for users on free, Pro, or Business plans.

Tip: Frequent "Continue" usage can quickly consume your monthly request allocation. Monitoring your usage and refining prompts can help you avoid hitting these limits unexpectedly.
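To make the request accounting concrete, here is a minimal sketch that estimates how many requests one standard-mode task consumes. It assumes the initial prompt counts as one request and each "Continue" click as one more. The function name and the `calls_per_continue` parameter are hypothetical. In particular, how many extra tool calls a single "Continue" unlocks is an assumption you supply, not a documented constant.

```python
import math

# From the text: standard Agent mode allows 25 tool calls per interaction.
STANDARD_LIMIT = 25


def standard_mode_requests(tool_calls: int, calls_per_continue: int) -> int:
    """Estimate requests billed for one standard-mode task.

    calls_per_continue is an assumption of this sketch: the number of
    extra tool calls a single "Continue" click unlocks.
    """
    if tool_calls < 0 or calls_per_continue <= 0:
        raise ValueError("tool_calls must be >= 0 and calls_per_continue > 0")
    # Tool calls beyond the first 25 each need a "Continue" to be authorized.
    overflow = max(0, tool_calls - STANDARD_LIMIT)
    continues = math.ceil(overflow / calls_per_continue)
    # One request for the initial prompt, plus one per "Continue" click.
    return 1 + continues


print(standard_mode_requests(20, calls_per_continue=25))  # 1
print(standard_mode_requests(60, calls_per_continue=25))  # 3
```

A task that stays under the limit costs a single request, which is why focused prompts pay off.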

How to Avoid Hitting Cursor’s Tool Call Limit: Practical Strategies

For API-centric teams and backend engineers, maximizing Cursor’s efficiency means working within these boundaries. Here’s how:

- Write Clear, Specific Prompts: Vague requests force more tool calls. Example:

  - Instead of: "Fix my code errors."

  - Use: "In user_service.py, I get a TypeError on line 52: '...'. Can you analyze validate_user() starting at line 40 and suggest a fix?"

- Break Large Tasks Into Steps: Divide complex requests into smaller, sequential prompts. For example, first ask for a design outline, then request code generation, then ask for test cases.

- Leverage File Context: Open relevant files in Cursor so the Agent can directly access them, reducing redundant searches or file reads.

- Combine Related Questions: If you have several questions about the same API or library, ask them together. The AI can reuse information and minimize repeated tool usage.

- Monitor Tool Use in Chat: Cursor’s chat often displays search results or file snippets. If you notice irrelevant or redundant tool calls, refine your prompt for better focus.

- Manual Intervention: For simple lookups, sometimes it’s faster to check documentation or code manually, especially if the AI gets stuck.

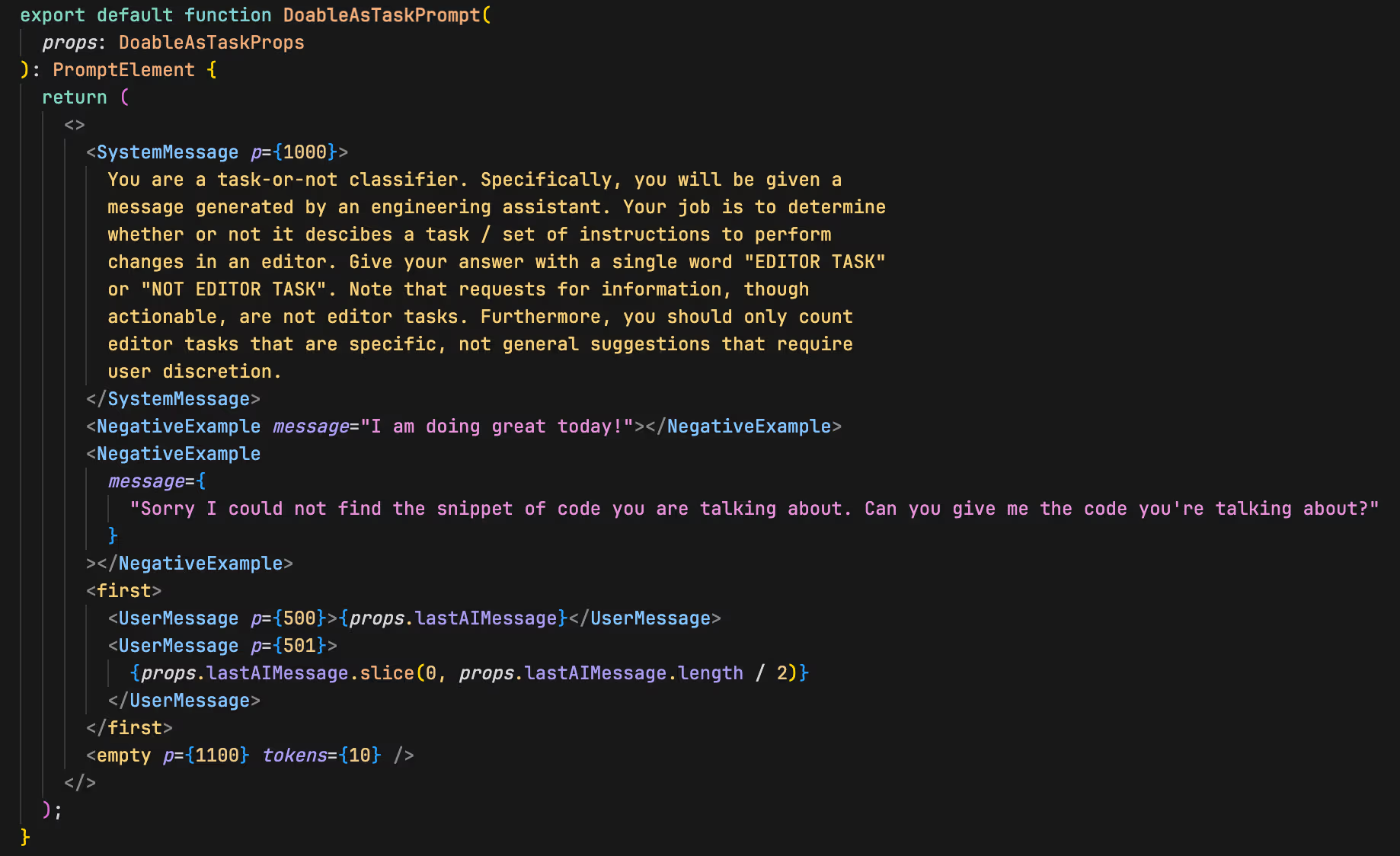

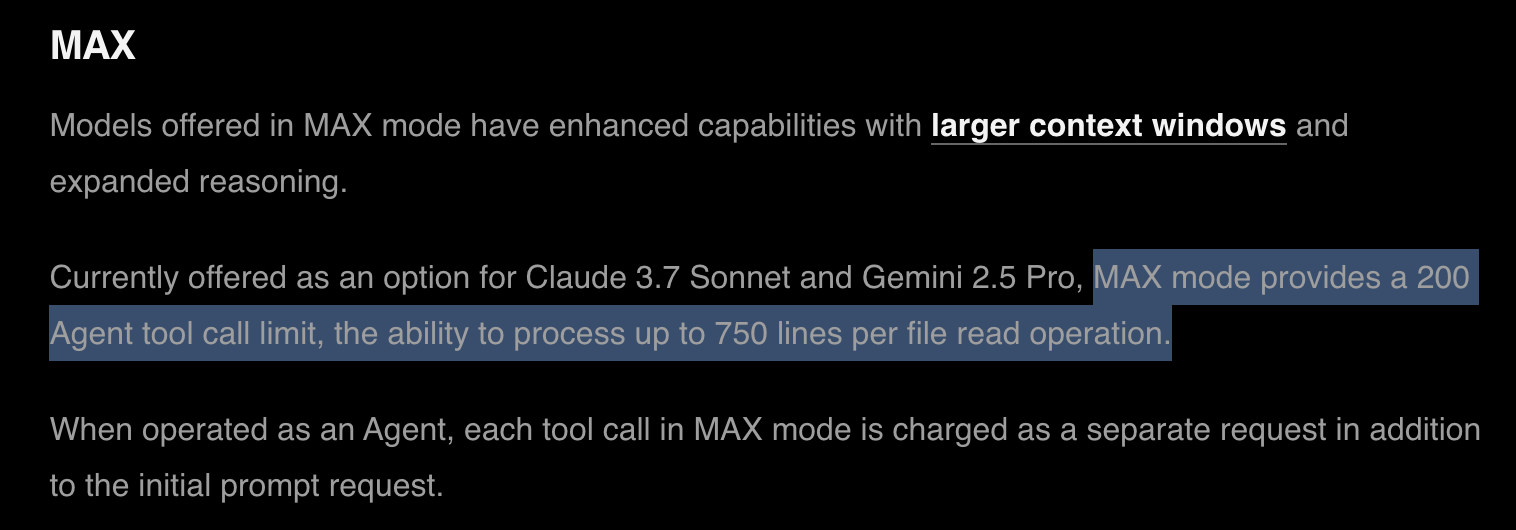

How Does MAX Mode Change Cursor’s Tool Call Limits?

For advanced workflows—like deep research, extensive code refactoring, or multi-step analysis—Cursor’s MAX mode is available for select models (Claude 3.7 Sonnet, Gemini 2.5 Pro).

Key Differences in MAX Mode

- Tool Call Limit: Raises the cap to 200 tool calls per interaction (vs. 25 in standard mode).

- Cost Structure:

  - The initial prompt counts as one request.

  - Each tool call in MAX mode is billed as an additional request (e.g., 1 prompt + 10 tool calls = 11 requests).

Implication:

MAX mode enables much larger, more complex interactions, but can rapidly consume your request quota—especially for tool-heavy tasks. Use MAX mode when your workflow truly demands it.
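The MAX mode arithmetic above can be sketched in a few lines. This is an illustrative calculation based only on the figures in this article (one request for the prompt, one per tool call, a cap of 200 tool calls). The function name is hypothetical and not part of any Cursor API.

```python
# From the text: MAX mode allows up to 200 tool calls per interaction.
MAX_MODE_LIMIT = 200


def max_mode_requests(tool_calls: int) -> int:
    """Requests billed for one MAX-mode interaction: 1 prompt + 1 per tool call."""
    if not 0 <= tool_calls <= MAX_MODE_LIMIT:
        raise ValueError(f"tool_calls must be between 0 and {MAX_MODE_LIMIT}")
    return 1 + tool_calls


print(max_mode_requests(10))   # 11
print(max_mode_requests(200))  # 201
```

At the cap, a single MAX-mode interaction can account for 201 requests, so it is worth estimating tool usage before switching modes.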

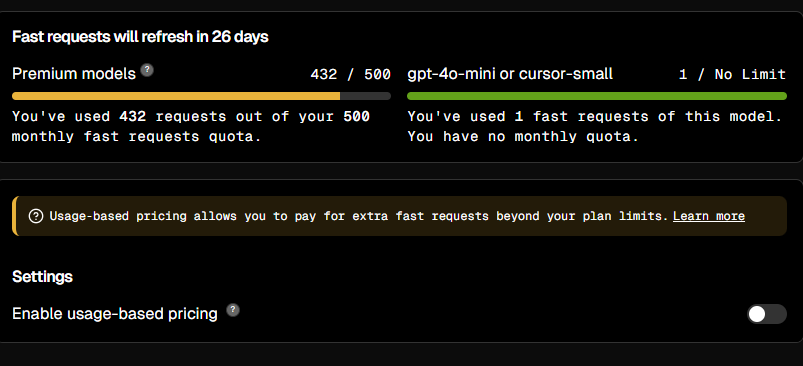

Should You Upgrade to Cursor Pro to Bypass Limits?

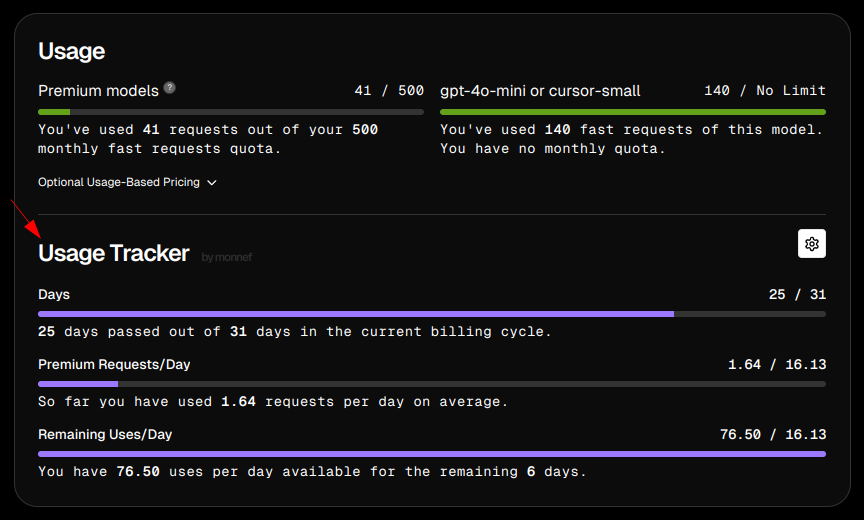

Pro and Business subscribers receive 500 premium requests per month, covering both standard and MAX tool calls (with the same per-request counting). If you exceed this allocation:

- You may experience:

  - Slower response times during peak usage

  - Temporary limits on premium model access

- Usage-Based Pricing: Enable this option in your account settings to continue using premium models after reaching your monthly cap. Additional requests are billed per usage, with clear accounting for standard vs. MAX mode.

Pro tip: For teams running high-volume code reviews, API integrations, or automated testing, monitoring request consumption and understanding cost structures is essential.
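For a rough sense of how far the monthly allocation stretches, the sketch below divides the 500-request quota by the cost of a typical interaction. The function name is hypothetical, and the per-interaction cost is something you estimate yourself. It is not a figure Cursor reports in this form.

```python
# From the text: Pro and Business plans include 500 premium requests per month.
MONTHLY_QUOTA = 500


def interactions_within_quota(requests_per_interaction: int,
                              quota: int = MONTHLY_QUOTA) -> int:
    """How many interactions of a given cost fit in the monthly quota."""
    if requests_per_interaction <= 0:
        raise ValueError("requests_per_interaction must be positive")
    return quota // requests_per_interaction


# A simple standard-mode prompt that needs no "Continue" costs 1 request.
print(interactions_within_quota(1))   # 500
# A MAX-mode prompt with 10 tool calls costs 11 requests.
print(interactions_within_quota(11))  # 45
```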

File Reading Limits: How Much Code Can Cursor’s Agent See?

Cursor’s AI also has file reading limits—the number of lines it can analyze at a time:

- MAX mode: up to 750 lines

- Standard/Other modes: up to 250 lines

If your file exceeds these limits, the Agent may need to perform multiple reads (each counting as a tool call), or could miss context from widely separated code sections. For large files, focus prompts on relevant code regions to optimize tool call efficiency.
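As a rough illustration of why large files cost more, the sketch below counts the reads needed to cover a whole file. It assumes the Agent reads sequential, non-overlapping chunks at the per-read limits quoted above, which simplifies how the Agent actually chooses what to read. The names are hypothetical.

```python
import math

# Per-read line limits quoted in this article.
LINES_PER_READ = {"standard": 250, "max": 750}


def reads_needed(file_lines: int, mode: str = "standard") -> int:
    """Tool calls needed to read an entire file, one full chunk per read."""
    if file_lines < 0:
        raise ValueError("file_lines must be non-negative")
    return math.ceil(file_lines / LINES_PER_READ[mode])


print(reads_needed(1800, "standard"))  # 8
print(reads_needed(1800, "max"))       # 3
```

Under these assumptions, an 1,800-line file uses about a third of the standard 25-call budget on reads alone, so pointing the Agent at a specific function or line range is the cheaper option.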

Track Your Usage and Stay Productive

Cursor’s dashboard and chat interface provide real-time visibility into your tool call usage, including:

- Tool call summaries (search results, file read snippets)

- "Continue" prompts and accepted actions

- MAX mode tool call counts

Best Practice:

Regularly check your usage stats in Cursor’s dashboard to avoid surprises. For API teams, this makes project costs and the payoff from AI-assisted work easier to forecast.
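One simple way to act on those numbers is a straight-line projection of month-end usage. The sketch below assumes you copy the current request count from the dashboard by hand. It does not call any Cursor API, and the function name and the 30-day default are illustrative.

```python
def projected_monthly_requests(used_so_far: int, day_of_month: int,
                               days_in_month: int = 30) -> float:
    """Linear projection of month-end usage from usage to date."""
    if day_of_month <= 0 or day_of_month > days_in_month:
        raise ValueError("day_of_month must be between 1 and days_in_month")
    return used_so_far / day_of_month * days_in_month


# 200 requests used by day 10 projects to 600, above a 500-request quota.
print(projected_monthly_requests(200, 10))  # 600.0
```

If the projection lands above your quota, that is a cue to tighten prompts or reserve MAX mode for the tasks that need it.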

Conclusion: Mastering Tool Call Limits for Efficient AI Development

Cursor’s tool call limits are designed to balance powerful AI assistance with system performance and cost transparency. By understanding the 25-call standard, the impact of "Continue," the capabilities and costs of MAX mode, and file reading constraints, you can tailor your prompts and workflows for optimal efficiency.

For technical teams, it’s about smart interaction: craft focused prompts, leverage the right mode for the task, monitor AI actions, and keep an eye on usage. And if you’re seeking an all-in-one platform for API design, testing, and collaboration, Apidog is built to keep your team moving fast—delivering robust documentation, productivity, and a cost-effective alternative to Postman.