Cursor 2.0 represents a significant advancement in AI-assisted software engineering, integrating frontier-level models with a redesigned interface that prioritizes agentic workflows. Developers now access tools that accelerate coding by handling complex tasks autonomously, from semantic searches across vast codebases to parallel execution of multiple agents.

Cursor 2.0 builds on the foundation of its predecessors, shifting from a traditional IDE to an agent-first environment. The Cursor team ships this version with two core innovations: Composer, its first agent model, and a multi-agent interface that supports concurrent operations. These elements address key challenges in modern software development, such as latency in AI responses and interference between agents working on the same codebase. Moreover, the update incorporates feedback from early testers, ensuring practical utility for professional engineers.

The release aligns with broader trends in AI for coding, where models evolve from simple autocompletion to full-fledged agents capable of planning, executing, and iterating on code. Cursor 2.0 positions itself as a platform for this paradigm, relying on custom training and infrastructure so that Composer completes most turns in under 30 seconds. Furthermore, it maintains compatibility with existing workflows while introducing features that reduce manual intervention.

What Sets Cursor 2.0 Apart in AI Coding Tools

Cursor 2.0 distinguishes itself through its focus on speed and intelligence, achieved via specialized training and architectural choices. The platform employs a mixture-of-experts (MoE) architecture for Composer, enabling efficient handling of long-context inputs essential for large-scale projects. This design allows the model to activate specific experts for coding subtasks, optimizing resource use and response times.
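To make the idea of "activating specific experts" concrete, here is a minimal sketch of generic top-k expert routing. Composer's actual router, expert count, and layer design are not public, so the functions `route_top_k` and `moe_forward`, the toy scalar experts, and the logits below are all illustrative assumptions, not Cursor's implementation.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=2):
    """Return [(expert_index, weight), ...] for the k highest-scoring experts.

    Weights are renormalized over the selected experts only.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of the selected experts; the rest never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Hypothetical toy experts: each one is just a scalar function.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
# Hypothetical router scores for one token; experts 1 and 3 tie for the top.
logits = [0.1, 2.0, -1.0, 2.0]

output = moe_forward(3.0, experts, logits, k=2)
```

Only two of the four experts are evaluated for this input, which is where the compute savings of an MoE layer come from: total parameters can grow while per-token work stays roughly constant.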

In comparison to earlier versions, Cursor 2.0 improves language server (LSP) performance, giving faster diagnostics and hover tooltips, particularly in languages like Python and TypeScript. The editor now raises memory limits dynamically based on system RAM and addresses memory leaks, which improves stability in resource-intensive scenarios. Additionally, the update deprecates features like Notepads in favor of more integrated agent tools, streamlining the user experience.

Users report substantial productivity gains, as evidenced by community feedback on platforms like X. For instance, early adopters praise the seamless transition to agent mode, accessible via settings for existing users. This accessibility ensures developers can experiment without disrupting established habits.

The platform's changelog highlights technical refinements, including optimized text parsing for chat rendering and batched concurrent calls for file operations. These changes reduce latency, making Cursor 2.0 suitable for real-time collaboration in team environments.
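The changelog does not show how the batching is implemented. As a rough illustration of why batching concurrent file calls cuts latency, the sketch below reads many files at once with a concurrency cap. The helper names `read_file` and `read_batch` and the default cap of 8 are assumptions made for this example.

```python
import asyncio
from pathlib import Path


async def read_file(path: Path) -> str:
    # Run the blocking read in a worker thread so the event loop stays free.
    return await asyncio.to_thread(path.read_text, encoding="utf-8")


async def read_batch(paths, max_concurrency: int = 8):
    """Read all paths concurrently, at most max_concurrency at a time.

    Results come back in the same order as the input paths.
    """
    sem = asyncio.Semaphore(max_concurrency)

    async def guarded(p: Path) -> str:
        async with sem:
            return await read_file(p)

    return await asyncio.gather(*(guarded(p) for p in paths))


# Usage (paths are hypothetical):
#   contents = asyncio.run(read_batch([Path("a.py"), Path("b.py")]))
```

Issuing the reads as one batch means total wait time approaches that of the slowest file instead of the sum of all reads, while the semaphore keeps a large request from exhausting file handles or threads.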

Exploring Composer: Cursor's Frontier Agent Model

Composer stands as the cornerstone of Cursor 2.0, a custom-built model trained via reinforcement learning (RL) to excel in software engineering tasks. The model processes requests in a sandboxed environment, utilizing tools like file editing, terminal commands, and codebase-wide semantic search. This training regimen incentivizes efficient behaviors, such as parallel tool usage and evidence-based responses, resulting in emergent capabilities like automatic linter fixes and unit test generation.

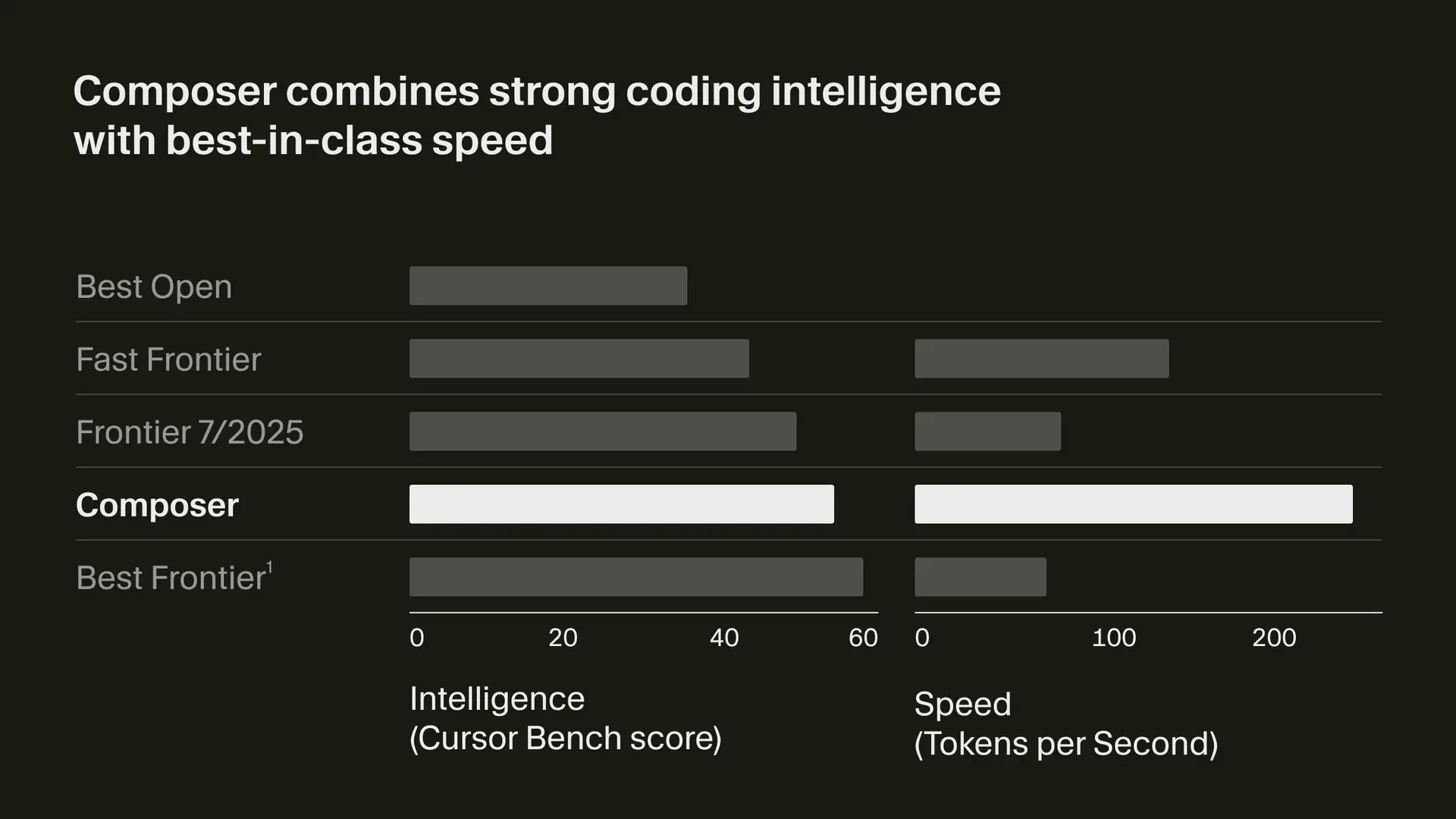

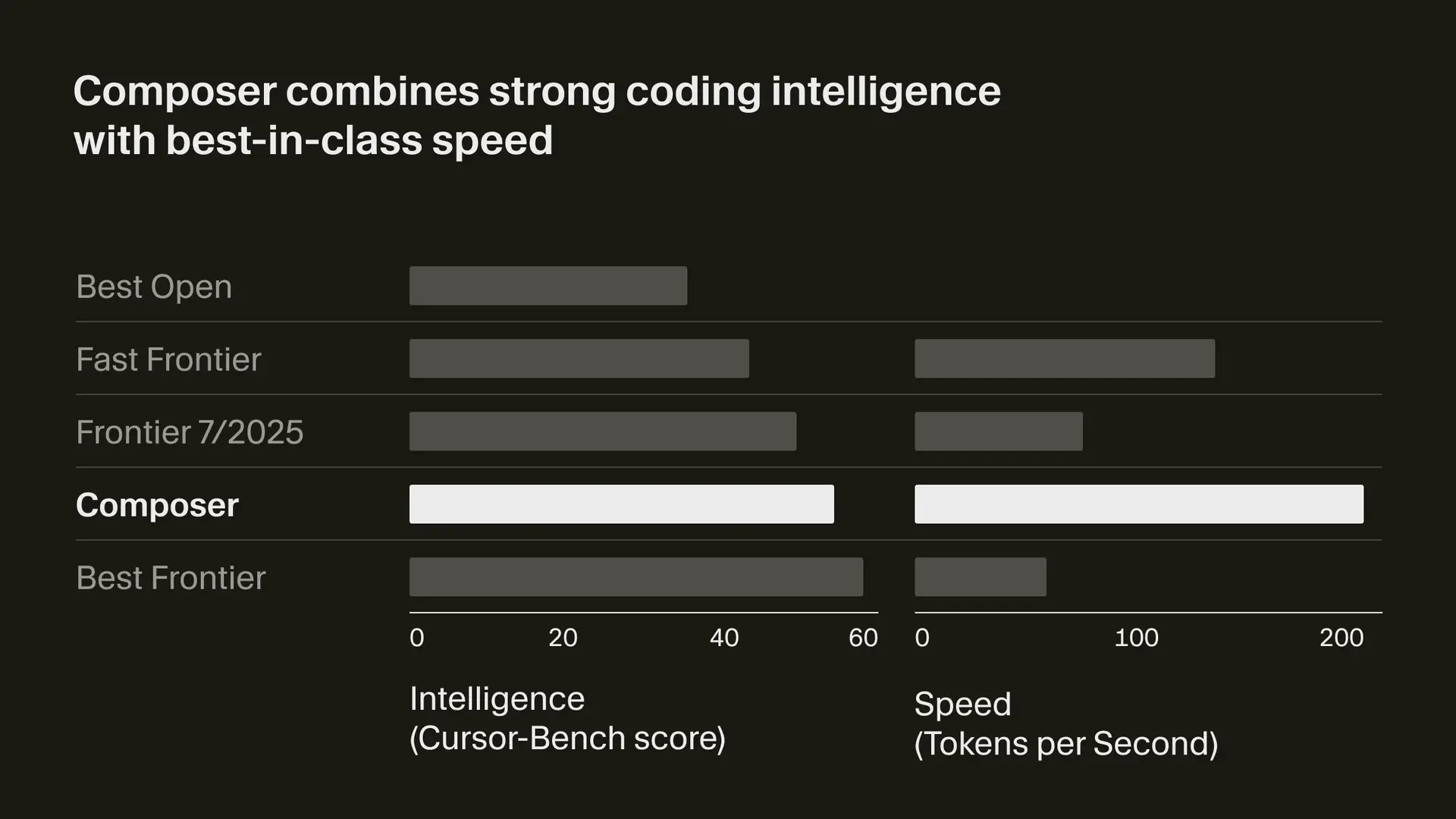

Technically, Composer operates as an MoE language model, supporting extended context windows for comprehensive codebase understanding. Its inference speed reaches up to 200 tokens per second, four times faster than comparable frontier models like GPT-5 or Sonnet 4.5, as benchmarked on Cursor Bench—a dataset of real engineering requests. This benchmark evaluates not only correctness but also adherence to best practices, positioning Composer in the "Fast Frontier" category.
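To put those throughput figures in perspective, the following back-of-the-envelope calculation compares generation time for a single response. The 2,000-token response size is a hypothetical value chosen for illustration, and the baseline rate is simply the quoted figure divided by four; real turns also spend time on tool calls, which this ignores.

```python
def generation_seconds(tokens: int, tokens_per_second: float) -> float:
    """Time to stream `tokens` at a constant decoding rate."""
    return tokens / tokens_per_second


COMPOSER_TPS = 200.0             # "up to" figure quoted above
BASELINE_TPS = COMPOSER_TPS / 4  # implied by the "four times faster" claim

response_tokens = 2_000          # hypothetical response size

fast = generation_seconds(response_tokens, COMPOSER_TPS)
slow = generation_seconds(response_tokens, BASELINE_TPS)
print(f"{fast:.0f} s vs {slow:.0f} s")  # prints "10 s vs 40 s"
```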

The training infrastructure relies on PyTorch and Ray for scalable RL. The model is trained natively at low precision using MXFP8, which enables fast inference without requiring post-training quantization. During training, Composer interfaces with hundreds of concurrent sandboxes, and a rewritten virtual machine scheduler handles the resulting bursty workloads. Because the model is trained inside the same agent harness that Cursor ships, it integrates smoothly into everyday use, allowing developers to maintain flow during iterative coding.

For example, Cursor's own engineers use Composer in their daily work on real tasks, from debugging to feature implementation. Users select it in the new interface, where it can plan and execute a task alongside other models running the same prompt.

However, Composer lags behind top-tier models in raw intelligence for extremely complex problems, trading some depth for speed. Nevertheless, its specialization in coding makes it ideal for low-latency applications.

Multi-Agent Interface: Harnessing Parallelism for Efficiency

Cursor 2.0 introduces a multi-agent interface that runs up to eight agents concurrently, each in its own git worktree or on a remote machine so that their edits cannot conflict. The same parallelism lets multiple models attempt the same prompt, after which the best output is selected. This approach improves success rates on challenging tasks, because each agent works in isolation instead of overwriting the others' changes.
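The isolation mechanism can be approximated by hand with the standard `git worktree add` command. The sketch below is not Cursor's internal implementation: the branch and directory naming scheme, the helper names, and the score-based `pick_best` function are all assumptions made for illustration.

```python
import subprocess
from pathlib import Path

MAX_AGENTS = 8  # concurrency limit stated in the article


def worktree_command(repo: Path, agent_id: int, base: str = "HEAD") -> list[str]:
    """Build the git command that gives one agent its own branch and checkout."""
    branch = f"agent-{agent_id}"                          # hypothetical naming
    path = repo.parent / f"{repo.name}-agent-{agent_id}"  # sibling directory
    return ["git", "-C", str(repo), "worktree", "add",
            "-b", branch, str(path), base]


def create_worktrees(repo: Path, n_agents: int) -> None:
    """Create one isolated worktree per agent (requires git on PATH)."""
    if not 1 <= n_agents <= MAX_AGENTS:
        raise ValueError(f"n_agents must be between 1 and {MAX_AGENTS}")
    for agent_id in range(n_agents):
        subprocess.run(worktree_command(repo, agent_id), check=True)


def pick_best(scores: dict[str, float]) -> str:
    """Return the agent with the highest score, e.g. fraction of tests passed."""
    return max(scores, key=scores.get)
```

Each worktree shares the repository's object store but has its own working directory and branch, so agents can edit the same files without seeing one another's uncommitted changes. How candidate results are scored is left open here; a test pass rate is one plausible signal.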

The interface centers on agents rather than files, allowing users to specify outcomes while agents manage details. Developers access files via a sidebar, or revert to the classic IDE layout for in-depth exploration. Furthermore, the system supports background planning with one model and foreground building with another, enhancing flexibility.

Technical underpinnings include enhanced reliability for cloud agents, with 99.9% uptime and instant startup. The update also refines prompt UI, displaying files as inline pills and automating context gathering—eliminating manual tags like @Definitions.

In practice, this parallelism accelerates development cycles. For instance, running the same query across Composer, GPT-5 Codex, and Sonnet 4.5 yields diverse solutions, from which users choose or merge.

Enterprise features extend this capability, with admin controls for sandboxed terminals and audit logs for team events. Therefore, Cursor 2.0 suits both individual developers and large organizations.

Integrated Browser and Code Review Tools

A standout feature in Cursor 2.0 is the built-in browser tool, now generally available after beta testing. Agents use this to test code, iterate on UI issues, and debug client-side problems by taking screenshots and forwarding DOM information. This integration eliminates the need for external tools, allowing seamless workflows within the editor.

Code review receives similar attention, with simplified viewing of agent-induced changes across files. Users apply or undo diffs with a single action, reducing time spent switching contexts.

Additionally, sandboxed terminals make command execution safer: commands run without internet access unless they are allowlisted.
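One way to picture the allowlist decision is as a small gate in front of every command. The sketch below assumes the allowlist contains program names and that anything ambiguous falls back to the sandbox. Cursor's real policy format and matching rules are not described here, so `execution_mode` and its return values are hypothetical.

```python
import shlex

# Characters that can chain or redirect commands; their presence means the
# first token alone does not describe what will actually run.
SHELL_METACHARS = set(";|&$`<>\n")


def execution_mode(command: str, allowlist: set[str]) -> str:
    """Return "unrestricted" for allowlisted programs, else "sandboxed"."""
    if any(ch in SHELL_METACHARS for ch in command):
        return "sandboxed"
    try:
        tokens = shlex.split(command)
    except ValueError:
        # Unbalanced quotes and similar parse errors are treated as untrusted.
        return "sandboxed"
    if tokens and tokens[0] in allowlist:
        return "unrestricted"
    return "sandboxed"
```

Defaulting to the sandbox on any doubt keeps the failure mode safe: a command that is misclassified loses network access but cannot reach the internet unexpectedly.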

These tools address bottlenecks in agentic coding, where review and testing often slow progress. By embedding them, Cursor 2.0 empowers agents to self-verify outputs, leading to more reliable results.

For example, an agent might run a web app locally via the browser, identify errors, and fix them iteratively. This capability proves invaluable for full-stack development, as noted in reviews where users praise the reduced debugging overhead.

Voice Mode and UI Enhancements

Cursor 2.0 incorporates voice mode, which uses speech-to-text to control the agent. Users can define custom submit keywords in settings that tell the agent to start running, so a spoken request goes straight from dictation to execution. This feature complements the agent's steerability, which allows mid-run interruptions via shortcuts.

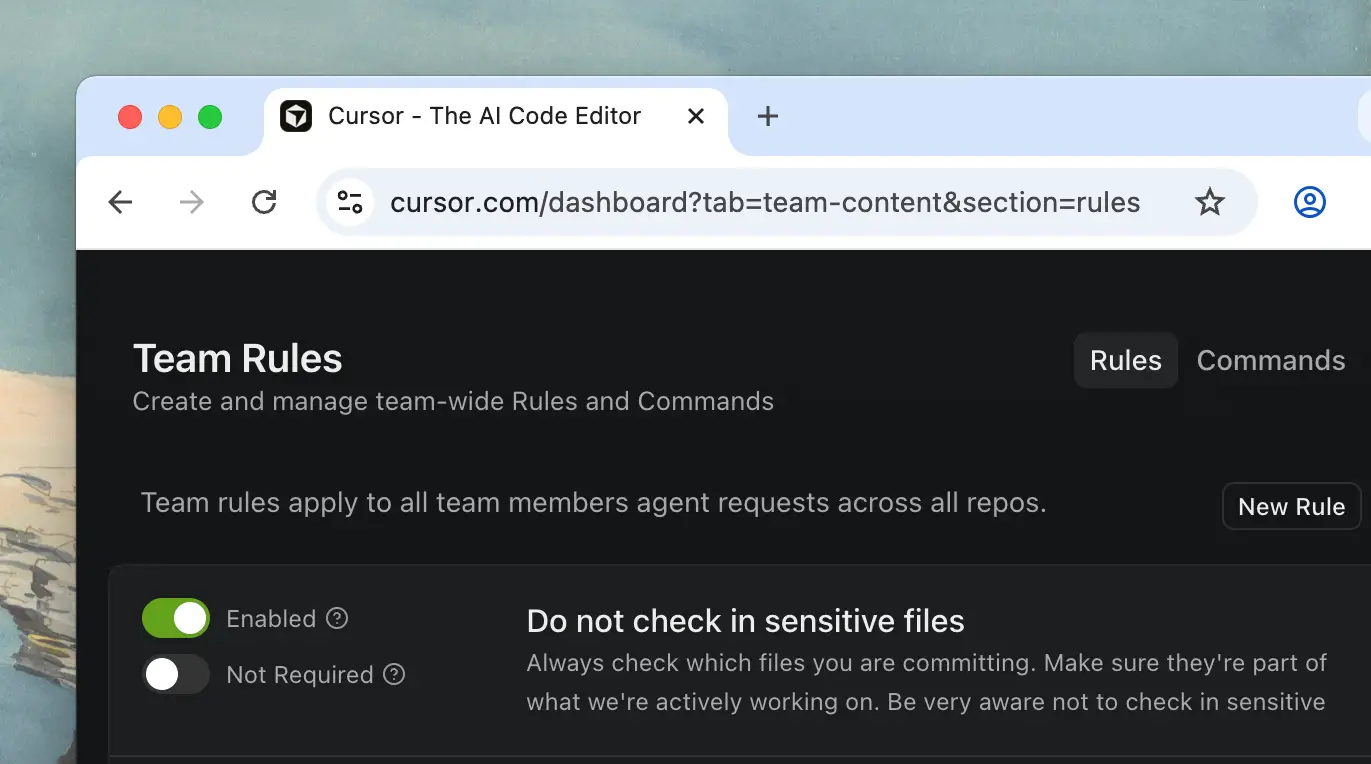

UI improvements include compact chat modes that hide icons and collapse diffs, alongside better copy/paste for prompts with context. Team commands, shareable via deeplinks, enable centralized management of rules and prompts.

Performance-wise, the update optimizes LSP for all languages, with noticeable gains in large projects. Memory usage drops, and loading speeds increase, making Cursor 2.0 responsive even on modest hardware.

Community Feedback and Real-World Adoption

Feedback from X and forums indicates strong enthusiasm for Cursor 2.0. Users like Kevin Leneway commend the model's speed and browser integration, while others share demos of multi-agent runs. However, some criticize the shift from traditional functionality, suggesting a learning curve.

Reviews highlight its enterprise potential, though it's not fully team-oriented out of the box. Non-coders find it overkill, but professionals appreciate the productivity boost.

Comparing Cursor 2.0 to Competitors

Compared with VS Code plus AI extensions, Cursor 2.0 offers deeper AI integration and handles agentic tasks better. Compared with Claude Code, it provides faster responses and parallel agents, though Claude excels in certain reasoning benchmarks.

In enterprise contexts, Cursor lags in native team features but shines in individual efficiency. Overall, its custom model gives it an edge in coding-specific scenarios.

Use Cases: From Prototyping to Production

Cursor 2.0 excels in prototyping, where agents generate boilerplate and test iterations quickly. In production, it aids debugging large codebases via semantic search.

For API development, Composer handles endpoint implementations, pairing well with Apidog for testing. Teams use multi-agents for parallel feature development, accelerating sprints.

Examples include building web apps, where browser tools verify frontend changes, or data pipelines, where voice mode speeds ideation.

Future Directions in Agentic Coding

Cursor 2.0 foreshadows a future where agents dominate development, with ongoing RL refinements promising smarter models. Integration with more tools and expanded enterprise features will likely follow.

As AI evolves, Cursor positions itself at the forefront, potentially influencing standards in software engineering.

Conclusion

Cursor 2.0 transforms coding through innovative agents and interfaces, delivering technical prowess that enhances developer productivity. Its features, from Composer to multi-agent parallelism, address real needs, making it a compelling choice. As adoption grows, it could redefine how engineers build software.