Running powerful models locally is increasingly important for privacy, speed, and cost efficiency. One of the latest innovations in this space is Command A—a state-of-the-art generative model designed for maximum performance with minimal compute. If you’re exploring local AI deployment options, this guide will walk you through how to run Command A locally using Ollama.

But what exactly is Command A? Why was it created, and how does it compare to leading models like GPT-4o and DeepSeek-V3? In this tutorial, we’ll dive deep into the purpose, performance benchmarks, and API pricing of Command A. We’ll also provide a step-by-step walkthrough for setting up and running Command A on your machine using Ollama.

What is Command A?

Command A is a cutting-edge generative AI model designed for enterprise applications. Developed by the Cohere Team, Command A is built to deliver maximum performance with minimal computational overhead. It’s engineered to run on local hardware with a serving footprint as small as two GPUs, compared to the 32 GPUs typically required by other models. Its architecture is optimized for fast, secure, and high-quality AI responses, making it an attractive option for private deployments.

At its core, Command A is a tool that facilitates advanced natural language processing and generation tasks. It serves as the backbone for applications that need to process large amounts of text quickly, handle complex instructions, and provide reliable responses in a conversational manner.

Who Would Use Command A?

Command A is designed for enterprise applications, offering high performance with minimal hardware requirements. It runs efficiently on just two GPUs (A100s or H100s) without sacrificing speed or accuracy. With a 256k context length, it excels in processing long documents, multilingual queries, and business-critical tasks.

Its agentic capabilities support autonomous workflows, while multilingual optimization ensures consistent responses across different languages. Additionally, lower hardware demands and faster token generation make it cost-effective, reducing both latency and operational expenses. Finally, local deployment enhances security, keeping sensitive data in-house and minimizing risks associated with cloud-based AI services.

These advantages make Command A a compelling choice for businesses looking to integrate powerful AI capabilities without incurring the high costs typically associated with state-of-the-art models.

API Pricing of Cohere Command A

Cost is a major consideration for any enterprise deploying AI solutions. Command A is designed to be both high-performing and cost-effective. Here’s how its pricing compares:

Cohere API Pricing for Command A:

- Input Tokens: $2.50 per 1 million tokens

- Output Tokens: $10.00 per 1 million tokens

When you compare these costs to those of cloud-based API access for other models, Command A’s private deployments can be up to 50% cheaper. This significant cost reduction is achieved through:

- Efficient Compute Usage: Requiring only two GPUs instead of dozens.

- Higher Throughput: Faster token generation reduces processing time, leading to lower operational costs.

- Private Deployment: Running the model on-premises not only enhances security but also avoids recurring cloud API costs.

For businesses that process large volumes of data or require continuous, high-speed interactions with AI, these pricing benefits make Command A a highly attractive option.

How to Install and Configure Ollama to Run Command A

Why Run Command-A Locally?

- Privacy: Your data stays on your device.

- Cost: No API fees or usage limits.

- Customization: Fine-tune the model for your needs.

- Offline Access: Use AI without an internet connection.

Prerequisites

- Ollama: Install it from Ollama.ai.

- Command A Model: you can get the

command-amodel directly from Ollama's official website under the "models" section.

Step 1: Install Ollama

Windows/macOS/Linux:

- Download Ollama from the official site, then run the installer. After installation, be sure to verify the installation using the following command:

ollama --version

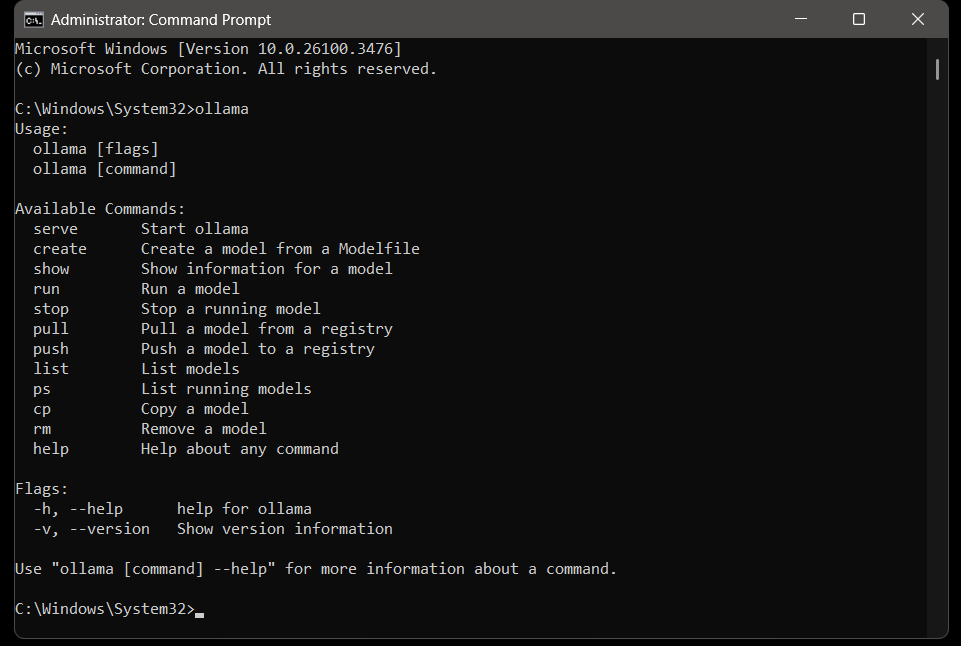

# Example Output: ollama version 0.1.23 When Ollama is installed on your system, you can run the command ollama to view Ollama's available commands.

Step 2: Pull the Command A Model

Ollama supports thousands of models via its library. Simply head over to their official site and search for command a under the "models" section.

# Pull the model

ollama pull command-a

# Pull the model and run it after completion

ollama run command-a

Note: If you have a custom Command-a model file, use:

ollama create command-a -f ModelfileTake note that the model is quite large and requires significant storage space. Before installation, check your available disk space and explore other models to find one that best fits your system’s capacity.

Step 3: Run Command A Locally

Start the Ollama server and run the model:

# Starts the server

ollama serve

# Loads the model

ollama run command-r Test it: Ask a question directly in the terminal:

# Sample Input Question

>>> What's the capital of Zambia?

# Sample Response

>>> LusakaTroubleshooting

Even with a robust setup, you might encounter issues. Here are some troubleshooting tips and best practices:

1. “Model Not Found” Error

- Verify the model's name:

ollama list - Pull the model again:

ollama pull command-a

2. API Connection Issues

- Ensure Ollama is running:

ollama serve

3. Slow Performance

- Use smaller models (e.g.,

command-r:3b). - Allocate more RAM to Ollama.

Command A vs GPT-4o & Deepseek V3

When evaluating AI models, it’s essential to compare them not just on theoretical performance but also on practical benchmarks and real-world use cases. Command-a has been benchmarked against models like GPT-4o and DeepSeek-V3, and the results are impressive:

- Performance Efficiency: Command-a delivers tokens at a rate of up to 156 tokens/sec, which is 1.75 times faster than GPT-4o and 2.4 times faster than DeepSeek-V3. This higher throughput means faster responses and improved user experience.

- Compute Requirements: While many models require up to 32 GPUs for optimal performance, Command-a runs effectively on only two GPUs. This drastic reduction in hardware requirements not only cuts costs but also makes it more accessible for private deployments.

- Context Length: With a context length of 256k tokens, Command-a outperforms many leading models that typically have shorter context windows. This allows Command-a to manage and understand much longer documents, a key advantage for enterprise applications.

- Human Evaluation: In head-to-head human evaluations on tasks spanning business, STEM, and coding, Command-a matches or exceeds the performance of larger, slower competitors. These evaluations are based on enterprise-focused accuracy, instruction following, and style, ensuring that Command-a meets real-world business requirements.

Overall, Command A’s design philosophy emphasizes efficiency, scalability, and high performance, making it a standout model in the competitive landscape of AI.

Final Thoughts

In this comprehensive tutorial, we’ve explored how to run Command A locally using Ollama, along with in-depth insights into what Command A is, why it was created, and how it compares to other state-of-the-art models like GPT-4o and DeepSeek-V3.

Command A is a cutting-edge generative model optimized for maximum performance with minimal hardware requirements. It excels in enterprise environments by offering faster token generation, a larger context window, and cost efficiency. With benchmarks showing that it can deliver tokens at up to 156 tokens per second—outperforming its competitors—and with significantly lower compute requirements, Command A represents the future of efficient AI.

We also detailed the API pricing, which stands at $2.50 per 1M input tokens and $10.00 per 1M output tokens, making Command A an economically attractive option for private deployments.

By following our step-by-step guide, you’ve learned how to:

- Set up the necessary environment for running Command-a.

- Configure Ollama to serve as the local engine powering Command-a.

- Understand the performance benefits and pricing structure that make Command-a stand out in the competitive AI landscape.

Now that you’re equipped with this knowledge, you can experiment with different enterprise queries, integrate additional functionalities, and further optimize your local AI deployments.

🚀 Boost your API development and testing workflow! Download Apidog for free and streamline your integration process with top-notch API testing tools.