Developers increasingly want AI models that balance capability against cost and latency. Claude Haiku 4.5 is Anthropic's entry in this space: a small, fast model with strong coding and agentic abilities. This article walks engineers and programmers through implementing the Claude Haiku 4.5 API in their projects, from initial setup to more advanced integrations.

The sections build on each other: first the core attributes of Claude Haiku 4.5, then pricing, setup, and practical implementation.

Understanding Claude Haiku 4.5: Core Features and Improvements

Anthropic designed Claude Haiku 4.5 as a compact model that prioritizes speed and efficiency while still delivering near-frontier performance, without the overhead of larger models. According to Anthropic, it matches the coding performance of Claude Sonnet 4 at one-third the cost and more than twice the speed.

Compared with its predecessor, Claude Haiku 3.5, this version is better aligned: Anthropic reports that its automated alignment assessments found statistically lower overall rates of misaligned behavior, which makes it a safer choice for production environments. Anthropic also released Claude Haiku 4.5 under its AI Safety Level 2 (ASL-2) standard, having judged that it does not require the stricter ASL-3 protections aimed at risks such as chemical, biological, radiological, and nuclear (CBRN) misuse. By comparison, Claude Sonnet 4.5 ships under the more restrictive ASL-3 standard.

Its main strength is low latency, which suits real-time tasks. Developers use it for chat assistants, customer service agents, and pair programming. In coding tasks, it breaks down complex problems, suggests optimizations, and helps debug code interactively. It also fits multi-agent systems in which a coordinating model such as Claude Sonnet 4.5 splits a problem into subtasks and delegates them to multiple Claude Haiku 4.5 instances running in parallel. This pattern speeds up workflows in software prototyping, data analysis, and interactive applications.

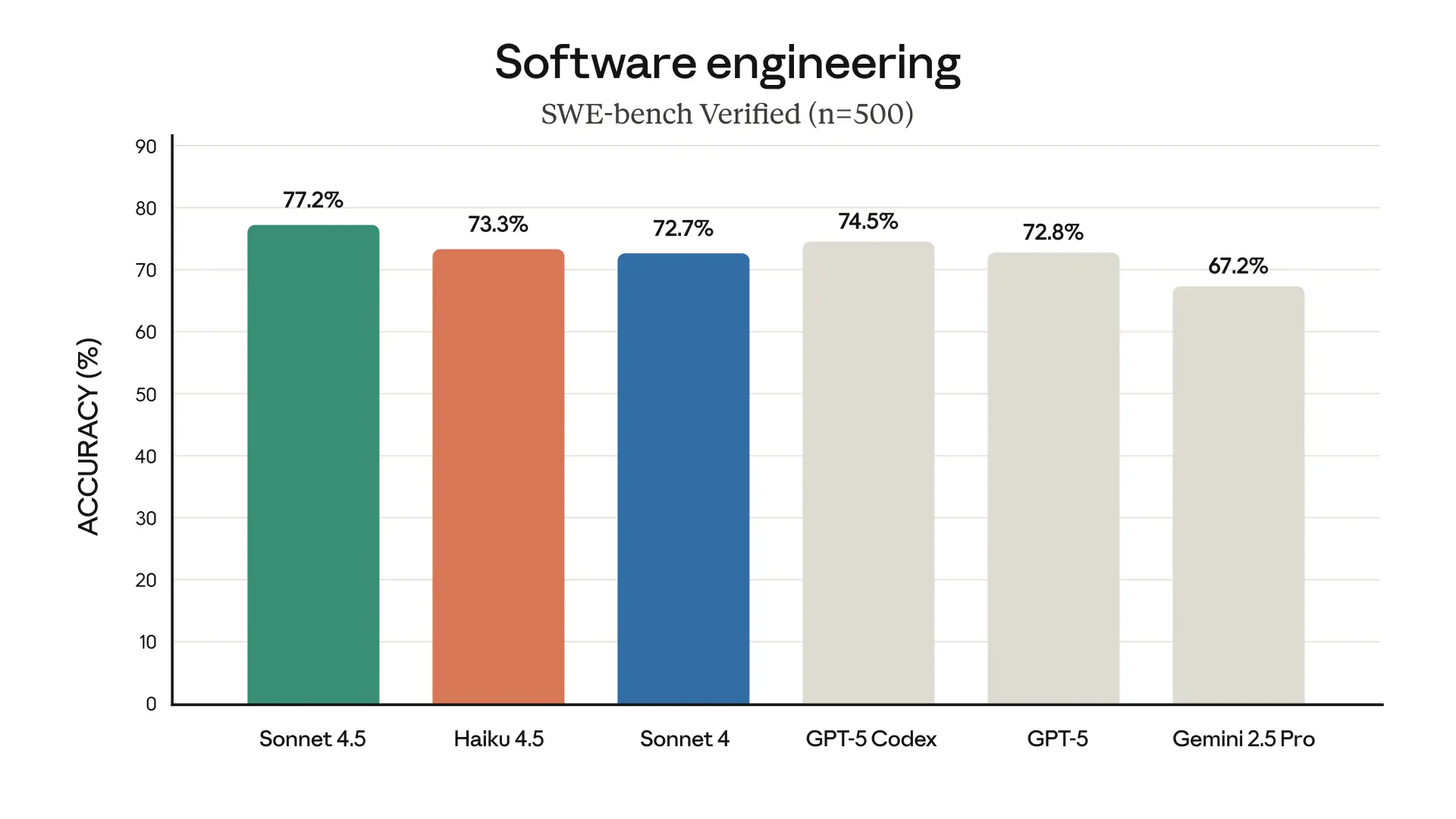

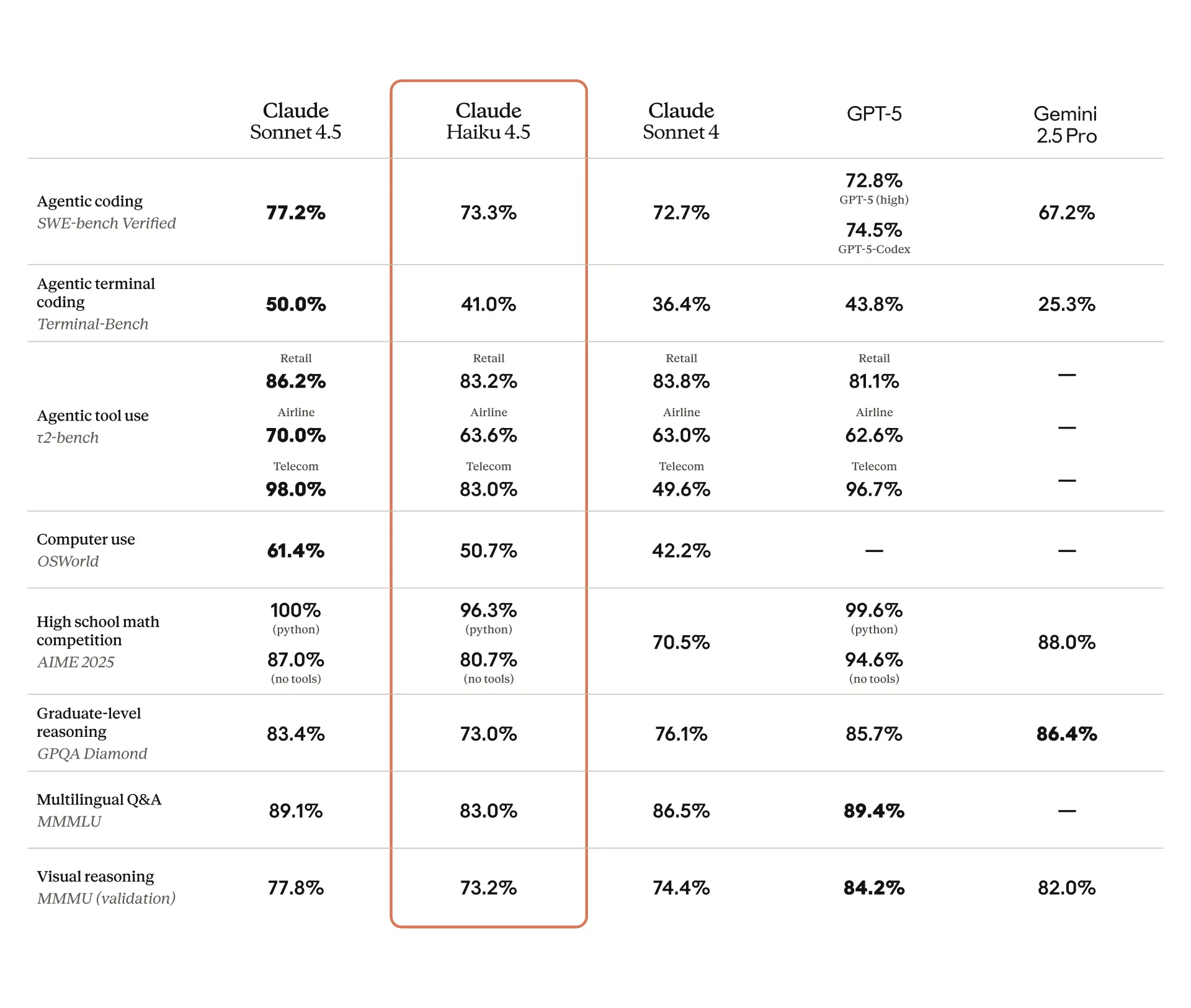

Benchmarks support these claims. On SWE-bench Verified, Claude Haiku 4.5 scores 73.3%, averaged over 50 trials in a Dockerized environment with a 128K thinking budget. Anthropic used a simple scaffold with bash and file-editing tools, plus a prompt that encourages extensive tool use, often more than 100 tool calls per task. Anthropic's launch comparison places this score ahead of competitors such as OpenAI's GPT-5 on this benchmark of resolving real GitHub issues, though results on your own codebase may differ. Other evaluations, such as Terminal-Bench (40.21% on average without extended thinking and 41.75% with a 32K thinking budget) and OSWorld (averaged across four runs with a 100-step limit), indicate its reliability in agentic and operating-system tasks.

Beyond Anthropic's own API, Claude Haiku 4.5 is available on Amazon Bedrock and Google Cloud's Vertex AI. Anthropic positions it as a drop-in replacement for Claude Haiku 3.5 and Claude Sonnet 4, so existing integrations can often switch by changing the model name while benefiting from the lower price. As you review these features, check how they match your project requirements before moving on to setup.

Pricing Details for Claude Haiku 4.5 API

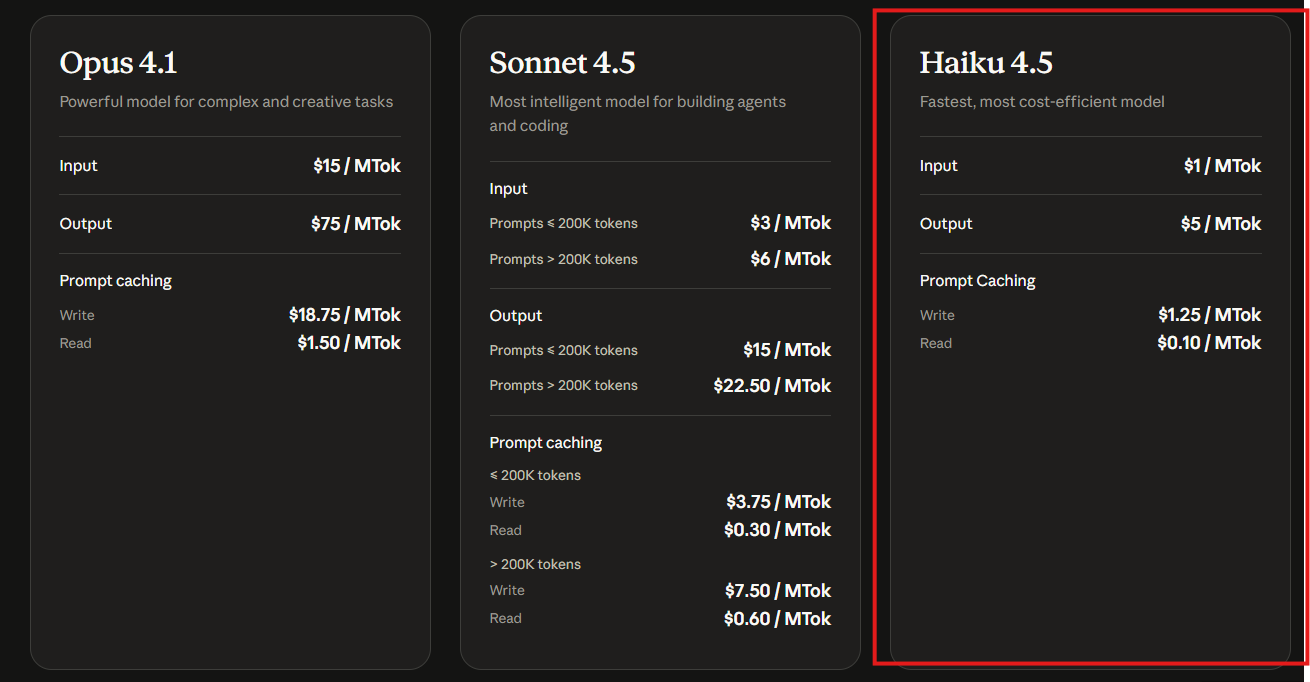

Cost efficiency is a critical factor in adopting any AI model. Anthropic prices Claude Haiku 4.5 at $1 per million input tokens and $5 per million output tokens, making it the least expensive model in the Claude 4.5 lineup and well suited to high-volume usage. For comparison, Claude Haiku 3.5 costs $0.80 per million input tokens and $4 per million output tokens, so Haiku 4.5 costs about 25% more per token in exchange for substantially stronger performance.

Prompt caching has its own rates: $1.25 per million tokens for cache writes (with the default five-minute cache lifetime) and $0.10 per million tokens for cache reads, which lowers the cost of repeatedly sending the same prompt prefix. If you access the model through third-party platforms such as Amazon Bedrock or Google Vertex AI, billing follows that provider's price list, which may differ slightly from Anthropic's direct rates.

Organizations scaling AI integrations find this pricing advantageous for both prototypes and production. For example, a customer service bot handling thousands of interactions daily benefits directly from the low input price. However, monitor token usage closely: thinking tokens are billed as output tokens, so complex tasks with large thinking budgets can accumulate charges quickly. Exercising your requests in a tool like Apidog during testing helps you measure typical token counts and estimate costs before launch.
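The per-token arithmetic is simple enough to script. The sketch below assumes the direct-API rates quoted above and a hypothetical workload of 5,000 conversations per day with roughly 400 input and 150 output tokens each; `estimate_cost_usd` is an illustrative helper, not part of any SDK.

```python
# Direct-API rates for Claude Haiku 4.5 quoted in this article, in USD per million tokens.
# Assumption: provider-specific rates (Bedrock, Vertex AI) may differ.
RATES_PER_MILLION = {
    "input": 1.00,
    "output": 5.00,
    "cache_write": 1.25,
    "cache_read": 0.10,
}


def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      cache_write_tokens: int = 0, cache_read_tokens: int = 0) -> float:
    """Estimate spend for a workload from its token counts."""
    counts = {
        "input": input_tokens,
        "output": output_tokens,
        "cache_write": cache_write_tokens,
        "cache_read": cache_read_tokens,
    }
    return sum(counts[kind] / 1_000_000 * rate for kind, rate in RATES_PER_MILLION.items())


# Hypothetical workload: 5,000 conversations per day, ~400 input and ~150 output tokens each.
daily = estimate_cost_usd(input_tokens=5_000 * 400, output_tokens=5_000 * 150)
print(f"${daily:.2f} per day")  # $5.75 per day
```

With real traffic, feed the helper the counts from each response's `usage` object (`input_tokens`, `output_tokens`, `cache_creation_input_tokens`, `cache_read_input_tokens`) rather than guessed averages.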

With pricing in mind, shift focus to acquiring access and configuring your environment for Claude Haiku 4.5 API usage.

Setting Up Access to the Claude Haiku 4.5 API

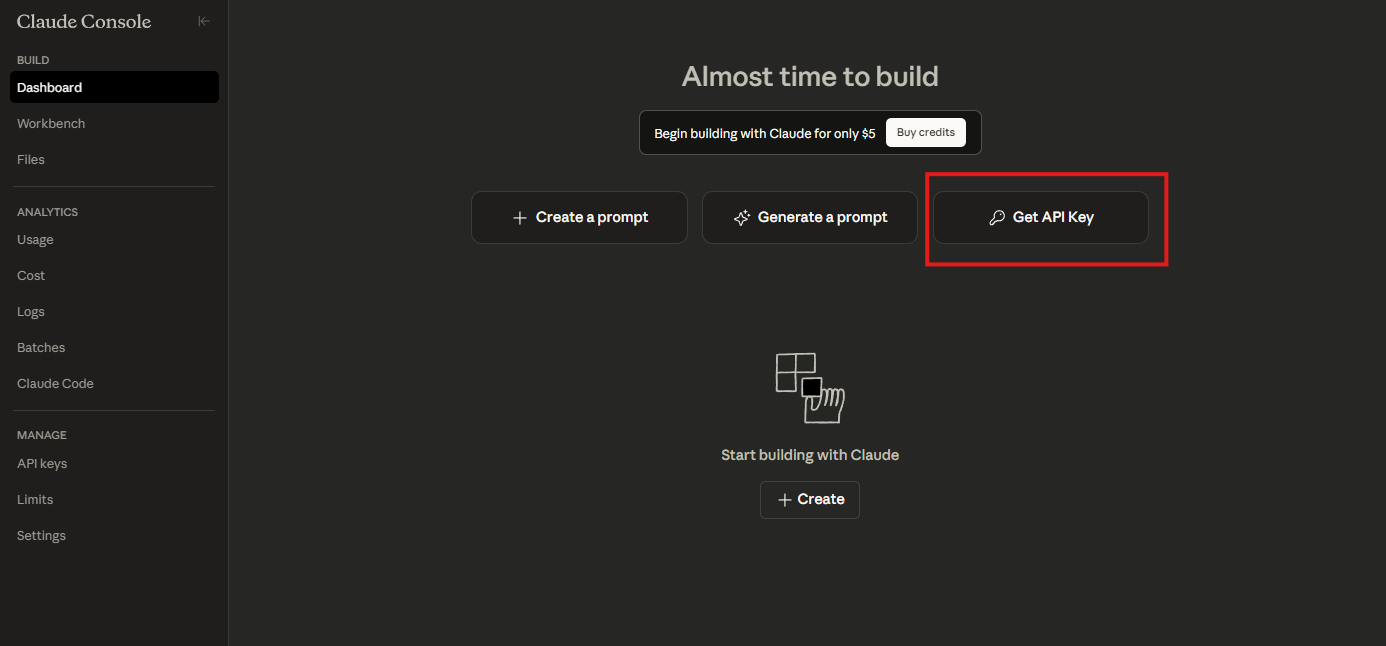

To begin working with Claude Haiku 4.5, secure an API key from Anthropic. Visit the Anthropic developer console and create an account if you lack one. Once logged in, generate a new API key under the API section. Store this key securely, as it authenticates all requests.

Next, install necessary libraries. For Python developers, use the official Anthropic SDK. Execute pip install anthropic in your terminal. This package simplifies interactions by handling authentication, request formatting, and response parsing.

Configure your environment by setting the API key as an environment variable: export ANTHROPIC_API_KEY='your-api-key-here'. Alternatively, pass it directly in code for testing purposes, though avoid this in production to prevent exposure.

For Amazon Bedrock, open the AWS console, enable access to the Anthropic models, and select Claude Haiku 4.5. Bedrock is a managed service, so it handles the infrastructure for you. Similarly, on Google Vertex AI you find the model in the Model Garden, enable it, and call it through the REST API or SDKs.

Verify setup with a simple test request. In Python, import the client and send a basic message:

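```python
import anthropic

client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment

message = client.messages.create(
    model="claude-haiku-4-5",
    max_tokens=1000,
    temperature=0.7,
    messages=[
        {"role": "user", "content": "Hello, Claude Haiku 4.5!"}
    ],
)

print(message.content[0].text)  # content is a list of blocks; the first holds the reply text
```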

This code initializes the client, specifies the model, and processes a user message. Expect a response confirming the model's operation. If errors occur, check your key validity or network connectivity.

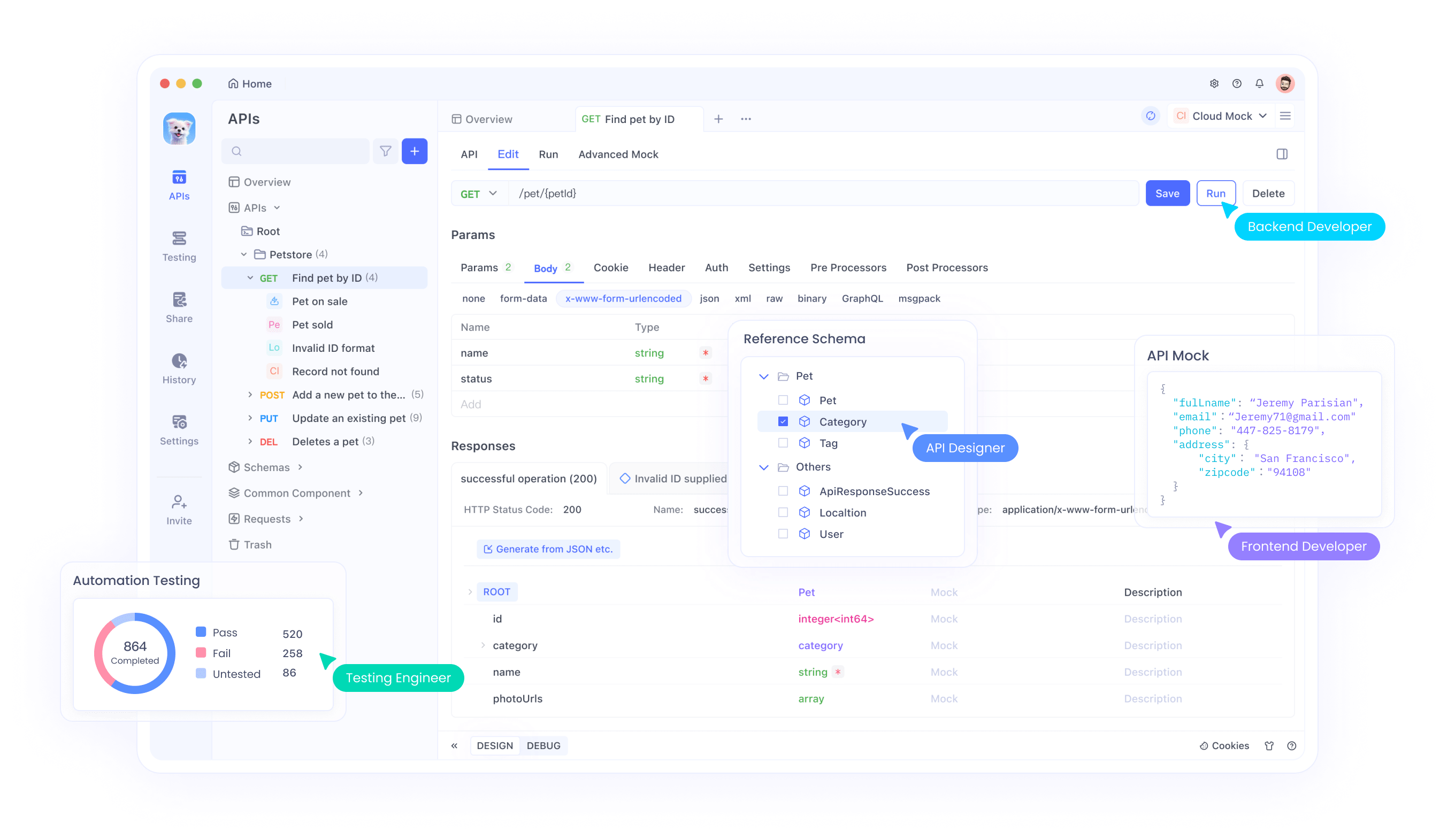

Apidog enhances this setup by allowing you to import OpenAPI specifications for the Claude API. Download Apidog, create a new project, and add the Anthropic endpoint. This facilitates mocking responses for offline development, ensuring your integration proceeds smoothly.

Once configured, proceed to explore basic API calls and their parameters.

Basic Usage of the Claude Haiku 4.5 API

The Claude Haiku 4.5 API centers on the messages endpoint, which handles conversational interactions. Developers construct requests with a list of messages, each containing a role (user or assistant) and content. The model generates completions based on this context.

Control output with parameters such as max_tokens, which caps response length to prevent excessive generation. Set temperature between 0 and 1 to adjust randomness: lower values yield more deterministic outputs suited to technical tasks. Alternatively, top_p (nucleus sampling) restricts sampling to the most probable tokens whose cumulative probability reaches p. Anthropic recommends adjusting temperature or top_p, but not both.
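One way to keep these settings consistent is to build request parameters in a single place. The helper below is a hypothetical sketch: it only assembles the keyword arguments for `client.messages.create()` and enforces the ranges described above, without calling the API.

```python
from typing import Optional


def build_params(prompt: str, *, max_tokens: int = 500,
                 temperature: Optional[float] = None,
                 top_p: Optional[float] = None,
                 model: str = "claude-haiku-4-5") -> dict:
    """Assemble keyword arguments for client.messages.create()."""
    if temperature is not None and top_p is not None:
        # Adjust one sampling parameter, not both.
        raise ValueError("set temperature or top_p, not both")
    if temperature is not None and not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0 and 1")
    params = {
        "model": model,
        "max_tokens": max_tokens,  # hard cap on the number of generated tokens
        "messages": [{"role": "user", "content": prompt}],
    }
    if temperature is not None:
        params["temperature"] = temperature
    if top_p is not None:
        params["top_p"] = top_p
    return params


# A low temperature suits a technical answer; pass the dict with client.messages.create(**params).
params = build_params("Explain Python's GIL in two sentences.", temperature=0.0)
print(params["temperature"], "top_p" in params)  # 0.0 False
```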

For a coding example, query the model for a Python function:

```python
message = client.messages.create(
    model="claude-haiku-4-5",
    max_tokens=500,
    messages=[
        {"role": "user", "content": "Write a Python function to calculate Fibonacci numbers recursively."}
    ],
)

print(message.content[0].text)
```

The response provides the function code, often with explanations. Claude Haiku 4.5's speed ensures quick iterations, ideal for debugging sessions.
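Printing `message.content[0].text` works for a plain reply, but a response's `content` is a list of blocks that can also include `tool_use` or `thinking` blocks. The hypothetical helper below, a minimal sketch, joins only the text blocks; `SimpleNamespace` objects stand in for real SDK responses so it runs offline.

```python
from types import SimpleNamespace


def extract_text(message) -> str:
    """Join the text blocks of a Messages API response, ignoring tool_use and thinking blocks."""
    return "".join(block.text for block in message.content if block.type == "text")


# Offline stand-in for a response that contains a thinking block followed by a text block.
fake_response = SimpleNamespace(content=[
    SimpleNamespace(type="thinking", thinking="Recursive definition first..."),
    SimpleNamespace(type="text", text="def fib(n):\n    return n if n < 2 else fib(n - 1) + fib(n - 2)"),
])
print(extract_text(fake_response))
```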

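Handle errors gracefully. Common issues include rate limits, transient server errors, and invalid parameters. Retrying only makes sense for the transient cases, using exponential backoff:

```python
import time

import anthropic


def send_message_with_retry(client, params, max_retries=3):
    # Retry only transient failures; an invalid request (HTTP 400) would fail again.
    for attempt in range(max_retries):
        try:
            return client.messages.create(**params)
        except (anthropic.RateLimitError, anthropic.APIConnectionError,
                anthropic.InternalServerError):
            if attempt == max_retries - 1:
                raise  # out of attempts: surface the original error
            time.sleep(2 ** attempt)  # wait 1 s, 2 s, 4 s, ...
```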

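This function retries transient failures with increasing wait periods and lets anything else, such as an error caused by invalid parameters, propagate immediately. Note that the official SDK already retries connection errors, rate-limit (429) responses, and server errors twice by default; you can change this with the max_retries option when creating the client.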

Building on these basics, use Apidog to test the same calls. In Apidog, create a new POST request, set the URL to https://api.anthropic.com/v1/messages, add the headers x-api-key (your key), anthropic-version (for example, 2023-06-01), and content-type: application/json, then define the JSON body. Send the request and inspect the response, which Apidog formats for easy analysis.
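To see exactly what Apidog sends, the same raw request can be assembled with Python's standard library. This sketch builds the request without sending it; `build_request` is a hypothetical helper, and the anthropic-version value 2023-06-01 is the one Anthropic's documentation uses in its examples.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_request(api_key: str, prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Assemble a raw Messages API request with the headers and body you would enter in Apidog."""
    body = {
        "model": "claude-haiku-4-5",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


req = build_request("your-api-key-here", "Hello, Claude Haiku 4.5!")
print(req.get_method(), req.full_url)  # POST https://api.anthropic.com/v1/messages
# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(req, timeout=30) as resp:
#     print(json.loads(resp.read())["content"][0]["text"])
```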

As you master simple interactions, advance to more complex scenarios involving tools and agents.

Advanced Usage: Tool Integration and Multi-Agent Systems

Claude Haiku 4.5 supports tool calling, enabling the model to interact with external functions. Define tools in your request, and the model decides when to use them. For instance, create a tool for mathematical computations:

```python
tools = [
    {
        "name": "calculator",
        "description": "Perform arithmetic operations",
        "input_schema": {
            "type": "object",
            "properties": {
                "expression": {"type": "string"}
            },
            "required": ["expression"]
        }
    }
]

message = client.messages.create(
    model="claude-haiku-4-5",
    max_tokens=1000,
    tools=tools,
    messages=[
        {"role": "user", "content": "What is 15 * 23?"}
    ],
)
```

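If the model decides to invoke the tool, the response's stop_reason is "tool_use" and its content includes a tool_use block with the tool's input. Run the tool yourself, then send the result back in a new user message as a tool_result block so the model can finish its answer. This extends the model's capabilities beyond text generation.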
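A minimal sketch of that round trip, assuming the calculator tool defined above. `safe_eval` and `tool_results_message` are hypothetical helpers; `safe_eval` walks the expression with Python's `ast` module instead of calling `eval()`, so model-supplied input cannot run arbitrary code. `SimpleNamespace` objects stand in for a real response so the example runs offline.

```python
import ast
import operator
from types import SimpleNamespace

# Operators the hypothetical calculator accepts (no exponentiation, to avoid huge results).
_BIN_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
            ast.Mult: operator.mul, ast.Div: operator.truediv}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def safe_eval(expression: str) -> float:
    """Evaluate +, -, *, / over numeric literals without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")
    return walk(ast.parse(expression, mode="eval"))


def tool_results_message(response) -> dict:
    """Build the user turn that answers each calculator tool_use block in a response."""
    results = []
    for block in response.content:
        if block.type == "tool_use" and block.name == "calculator":
            results.append({
                "type": "tool_result",
                "tool_use_id": block.id,
                "content": str(safe_eval(block.input["expression"])),
            })
    return {"role": "user", "content": results}


# Offline stand-in for a response with stop_reason == "tool_use".
fake = SimpleNamespace(stop_reason="tool_use", content=[
    SimpleNamespace(type="tool_use", id="toolu_example", name="calculator",
                    input={"expression": "15 * 23"}),
])
print(tool_results_message(fake)["content"][0]["content"])  # 345

# With a live client, the loop looks like this:
# messages = [{"role": "user", "content": "What is 15 * 23?"}]
# response = client.messages.create(model="claude-haiku-4-5", max_tokens=1000, tools=tools, messages=messages)
# if response.stop_reason == "tool_use":
#     messages.append({"role": "assistant", "content": response.content})
#     messages.append(tool_results_message(response))
#     final = client.messages.create(model="claude-haiku-4-5", max_tokens=1000, tools=tools, messages=messages)
```

Note that the assistant turn containing the tool_use block must be appended before the tool_result turn, so the API can match each result to its request by `tool_use_id`.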

In multi-agent setups, employ Claude Sonnet 4.5 for planning and Claude Haiku 4.5 for execution. The coordinator breaks tasks into subtasks, dispatching them to Haiku instances. For software development, one agent handles data fetching, another UI design, all in parallel.

Implement this with asynchronous calls:

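```python
import asyncio

import anthropic

client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment


async def execute_subtask(client, subtask):
    # Run the blocking SDK call in a worker thread so several requests overlap.
    return await asyncio.to_thread(
        client.messages.create,
        model="claude-haiku-4-5",
        max_tokens=500,
        messages=[{"role": "user", "content": subtask}],
    )


async def main():
    subtasks = ["Fetch user data", "Design login page"]
    results = await asyncio.gather(*(execute_subtask(client, task) for task in subtasks))
    # Aggregate results: gather() returns them in the same order as subtasks.
    for task, result in zip(subtasks, results):
        print(f"{task}: {result.content[0].text}")


asyncio.run(main())
```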

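This code runs the subtasks concurrently, taking advantage of Haiku's speed. Alternatively, the SDK's AsyncAnthropic client exposes awaitable methods, which avoids the worker threads.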

For testing such systems, Apidog's mock servers simulate tool responses, allowing offline validation. Configure mocks to return expected outputs, refining your agents before live deployment.

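For harder problems, you can also enable extended thinking by passing a thinking parameter with a token budget. Anthropic reports benchmark results with thinking budgets of up to 128K tokens, including on complex problems such as AIME (averaged over 10 runs) and the multilingual MMMLU benchmark. In the API, the budget must be smaller than max_tokens, and thinking tokens are billed as output tokens.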
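A sketch of a request with extended thinking enabled, assuming the `thinking` parameter shape from Anthropic's Messages API (`{"type": "enabled", "budget_tokens": N}`). `thinking_params` is a hypothetical helper, the default 8,000-token budget is an arbitrary starting point rather than a recommendation, and the total must also stay within the model's maximum output length.

```python
def thinking_params(prompt: str, budget_tokens: int = 8_000, answer_tokens: int = 4_000) -> dict:
    """Build client.messages.create() kwargs with extended thinking enabled."""
    if budget_tokens < 1_024:
        raise ValueError("budget_tokens must be at least 1,024")
    return {
        "model": "claude-haiku-4-5",
        # max_tokens covers thinking plus the visible answer, so it must exceed the budget.
        "max_tokens": budget_tokens + answer_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        # temperature is left unset: extended thinking does not support changing it.
        "messages": [{"role": "user", "content": prompt}],
    }


params = thinking_params("How many positive divisors does 2**10 * 3**5 have?")
print(params["max_tokens"], params["thinking"])  # 12000 {'type': 'enabled', 'budget_tokens': 8000}
```

The response then contains one or more `thinking` blocks followed by the `text` blocks with the final answer.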

Transitioning to practical applications, examine real-world use cases where these features shine.

Use Cases for Claude Haiku 4.5 API

Organizations apply Claude Haiku 4.5 in diverse scenarios. In customer service, it powers bots that respond instantly to inquiries, reducing wait times. For example, integrate it with a messaging platform:

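```python
import anthropic

client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment


def handle_message(user_input: str) -> str:
    # One stateless request per incoming message; no conversation history is kept.
    response = client.messages.create(
        model="claude-haiku-4-5",
        max_tokens=500,  # required by the Messages API
        messages=[{"role": "user", "content": user_input}],
    )
    return response.content[0].text
```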

Because each call is independent, you can run many handlers in parallel to serve high traffic, subject to your account's rate limits.

In coding tools such as GitHub Copilot and Cursor, Claude Haiku 4.5 provides suggestions through the API. In GitHub Copilot, it launched as a public preview that users or administrators enable in the model picker.

For browser automation, its computer use capabilities stand out: Anthropic reports that it surpasses Claude Sonnet 4 on computer-use tasks. You can build extensions in which the model navigates pages, extracts data, or fills in forms.

Education platforms use it for interactive tutoring, generating explanations and quizzes on demand. Data analysts employ it for query generation against databases, combining natural language with SQL tools.

In each case, Apidog facilitates testing by automating scenarios, ensuring robustness. For instance, create test suites that verify response times under load.

As you implement these, adhere to best practices to maximize efficiency.

Best Practices and Optimization Techniques

Manage conversation history deliberately. The Messages API is stateless, so every request resends the full history and pays for it as input tokens; keep only the exchanges the model actually needs.
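A minimal sketch of history trimming that keeps only the last few messages. `trim_history` is hypothetical and assumes plain text turns: if your history contains tool_use and tool_result blocks, trim in whole exchanges so a tool_result never loses its matching tool_use.

```python
def trim_history(messages: list, max_messages: int = 6) -> list:
    """Keep the most recent messages, making sure the trimmed history opens with a user turn."""
    if max_messages <= 0:
        return []
    recent = messages[-max_messages:]
    # The Messages API expects the first message to have the "user" role.
    while recent and recent[0]["role"] != "user":
        recent = recent[1:]
    return recent


# Hypothetical 10-message conversation alternating user/assistant turns.
history = [
    {"role": "user" if i % 2 == 0 else "assistant", "content": f"turn {i}"}
    for i in range(10)
]
print([m["content"] for m in trim_history(history)])
# ['turn 4', 'turn 5', 'turn 6', 'turn 7', 'turn 8', 'turn 9']
```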

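Monitor usage metrics in the Claude Console, adjusting parameters to balance cost and quality. For high-throughput workloads that do not need immediate answers, submit requests through the Message Batches API, which Anthropic prices at half the standard rate in exchange for asynchronous processing.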
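A sketch of preparing a batch, assuming the request format of Anthropic's Message Batches API, where each entry pairs a `custom_id` with ordinary `messages.create()` parameters. `build_batch_requests` and the ticket IDs are hypothetical; the submission and polling calls are shown as comments because they need a live API key.

```python
def build_batch_requests(prompts: dict, max_tokens: int = 300) -> list:
    """Turn {custom_id: prompt} pairs into Message Batches API request entries."""
    return [
        {
            "custom_id": custom_id,  # lets you match each result back to its input
            "params": {
                "model": "claude-haiku-4-5",
                "max_tokens": max_tokens,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        for custom_id, prompt in prompts.items()
    ]


requests = build_batch_requests({
    "ticket-001": "Summarize this support ticket: ...",
    "ticket-002": "Classify the sentiment of this review: ...",
})
print(len(requests), requests[0]["custom_id"])  # 2 ticket-001

# With a live client:
# batch = client.messages.batches.create(requests=requests)
# ... later, once client.messages.batches.retrieve(batch.id).processing_status == "ended":
# for entry in client.messages.batches.results(batch.id):
#     print(entry.custom_id, entry.result.type)
```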

Secure your integrations by rotating API keys regularly and using least-privilege principles. Implement logging to track anomalies.

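For prompts that repeat exactly, a simple client-side cache avoids paying twice for the same request. This is separate from Anthropic's server-side prompt caching mentioned in the pricing section. A minimal in-memory version: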

```python
cache = {}  # simple in-memory cache: prompt text -> response


def cached_message(client, prompt):
    if prompt in cache:
        return cache[prompt]  # reuse the stored response; no API call
    response = client.messages.create(
        model="claude-haiku-4-5",
        max_tokens=500,  # required by the Messages API
        messages=[{"role": "user", "content": prompt}],
    )
    cache[prompt] = response
    return response
```

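This stores each response in memory for reuse. It suits deterministic, low-temperature prompts; in production, bound the cache size (for example with functools.lru_cache) or add an expiry so it does not grow without limit.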
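Anthropic's server-side prompt caching works differently: you mark a long, stable prompt prefix with `cache_control`, and later requests that reuse the prefix are billed at the cache-read rate. A minimal sketch, assuming a large system prompt; `cached_system_params` is a hypothetical helper, and prompts shorter than the model's minimum cacheable length are simply not cached.

```python
def cached_system_params(system_text: str, question: str, max_tokens: int = 500) -> dict:
    """Build messages.create() kwargs that mark a long system prompt as cacheable."""
    return {
        "model": "claude-haiku-4-5",
        "max_tokens": max_tokens,
        "system": [
            {
                "type": "text",
                "text": system_text,
                # Cache the prompt prefix up to and including this block (default lifetime: 5 minutes).
                "cache_control": {"type": "ephemeral"},
            }
        ],
        "messages": [{"role": "user", "content": question}],
    }


# Hypothetical long, stable context such as a product manual pasted into the system prompt.
manual = "Product manual text...\n" * 500
params = cached_system_params(manual, "How do I reset my password?")
print(params["system"][0]["cache_control"])  # {'type': 'ephemeral'}
```

After sending the request with `client.messages.create(**params)`, check `response.usage.cache_creation_input_tokens` on the first call and `response.usage.cache_read_input_tokens` on later ones to confirm the cache is being hit.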

When testing with Apidog, define assertions for responses, such as checking for specific keywords or status codes.

Furthermore, experiment with sampling parameters. Default settings work well, but fine-tune temperature for creative tasks or top_p for focused outputs.

Address potential pitfalls, like over-reliance on tools, by prompting the model to think step-by-step.

By following these, you ensure reliable, scalable deployments.

Integrating Apidog for Enhanced API Testing

Apidog stands out as a comprehensive platform for API development and testing, particularly useful with Claude Haiku 4.5. It supports importing specifications, generating test cases, and mocking endpoints.

To integrate, install Apidog and create a project. Add the Claude API endpoint, authenticate with your key, and define requests. Apidog's AI features can even generate test cases from specs.

For Claude Haiku 4.5, test latency-sensitive applications by simulating real-time responses. Use its debugging tools to inspect JSON payloads and identify issues.

In multi-agent scenarios, Apidog chains requests, mimicking orchestrations.

This integration speeds up development and helps catch integration errors before they reach production.

Security and Ethical Considerations

Anthropic emphasizes safety in Claude Haiku 4.5, with low misaligned behavior rates. Developers must still implement safeguards, like content filters for user inputs.

Comply with data privacy regulations, avoiding sensitive information in prompts.
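A deliberately simple sketch of scrubbing obvious personal data before a prompt leaves your system. The `redact` helper and its two regular expressions are illustrative assumptions; they miss many formats, so production systems should rely on a dedicated PII-detection tool.

```python
import re

# Illustrative patterns only: they catch common email and phone formats, not all of them.
_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace matched personal data with placeholder labels before sending text to the model."""
    for label, pattern in _PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com or +1 (555) 123-4567."))
# Contact [EMAIL] or [PHONE].
```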

Ethically, use the model transparently, informing users of AI involvement.

These measures foster responsible adoption.

Troubleshooting Common Issues

Encounter rate limits (HTTP 429)? Implement backoff as shown earlier, and respect the retry-after header in the error response.

Truncated responses? If stop_reason is "max_tokens", raise max_tokens; otherwise, refine your prompts.

Authentication failures? Verify key format and permissions.

Apidog aids by logging full interactions for analysis.

Future Developments and Updates

Anthropic continues to evolve the Claude lineup. Watch its announcements for updates to Claude Haiku 4.5 and for new models.

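Plan for updates deliberately: the claude-haiku-4-5 alias points to the latest snapshot of the model, so pin a dated model ID if you need stable behavior, and note that the API version is fixed by the anthropic-version header you send.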

Conclusion

Claude Haiku 4.5 API offers developers a versatile tool for building intelligent, efficient applications. By following this guide, you equip yourself to harness its full potential, from basic setups to advanced integrations. Remember, tools like Apidog amplify your efforts, providing free resources to test and refine.

As technology advances, small efficiencies compound into significant advantages. Apply these insights to your projects and observe the impact.