Claude Code and Claude API, both from Anthropic, represent two distinct approaches to leveraging AI for coding tasks. Developers use Claude Code as a terminal-based agent that automates routine operations, while they integrate Claude API directly into applications for customizable AI interactions. This article examines their differences to guide your selection.

Understanding Claude Code: Anthropic's Agentic Coding Tool

Developers turn to Claude Code when they need an efficient, terminal-integrated assistant that handles coding tasks autonomously. Anthropic designed this tool as a command-line interface (CLI) that embeds Claude's intelligence directly into the developer's environment. Unlike traditional chat-based AI, Claude Code operates agentically, meaning it executes actions like reading files, running commands, and modifying code without constant user intervention.

Anthropic released Claude Code as a research preview, but it quickly gained traction for its ability to accelerate software development. For instance, developers input natural language instructions, and the tool interprets them to perform tasks such as debugging, refactoring, or even generating entire modules. It runs on Claude 3.7 Sonnet, Anthropic's advanced model, which drives the quality of its code generation.

However, Claude Code does not function in isolation. It understands the codebase context by scanning directories and maintaining state across sessions. This capability allows it to suggest improvements based on existing patterns. Additionally, Anthropic provides best practices for using Claude Code, such as creating a dedicated file like CLAUDE.md to outline project guidelines, which helps maintain consistency.

Transitioning to practical applications, Claude Code excels in scenarios where speed matters. Developers report that it reduces time spent on boilerplate code by automating repetitive elements. For example, when bootstrapping a new Python project, a developer might command, "Initialize a Flask app with user authentication," and Claude Code generates the structure, installs dependencies via pip (if permitted), and even sets up basic tests.

Nevertheless, users must manage its limitations carefully. The tool occasionally produces code that compiles but fails in edge cases, particularly in languages like Rust where strict typing demands precision. Therefore, developers should always verify outputs through manual reviews or integrated testing suites.

Expanding on its technical underpinnings, Claude Code leverages Anthropic's API under the hood but packages it in a user-friendly CLI wrapper. File reads, edits, and shell commands run locally, while model inference happens in Anthropic's cloud, so developers avoid the copy-and-paste overhead of web-based interfaces. Moreover, it works with version control, so it can commit code changes on request.

In terms of setup, installing Claude Code means installing its npm package (npm install -g @anthropic-ai/claude-code) and authenticating with an Anthropic account or API key. Once active, it transforms the terminal into an interactive coding partner. Developers appreciate this because it eliminates the need to switch between editors and browsers.

Furthermore, Claude Code's evolution reflects Anthropic's focus on agentic AI. Early versions emphasized basic code generation, but updates have introduced features like multi-step reasoning, where the tool breaks down complex tasks into subtasks. Consequently, it handles projects involving multiple languages or frameworks more effectively.

To illustrate, consider a full-stack developer working on a web application. They might use Claude Code to generate backend API endpoints in Node.js, then switch to frontend components in React, all within the same session. This fluidity saves hours that developers otherwise spend on context switching.

Exploring Claude API: Programmatic Access to AI Power

Shifting focus, developers opt for Claude API when they require granular control over AI interactions in their applications. Anthropic's Claude API provides direct access to models like Claude 3.5 Sonnet and Opus, enabling programmatic calls for tasks such as code completion, analysis, or generation.

Unlike Claude Code's CLI-centric approach, the API integrates into any software ecosystem. Developers send requests via HTTP, specifying prompts, parameters, and context windows up to 200,000 tokens. This flexibility allows customization, such as tailoring responses through system prompts for specific domains like machine learning or embedded systems.

Moreover, the API supports asynchronous operations, making it suitable for scalable applications. For example, a development team might build a custom IDE plugin that queries Claude API for real-time suggestions, enhancing collaborative coding.
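To make the asynchronous pattern concrete, here is a minimal sketch that fans out several prompts while capping how many run at once. The fake_suggestion coroutine is a hypothetical stand-in for a real API call, and the names gather_bounded and suggest_all are illustrative assumptions rather than part of any Anthropic library.

```python
import asyncio


async def fake_suggestion(prompt: str) -> str:
    """Hypothetical stand-in for a real asynchronous API call."""
    await asyncio.sleep(0.01)  # simulate network latency
    return f"suggestion for: {prompt}"


async def gather_bounded(prompts, worker, max_concurrent=3):
    """Run worker(prompt) for every prompt, at most max_concurrent at a time."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def run_one(prompt):
        # The semaphore blocks here once max_concurrent calls are in flight
        async with semaphore:
            return await worker(prompt)

    # asyncio.gather returns results in the same order as the inputs
    return await asyncio.gather(*(run_one(p) for p in prompts))


def suggest_all(prompts, max_concurrent=3):
    """Synchronous entry point, convenient for scripts and plugins."""
    return asyncio.run(gather_bounded(prompts, fake_suggestion, max_concurrent))
```

In a real plugin, the worker would wrap an actual API request, and the concurrency cap would be chosen to stay within the account's rate limits.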

Implementing Claude API demands more upfront effort. Developers must handle authentication, rate limiting, and error management themselves. Tools like Apidog assist here by providing an intuitive platform to design, debug, and mock API endpoints, ensuring smooth integration.
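As a rough illustration of the rate-limit and error handling mentioned above, the sketch below retries a call with exponential backoff. ApiError and call_with_backoff are hypothetical names, and the set of retryable status codes (429, 500, 529) is an assumption that should be checked against Anthropic's current error documentation.

```python
import random
import time

# Assumed retryable statuses: rate limited, server error, overloaded
RETRYABLE_STATUS = {429, 500, 529}


class ApiError(Exception):
    """Hypothetical error type carrying an HTTP status code."""

    def __init__(self, status):
        super().__init__(f"API returned HTTP {status}")
        self.status = status


def call_with_backoff(send, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call send() and retry transient failures with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return send()
        except ApiError as err:
            last_attempt = attempt == max_attempts - 1
            # Give up on non-transient errors (e.g. 401) or when out of attempts
            if err.status not in RETRYABLE_STATUS or last_attempt:
                raise
            # Delay doubles each round: 1s, 2s, 4s, ... plus a little jitter
            delay = base_delay * (2 ** attempt) + random.uniform(0, 0.1)
            sleep(delay)
```

Passing sleep as a parameter keeps the function easy to test, since a test can record the delays instead of actually waiting.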

Transitioning to its strengths, Claude API delivers consistent performance with high uptime, often reported as 100% in developer benchmarks. It also allows model selection, so users pick Sonnet for speed or Opus for complex reasoning.

Additionally, pricing follows a pay-per-use model: $3 per million input tokens and $15 per million output tokens. This structure benefits occasional users, as costs scale with usage rather than requiring a flat subscription.

Nevertheless, heavy users face escalating expenses. For instance, processing large codebases could consume thousands of tokens per request, leading to daily costs of $25–$35 in intensive sessions.
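The pay-per-use arithmetic can be sketched in a few lines. The rates are the ones quoted above; the request volume and token counts in the usage note are illustrative assumptions, not measurements.

```python
# Rates quoted in this article, in USD per million tokens
INPUT_USD_PER_MTOK = 3.00
OUTPUT_USD_PER_MTOK = 15.00


def request_cost(input_tokens, output_tokens):
    """Cost in USD of one request with the given token counts."""
    total = input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    return total / 1_000_000


def daily_cost(requests_per_day, input_tokens, output_tokens):
    """Cost in USD of a day of identical requests."""
    return requests_per_day * request_cost(input_tokens, output_tokens)
```

For example, a hypothetical session of 200 requests a day, each sending 40,000 input tokens of code context and receiving 2,000 output tokens, costs $0.15 per request and about $30 a day, which sits inside the $25–$35 range cited above.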

Expanding technically, Claude API uses JSON-based payloads for requests and responses. A typical call might include a system prompt like "You are an expert Python developer" followed by user input. The API then generates code snippets, explanations, or fixes.
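To show what such a payload looks like, the sketch below builds the headers and JSON body for a Messages API request and pulls the text out of a response. It only constructs and parses JSON and never sends anything over the network. The helper names build_request and extract_text are illustrative, and the default model identifier is an assumption that may be out of date.

```python
import json

API_URL = "https://api.anthropic.com/v1/messages"


def build_request(user_prompt, api_key,
                  model="claude-3-5-sonnet-20241022", max_tokens=1024):
    """Return (headers, json_body) for a Messages API call."""
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    body = {
        "model": model,  # assumed model identifier; check the current model list
        "max_tokens": max_tokens,
        "system": "You are an expert Python developer.",
        "messages": [{"role": "user", "content": user_prompt}],
    }
    return headers, json.dumps(body)


def extract_text(response_json):
    """Join the text blocks found in a Messages API response body."""
    data = json.loads(response_json)
    return "".join(
        block["text"] for block in data["content"] if block["type"] == "text"
    )
```

The headers and body can then be posted to API_URL with any HTTP client, or the official SDK can be used to handle this step instead.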

Furthermore, developers enhance API usage with wrappers in languages like Python or JavaScript. Anthropic's official SDKs (the anthropic package for Python and @anthropic-ai/sdk for TypeScript and JavaScript) simplify this, abstracting away boilerplate.

In practice, Claude API shines in automated pipelines. DevOps engineers, for example, incorporate it into CI/CD workflows to review pull requests automatically, flagging potential issues before merges.
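One way such a pipeline step might prepare its input is sketched below: it splits a git-style unified diff into per-file chunks and wraps each in a review prompt. The function names, the prompt wording, and the max_chars cap are all hypothetical choices for illustration, and the header parsing assumes file paths that do not themselves contain " b/".

```python
def split_diff_by_file(diff_text):
    """Split a git-style unified diff into {path: chunk_text}."""
    chunks = {}
    current_path = None
    for line in diff_text.splitlines():
        if line.startswith("diff --git "):
            # Header looks like: diff --git a/path b/path
            current_path = line.split(" b/", 1)[1]
            chunks[current_path] = []
        if current_path is not None:
            chunks[current_path].append(line)
    return {path: "\n".join(lines) for path, lines in chunks.items()}


def build_review_prompts(diff_text, max_chars=8000):
    """Build one review prompt per changed file, truncating very large chunks."""
    prompts = []
    for path, chunk in split_diff_by_file(diff_text).items():
        prompts.append(
            f"Review the following change to {path}. "
            "Flag bugs, risky edits, and missing tests.\n\n" + chunk[:max_chars]
        )
    return prompts
```

Each prompt would then go out as a separate API request, with the responses posted back to the pull request as comments.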

Consequently, the API's extensibility makes it a foundation for building advanced tools. Many open-source projects leverage it to create domain-specific assistants, from SQL query optimizers to UI design generators.

Key Features: Claude Code vs Claude API Head-to-Head

Comparing features reveals how each tool addresses AI coding differently. Claude Code offers an out-of-the-box CLI experience, complete with built-in commands for file manipulation and execution. Developers activate it in their terminal, and it handles tasks agentically, such as running git diffs or cargo checks in Rust projects.

In contrast, Claude API provides raw access, requiring developers to build their interfaces. This means greater customization but also more development overhead.

Additionally, Claude Code includes context management features, like summarizing long conversations to preserve key details within token limits. The API, however, leaves this to the user, who must implement truncation or summarization logic.
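A simple version of the truncation logic an API user might write is sketched below. It keeps the most recent messages that fit a token budget. The four-characters-per-token estimate is a rough assumption and not a real tokenizer, and dropping a leading assistant turn is a conservative choice so the trimmed history starts with a user message.

```python
def estimate_tokens(text):
    """Rough heuristic: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def trim_history(messages, budget_tokens):
    """Keep the newest messages whose estimated size fits within the budget."""
    kept = []
    used = 0
    # Walk backwards so the most recent turns are kept first
    for message in reversed(messages):
        cost = estimate_tokens(message["content"])
        if used + cost > budget_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()
    # Conservative choice: make the trimmed history start with a user turn
    while kept and kept[0]["role"] != "user":
        kept.pop(0)
    return kept
```

A production version would likely count tokens with a proper tokenizer and summarize the dropped turns instead of discarding them.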

Moreover, integration depth varies. Claude Code natively understands terminal environments, executing shell commands securely. Claude API, while versatile, needs explicit permissions and wrappers for similar functionality.

Transitioning to advanced capabilities, both support multi-model access, but Claude Code defaults to Sonnet with Opus options, whereas the API allows on-the-fly switching.

However, Claude Code's agentic nature enables autonomous workflows. For example, it can iterate on code until tests pass, a feature developers program manually with the API.
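To give a sense of what programming this loop manually involves, here is a minimal sketch. The propose_fix and run_tests callables are hypothetical: in practice the first would call the API with the latest test output as feedback, and the second would run the project's test suite.

```python
def iterate_until_green(propose_fix, run_tests, max_rounds=5):
    """Ask for code, run the tests, and feed failures back until they pass.

    propose_fix(feedback) returns candidate code; feedback is None on the
    first round and the previous test output afterwards.
    run_tests(code) returns a (passed, output) tuple.
    """
    feedback = None
    for round_number in range(1, max_rounds + 1):
        code = propose_fix(feedback)
        passed, output = run_tests(code)
        if passed:
            return code, round_number
        # Hand the failing output to the next proposal
        feedback = output
    raise RuntimeError(f"tests still failing after {max_rounds} rounds")
```

The max_rounds cap matters with a pay-per-token API, since every extra round is another billed request.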

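Furthermore, security considerations differ. Claude Code runs its file and shell operations locally, but the prompts and code context it sends for inference still travel to Anthropic's servers, just as direct API calls do, over encrypted connections. With the API, developers decide exactly which data each request includes.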

In terms of scalability, the API excels for team environments, as multiple instances can run concurrently without terminal conflicts.

Pros and Cons: Weighing the Trade-Offs

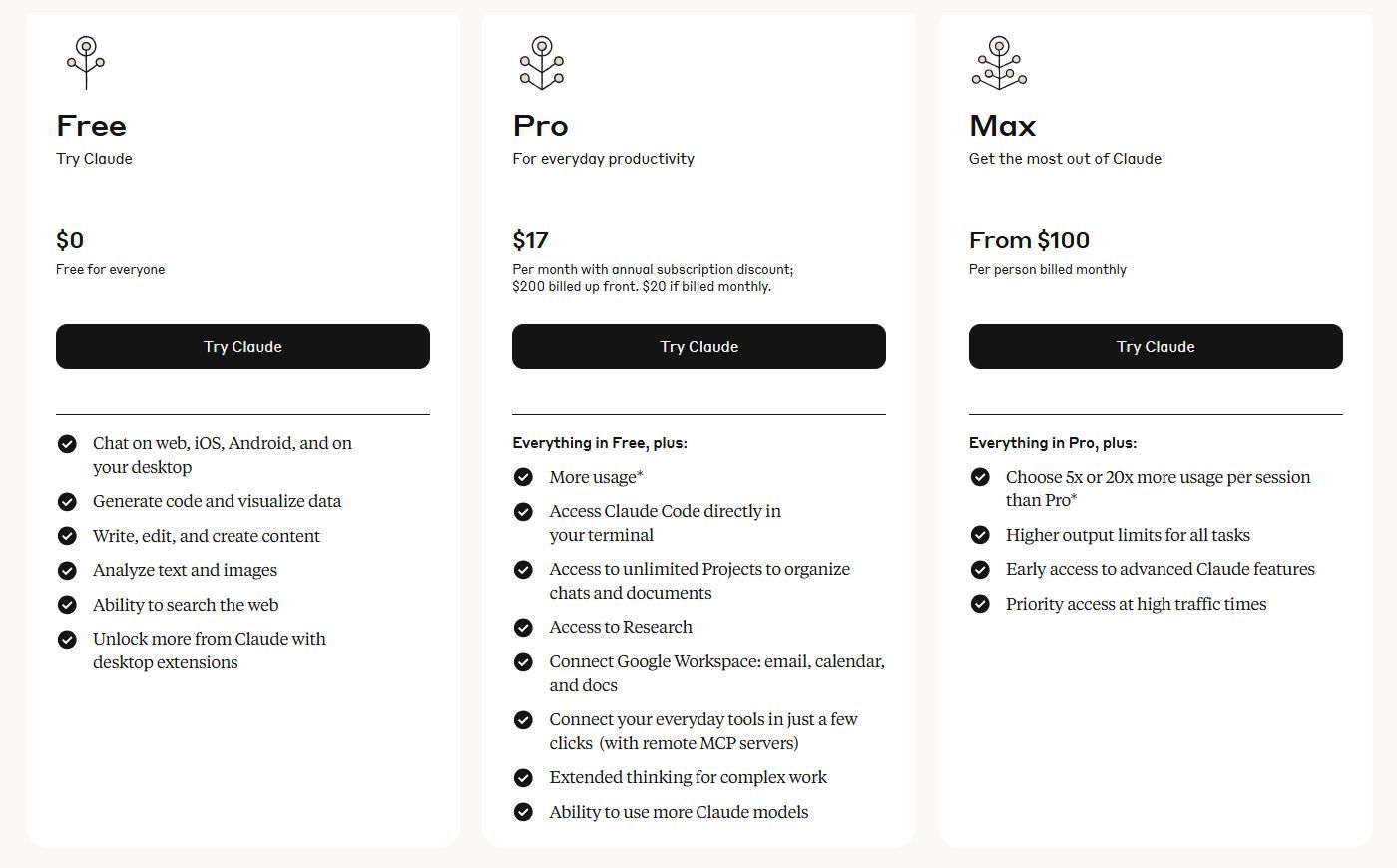

Evaluating pros and cons helps developers align tools with their needs. Claude Code boasts high productivity for solo developers, feeling like a pair-programming partner. Its fixed-cost model (via subscriptions) appeals to heavy users, potentially saving on per-request fees.

However, it suffers from variable response times due to overloads and occasional inconsistencies in output quality.

Conversely, Claude API ensures precision and reliability, with fast responses and uptime that developers often report as 100%. Developers value its flexibility for integrating into existing tools like Cline or custom scripts.

Nevertheless, costs accumulate quickly for intensive use, and it lacks the conversational flow of Claude Code.

Additionally, both tools handle errors differently. Claude Code might skip tasks prematurely, requiring restarts, while the API provides detailed error codes for debugging.

Moreover, community feedback highlights Claude Code's strength in bootstrapping projects but notes its struggles with large codebases due to context loss.

Use Cases: Real-World Applications for AI Coding

Applying these tools in practice demonstrates their value. Developers employ Claude Code for rapid prototyping, such as generating a full MERN stack app from a high-level description. It automates setup, code writing, and initial testing, allowing focus on business logic.

Transitioning to enterprise settings, teams use Claude API in code review bots, analyzing diffs and suggesting improvements via webhooks.

However, for educational purposes, Claude Code's interactive style teaches coding concepts through explanations and iterations.

Additionally, in open-source contributions, developers leverage the API for automated issue triaging, classifying bugs based on descriptions.

Furthermore, hybrid approaches emerge: using Claude Code for initial drafts and API for refinements in production scripts.

Pricing and Cost Analysis: Making the Economic Choice

Analyzing costs reveals clear distinctions. Claude API's token-based pricing suits light users; for example, occasional coding tasks cost under $1 monthly.

In contrast, Claude Pro (often bundled with Code access) charges $20 flat, including higher limits and model variety.

However, for daily coding that totals 510,000 input tokens and 510,000 output tokens monthly, API expenses reach $9.18 at the rates above (0.51 × $3 + 0.51 × $15), cheaper than Pro's $20.

Moreover, heavy developers report API costs hitting $25–$35 daily, making Code's fixed fee more economical.
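The comparison between metered and flat pricing can be checked with a short calculation. This sketch assumes the $3 and $15 per-million-token rates quoted earlier, the $20 Pro price, and that the 510,000-token figure counts input and output tokens separately, which is the reading that reproduces $9.18. The function names are illustrative.

```python
INPUT_USD_PER_MTOK = 3.00    # rate quoted earlier in this article
OUTPUT_USD_PER_MTOK = 15.00  # rate quoted earlier in this article
PRO_MONTHLY_USD = 20.00      # flat subscription price quoted above


def monthly_api_cost(input_tokens, output_tokens):
    """Metered cost in USD for a month of usage."""
    total = input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    return total / 1_000_000


def cheaper_option(input_tokens, output_tokens):
    """Return ("api", cost) or ("subscription", cost), whichever is lower."""
    api_cost = monthly_api_cost(input_tokens, output_tokens)
    if api_cost < PRO_MONTHLY_USD:
        return "api", api_cost
    return "subscription", PRO_MONTHLY_USD


def break_even_tokens():
    """Tokens in each direction where metered cost equals the flat fee,
    assuming equal input and output volume."""
    return PRO_MONTHLY_USD / (INPUT_USD_PER_MTOK + OUTPUT_USD_PER_MTOK) * 1_000_000
```

Under these assumptions the break-even point is roughly 1.1 million tokens in each direction per month. Below that the API is cheaper, and above it the flat fee wins.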

Transitioning to long-term value, API's scalability avoids subscription waste during low-activity periods.

Additionally, tools like 16x Prompt track API usage, optimizing spending.

Integrating with Complementary Tools: Codex CLI and Apidog

Enhancing these tools involves integrations. Codex CLI, OpenAI's terminal agent, serves as a competitor, offering similar features but with different models. Developers compare it to Claude Code for tasks like natural language to code translation.

However, Apidog stands out for API-focused workflows. It streamlines Claude API usage by automating request generation, debugging, and mocking responses. Developers download Apidog for free to prototype integrations swiftly.

Moreover, combining Apidog with Claude API allows testing AI-generated endpoints without live calls, reducing tokens spent on errors.

Codex CLI, for its part, provides lightweight execution, ideal for cross-model experiments.

Furthermore, using Apidog's collaboration features, teams share API specs derived from Claude outputs.

Performance Benchmarks: Measuring Efficiency

Benchmarking shows Claude Code achieving faster task completion in terminal-bound scenarios, with average times under 30 seconds for simple fixes.

In contrast, API calls skip the agent loop and return a single response directly, but require setup overhead.

However, for complex tasks, API's larger context window handles bigger projects better.

Additionally, error rates differ: Claude Code shows functional issues in 10-20% of cases, while the API maintains higher accuracy with proper prompting.

Moreover, scalability tests indicate API supporting thousands of concurrent requests, unlike Code's single-session limit.

User Experiences: Insights from Developers

Gathering experiences, developers praise Claude Code for its intuitive feel, often describing it as "transformative" for solo work.

However, they critique its inconsistencies, suggesting regular context resets.

Conversely, API users highlight reliability but note cost vigilance.

Additionally, forums like Reddit discuss hybrids, using Code for ideation and API for deployment.

Furthermore, case studies from Anthropic show productivity gains of 2-3x in agentic coding.

When to Choose Claude Code or Claude API

Deciding depends on needs. Select Claude Code for terminal-driven, agentic automation in personal projects.

Choose Claude API for programmable, scalable integrations in teams.

However, budget-conscious users favor API for low usage, while heavy coders prefer Code's flat rate.

Moreover, consider tools like Apidog to maximize API efficiency.

Picking the Right Tool for Your AI Coding Journey

Ultimately, both Claude Code and Claude API empower developers, but your choice hinges on workflow preferences. Assess your usage patterns, integrate supportive tools, and experiment to find the optimal fit. Small differences in approach often lead to significant productivity shifts, so test both thoroughly.