In the world of AI-powered applications, Anthropic's Claude API has become a go-to solution for many developers seeking advanced language processing capabilities. However, as with any popular service, you're likely to encounter rate limits that can temporarily halt your application's functionality. Understanding these limits and implementing strategies to work within them is crucial for maintaining a smooth user experience.

For AI-assisted coding, Claude has emerged as a powerful assistant for both casual users and developers. However, many users run into a common frustration: rate limits.

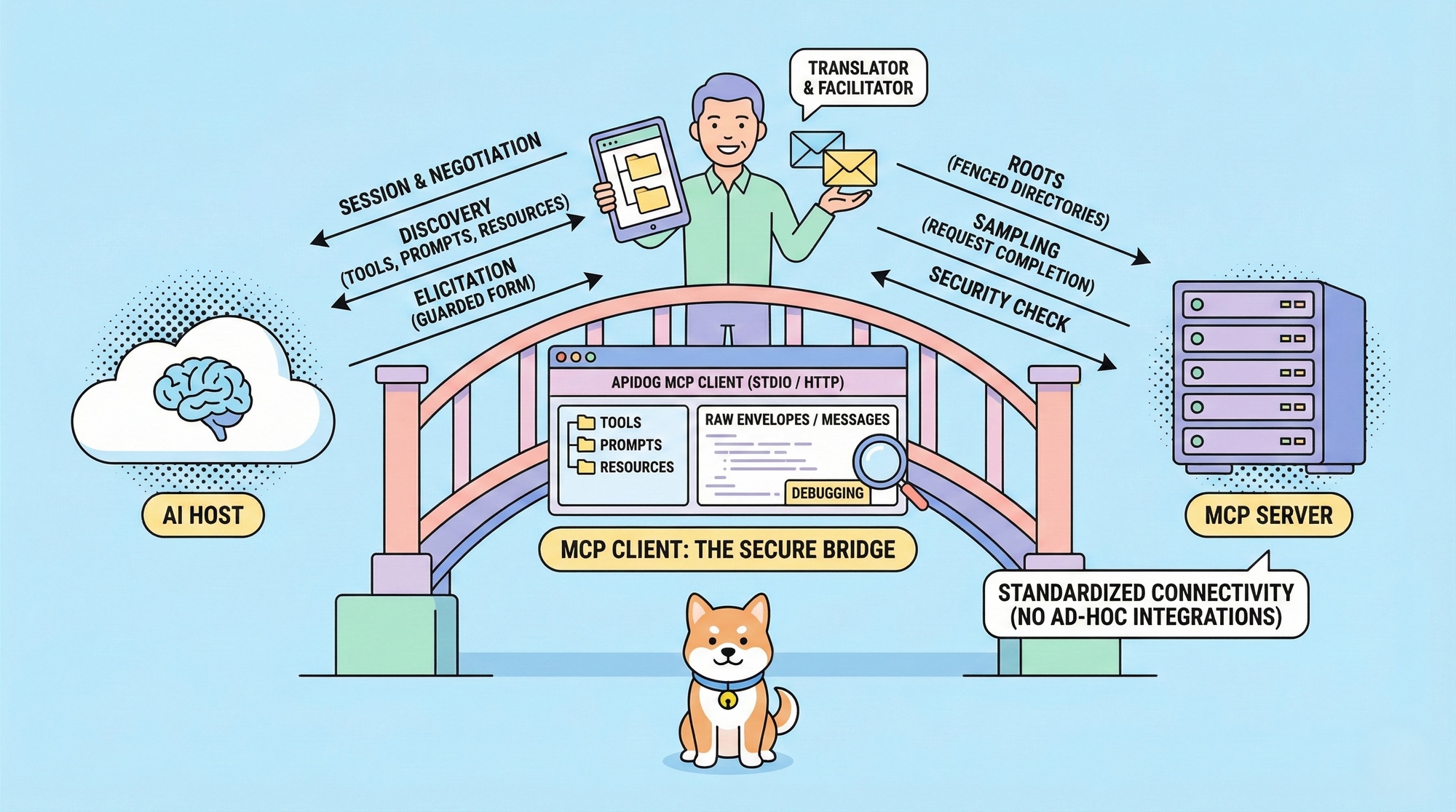

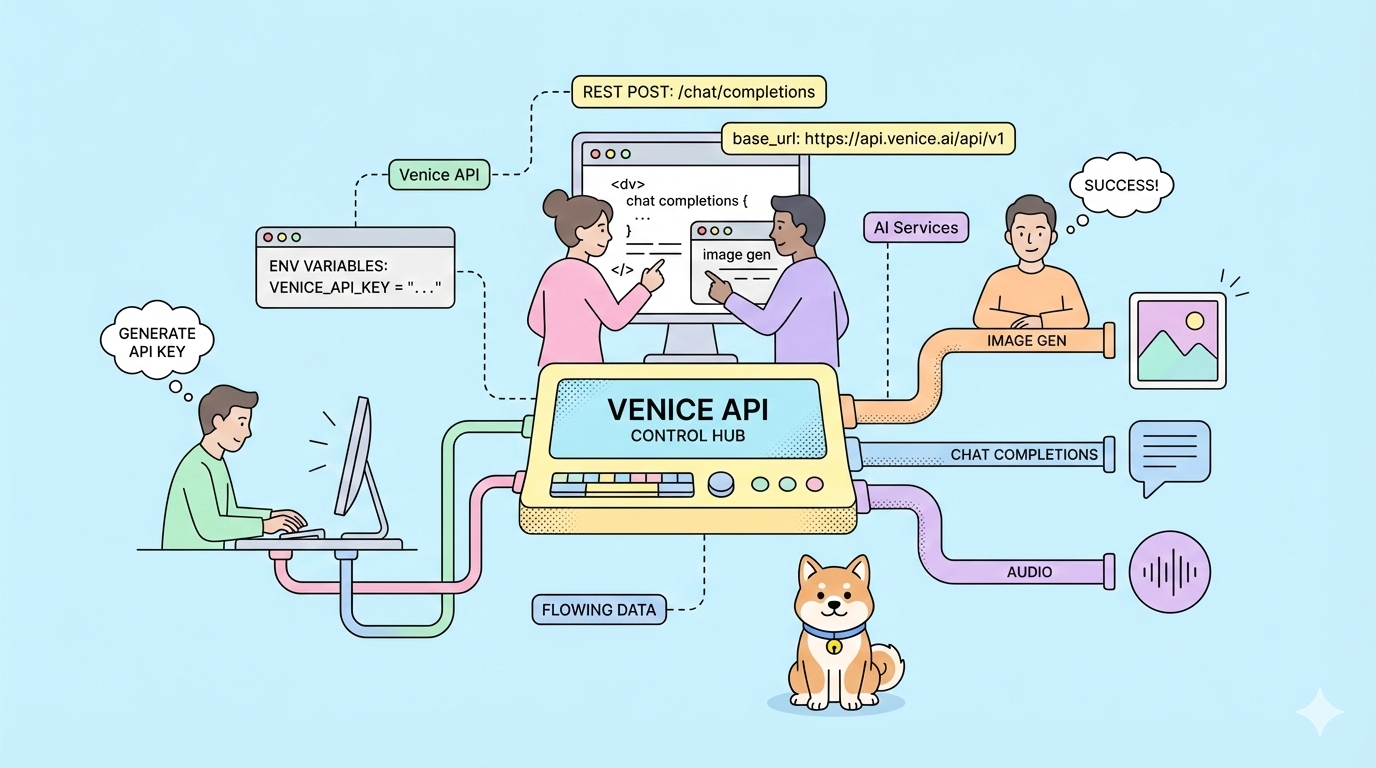

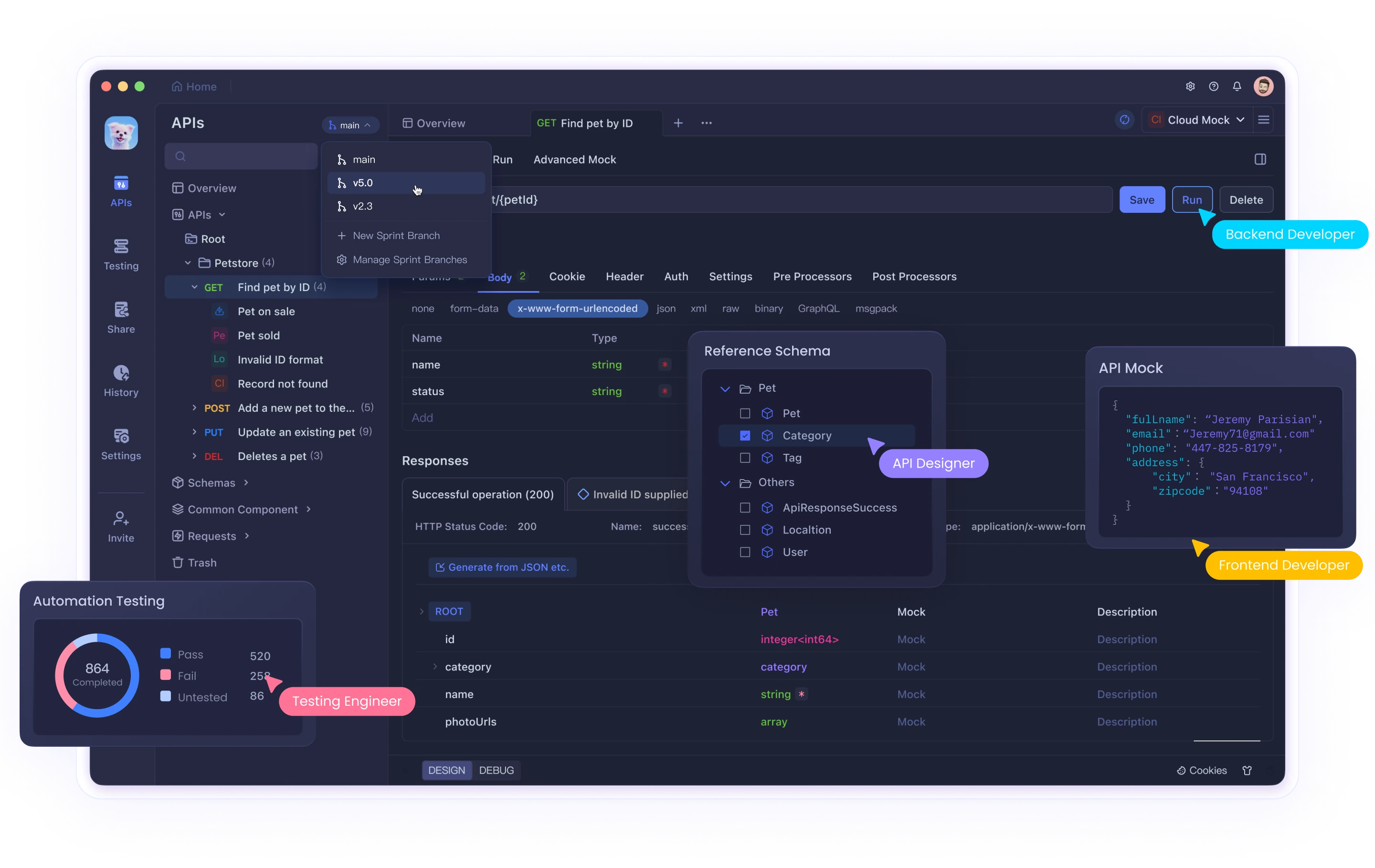

Whether you're using Claude's web interface or integrating with its API through tools like Cursor or Cline, hitting these limits can disrupt your workflow and productivity. While tools like Claude provide powerful AI capabilities, effectively managing API interactions requires proper testing and debugging tools. Apidog helps developers navigate these complexities when working with AI and other APIs.

This guide explains why Claude API rate limits exist, shows how to identify when you've hit them, and walks through three detailed solutions for working within them.

What Are Claude API's Rate Limits and Why Do They Exist?

Rate limits are restrictions imposed by API providers to control the volume of requests a user can make within a specific timeframe. Anthropic implements these limits for several important reasons:

- Server Resource Management: Preventing any single user from consuming too many computational resources

- Equitable Access: Ensuring fair distribution of API access across all users

- Abuse Prevention: Protecting against malicious activities like scraping or DDoS attacks

- Service Stability: Maintaining overall system performance during peak usage times

Claude API's Specific Rate Limits

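Claude's rate limits vary based on how you access it, and the exact figures change over time:

- Free Users: A limited number of messages that varies with demand and message length; Anthropic does not publish a fixed daily figure

- Pro Users: Roughly five times the usage of free users, with the allowance replenishing every few hours rather than at midnight

- API Users: Limits set per organization by usage tier, measured in requests per minute and in input and output tokens per minute, with custom limits available by agreement with Anthropic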

Additionally, during peak usage times, these limits might be more strictly enforced, and you may experience temporary throttling even before reaching your maximum allocation.

Identifying Rate Limit Issues

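You've likely hit a rate limit when your application receives a 429 Too Many Requests HTTP status code. The response typically includes headers with information about:

- When you can resume making requests (the retry-after header, in seconds)

- Your current limits (for example, anthropic-ratelimit-requests-limit)

- Remaining quota information (for example, anthropic-ratelimit-requests-remaining, plus anthropic-ratelimit-requests-reset for when the quota replenishes)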
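
As a minimal sketch of reading that information, the helper below summarizes rate limit headers from a plain dictionary. The header names (`retry-after` and the `anthropic-ratelimit-requests-*` family) and the timestamp format are assumptions based on Anthropic's documentation at the time of writing and should be verified against a real response; `summarize_rate_limit_headers` is a hypothetical helper, not part of any SDK.

```python
from datetime import datetime


def summarize_rate_limit_headers(headers):
    """Extract retry and quota details from response headers (a dict-like object)."""
    # Normalize names, since HTTP header names are case-insensitive
    h = {k.lower(): v for k, v in headers.items()}

    def as_number(name, cast):
        try:
            return cast(h[name])
        except (KeyError, ValueError):
            return None  # Header missing or not numeric

    def as_time(name):
        value = h.get(name)
        if value is None:
            return None
        # Assumed format: RFC 3339 timestamp such as 2025-01-01T00:00:30Z
        return datetime.fromisoformat(value.replace("Z", "+00:00"))

    return {
        "retry_after_seconds": as_number("retry-after", float),
        "requests_limit": as_number("anthropic-ratelimit-requests-limit", int),
        "requests_remaining": as_number("anthropic-ratelimit-requests-remaining", int),
        "requests_reset": as_time("anthropic-ratelimit-requests-reset"),
    }


# Made-up header values for illustration
example = {
    "Retry-After": "12",
    "anthropic-ratelimit-requests-limit": "50",
    "anthropic-ratelimit-requests-remaining": "0",
    "anthropic-ratelimit-requests-reset": "2025-01-01T00:00:30Z",
}
info = summarize_rate_limit_headers(example)
print(info["retry_after_seconds"], info["requests_remaining"])  # 12.0 0
```

Keeping the parsing separate from the HTTP client makes it easy to log these values or feed the retry delay into whatever backoff logic you use.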

Solution 1: Implement Proper Rate Limiting in Your Code

The most fundamental approach to handling API rate limits is implementing client-side rate limiting. This proactively prevents your application from exceeding the allowed request volume.

Using a Token Bucket Algorithm

The token bucket is a popular algorithm for rate limiting that works by:

- Maintaining a "bucket" that fills with tokens at a constant rate

- Consuming a token for each API request

- Blocking requests when no tokens are available

Here's a Python implementation:

    import threading
    import time

    import anthropic


    class TokenBucket:
        """Thread-safe token bucket: refills continuously, one token per request."""

        def __init__(self, tokens_per_second, max_tokens):
            self.tokens_per_second = tokens_per_second
            self.max_tokens = max_tokens
            self.tokens = max_tokens
            # Monotonic clock: unaffected by system clock adjustments
            self.last_refill = time.monotonic()
            self.lock = threading.Lock()

        def _refill_tokens(self):
            now = time.monotonic()
            elapsed = now - self.last_refill
            new_tokens = elapsed * self.tokens_per_second
            self.tokens = min(self.max_tokens, self.tokens + new_tokens)
            self.last_refill = now

        def get_token(self):
            """Take one token if available; return False otherwise."""
            with self.lock:
                self._refill_tokens()
                if self.tokens >= 1:
                    self.tokens -= 1
                    return True
                return False

        def wait_for_token(self, timeout=None):
            """Block until a token is available or the timeout (seconds) expires."""
            start_time = time.monotonic()
            while True:
                if self.get_token():
                    return True
                if timeout is not None and time.monotonic() - start_time > timeout:
                    return False
                time.sleep(0.1)  # Sleep to avoid busy waiting


    # Example usage with Claude API

    # Create a rate limiter (5 requests per second, max burst of 10)
    rate_limiter = TokenBucket(tokens_per_second=5, max_tokens=10)
    client = anthropic.Anthropic(api_key="your_api_key")


    def generate_with_claude(prompt, retries_left=3):
        # Wait for a token to become available
        if not rate_limiter.wait_for_token(timeout=30):
            raise TimeoutError("Timed out waiting for rate limit token")
        try:
            response = client.messages.create(
                model="claude-3-opus-20240229",
                max_tokens=1000,
                messages=[{"role": "user", "content": prompt}],
            )
            return response.content[0].text  # Text of the first content block
        except anthropic.RateLimitError:  # Raised by the SDK on HTTP 429
            if retries_left <= 0:
                raise  # Stop retrying instead of recursing forever
            print("Rate limit hit despite our rate limiting! Backing off...")
            time.sleep(10)  # Additional backoff
            return generate_with_claude(prompt, retries_left - 1)  # Retry

This implementation:

- Creates a token bucket that refills at a constant rate

- Waits for tokens to become available before making requests

- Implements additional backoff if rate limits are still encountered

Handling 429 Responses with Exponential Backoff

Even with proactive rate limiting, you might occasionally hit limits. Implementing exponential backoff helps your application recover gracefully:

    import random
    import time

    import anthropic

    # Assumes `client` and `rate_limiter` from the token bucket example above


    def call_claude_api_with_backoff(prompt, max_retries=5, base_delay=1):
        for retries in range(max_retries + 1):
            try:
                # Wait for rate limiter token
                rate_limiter.wait_for_token()
                # Make the API call
                response = client.messages.create(
                    model="claude-3-opus-20240229",
                    max_tokens=1000,
                    messages=[{"role": "user", "content": prompt}],
                )
                return response.content[0].text  # Text of the first content block
            except anthropic.RateLimitError:  # Raised by the SDK on HTTP 429
                if retries == max_retries:
                    raise  # Out of retries: surface the error to the caller
                # Calculate delay with exponential backoff and jitter
                delay = base_delay * (2 ** retries) + random.uniform(0, 0.5)
                print(f"Rate limited. Retrying in {delay:.2f} seconds...")
                time.sleep(delay)

This function:

- Attempts to make the API call

- If a 429 error occurs, it waits for an exponentially increasing time

- Adds random jitter to prevent request synchronization

- Gives up after a maximum number of retries
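
To make the delay arithmetic concrete, this sketch computes the backoff schedule without calling any API. `backoff_delays` is a hypothetical helper introduced for illustration; it uses the same formula as the function above, `base_delay * 2 ** retries`, with jitter made optional so the output is reproducible.

```python
import random


def backoff_delays(max_retries=5, base_delay=1.0, max_jitter=0.5, rng=None):
    """Return the wait (in seconds) before each retry: base_delay * 2**n + jitter."""
    delays = []
    for retries in range(max_retries):
        # Jitter is only added when a random generator is supplied
        jitter = rng.uniform(0, max_jitter) if rng is not None else 0.0
        delays.append(base_delay * (2 ** retries) + jitter)
    return delays


# Without jitter the waits double each time
print(backoff_delays())  # [1.0, 2.0, 4.0, 8.0, 16.0]

# With jitter, each wait gains up to max_jitter seconds
jittered = backoff_delays(rng=random.Random(42))
print([round(d, 2) for d in jittered])
```

With the defaults, a request that fails every time waits about 31 seconds in total before giving up, which is worth checking against any timeout your callers impose.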

Solution 2: Implement Request Queuing and Prioritization

For applications with varying levels of request importance, implementing a request queue with priority handling can optimize your API usage.

Building a Priority Queue System

    import heapq
    import itertools
    import threading
    import time
    from dataclasses import dataclass, field
    from typing import Callable

    import anthropic


    @dataclass(order=True)
    class PrioritizedRequest:
        priority: int
        sequence: int  # Tie-breaker: equal priorities run in insertion order
        execute_time: float = field(compare=False)
        callback: Callable = field(compare=False)
        args: tuple = field(default_factory=tuple, compare=False)
        kwargs: dict = field(default_factory=dict, compare=False)


    class ClaudeRequestQueue:
        def __init__(self, requests_per_minute=60):
            self.queue = []
            self.lock = threading.Lock()
            self.processing = False
            self.requests_per_minute = requests_per_minute
            self.interval = 60 / requests_per_minute
            self._counter = itertools.count()

        def add_request(self, callback, priority=0, delay=0, *args, **kwargs):
            """Add a request to the queue with the given priority."""
            with self.lock:
                request = PrioritizedRequest(
                    priority=-priority,  # Negate so higher values have higher priority
                    sequence=next(self._counter),
                    execute_time=time.monotonic() + delay,
                    callback=callback,
                    args=args,
                    kwargs=kwargs,
                )
                heapq.heappush(self.queue, request)
                if not self.processing:
                    self.processing = True
                    threading.Thread(target=self._process_queue, daemon=True).start()

        def _process_queue(self):
            """Process requests from the queue, respecting rate limits."""
            while True:
                with self.lock:
                    if not self.queue:
                        self.processing = False
                        return
                    # Peek at the highest priority request
                    request = self.queue[0]
                    wait_time = request.execute_time - time.monotonic()
                    if wait_time <= 0:
                        # Ready: remove the request from the queue
                        heapq.heappop(self.queue)
                if wait_time > 0:
                    # Not ready yet: sleep outside the lock so add_request is not
                    # blocked, then re-check in case a higher priority request arrived.
                    # Note: a delayed request at the head holds back lower priority ones.
                    time.sleep(min(wait_time, 0.1))
                    continue
                # Execute the request outside the lock
                try:
                    request.callback(*request.args, **request.kwargs)
                except Exception as e:
                    print(f"Error executing request: {e}")
                # Wait for the rate limit interval
                time.sleep(self.interval)


    # Example usage
    client = anthropic.Anthropic(api_key="your_api_key")
    queue = ClaudeRequestQueue(requests_per_minute=60)


    def process_result(result, callback):
        print(f"Got result: {result[:50]}...")
        if callback:
            callback(result)


    def make_claude_request(prompt, callback=None, priority=0, delay=0):
        def execute():
            try:
                response = client.messages.create(
                    model="claude-3-opus-20240229",
                    max_tokens=1000,
                    messages=[{"role": "user", "content": prompt}],
                )
                process_result(response.content[0].text, callback)
            except anthropic.RateLimitError:  # Raised by the SDK on HTTP 429
                # Re-queue with a delay if rate limited
                print("Rate limited, re-queuing...")
                make_claude_request(
                    prompt,
                    callback=callback,
                    priority=priority - 1,  # Lower priority for retries
                    delay=10,  # Wait 10 seconds before retrying
                )
            except Exception as e:
                print(f"Error: {e}")

        queue.add_request(execute, priority=priority, delay=delay)


    # Make some requests with different priorities
    make_claude_request("High priority question", priority=10)
    make_claude_request("Medium priority question", priority=5)
    make_claude_request("Low priority question", priority=1)

    # The worker is a daemon thread, so a script must keep its main thread
    # alive until the queued requests have finished.

This implementation:

- Creates a priority queue for API requests

- Processes requests based on priority and scheduled execution time

- Automatically throttles requests to stay under rate limits

- Handles retries with decreasing priority
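
The ordering rule is easy to check in isolation. The sketch below is illustrative only and uses made-up labels: it pushes `(negated priority, sequence, label)` tuples onto a `heapq` heap. Because `heapq` is a min-heap, negating the priority makes the largest value come out first, and the sequence number (an addition for this example) keeps equal priorities in insertion order.

```python
import heapq
import itertools

counter = itertools.count()  # Tie-breaker so equal priorities stay in insertion order
heap = []


def push(priority, label):
    # heapq is a min-heap, so store the negated priority
    heapq.heappush(heap, (-priority, next(counter), label))


push(1, "low")
push(10, "high")
push(5, "medium")
push(10, "high (second)")

order = []
while heap:
    _, _, label = heapq.heappop(heap)
    order.append(label)

print(order)  # ['high', 'high (second)', 'medium', 'low']
```

Without the sequence number, two entries with the same priority would be compared on their next field, which fails outright if that field is something unorderable such as a callback.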

Solution 3: Distribute Requests Across Multiple Instances

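For high-volume applications, distributing Claude API requests across multiple API keys can help you scale beyond the limits of a single quota. Keep in mind that Anthropic applies API rate limits per organization, so keys created within the same organization share one quota; this approach only adds capacity when the keys draw on separate quotas, such as keys from different organizations you are entitled to use.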

Load Balancing Across Multiple API Keys

    import threading
    from datetime import datetime

    import anthropic


    class APIKeyManager:
        def __init__(self, api_keys, requests_per_day_per_key):
            self.api_keys = {}
            self.lock = threading.Lock()
            # Initialize each API key's usage tracking
            for key in api_keys:
                self.api_keys[key] = {
                    'key': key,
                    'daily_limit': requests_per_day_per_key,
                    'used_today': 0,
                    'last_reset': datetime.now().date(),
                    'available': True,  # False while temporarily rate limited
                }

        def _reset_daily_counters(self):
            """Reset daily counters if it's a new day."""
            today = datetime.now().date()
            for key_info in self.api_keys.values():
                if key_info['last_reset'] < today:
                    key_info['used_today'] = 0
                    key_info['last_reset'] = today

        def get_available_key(self):
            """Get an available API key that hasn't exceeded its daily limit."""
            with self.lock:
                self._reset_daily_counters()
                available_keys = [
                    key_info for key_info in self.api_keys.values()
                    if key_info['available'] and key_info['used_today'] < key_info['daily_limit']
                ]
                if not available_keys:
                    return None
                # Choose the key with the fewest used requests today
                selected_key = min(available_keys, key=lambda k: k['used_today'])
                # Usage is counted here, when the key is handed out
                selected_key['used_today'] += 1
                return selected_key['key']

        def mark_key_used(self, api_key):
            """Count an extra request made with this key outside get_available_key().

            Do not call this for keys obtained from get_available_key(), which
            already counts the request; doing so would count it twice.
            """
            with self.lock:
                if api_key in self.api_keys:
                    self.api_keys[api_key]['used_today'] += 1

        def mark_key_rate_limited(self, api_key, retry_after=60):
            """Mark a key as temporarily unavailable due to rate limiting."""
            with self.lock:
                if api_key not in self.api_keys:
                    return
                self.api_keys[api_key]['available'] = False

            # Start a timer to mark the key available again after the retry period
            def make_available_again():
                with self.lock:
                    if api_key in self.api_keys:
                        self.api_keys[api_key]['available'] = True

            timer = threading.Timer(retry_after, make_available_again)
            timer.daemon = True
            timer.start()


    # Example usage
    api_keys = [
        "key1_abc123",
        "key2_def456",
        "key3_ghi789",
    ]
    key_manager = APIKeyManager(api_keys, requests_per_day_per_key=100)


    def call_claude_api_distributed(prompt):
        api_key = key_manager.get_available_key()
        if not api_key:
            raise RuntimeError(
                "No available API keys: all are rate limited or at their daily limit"
            )
        client = anthropic.Anthropic(api_key=api_key)
        try:
            response = client.messages.create(
                model="claude-3-opus-20240229",
                max_tokens=1000,
                messages=[{"role": "user", "content": prompt}],
            )
            return response.content[0].text  # Text of the first content block
        except anthropic.RateLimitError as e:  # Raised by the SDK on HTTP 429
            # Use the retry-after header if present, otherwise a default
            try:
                retry_after = float(e.response.headers.get("retry-after"))
            except (TypeError, ValueError):
                retry_after = 60  # Default
            key_manager.mark_key_rate_limited(api_key, retry_after)
            # Try again with a different key; this ends once every key is unavailable
            return call_claude_api_distributed(prompt)

This approach:

- Manages multiple API keys and tracks their usage

- Distributes requests to stay under per-key rate limits

- Handles rate limit responses by temporarily removing affected keys from rotation

- Automatically resets usage counters daily

Best Practices for Managing Claude API Rate Limits

Beyond the three solutions above, here are some additional best practices:

Monitor Your Usage Proactively

- Implement dashboards to track your API usage

- Set up alerts for when you approach rate limits

- Regularly review usage patterns to identify optimization opportunities
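
One lightweight way to approximate this inside your own process is a sliding-window counter that flags when usage approaches a configured limit. The sketch below is an assumption-laden starting point, not a replacement for real monitoring: `UsageMonitor`, its 80% alert ratio, and the limit of 50 requests per minute are all illustrative values, and timestamps can be injected so the behavior is easy to test.

```python
import time
from collections import deque


class UsageMonitor:
    """Counts requests in a sliding window and flags when usage nears a limit."""

    def __init__(self, limit, window_seconds=60.0, alert_ratio=0.8):
        self.limit = limit
        self.window_seconds = window_seconds
        self.alert_ratio = alert_ratio
        self.timestamps = deque()

    def record(self, now=None):
        """Record one request; `now` can be injected for testing."""
        self.timestamps.append(time.monotonic() if now is None else now)

    def usage(self, now=None):
        """Return the number of requests inside the current window."""
        now = time.monotonic() if now is None else now
        # Drop timestamps that have aged out of the window
        while self.timestamps and self.timestamps[0] <= now - self.window_seconds:
            self.timestamps.popleft()
        return len(self.timestamps)

    def should_alert(self, now=None):
        """True once usage reaches alert_ratio of the limit."""
        return self.usage(now) >= self.alert_ratio * self.limit


monitor = UsageMonitor(limit=50, window_seconds=60, alert_ratio=0.8)
for second in range(40):
    monitor.record(now=float(second))  # Simulate one request per second

print(monitor.usage(now=40.0), monitor.should_alert(now=40.0))  # 40 True
print(monitor.usage(now=90.0), monitor.should_alert(now=90.0))  # 9 False
```

Calling `record()` next to each API call and checking `should_alert()` gives you a hook for logging or notifications before the API starts returning 429 responses.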

Implement Graceful Degradation

- Design your application to provide alternative responses when rate limited

- Consider caching previous responses for similar queries

- Provide transparent feedback to users when experiencing rate limits
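
As a sketch of the caching idea, the class below stores responses in memory under a hash of the normalized prompt and expires them after a fixed time. Everything here is hypothetical scaffolding: `ResponseCache`, `answer`, and the `fake_api` stand-in are illustrative names, and the normalization only catches prompts that differ in case or whitespace, not prompts that are merely similar in meaning.

```python
import hashlib
import time


class ResponseCache:
    """In-memory cache keyed by a hash of the normalized prompt, with expiry."""

    def __init__(self, ttl_seconds=3600.0):
        self.ttl_seconds = ttl_seconds
        self._entries = {}

    @staticmethod
    def _key(prompt):
        # Collapse whitespace and case so trivially different prompts share a key
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt, now=None):
        now = time.monotonic() if now is None else now
        entry = self._entries.get(self._key(prompt))
        if entry is None or now - entry[0] > self.ttl_seconds:
            return None  # Missing or expired
        return entry[1]

    def put(self, prompt, response, now=None):
        now = time.monotonic() if now is None else now
        self._entries[self._key(prompt)] = (now, response)


def answer(prompt, cache, call_api):
    """Serve from the cache when possible; `call_api` is any function prompt -> text."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = call_api(prompt)
    cache.put(prompt, response)
    return response


calls = []


def fake_api(prompt):  # Stand-in for a real Claude call
    calls.append(prompt)
    return f"answer to: {prompt}"


cache = ResponseCache()
answer("What is a rate limit?", cache, fake_api)
answer("what is a  rate limit?", cache, fake_api)  # Served from the cache
print(len(calls))  # 1
```

The same cache can back a degraded mode: when a request is rate limited, returning a slightly stale cached answer is often better than returning an error.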

Optimize Your Prompts

- Reduce unnecessary API calls by crafting more effective prompts

- Combine related queries into single requests where possible

- Pre-process inputs to eliminate the need for clarification requests
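
Combining related queries can be as simple as numbering them in one prompt and splitting the numbered reply afterwards. The two helpers below are hypothetical and make a strong assumption: that the model follows the requested "number, period, answer" format. Real replies may deviate, so treat the parser as a sketch and handle `None` entries.

```python
def combine_prompts(questions):
    """Merge several short questions into one numbered prompt."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    return (
        "Answer each question below. Start every answer on a new line "
        "with its number followed by a period.\n\n" + numbered
    )


def split_answers(text, count):
    """Split a numbered reply back into `count` answers (None where missing)."""
    answers = [None] * count
    current = None
    for line in text.splitlines():
        head, sep, rest = line.partition(". ")
        if sep and head.isdigit() and 1 <= int(head) <= count:
            # A line such as "2. ..." starts the answer to question 2
            current = int(head) - 1
            answers[current] = rest.strip()
        elif current is not None and line.strip():
            # Any other non-empty line continues the current answer
            answers[current] += " " + line.strip()
    return answers


questions = ["What is a 429 status code?", "What is exponential backoff?"]
prompt = combine_prompts(questions)

# Example reply text, written by hand for illustration
reply = "1. Too Many Requests.\n2. Waiting longer after each failure."
print(split_answers(reply, len(questions)))
# ['Too Many Requests.', 'Waiting longer after each failure.']
```

Two questions answered in one request count once against a requests-per-minute limit, although the combined request still consumes tokens for both.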

Communicate with Anthropic

- For production applications, consider upgrading to higher-tier plans

- Reach out to Anthropic about custom rate limits for your specific use case

- Stay informed about platform updates and changes to rate limiting policies

Conclusion

Rate limits are an inevitable part of working with any powerful API like Claude. By implementing the solutions outlined in this article (proper rate limiting code, request queuing, and distributed request handling), you can build robust applications that gracefully handle these limitations.

Remember that rate limits exist to ensure fair access and system stability for all users. Working within these constraints not only improves your application's reliability but also contributes to the overall health of the ecosystem.

With careful planning and implementation of these strategies, you can maximize your use of Claude's powerful AI capabilities while maintaining a smooth experience for your users, even as your application scales.