If you’ve ever needed to capture, process, and react to data the moment it happens, or you’re just getting started with real-time data processing, you’ve probably run into event streaming solutions.

From stock price fluctuations to IoT sensor readings to payment transaction logs, event streaming has quietly become the backbone of real-time applications. Whether you're building scalable applications, handling massive data flows, or aiming for real-time analytics, event streaming solutions are game-changers.

And here’s the truth: choosing the right event streaming platform can make or break your system’s scalability, reliability, and speed.

Now, without further ado, let’s explore the best event streaming solutions in 2025, highlighting what makes each unique and why they might fit your specific needs.

What is Event Streaming and Why Should You Care?

Let’s make this simple.

Event streaming is the process of capturing and delivering a continuous stream of data “events” as they happen, so you can process them in near real time.

Think of it like:

- Your credit card company instantly detecting fraudulent charges.

- A ride-sharing app updating your driver’s location every second.

- A logistics company tracking packages across a global network.

Without event streaming solutions, all of this would be delayed, clunky, and way less reliable. With organizations relying more and more on data-driven decisions, choosing the right event streaming solution is crucial. It should be scalable, reliable, and easy to integrate with your ecosystem.
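To make the idea concrete, here is a minimal sketch of reacting to events one at a time as they arrive, using the fraud-detection example above. Everything in it is an assumption for illustration: the `CardCharge` shape, the `flag_suspicious` function, and the toy rule (a large amount or a sudden change of country) are hypothetical, and a plain Python list stands in for a live stream.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class CardCharge:
    """One event: a single card charge (hypothetical shape)."""
    card_id: str
    amount: float
    country: str


def flag_suspicious(charges: Iterable[CardCharge], limit: float = 1000.0) -> Iterator[CardCharge]:
    """Yield suspicious charges one at a time, as each event is consumed.

    Toy rule: flag a charge if it exceeds `limit`, or if it comes from a
    different country than the previous charge on the same card.
    """
    last_country = {}  # card_id -> country of the most recent charge
    for charge in charges:
        previous = last_country.get(charge.card_id)
        if charge.amount > limit or (previous is not None and previous != charge.country):
            yield charge
        last_country[charge.card_id] = charge.country


# In a real system this would be a live stream from a broker, not a list.
stream = [
    CardCharge("card-1", 25.0, "US"),
    CardCharge("card-2", 4200.0, "DE"),
    CardCharge("card-1", 30.0, "BR"),
    CardCharge("card-1", 12.0, "BR"),
]

for alert in flag_suspicious(stream):
    print(f"ALERT {alert.card_id}: {alert.amount:.2f} in {alert.country}")
```

Because `flag_suspicious` is a generator, each alert is produced as soon as its event is read, rather than after the whole batch has been collected. That is the core difference between streaming and batch processing.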

How to Choose the Right Event Streaming Solution

So how do you pick the best solution? When evaluating an event streaming platform, look at:

- Scalability: Can it handle millions of events per second without breaking a sweat?

- Latency: Are you getting near-instant processing or waiting seconds (which can feel like forever in real-time apps)?

- Flexibility: Is it suitable for various use cases and technologies?

- Integration: Does it play nicely with cloud services, APIs, and data pipelines?

- Reliability: Is data delivery guaranteed, even during failures?

- Cost: Can you run it cost-effectively at scale?

- Ease of Use: How straightforward is it to deploy and manage?

- Ecosystem Integration: Does it integrate well with other tools, like API managers such as Apidog?

- Community and Support: Is there strong backing from developers and vendors when you need help solving problems?

- Innovations: Is it still getting new features and improvements that keep it future-proof?
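One way to turn a checklist like this into a decision is a simple weighted score. The sketch below is only an illustration: the platform names, the weights, and the 1-to-5 scores are all made-up placeholders that you would replace with your own priorities and evaluation results.

```python
def rank_platforms(scores, weights):
    """Return (name, weighted_score) pairs, best first.

    `scores` maps platform name -> {criterion: score}, and `weights` maps
    criterion -> importance. Both are supplied by you, not measured here.
    """
    total_weight = sum(weights.values())
    ranked = []
    for name, per_criterion in scores.items():
        weighted = sum(weights[c] * per_criterion[c] for c in weights) / total_weight
        ranked.append((name, round(weighted, 2)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical weights: higher means the criterion matters more to your team.
weights = {"scalability": 3, "latency": 2, "reliability": 3, "cost": 1, "ease_of_use": 1}

# Hypothetical 1-5 scores for two placeholder platforms.
scores = {
    "platform_a": {"scalability": 5, "latency": 4, "reliability": 5, "cost": 2, "ease_of_use": 2},
    "platform_b": {"scalability": 3, "latency": 5, "reliability": 4, "cost": 4, "ease_of_use": 5},
}

for name, score in rank_platforms(scores, weights):
    print(f"{name}: {score}")
```

The numbers matter less than the exercise: writing the weights down forces the team to agree on what it cares about before comparing vendors.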

Alright, here’s the cream of the crop for event streaming this year, ranked based on performance, popularity and ecosystem.

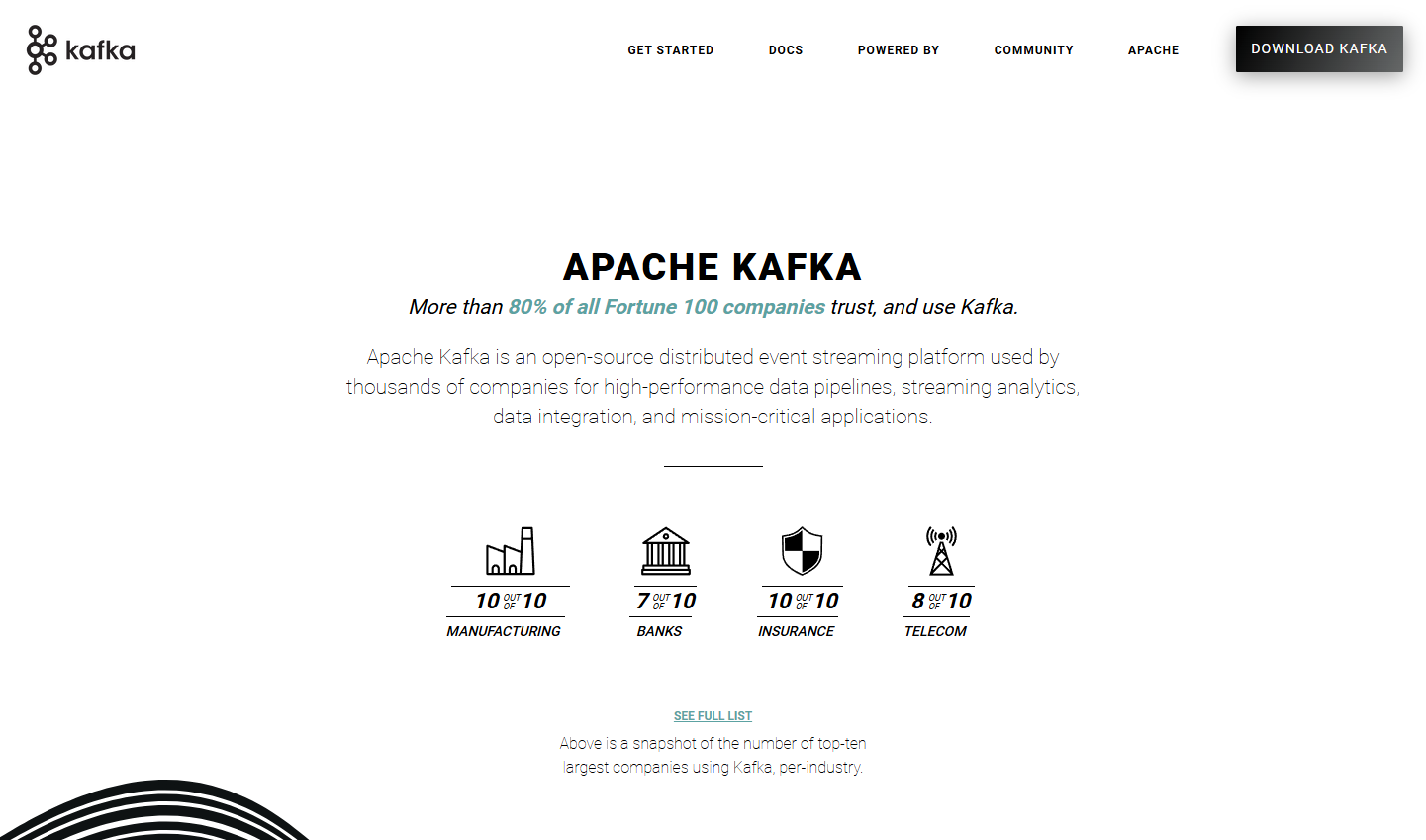

1. Apache Kafka

If event streaming had a celebrity hall of fame, Apache Kafka would be front and center. It’s an open-source distributed event streaming platform that, in large deployments, handles trillions of events a day. Originally developed at LinkedIn, Kafka is now one of the most widely used event streaming platforms in the world, and it’s great for building real-time pipelines and streaming apps.

Why it’s great:

- Handles high-throughput workloads like a champ.

- Strong persistence guarantees: events are written to disk and replicated across brokers, so they don’t just disappear.

- Huge open-source ecosystem.

- Strong community support.

Best for: Enterprises and developers who want maximum control and flexibility.

Downsides:

- Steep learning curve for beginners.

- Requires careful cluster management.
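The persistence point is easier to see with a toy model. The sketch below is not Kafka's API; it is a small in-memory stand-in (the `EventLog` class and its method names are hypothetical) for the general idea of an append-only log where reading does not delete events and each consumer group tracks its own position.

```python
class EventLog:
    """Toy in-memory append-only log (illustrative only, not Kafka's API)."""

    def __init__(self):
        self._events = []        # events are only ever appended, never removed
        self._next_offset = {}   # consumer group name -> next offset to read

    def append(self, event):
        """Store an event and return its offset (its position in the log)."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, group, max_events=10):
        """Return the next batch for `group` and advance only that group's offset."""
        start = self._next_offset.get(group, 0)
        batch = self._events[start:start + max_events]
        self._next_offset[group] = start + len(batch)
        return batch

    def seek(self, group, offset):
        """Move a group's position so its next poll starts at `offset`."""
        self._next_offset[group] = offset


log = EventLog()
for event in ["order-created", "order-paid", "order-shipped"]:
    log.append(event)

print(log.poll("billing"))    # billing reads all three events
print(log.poll("analytics"))  # analytics independently reads the same three
log.seek("billing", 1)        # rewind billing to offset 1
print(log.poll("billing"))    # billing re-reads the last two events
```

Two consumers reading the same events without interfering, plus the ability to rewind and replay, is what separates a log-based platform from a traditional queue where a message is gone once it has been consumed.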

2. Confluent

Confluent is basically Kafka on steroids. Founded by the original creators of Kafka, it builds on Kafka and adds enterprise features such as a schema registry, managed services, cloud-native scaling, enhanced security, and user-friendly interfaces.

Key highlights:

- Fully managed Kafka in the cloud.

- Pre-built connectors for databases, cloud services, and more.

- Advanced monitoring and governance tools.

Best for: Teams that want Kafka’s power without dealing with operational headaches.

3. Amazon Kinesis

Amazon Kinesis is AWS’s fully managed real-time streaming service. If you’re already deep into the AWS ecosystem, it’s the obvious choice, since it’s tightly integrated with AWS Lambda, S3, and Redshift.

Benefits of Kinesis:

- Seamless AWS integration.

- Scales automatically in on-demand capacity mode.

- Great for analytics and machine learning pipelines.

Best for: Businesses fully committed to AWS.

4. Azure Event Hubs

Azure Event Hubs is Microsoft’s answer to high-volume streaming ingestion. It’s built for scenarios like IoT data, telemetry, and application logging, and it’s a natural fit for teams already using Microsoft’s cloud services.

Why it stands out:

- Supports millions of events per second.

- Integrates with Azure Stream Analytics and Power BI.

- Built-in data retention and replay.

Best for: Azure-based infrastructures and enterprise data teams.
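Retention and replay are worth a quick illustration. The sketch below is a toy model, not the Event Hubs SDK: the `RetainedStream` class, its methods, and the 60-second retention window are all hypothetical. It shows the general idea that events stay available for a fixed window and that a consumer can re-read from any point in time still inside that window.

```python
class RetainedStream:
    """Toy stream that keeps events for a fixed time window (illustrative only)."""

    def __init__(self, retention_seconds):
        self.retention_seconds = retention_seconds
        self._events = []  # list of (timestamp, payload), oldest first

    def publish(self, timestamp, payload):
        """Append an event, then drop anything older than the retention window."""
        self._events.append((timestamp, payload))
        cutoff = timestamp - self.retention_seconds
        self._events = [(ts, p) for ts, p in self._events if ts >= cutoff]

    def replay_from(self, timestamp):
        """Return payloads of all retained events at or after `timestamp`."""
        return [p for ts, p in self._events if ts >= timestamp]


telemetry = RetainedStream(retention_seconds=60)
telemetry.publish(0, "boot")
telemetry.publish(30, "temp=21")
telemetry.publish(70, "temp=22")    # "boot" (t=0) now falls outside the window
telemetry.publish(100, "temp=23")   # "temp=21" (t=30) now falls outside the window

print(telemetry.replay_from(0))     # only the events still retained
print(telemetry.replay_from(80))    # replay from a later point in time
```

The practical takeaway is that the retention window bounds how far back a new or recovering consumer can replay, so it should be sized to your longest expected outage or backfill.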

5. Google Cloud Pub/Sub

Google Cloud Pub/Sub is the backbone of many event-driven systems on Google Cloud Platform. It provides global real-time messaging with at-least-once delivery.

Notable features:

- Auto-scaling to handle spikes.

- Global distribution.

- Low operational overhead.

Best for: Developers building global, cloud-native apps on GCP.
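At-least-once delivery has a practical consequence: the same message can arrive more than once, so consumers should be idempotent. The sketch below is a toy model rather than the Pub/Sub client library. The `AtLeastOnceQueue` and `IdempotentConsumer` classes are hypothetical and only illustrate redelivery of unacknowledged messages and de-duplication by message ID.

```python
class AtLeastOnceQueue:
    """Toy queue that redelivers every message until it is acknowledged."""

    def __init__(self):
        self._pending = {}  # message_id -> payload, in publish order

    def publish(self, message_id, payload):
        self._pending[message_id] = payload

    def pull(self):
        """Return all unacknowledged messages (so unacked ones come back again)."""
        return list(self._pending.items())

    def ack(self, message_id):
        self._pending.pop(message_id, None)


class IdempotentConsumer:
    """Applies each message at most once by remembering the IDs it has seen."""

    def __init__(self):
        self.seen_ids = set()
        self.total = 0

    def handle(self, message_id, amount):
        if message_id in self.seen_ids:
            return False  # duplicate delivery: skip the side effect
        self.seen_ids.add(message_id)
        self.total += amount
        return True


queue = AtLeastOnceQueue()
consumer = IdempotentConsumer()
queue.publish("m1", 10)
queue.publish("m2", 5)

# First delivery: both messages are processed, but the ack for m2 is "lost".
for message_id, amount in queue.pull():
    consumer.handle(message_id, amount)
    if message_id != "m2":
        queue.ack(message_id)

# Redelivery: m2 arrives again, is recognized as a duplicate, and is acked.
for message_id, amount in queue.pull():
    consumer.handle(message_id, amount)
    queue.ack(message_id)

print(consumer.total)  # m2 was counted once even though it was delivered twice
```

In production the set of seen IDs would typically live in a database or cache rather than in memory, but the pattern is the same.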

6. Redpanda

Redpanda is a newer player that’s Kafka API compatible but claims lower latency and a modern architecture built for simplified operations. It has no ZooKeeper dependency and runs as a single binary.

Why consider Redpanda?

- Kafka API compatible (existing Kafka clients typically work without code changes).

- Lower latency than Kafka in many cases.

- Easier deployment.

Best for: Teams who want Kafka performance without the operational complexity.

7. Apache Pulsar

Apache Pulsar is an open-source event streaming platform designed for cloud-native environments, supporting both messaging and streaming with built-in multi-tenancy and geo-replication. It’s great for multi-tenant setups and long-term storage.

Main advantages:

- Built-in geo-replication.

- Supports both streaming and message queues.

- Tiered storage that offloads older data to cheaper storage for effectively unlimited retention.

Best for: Complex, distributed, and multi-region deployments.

8. NATS JetStream

NATS is a modern messaging system known for its simplicity and performance in cloud-native applications, and JetStream is its built-in persistence layer for streaming. It’s fast, lightweight, easy to run, and great for microservices and IoT.

Why it’s loved:

- Extremely low latency.

- Simple deployment.

- Kubernetes-native design.

- Flexible publish/subscribe and queue models.

Best for: Developers who value simplicity and speed over massive feature sets.
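The difference between the publish/subscribe and queue models mentioned above is easy to show with a toy broker. This is not the NATS client API; the `Broker` class and its method names are hypothetical, and round-robin is used as a simplifying assumption for how work is spread within a queue group.

```python
from collections import defaultdict


class Broker:
    """Toy broker showing fan-out subscribers versus queue groups (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)    # subject -> callbacks (all receive)
        self._queue_groups = defaultdict(list)   # (subject, group) -> callbacks (one receives)
        self._turn = defaultdict(int)            # (subject, group) -> messages sent so far

    def subscribe(self, subject, callback, queue_group=None):
        if queue_group is None:
            self._subscribers[subject].append(callback)
        else:
            self._queue_groups[(subject, queue_group)].append(callback)

    def publish(self, subject, message):
        # Publish/subscribe: every plain subscriber gets every message.
        for callback in self._subscribers[subject]:
            callback(message)
        # Queue model: within each group, exactly one member gets the message.
        for key, members in self._queue_groups.items():
            if key[0] == subject:
                members[self._turn[key] % len(members)](message)
                self._turn[key] += 1


audit, worker_a, worker_b = [], [], []
broker = Broker()
broker.subscribe("orders", audit.append)                          # fan-out subscriber
broker.subscribe("orders", worker_a.append, queue_group="workers")
broker.subscribe("orders", worker_b.append, queue_group="workers")

for order_id in range(4):
    broker.publish("orders", order_id)

print(audit)     # saw every message
print(worker_a)  # saw half of them
print(worker_b)  # saw the other half
```

Use fan-out when every service needs its own copy of an event, and a queue group when you want to spread the work of handling each event across several identical workers.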

9. RabbitMQ with Streams

RabbitMQ has been around since 2007, but with the stream support added in version 3.9, it’s now a viable event streaming option.

Why it’s great:

- Mature and stable.

- Easy to integrate.

- Good for smaller-scale event streaming needs.

Best for: Teams already using RabbitMQ who want streaming without switching platforms.

10. Materialize

Materialize offers streaming SQL for event-driven apps, making real-time data transformation easier through SQL queries on event streams.

Why it’s worth it:

- Real-time streaming SQL support.

- Simplifies complex event stream processing.

- Useful for analysts and developers working heavily with streaming data.
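To give a feel for what streaming SQL does under the hood, here is a rough sketch of an incrementally maintained aggregate. It is not Materialize's implementation or API. The `RunningTotals` class and the `payments` example are hypothetical, and the code only illustrates the idea that a query result is updated per event instead of being recomputed from scratch.

```python
from collections import defaultdict


class RunningTotals:
    """Keeps a GROUP BY sum up to date as events arrive (illustrative only).

    Conceptually similar to continuously maintaining the result of:
        SELECT account, SUM(amount_cents) FROM payments GROUP BY account
    """

    def __init__(self):
        self._totals = defaultdict(int)

    def apply(self, account, amount_cents):
        """Fold one event into the result; negative amounts model refunds."""
        self._totals[account] += amount_cents

    def snapshot(self):
        """Return the current query result as a plain dict."""
        return dict(self._totals)


view = RunningTotals()
for account, amount_cents in [("acme", 10_000), ("globex", 4_000), ("acme", -3_000)]:
    view.apply(account, amount_cents)
    print(view.snapshot())  # the "query result" is fresh after every event
```

Each event costs a constant amount of work here, no matter how many events came before it. That is what makes always-fresh query results practical on a high-volume stream.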

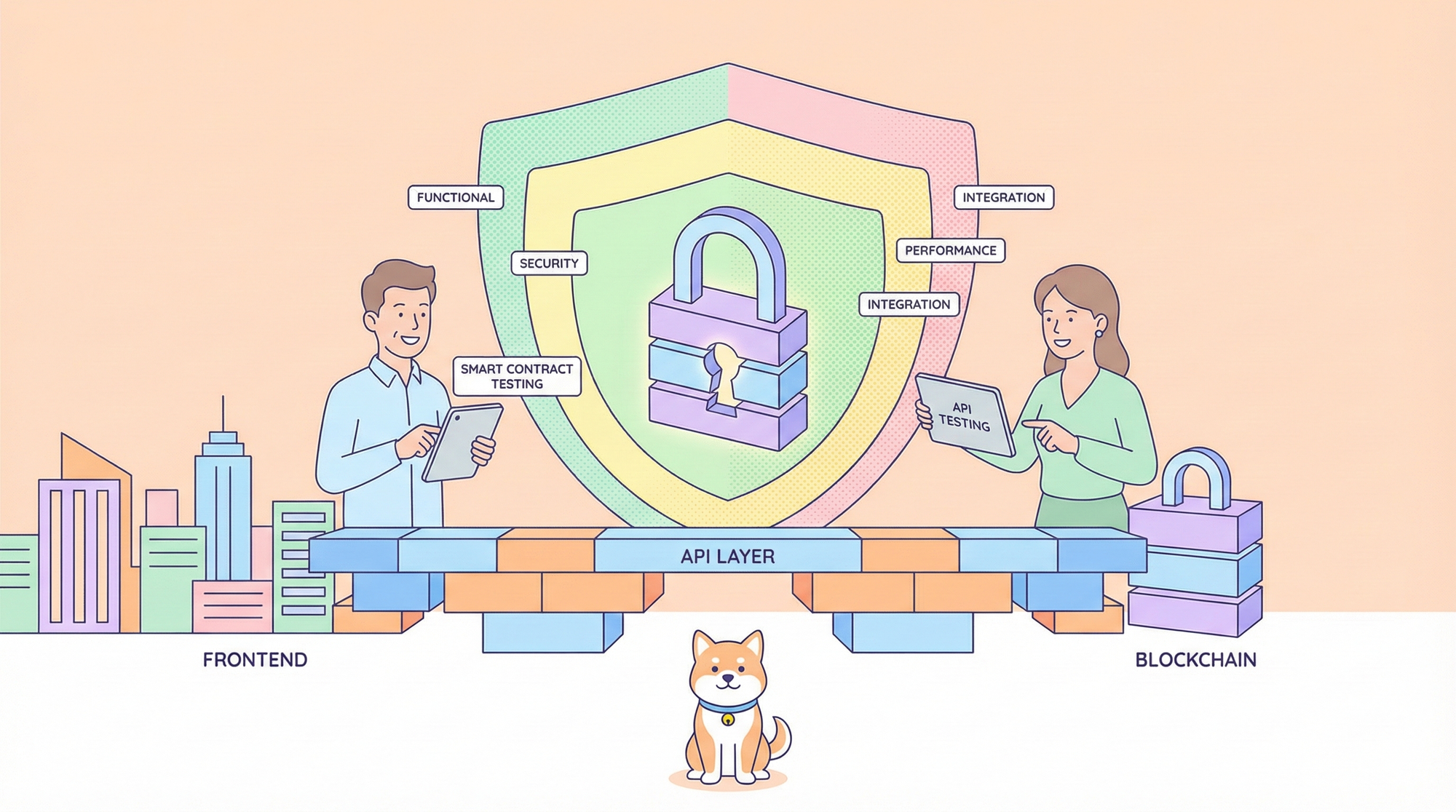

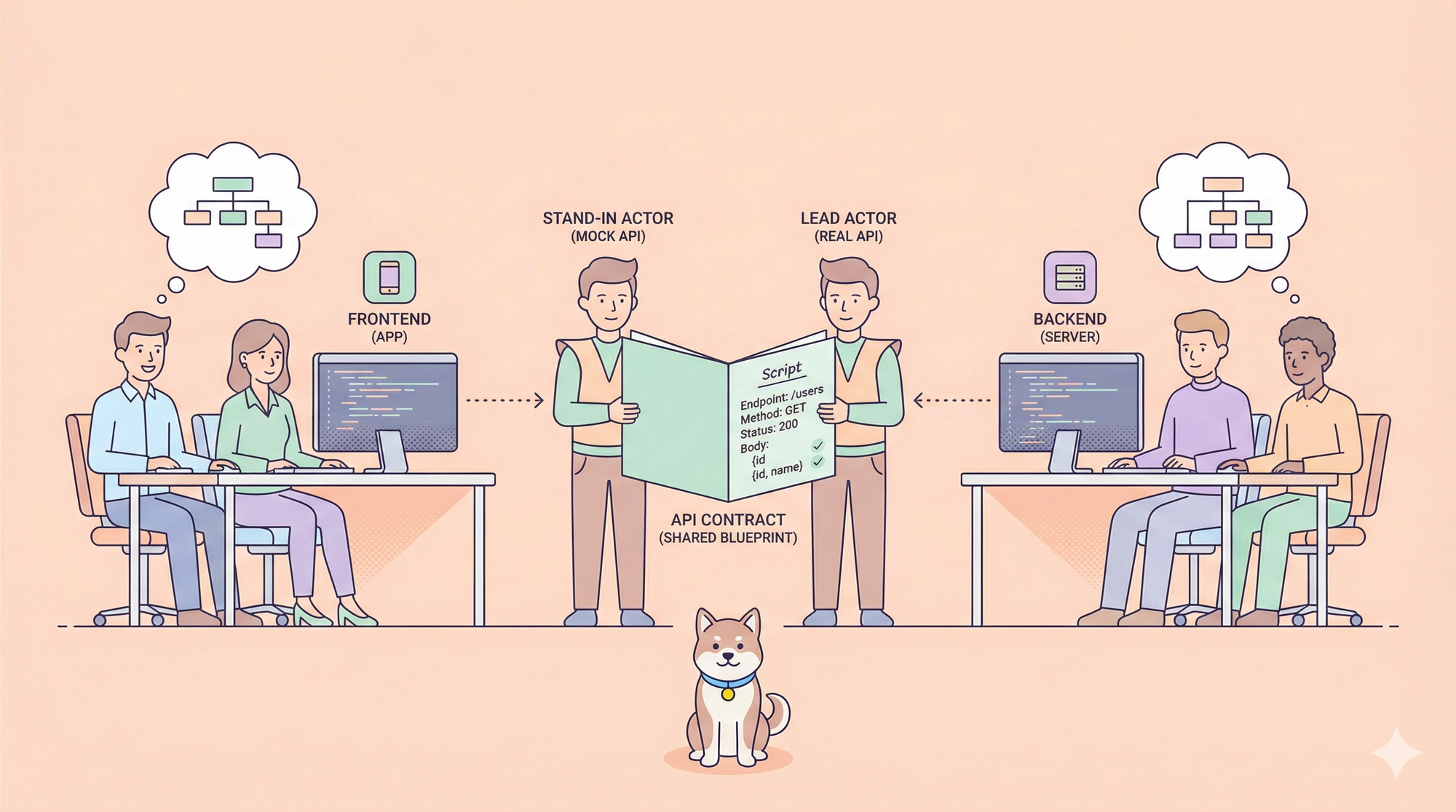

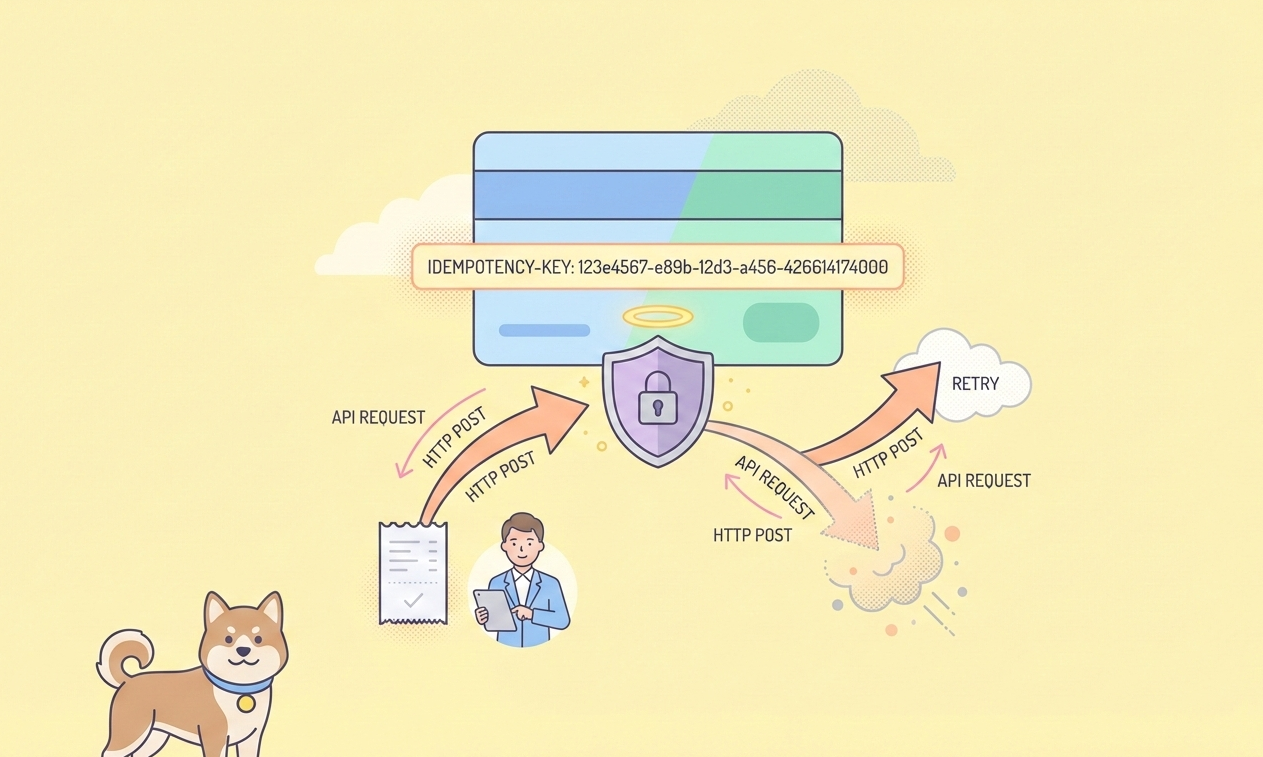

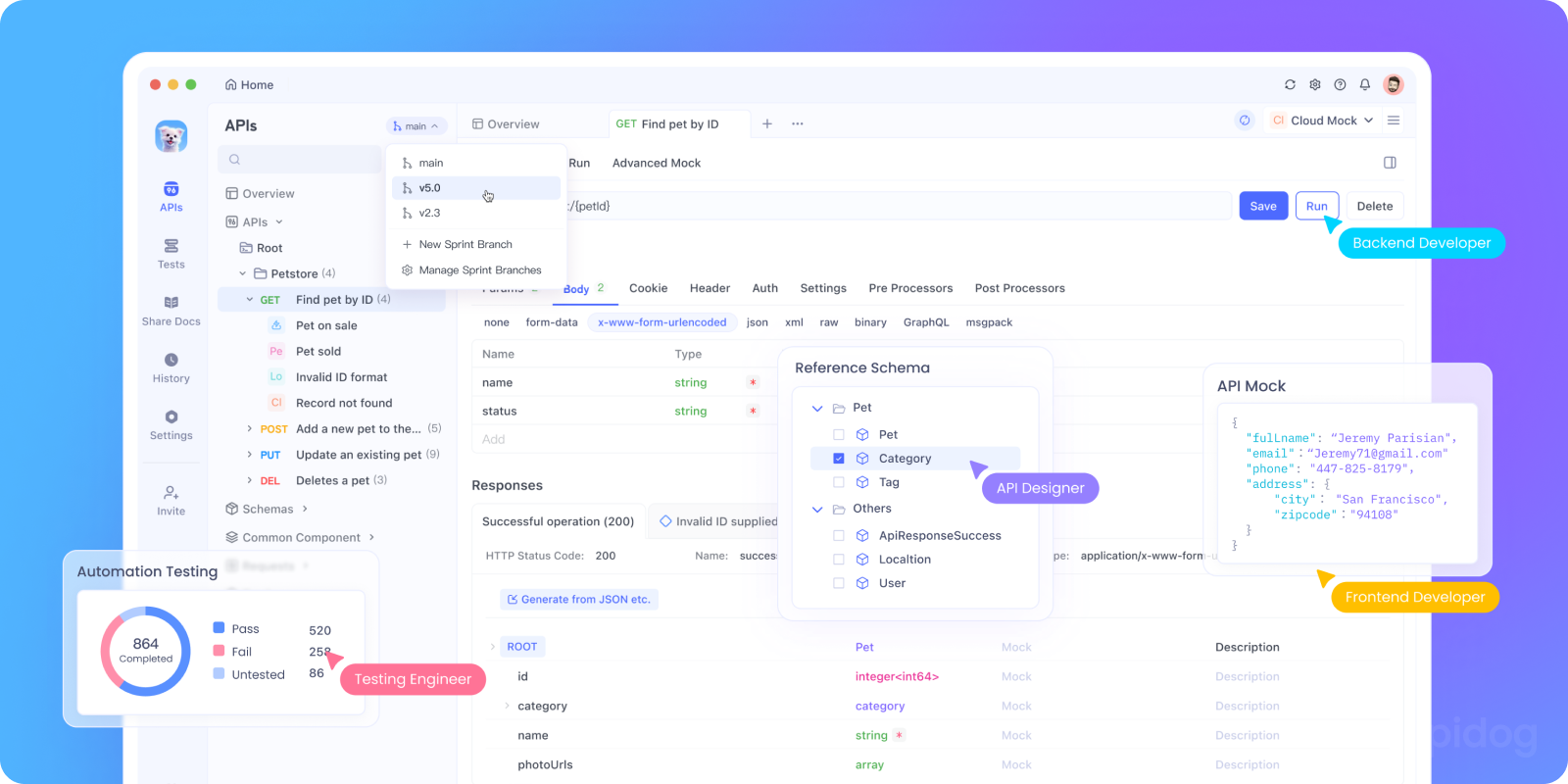

Apidog: The API Testing Tool That Complements Your Event Streaming Journey

Handling event streams involves not only capturing and processing events but also managing APIs that interact with these streams. Apidog is your best companion for this purpose. Once you set up Kafka, Kinesis, or Pub/Sub, you’ll need API endpoints to produce and consume events.

It helps you:

- Design APIs to connect to your event stream.

- Easily test APIs connected to your streaming platforms.

- Automate API contract testing, ensuring your streaming data flows are accurate.

- Collaborate across teams to ensure smooth API and event infrastructure.

By integrating Apidog into your event streaming workflow, you catch integration bugs earlier and improve data reliability, which means faster time-to-production for real-time applications.
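As a rough illustration of what a contract check on an event payload involves, consider the sketch below. It does not use Apidog or any of its APIs. The `ORDER_CREATED_CONTRACT` definition and the `contract_violations` function are hypothetical, and the check is deliberately simple: required fields and their Python types.

```python
# Hypothetical contract for an "order created" event: field name -> expected type.
ORDER_CREATED_CONTRACT = {"order_id": str, "amount_cents": int, "currency": str}


def contract_violations(payload, contract):
    """Return a list of human-readable problems; an empty list means the payload conforms."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    for field in payload:
        if field not in contract:
            problems.append(f"unexpected field: {field}")
    return problems


good = {"order_id": "o-1001", "amount_cents": 2599, "currency": "EUR"}
bad = {"order_id": 42, "currency": "EUR"}

print(contract_violations(good, ORDER_CREATED_CONTRACT))
print(contract_violations(bad, ORDER_CREATED_CONTRACT))
```

Running a check like this on both the producing and consuming side of a stream catches schema drift before it turns into a production incident.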

Final Thoughts

In 2025, real-time data isn’t optional; it’s expected. Selecting the best event streaming tool depends on your specific business needs, technical ecosystem, and team skills.

Whether you’re streaming stock data, monitoring IoT devices, or syncing multi-cloud systems, the right event streaming platform can make all the difference.

If you want a battle-tested, scalable solution with raw power and flexibility, Apache Kafka, Pulsar, and Confluent are the gold standards.

If you want something cloud-native and low-maintenance with seamless cloud integration, Kinesis (AWS), Event Hubs (Azure), and Pub/Sub (Google Cloud) are great bets. Redpanda and Materialize offer interesting innovations for new architectures and SQL-based streaming, respectively.

And if you want to make sure your event streams actually work as intended, grab Apidog for free and integrate it into your testing workflow.