When working with Python to interact with web APIs, especially when handling multiple requests, you’ve probably encountered some delays. Whether it’s downloading files, scraping data, or making requests to APIs, waiting for each task to finish can slow things down considerably. This is where asynchronous programming steps in to save the day, and one of the best tools in the Python ecosystem for this is the aiohttp library.

If you're unfamiliar with asynchronous programming, don’t worry! I'll break it down in simple terms and explain how aiohttp can help streamline your web requests, making them faster and more efficient.

Before we dive deeper, let’s address something every developer deals with: API testing. And if you're like most teams, you're probably using Postman for that.

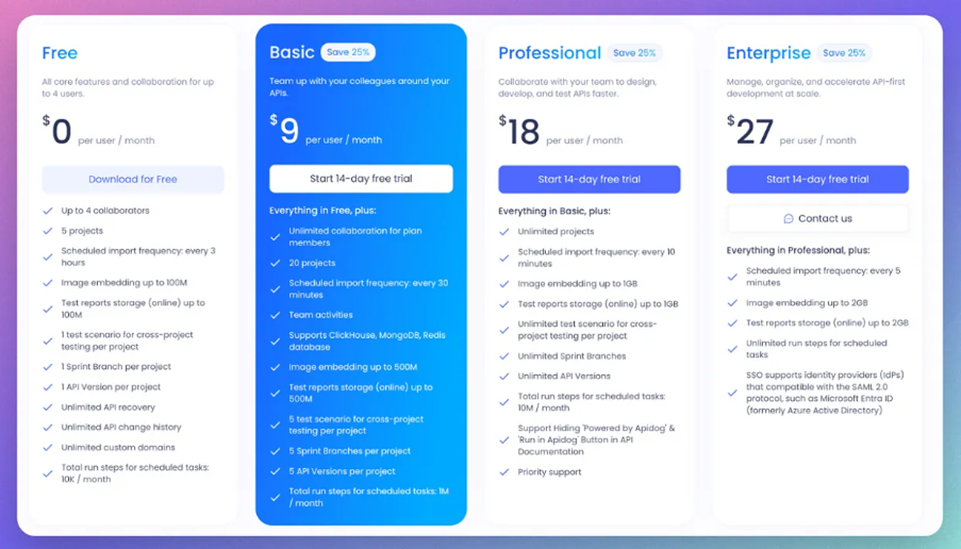

I get it—Postman is a familiar tool. But let’s be honest, haven’t we all noticed how it's becoming less and less appealing each year? Yet, here you are, working as a team, and you need collaboration tools to keep the development process smooth. So, what do you do? You invest in Postman Enterprise for a whopping $49 a month.

But here’s the kicker: you don’t have to.

Yes, you read that right. There's a better, more efficient way to manage API testing and team collaboration without having to sink so much money into a tool that, frankly, doesn’t offer what it once did. Let’s explore some better alternatives!

APIDog: You Get Everything from Postman Paid Version, But CHEAPER

That’s right, APIDog gives you all the features that comes with Postman paid version, at a fraction of the cost. Migration has been so easily that you only need to click a few buttons, and APIDog will do everything for you.

APIDog is definitely worth a shot. But if you are the Tech Lead of a Dev Team that really want to dump Postman for something Better, and Cheaper, Check out APIDog!

What is aiohttp?

aiohttp is a popular Python library that allows you to write asynchronous HTTP clients and servers. Think of it like Python’s requests library but turbocharged with the power of asynchronous programming.

It’s built on top of Python's asyncio framework, which means it can handle a large number of requests concurrently without waiting for each one to complete before moving to the next.

Imagine you’re at a coffee shop, and instead of waiting in line for each order to be completed one at a time, multiple baristas start working on your order simultaneously.

With aiohttp, it’s as if you're working with a team of baristas, all brewing coffee (or, in this case, fetching data) at the same time. The result? Faster results with less waiting around.

Why Should You Care About aiohttp?

Let’s talk about why aiohttp should matter to you, whether you’re a Python beginner, data scientist, web scraper, or even a seasoned developer.

- Performance: The main reason to use aiohttp is performance. If you need to make multiple API calls or requests to a website, aiohttp can handle them simultaneously. Instead of processing them one after another, you can execute dozens or even hundreds of requests at the same time.

- Scalability: Web scraping or calling multiple APIs can be a slow, blocking process using synchronous programming. However, with aiohttp, you can manage more tasks in less time, meaning your application can handle increased demand or larger data sets.

- Reduced Wait Times: Traditional synchronous programs have to wait for one task to finish before starting the next. With asynchronous code, tasks don’t have to wait. You can download data from multiple URLs at once, reducing overall runtime drastically.

- Efficient Resource Usage: Async programming, and aiohttp in particular, makes more efficient use of your system’s resources. Instead of blocking an entire thread or process waiting for a response, aiohttp lets other tasks run while waiting for an I/O operation to complete.
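To make the resource-usage point a bit more concrete, here is a minimal sketch that uses only the standard library. `fake_request` is a hypothetical stand-in for an HTTP call (it only sleeps), so nothing touches the network. It records which thread each coroutine ran on, to illustrate that many overlapping waits can share a single thread.

```python
import asyncio
import threading


async def fake_request(name, delay, seen_threads):
    # Hypothetical stand-in for an HTTP call: awaiting the sleep hands control
    # back to the event loop, much like awaiting a network response would.
    await asyncio.sleep(delay)
    seen_threads.add(threading.get_ident())
    return name


async def main():
    seen_threads = set()
    names = await asyncio.gather(
        *(fake_request(f"req-{i}", 0.05, seen_threads) for i in range(20))
    )
    return names, seen_threads


names, seen_threads = asyncio.run(main())
print(f"{len(names)} simulated requests ran on {len(seen_threads)} thread(s)")
```

All twenty simulated requests wait at the same time, yet no extra threads are created for them.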

How Does aiohttp Work?

Let’s dive into how aiohttp works in practice.

First, let’s clarify what asynchronous programming really means. In a synchronous program, each task must finish before the next one begins.

If you’re waiting for a web server to respond to your request, the entire program halts until that response arrives. Asynchronous programming allows the program to continue executing other tasks while waiting for the response.
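As a rough illustration of the difference, the sketch below times the same three simulated requests run one after another and then run concurrently. `fake_request` is an assumption standing in for a real HTTP call; it just sleeps for the given delay.

```python
import asyncio
import time


async def fake_request(delay):
    # Hypothetical stand-in for a slow server response.
    await asyncio.sleep(delay)
    return delay


async def one_at_a_time(delays):
    # Each request is awaited before the next one starts.
    return [await fake_request(d) for d in delays]


async def all_at_once(delays):
    # All requests are started together and awaited as a group.
    return await asyncio.gather(*(fake_request(d) for d in delays))


def timed(coro):
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


delays = [0.1, 0.1, 0.1]
seq_result, seq_time = timed(one_at_a_time(delays))
con_result, con_time = timed(all_at_once(delays))
print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

The sequential version takes roughly the sum of the delays, while the concurrent version takes roughly as long as the slowest single request.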

aiohttp leverages this asynchronous model to allow you to fire off multiple HTTP requests at once, and then handle the responses as they come in. The diagrams and the code example that follow illustrate this.
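One way to handle results "as they come in" is `asyncio.as_completed`, which yields awaitables in the order they finish rather than the order they were started. The sketch below is only an illustration: `fake_request` is a made-up placeholder that sleeps instead of calling a server.

```python
import asyncio


async def fake_request(name, delay):
    # Hypothetical stand-in for an HTTP request with a given response time.
    await asyncio.sleep(delay)
    return name


async def main():
    pending = [
        fake_request("slow", 0.15),
        fake_request("fast", 0.05),
        fake_request("medium", 0.10),
    ]
    finished = []
    # as_completed yields each result as soon as it is ready.
    for next_done in asyncio.as_completed(pending):
        finished.append(await next_done)
    return finished


finished = asyncio.run(main())
print(finished)
```

With real requests, the same loop would let you start processing the quickest responses while slower ones are still in flight.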

How to Make Web Requests with aiohttp: The Basics

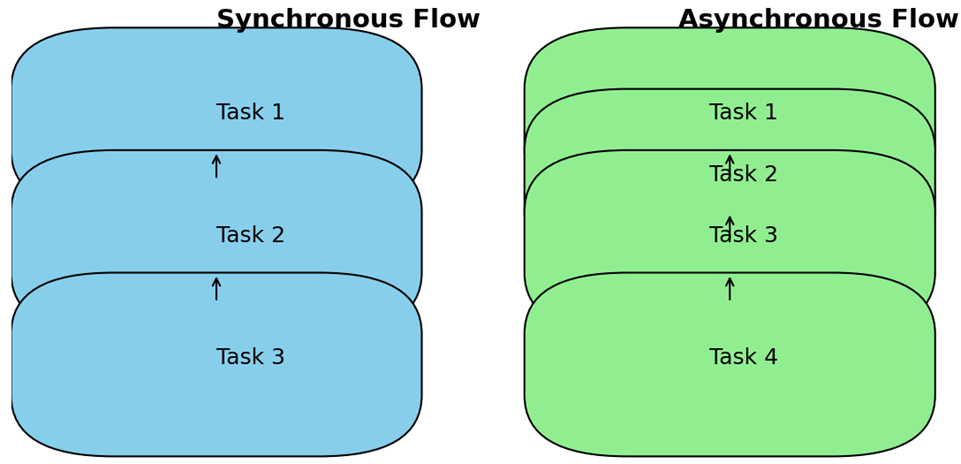

This diagram (figure 2) compares synchronous flow (one task completes before the next) and asynchronous flow (multiple tasks running concurrently).

Blue boxes represent synchronous tasks, while green boxes represent asynchronous tasks.

Arrows indicate the flow of execution for both synchronous and asynchronous processes.

Event Loop Visualization (Loop Mechanism within Python's Asynchronous Model)

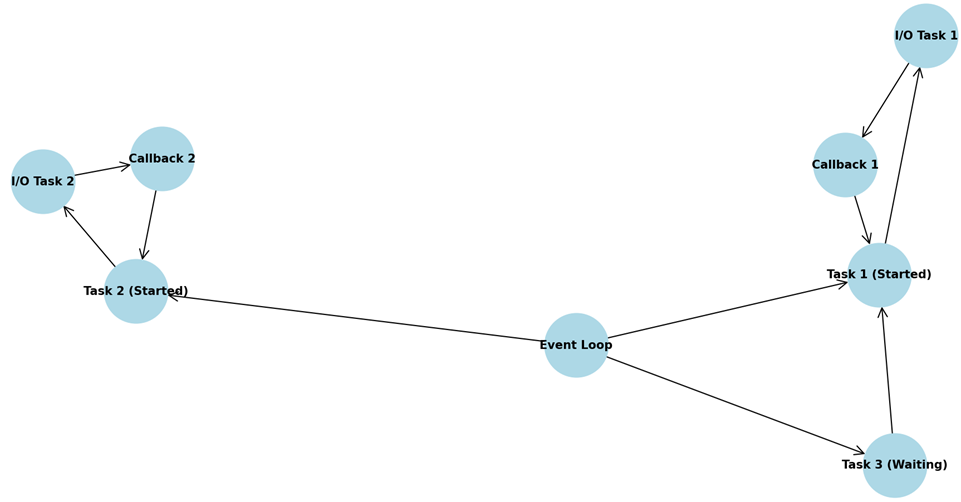

This diagram (figure 3) illustrates the event loop mechanism in Python’s asynchronous programming model.

Nodes represent different steps, such as starting tasks, waiting for I/O operations, and executing callbacks.

Directed arrows show how tasks are initiated, how they await I/O operations, and how the event loop handles callbacks and restarts tasks.
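The start, wait, and resume steps described above can be sketched with two small tasks that write to a shared log. This is a simplified illustration with made-up task names and delays, not a picture of the event loop's internals.

```python
import asyncio


async def task(name, delay, log):
    log.append(f"{name}: started")
    # Awaiting here hands control back to the event loop, which is free
    # to start or resume other tasks until this wait is over.
    await asyncio.sleep(delay)
    log.append(f"{name}: resumed")


async def main():
    log = []
    await asyncio.gather(task("A", 0.10, log), task("B", 0.05, log))
    return log


log = asyncio.run(main())
for entry in log:
    print(entry)
```

Task B starts while A is still waiting, and because its wait is shorter the loop resumes it first.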

Concurrent URL Fetching in aiohttp

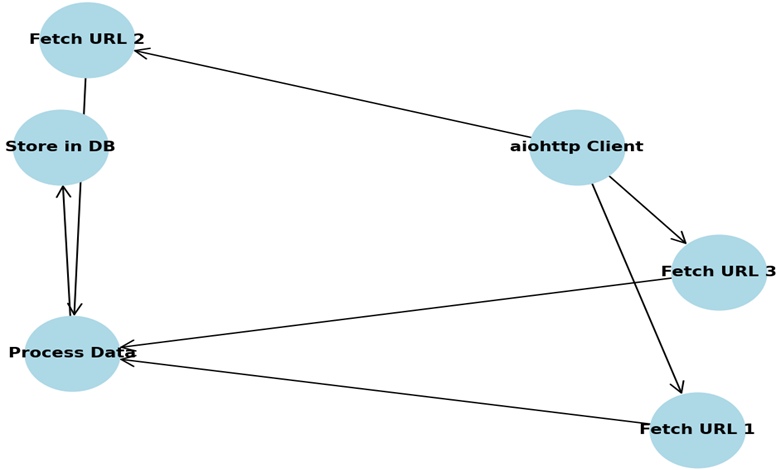

The use case diagram (figure 4) visualizes how aiohttp can handle multiple URL requests concurrently, as in a web scraping or API request scenario. Each "Fetch URL" process runs concurrently and passes its result to a central processing node.
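One possible way to model the "central processing node" is an `asyncio.Queue` that every fetcher writes to and a single consumer reads from. The sketch below assumes this design; the URLs are placeholders and `fetch_url` only simulates a download.

```python
import asyncio


async def fetch_url(url, queue):
    # Simulated download: a real version would use an aiohttp session here.
    await asyncio.sleep(0.05)
    await queue.put((url, f"body of {url}"))


async def process_results(queue, expected):
    # Central node: consumes results in whatever order the fetchers finish.
    processed = {}
    for _ in range(expected):
        url, body = await queue.get()
        processed[url] = len(body)
    return processed


async def main():
    urls = [f"https://example.com/page/{i}" for i in range(1, 4)]
    queue = asyncio.Queue()
    fetchers = [asyncio.create_task(fetch_url(u, queue)) for u in urls]
    processed = await process_results(queue, expected=len(urls))
    await asyncio.gather(*fetchers)
    return processed


processed = asyncio.run(main())
print(processed)
```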

Workflow Diagram

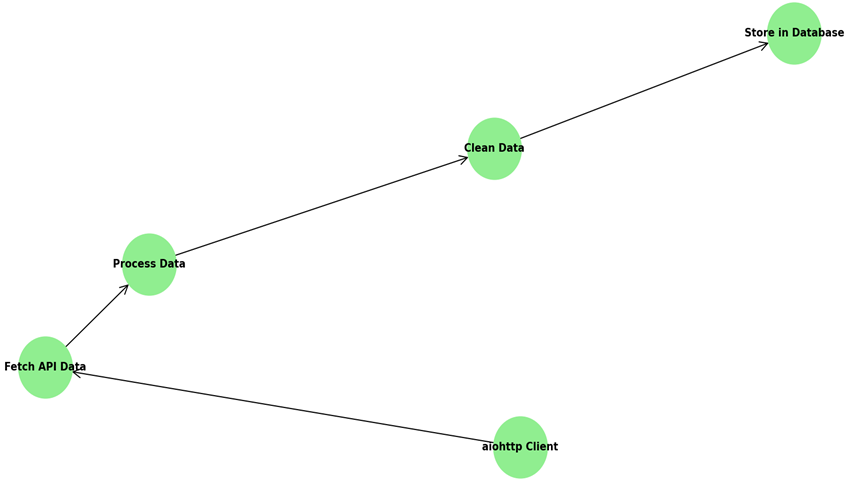

The workflow diagram visualizes a basic data pipeline, showing the steps of fetching, processing, cleaning, and storing data.
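Here is a small sketch of such a pipeline under simplifying assumptions: the "fetch" step reads from a dictionary of canned responses (`RAW_PAGES`, invented for this example) instead of the network, and the "store" step writes to an in-memory dictionary rather than a real database.

```python
import asyncio
import json

# Hypothetical canned responses standing in for real HTTP bodies.
RAW_PAGES = {
    "https://example.com/a": '{"title": "  First post ", "views": "10"}',
    "https://example.com/b": '{"title": "", "views": "3"}',
    "https://example.com/c": '{"title": "Third post", "views": "7"}',
}


async def fetch(url):
    await asyncio.sleep(0.01)  # simulated network latency
    return RAW_PAGES[url]


def process(raw):
    return json.loads(raw)


def clean(record):
    # Trim whitespace, drop records without a title, convert views to int.
    title = record["title"].strip()
    if not title:
        return None
    return {"title": title, "views": int(record["views"])}


def store(records, database):
    for record in records:
        database[record["title"]] = record["views"]


async def run_pipeline(urls):
    raw_bodies = await asyncio.gather(*(fetch(u) for u in urls))
    cleaned = [clean(process(raw)) for raw in raw_bodies]
    database = {}
    store([r for r in cleaned if r is not None], database)
    return database


database = asyncio.run(run_pipeline(list(RAW_PAGES)))
print(database)
```

Only the fetch step is asynchronous here; the other steps are plain functions because they do no I/O in this sketch.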

Example: Making Asynchronous Requests with aiohttp

import aiohttp
import asyncio

async def fetch_url(session, url):
    async with session.get(url) as response:
        return await response.text()

async def main():
    urls = [
        "https://jsonplaceholder.typicode.com/posts/1",
        "https://jsonplaceholder.typicode.com/posts/2",
        "https://jsonplaceholder.typicode.com/posts/3",
    ]

    async with aiohttp.ClientSession() as session:
        tasks = [fetch_url(session, url) for url in urls]
        responses = await asyncio.gather(*tasks)

    for i, response in enumerate(responses):
        print(f"Response {i+1}: {response[:60]}...")  # Print first 60 characters of each response

# Run the event loop
asyncio.run(main())

Downloading Content Synchronously

For comparison, let us download a page synchronously using the requests library. It can be installed using:

python3 -m pip install requests

Downloading an online resource using requests is straightforward.

import requests

response = requests.get("https://www.python.org/dev/peps/pep-8010/")
print(response.content)

It will print out the HTML content of PEP 8010 as bytes. To save it locally to a file:

filename = "sync_pep_8010.html"
with open(filename, "wb") as pep_file:
    pep_file.write(response.content)  # response.content is already bytes

The file sync_pep_8010.html will be created.

Real-World Use Cases for aiohttp

1. Web Scraping

If you’re scraping multiple pages from a website, waiting for each page to load can be a painfully slow process. With aiohttp, you can scrape multiple pages simultaneously, speeding up the process dramatically. Just imagine fetching hundreds of pages at once instead of waiting for each to load in sequence.

2. API Requests

When working with APIs that respond slowly, you can send multiple requests at once using aiohttp instead of waiting on each one in turn. For instance, if you’re querying a weather API to get data for multiple cities, aiohttp can help you gather the results faster. For rate-limited APIs, concurrency still helps as long as you cap how many requests are in flight at the same time.
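If the API limits how hard you may hit it, one common approach is to cap the number of in-flight requests with `asyncio.Semaphore`. The sketch below assumes a made-up `fake_api_call` that sleeps instead of calling a real weather API, and tracks the peak concurrency to show the cap at work. Note that a semaphore limits concurrency, not requests per second, so a strict rate limit may need extra pacing.

```python
import asyncio


async def fake_api_call(city, semaphore, stats):
    # Only `limit` coroutines can be inside this block at once.
    async with semaphore:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        await asyncio.sleep(0.02)  # simulated API latency
        stats["active"] -= 1
        return f"weather for {city}"


async def main(cities, limit):
    semaphore = asyncio.Semaphore(limit)
    stats = {"active": 0, "peak": 0}
    results = await asyncio.gather(
        *(fake_api_call(city, semaphore, stats) for city in cities)
    )
    return results, stats["peak"]


cities = ["Lagos", "Lima", "Oslo", "Pune", "Kyoto", "Quito"]
results, peak = asyncio.run(main(cities, limit=2))
print(f"{len(results)} results, at most {peak} calls in flight")
```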

3. Data Collection

Whether you're working with stock market data, social media feeds, or news websites, aiohttp can be a game-changer for handling massive amounts of HTTP requests simultaneously, allowing you to collect data faster and more efficiently.

Here are three practical examples of using Python's aiohttp library, complete with steps and sample code:

1. Making Asynchronous HTTP Requests

This example demonstrates how to make multiple asynchronous HTTP requests using aiohttp.

Steps:

- Import necessary modules

- Define an asynchronous function to fetch a URL

- Create a list of URLs to fetch

- Set up an async event loop

- Run the async function for each URL concurrently

import aiohttp
import asyncio

async def fetch(session, url):
    async with session.get(url) as response:
        return await response.text()

async def main():
    urls = [
        'https://api.github.com',
        'https://api.github.com/events',
        'https://api.github.com/repos/python/cpython'
    ]

    async with aiohttp.ClientSession() as session:
        tasks = [fetch(session, url) for url in urls]
        responses = await asyncio.gather(*tasks)

    for url, response in zip(urls, responses):
        print(f"URL: {url}\nResponse length: {len(response)}\n")

asyncio.run(main())
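Real requests can fail, so it is usually worth checking the status and setting a timeout. The sketch below is one way to do that, not the only one. To stay runnable without internet access it assumes a throwaway local server created with `aiohttp.test_utils.TestServer`; the `/ok` and `/missing` routes and the `fetch_text` helper are invented for this example.

```python
import asyncio

import aiohttp
from aiohttp import web
from aiohttp.test_utils import TestServer


async def ok(request):
    return web.Response(text="hello")


async def missing(request):
    raise web.HTTPNotFound()


async def fetch_text(session, url):
    try:
        async with session.get(url) as response:
            response.raise_for_status()  # raises ClientResponseError on 4xx/5xx
            return await response.text()
    except aiohttp.ClientError as exc:
        return f"failed: {type(exc).__name__}"


async def demo():
    app = web.Application()
    app.add_routes([web.get("/ok", ok), web.get("/missing", missing)])
    async with TestServer(app) as server:
        # Give up on any single request after 5 seconds in total.
        timeout = aiohttp.ClientTimeout(total=5)
        async with aiohttp.ClientSession(timeout=timeout) as session:
            urls = [server.make_url("/ok"), server.make_url("/missing")]
            return await asyncio.gather(*(fetch_text(session, u) for u in urls))


results = asyncio.run(demo())
print(results)
```

Because each failure is turned into a value inside `fetch_text`, one bad URL does not prevent the other results from being returned. A timeout is raised as `asyncio.TimeoutError` rather than `aiohttp.ClientError`, so you would need to catch it separately if you want the same treatment.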

2. Creating a Simple API Server

This example shows how to create a basic API server using aiohttp.

Steps:

- Import necessary modules

- Define route handlers

- Create the application and add routes

- Run the application

from aiohttp import web

async def handle_root(request):
    return web.json_response({"message": "Welcome to the API"})

async def handle_users(request):
    users = [
        {"id": 1, "name": "Alice"},
        {"id": 2, "name": "Bob"},
        {"id": 3, "name": "Charlie"}
    ]
    return web.json_response(users)

app = web.Application()
app.add_routes([
    web.get('/', handle_root),
    web.get('/users', handle_users)
])

if __name__ == '__main__':
    web.run_app(app)
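A natural next step is a route with a path parameter, plus a quick way to exercise it without opening a browser. The sketch below is an assumption-laden extension of the server above: the `/users/{user_id}` route, the `USERS` dictionary, and the `check_routes` helper are all invented for illustration. It uses `TestClient` and `TestServer` from `aiohttp.test_utils` to call the handler on a temporary local port.

```python
import asyncio

from aiohttp import web
from aiohttp.test_utils import TestClient, TestServer

USERS = {1: "Alice", 2: "Bob", 3: "Charlie"}


async def handle_user(request):
    # match_info holds the value captured by the {user_id} placeholder.
    user_id = int(request.match_info["user_id"])
    if user_id not in USERS:
        raise web.HTTPNotFound()
    return web.json_response({"id": user_id, "name": USERS[user_id]})


def make_app():
    app = web.Application()
    # The \d+ pattern restricts the placeholder to digits, so int() is safe.
    app.add_routes([web.get(r"/users/{user_id:\d+}", handle_user)])
    return app


async def check_routes():
    async with TestClient(TestServer(make_app())) as client:
        found = await client.get("/users/2")
        user = await found.json()
        missing = await client.get("/users/99")
        return found.status, user, missing.status


status, user, missing_status = asyncio.run(check_routes())
print(status, user, missing_status)
```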

3. Websocket Chat Server

This example demonstrates how to create a simple websocket-based chat server using aiohttp.

Steps:

- Import necessary modules

- Create a set to store active websockets

- Define websocket handler

- Create the application and add routes

- Run the application

import aiohttp
from aiohttp import web

# All currently connected clients
active_websockets = set()

async def websocket_handler(request):
    ws = web.WebSocketResponse()
    await ws.prepare(request)
    active_websockets.add(ws)

    try:
        async for msg in ws:
            if msg.type == aiohttp.WSMsgType.TEXT:
                # Iterate over a copy: clients may join or leave while we await send_str
                for client in list(active_websockets):
                    if client is not ws:
                        await client.send_str(f"User{id(ws)}: {msg.data}")
            elif msg.type == aiohttp.WSMsgType.ERROR:
                print(f"WebSocket connection closed with exception {ws.exception()}")
    finally:
        # Always unregister the client, even if the connection dropped with an error
        active_websockets.discard(ws)

    return ws

app = web.Application()
app.add_routes([web.get('/ws', websocket_handler)])

if __name__ == '__main__':
    web.run_app(app)

To test this websocket server, you can use a websocket client or create a simple HTML page with JavaScript to connect to the server.
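If you would rather test from Python, aiohttp's client can also speak WebSocket through `session.ws_connect`. The sketch below is a self-contained approximation: it defines a compact relay handler of its own (similar in spirit to the chat handler, but without the user prefix), serves it on a temporary local port with `TestServer`, and connects two clients named `alice` and `bob`. Those names and the `demo` helper are assumptions made for this example.

```python
import asyncio

import aiohttp
from aiohttp import web
from aiohttp.test_utils import TestServer

active_websockets = set()


async def websocket_handler(request):
    # Compact relay: forward each text message to every other client.
    ws = web.WebSocketResponse()
    await ws.prepare(request)
    active_websockets.add(ws)
    try:
        async for msg in ws:
            if msg.type == aiohttp.WSMsgType.TEXT:
                for client in list(active_websockets):
                    if client is not ws:
                        await client.send_str(msg.data)
    finally:
        active_websockets.discard(ws)
    return ws


async def demo():
    app = web.Application()
    app.add_routes([web.get("/ws", websocket_handler)])
    async with TestServer(app) as server:
        async with aiohttp.ClientSession() as session:
            url = server.make_url("/ws")
            async with session.ws_connect(url) as alice, session.ws_connect(url) as bob:
                await alice.send_str("hello from alice")
                # Fail after 5 seconds instead of waiting forever.
                return await asyncio.wait_for(bob.receive_str(), timeout=5)


received = asyncio.run(demo())
print(received)
```

Against a server started with `web.run_app(app)`, the same `ws_connect` call would typically point at `ws://localhost:8080/ws`, since 8080 is the default port.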

These examples showcase different aspects of aiohttp, from making asynchronous requests to creating web servers and handling websockets. They provide a solid foundation for building more complex applications using this powerful library.

Wrapping Up

In today’s data-driven world, speed matters, and the ability to handle many tasks at once can give you an edge. aiohttp is a must-have tool in your Python toolkit if you’re dealing with web scraping, API requests, or any other task that requires making many HTTP requests.

By going asynchronous, you’re not only saving time but also making your code more efficient and scalable.

So, if you’re looking to take your Python web requests to the next level, give aiohttp a try.

You’ll quickly see why it’s such a popular choice among developers dealing with I/O-heavy applications.

Did you find this page helpful? I hope this material was useful for you!

Wish you all the best!