Modern software development faces a critical challenge: creating comprehensive test cases that actually catch bugs before they reach production. Traditional testing approaches often fall short, leaving teams scrambling to fix issues after deployment. However, artificial intelligence now offers a powerful solution that transforms how we approach test case creation and execution.

Understanding AI-Powered Test Case Generation

Artificial intelligence brings unprecedented capabilities to software testing. Machine learning algorithms analyze code patterns, user behavior, and historical bug data to generate test cases that human testers might overlook. This technology doesn't replace human expertise but amplifies it, creating more thorough coverage with less manual effort.

AI systems excel at pattern recognition and can identify potential failure points by examining code structure, API endpoints, and data flows. These systems learn from previous testing cycles, continuously improving their ability to predict where issues might occur. Consequently, teams achieve better test coverage while reducing the time spent on repetitive testing tasks.

Benefits of AI in Test Case Development

Enhanced Test Coverage

AI algorithms systematically analyze software components to identify testing gaps. Traditional manual testing often misses edge cases due to human limitations and time constraints. In contrast, AI-powered systems can explore far more code paths than a manual effort allows, generating test cases for scenarios that developers might not consider.

Machine learning models study application behavior patterns and create test cases that cover both common use cases and unusual edge conditions. This comprehensive approach significantly reduces the likelihood of bugs reaching production environments.
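To make "unusual edge conditions" concrete, here is a minimal sketch of the kind of input an AI tool might propose for a numeric field. It is a deterministic boundary-value generator, not a machine learning model, and the function names `boundary_values` and `generate_cases` are hypothetical, chosen only for illustration.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Return boundary-value inputs for an inclusive integer range."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = [
        minimum - 1, minimum, minimum + 1,
        maximum - 1, maximum, maximum + 1,
    ]
    # Deduplicate while preserving order (narrow ranges produce repeats).
    return list(dict.fromkeys(candidates))


def generate_cases(minimum: int, maximum: int) -> list[dict]:
    """Pair each boundary input with whether a validator should accept it."""
    return [
        {"input": value, "expect_valid": minimum <= value <= maximum}
        for value in boundary_values(minimum, maximum)
    ]


# Example: a quantity field that accepts 1 through 10.
for case in generate_cases(1, 10):
    print(case)
```

A real tool would combine rules like this with patterns learned from the application, but the values just outside and just inside each limit are a typical starting point.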

Accelerated Testing Cycles

Speed is crucial in modern development workflows. AI can dramatically reduce the time required to create and execute test cases. While human testers might spend hours writing comprehensive test suites, AI systems can generate hundreds of test cases in minutes.

Furthermore, AI systems can automatically update test cases when code changes occur. This dynamic adaptation helps test suites stay relevant and effective throughout the development lifecycle and reduces, though does not eliminate, the need for manual test maintenance.

Improved Test Quality

AI-generated test cases can match or exceed the quality of manually created ones, particularly for repetitive, well-specified scenarios. Machine learning algorithms analyze vast amounts of testing data to identify the most effective testing strategies. These systems learn from successful test cases and incorporate proven patterns into new test generation.

Additionally, automated generation reduces the human errors that commonly occur in manual test creation, although generated tests can contain mistakes of their own and still need review. Consistency improves across all test cases, helping testing standards remain uniform throughout the entire project.

Key AI Tools for Test Case Generation

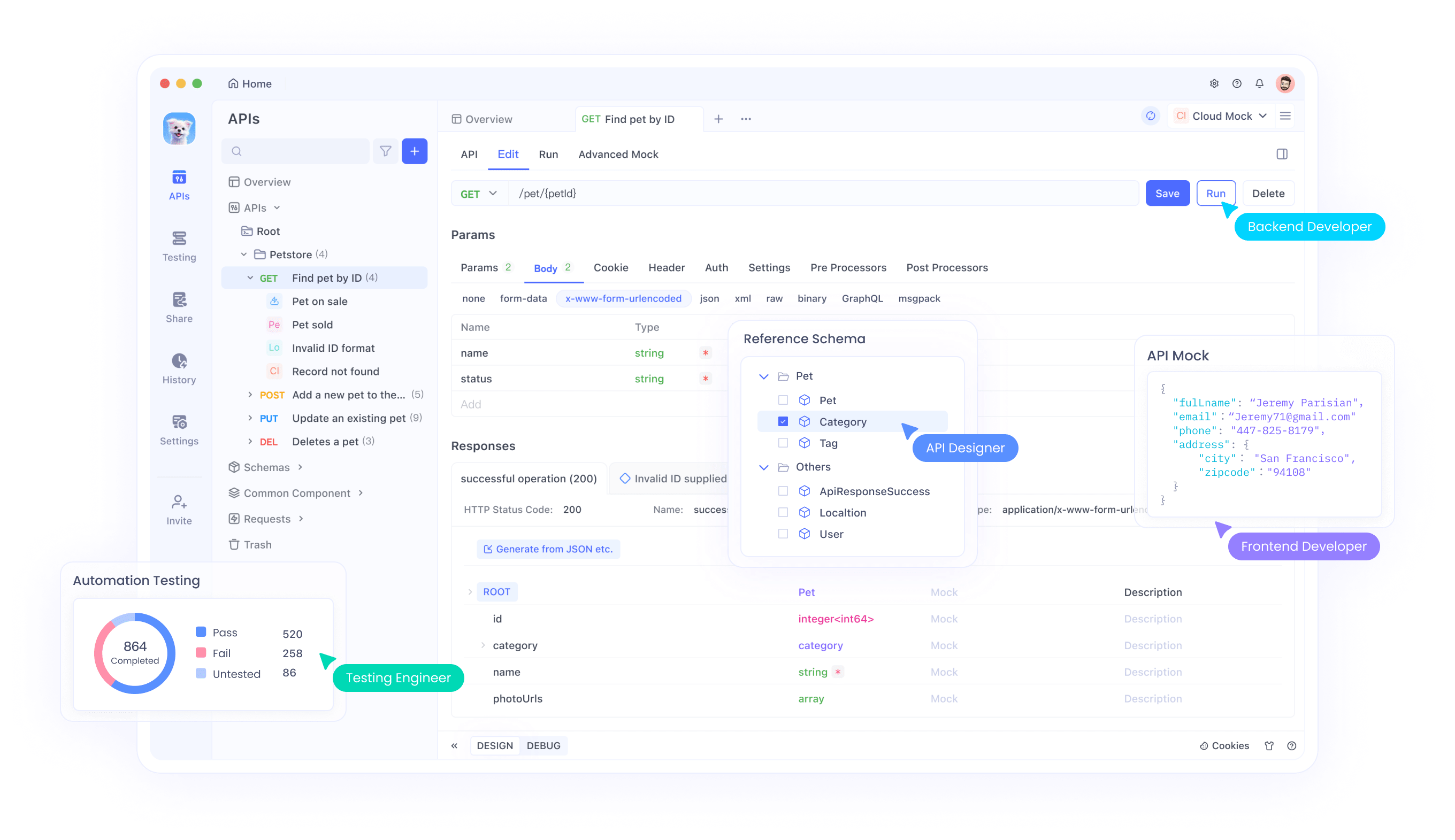

Apidog: Comprehensive API Testing Platform

Apidog represents a leading solution in AI-powered API testing. This platform combines intelligent test case generation with robust execution capabilities. Users can automatically generate test cases from API specifications, reducing manual effort while ensuring comprehensive coverage.

The platform's AI engine analyzes API documentation and automatically creates test scenarios that validate functionality, performance, and security. Apidog's machine learning capabilities continuously improve test case quality based on execution results and user feedback.
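The general idea of deriving test scenarios from an API specification can be sketched in a few lines. This is not Apidog's implementation or API. It is a hypothetical illustration that assumes a simplified OpenAPI-style dictionary in which every key under a path is an HTTP method, and it assumes the service answers 200 on success and 400 when a required parameter is missing.

```python
def cases_from_spec(spec: dict) -> list[dict]:
    """Derive basic test cases from an OpenAPI-style `paths` mapping."""
    cases = []
    for path, operations in spec.get("paths", {}).items():
        # Assumption: each key under a path is an HTTP method.
        for method, operation in operations.items():
            verb = method.upper()
            # Happy path: send every parameter and expect success.
            cases.append({
                "name": f"{verb} {path} with all parameters",
                "method": verb, "path": path,
                "omit": None, "expect_status": 200,  # assumed status code
            })
            # Negative paths: drop each required parameter in turn.
            for param in operation.get("parameters", []):
                if param.get("required"):
                    cases.append({
                        "name": f"{verb} {path} without {param['name']}",
                        "method": verb, "path": path,
                        "omit": param["name"],
                        "expect_status": 400,  # assumed status code
                    })
    return cases


# Hypothetical specification fragment used only for this example.
example_spec = {
    "paths": {
        "/users": {
            "get": {
                "parameters": [
                    {"name": "limit", "required": True},
                    {"name": "offset", "required": False},
                ]
            }
        }
    }
}

for case in cases_from_spec(example_spec):
    print(case["name"], "->", case["expect_status"])
```

Each generated dictionary describes a request to send and the status to expect; a test runner would still need to turn it into an actual HTTP call.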

Natural Language Processing Tools

Several AI tools leverage natural language processing to convert requirements into executable test cases. These systems parse user stories, acceptance criteria, and documentation to generate comprehensive test suites.

NLP-powered tools understand context and intent, creating test cases that align with business requirements. This approach bridges the gap between business stakeholders and technical teams, ensuring that tests validate actual user needs.
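As a rough illustration of turning acceptance criteria into a structured test case, the sketch below parses Given/When/Then statements with a regular expression. Real NLP tools interpret free-form text and go well beyond keyword matching; the function `parse_criteria` and the sample criteria are hypothetical.

```python
import re

# Matches lines such as "Given a registered user" or "And a valid password".
STEP_PATTERN = re.compile(r"^\s*(Given|When|Then|And)\s+(.*\S)\s*$", re.IGNORECASE)


def parse_criteria(text: str) -> dict:
    """Group Given/When/Then steps; "And" continues the previous group."""
    case = {"given": [], "when": [], "then": []}
    current = None
    for line in text.splitlines():
        match = STEP_PATTERN.match(line)
        if not match:
            continue  # ignore lines that are not steps
        keyword, step = match.group(1).lower(), match.group(2)
        if keyword != "and":
            current = keyword
        if current is None:
            continue  # an "And" with no preceding step has nowhere to go
        case[current].append(step)
    return case


criteria = """
Given a registered user
And a valid password
When the user logs in
Then the dashboard is shown
"""
print(parse_criteria(criteria))
```

The resulting dictionary separates preconditions, actions, and expected results, which is the shape most test frameworks need before a step can be bound to executable code.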

Machine Learning Testing Frameworks

Advanced frameworks integrate machine learning algorithms directly into testing workflows. These tools analyze application behavior, identify patterns, and generate test cases that adapt to changing software requirements.

ML frameworks excel at regression testing, automatically generating test cases that verify that new code changes don't break existing functionality. This capability proves particularly valuable in continuous integration environments.
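A related and much simpler technique for regression testing in continuous integration is change-based test selection: run the tests whose covered files overlap with the files a commit touched. The sketch below shows that technique, not ML-based generation, and assumes a hypothetical `coverage_map` collected from an earlier test run.

```python
def select_tests(changed_files: set[str], coverage_map: dict[str, set[str]]) -> list[str]:
    """Return tests that exercise at least one changed file, sorted by name."""
    return sorted(
        test for test, files in coverage_map.items()
        if files & changed_files  # non-empty intersection means the test is affected
    )


# Hypothetical mapping of test name -> source files it executes.
coverage_map = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_profile": {"auth.py", "profile.py"},
}

print(select_tests({"auth.py"}, coverage_map))
```

An ML-based framework might rank or extend this selection using historical failure data, but the coverage overlap is a common baseline.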

Implementation Strategies for AI Testing

Setting Up AI-Powered Testing Environments

Successful AI testing implementation requires careful planning and setup. Teams must first assess their current testing infrastructure and identify areas where AI can provide the most value. This assessment should consider existing tools, team expertise, and project requirements.

Integration with existing development tools becomes crucial for seamless adoption. AI testing platforms should connect with version control systems, continuous integration pipelines, and project management tools. This integration ensures that AI-generated test cases fit naturally into established workflows.

Training AI Models for Your Specific Needs

AI systems require training data to generate effective test cases. Teams should provide historical test data, bug reports, and code repositories to train AI models. This training process helps AI systems understand project-specific patterns and requirements.

Regular model updates ensure that AI systems stay current with evolving codebases and changing business requirements. Teams should establish processes for feeding new data back into AI systems, enabling continuous improvement in test case quality.

Establishing Quality Gates

AI-generated test cases require validation before execution. Teams should implement review processes that combine AI efficiency with human expertise. This hybrid approach ensures that generated test cases meet quality standards while maintaining the speed benefits of AI generation.

Quality gates should include automated validation of test case syntax, logic verification, and business requirement alignment. These checkpoints prevent low-quality test cases from entering the execution pipeline.

Best Practices for AI Test Case Development

Combining AI with Human Expertise

The most effective testing strategies combine AI capabilities with human insight. AI systems excel at generating comprehensive test cases, while human testers provide context, creativity, and domain knowledge.

Teams should establish clear roles where AI handles repetitive test generation tasks, and humans focus on complex scenarios, exploratory testing, and test strategy development. This division of labor maximizes the strengths of both AI and human testers.

Maintaining Test Case Relevance

AI-generated test cases require ongoing maintenance to remain effective. Teams should regularly review and update test cases based on application changes, user feedback, and bug discovery patterns.

Automated test case management systems can help maintain relevance by tracking test execution results and identifying outdated or redundant test cases. This ongoing maintenance ensures that test suites continue providing value over time.
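One simple signal for redundancy is identical coverage: tests that execute exactly the same lines are candidates for consolidation. The sketch below groups tests by coverage signature. The function name and the sample data are hypothetical, and identical coverage does not prove redundancy, since two tests can assert different things about the same code, so the output is a review list rather than a deletion list.

```python
from collections import defaultdict


def redundant_groups(coverage: dict[str, set[str]]) -> list[list[str]]:
    """Group tests whose covered-line sets are identical."""
    by_signature = defaultdict(list)
    for test, covered in coverage.items():
        by_signature[frozenset(covered)].append(test)
    # Keep only signatures shared by more than one test.
    return sorted(sorted(tests) for tests in by_signature.values() if len(tests) > 1)


# Hypothetical coverage data: test name -> "file:line" entries it executed.
coverage = {
    "test_login_ok": {"auth.py:10", "auth.py:11"},
    "test_login_again": {"auth.py:10", "auth.py:11"},
    "test_logout": {"auth.py:20"},
}

print(redundant_groups(coverage))
```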

Monitoring and Optimization

Continuous monitoring of AI testing performance enables optimization and improvement. Teams should track metrics such as test coverage, bug detection rates, and false positive rates to assess AI effectiveness.

Regular analysis of these metrics helps identify areas for improvement and guides adjustments to AI algorithms and training data. This iterative approach ensures that AI testing systems continuously evolve and improve.
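Two of the metrics mentioned above can be computed from simple counts. The sketch below assumes one common set of definitions: detection rate is the share of known defects that tests caught before release, and false positive rate is the share of defect-free runs that failed anyway. Teams define these differently, so the formulas and parameter names here are assumptions to adapt.

```python
def detection_rate(defects_caught: int, defects_escaped: int) -> float:
    """Share of all known defects that tests caught before release."""
    total = defects_caught + defects_escaped
    return defects_caught / total if total else 0.0


def false_positive_rate(false_alarms: int, correct_passes: int) -> float:
    """Share of defect-free test runs that failed anyway."""
    total = false_alarms + correct_passes
    return false_alarms / total if total else 0.0


# Illustrative numbers only, not measurements from a real project.
print(f"detection rate: {detection_rate(45, 5):.0%}")
print(f"false positive rate: {false_positive_rate(10, 190):.0%}")
```

Tracking both together matters: a generator that flags everything will score well on detection while burying the team in false alarms.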

Common Challenges and Solutions

Data Quality Issues

AI systems depend on high-quality training data to generate effective test cases. Poor data quality leads to ineffective test cases that miss bugs or generate false positives. Teams must invest in data cleaning and validation processes to ensure AI systems receive accurate training data.

Solutions include implementing data validation pipelines, establishing data quality standards, and regularly auditing training datasets. These measures help maintain the quality of AI-generated test cases.
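A data validation step can be as small as a function that filters training records before they reach the model. The sketch below assumes a hypothetical record schema with `id`, `title`, `steps`, and `outcome` fields; the field names and the allowed outcomes are illustrative, not taken from any particular tool.

```python
# Hypothetical schema for historical test records used as training data.
REQUIRED_FIELDS = ("id", "title", "steps", "outcome")
VALID_OUTCOMES = {"pass", "fail"}


def validate_record(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record is usable."""
    # Empty strings and empty lists are treated as missing.
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not record.get(name)]
    outcome = record.get("outcome")
    if outcome and outcome not in VALID_OUTCOMES:
        problems.append(f"unknown outcome: {outcome}")
    return problems


def clean_dataset(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split records into accepted ones and rejected ones with reasons."""
    seen_ids = set()
    accepted, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if record.get("id") in seen_ids:
            problems.append("duplicate id")
        if problems:
            rejected.append((record, problems))
        else:
            seen_ids.add(record["id"])
            accepted.append(record)
    return accepted, rejected


records = [
    {"id": "T1", "title": "Login works", "steps": ["open page", "log in"], "outcome": "pass"},
    {"id": "T1", "title": "Login works", "steps": ["open page", "log in"], "outcome": "pass"},
    {"id": "T2", "title": "", "steps": ["open page"], "outcome": "flaky"},
]
accepted, rejected = clean_dataset(records)
print(len(accepted), "accepted;", len(rejected), "rejected")
```

Keeping the rejection reasons alongside each record makes the periodic dataset audits easier, because recurring problems point to the upstream source that needs fixing.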

Integration Complexity

Integrating AI testing tools with existing development workflows can present technical challenges. Legacy systems may lack APIs or integration points required for AI tool connectivity.

Teams should evaluate integration requirements early in the selection process and choose AI tools that align with their technical infrastructure. Gradual implementation approaches can help minimize disruption while allowing teams to adapt to new workflows.

Skill Gap Management

AI testing tools require new skills and knowledge that many teams may lack. Organizations must invest in training and skill development to maximize the benefits of AI-powered testing.

Training programs should cover AI tool usage, test case review processes, and AI model management. Additionally, teams should establish knowledge sharing practices to distribute AI testing expertise across the organization.

Measuring Success with AI Testing

Key Performance Indicators

Effective measurement requires clear KPIs that demonstrate AI testing value. Important metrics include test coverage percentage, bug detection rates, testing cycle time, and cost per test case.

Teams should establish baseline measurements before AI implementation to accurately assess improvement. Regular measurement and reporting help demonstrate ROI and guide future AI testing investments.

ROI Calculation

Calculating return on investment for AI testing involves measuring cost savings from reduced manual testing effort, improved bug detection, and faster release cycles.

Cost savings calculations should include reduced testing personnel time, decreased bug fix costs, and improved customer satisfaction from higher quality releases. These comprehensive ROI calculations help justify AI testing investments and guide expansion decisions.
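The quantifiable parts of this calculation reduce to simple arithmetic. The sketch below uses the standard ROI formula, (savings minus investment) divided by investment, and estimates savings from tester hours saved and bug fixes avoided. Customer satisfaction is left out because it is hard to express as a single number. All figures are illustrative assumptions, not benchmarks.

```python
def annual_savings(hours_saved: float, hourly_rate: float,
                   bugs_avoided: int, cost_per_bug: float) -> float:
    """Estimate yearly savings from reduced manual effort and fewer escaped bugs."""
    return hours_saved * hourly_rate + bugs_avoided * cost_per_bug


def roi(savings: float, investment: float) -> float:
    """Return ROI as a fraction: (savings - investment) / investment."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (savings - investment) / investment


# Illustrative inputs: 400 tester hours at 50 per hour, 20 bugs avoided
# at 500 each, and a yearly tooling and training cost of 20,000.
savings = annual_savings(400, 50.0, 20, 500.0)
print(f"savings: {savings:.0f}, ROI: {roi(savings, 20_000.0):.0%}")
```

Comparing the result against the baseline measured before adoption keeps the figure honest, since savings estimated without a baseline tend to be optimistic.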

Future Trends in AI Testing

Advanced Machine Learning Integration

Future AI testing tools will incorporate more sophisticated machine learning algorithms that provide deeper insights into software behavior. These systems will predict potential failure points with greater accuracy and generate more targeted test cases.

Advances in deep learning and neural networks will enable AI systems to understand complex software interactions and generate test cases that validate system-wide behavior rather than just individual components.

Autonomous Testing Systems

The future holds promise for fully autonomous testing systems that require minimal human intervention. These systems will automatically generate, execute, and maintain test cases while continuously adapting to software changes.

Autonomous testing will enable continuous validation throughout the development lifecycle, providing immediate feedback on code quality and functionality. This real-time testing capability will revolutionize how teams approach software quality assurance.

Conclusion

AI-powered test case generation represents a fundamental shift in software testing approaches. By leveraging machine learning algorithms and intelligent automation, teams can achieve better test coverage, faster testing cycles, and improved software quality.

Success with AI testing requires careful implementation, ongoing optimization, and the right combination of AI capabilities with human expertise. Teams that embrace these technologies while maintaining focus on quality and user needs will gain significant competitive advantages in software development.

The future of software testing lies in the intelligent combination of AI automation and human creativity. Organizations that invest in AI testing capabilities today will be better positioned to deliver high-quality software in an increasingly competitive market.