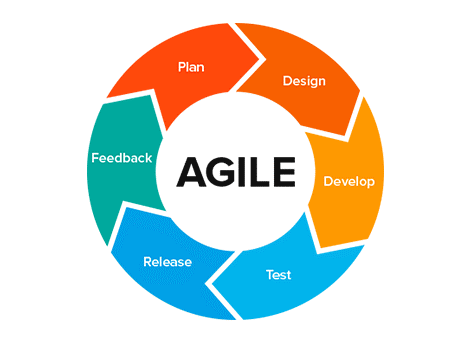

Agile testing breaks from the conventional sequence in which validation waits until developers finish coding; instead, testing happens continuously throughout development. Agile Testing integrates directly into the development cycle, with testers collaborating alongside developers from day one. This approach catches defects early, when they’re cheapest to fix, and helps ensure that every release meets quality standards without sacrificing speed.

Why Agile Testing Matters

Traditional waterfall testing creates a quality bottleneck. After weeks of development, testers receive a massive code dump, find hundreds of bugs, and force lengthy rework cycles. Agile Testing prevents this death march by embedding quality checks into every sprint.

The business impact is measurable: defects caught during development are widely cited as costing a fraction of what production defects cost to fix, with estimates of up to 15x. Teams release faster with higher confidence, and customer feedback is incorporated sprint by sprint rather than waiting for the next major release.

Core Principles of Agile Testing

Agile Testing rests on four foundational principles that guide every activity:

1. Testing Moves Left

Testing starts during requirements discussion, not after coding. Testers help define acceptance criteria and identify edge cases before developers write a single line of code.
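A minimal sketch of this idea, assuming a hypothetical password-reset story: the acceptance criteria and edge cases that testers identify are written down as data before any implementation exists, and the later implementation (`isValidResetEmail`, also hypothetical, with illustrative validation rules) is checked against that same table.

```javascript
// Acceptance criteria agreed during sprint planning, written before the code.
// Each entry pairs an input with the expected outcome (hypothetical rules).
const resetEmailCriteria = [
  { input: 'user@example.com', expected: true, note: 'happy path' },
  { input: '', expected: false, note: 'empty input' },
  { input: 'no-at-sign.example.com', expected: false, note: 'missing @' },
  { input: '  user@example.com  ', expected: true, note: 'surrounding whitespace is trimmed' },
  { input: 'a@b', expected: false, note: 'domain without a dot' },
];

// Implementation written later to satisfy the criteria (illustrative only).
function isValidResetEmail(raw) {
  const email = raw.trim();
  return /^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email);
}

// Run every criterion and return the notes of the ones that fail.
function checkCriteria(fn, criteria) {
  return criteria
    .filter(({ input, expected }) => fn(input) !== expected)
    .map(({ note }) => note);
}

console.log(checkCriteria(isValidResetEmail, resetEmailCriteria)); // []
```

Because the edge cases exist before the code, the developer knows up front that whitespace must be trimmed and that `a@b` must be rejected.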

2. Continuous Feedback Loops

Tests run automatically on every code commit. Results are visible within minutes, not days. Teams adapt immediately based on test outcomes.
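The loop can be pictured as a small runner that a CI job might invoke on each commit: it runs every check, records its duration, and reduces the results to a pass/fail summary. This is a hypothetical illustration of the principle rather than a substitute for a real framework such as Jest; the check names and the simulated failure are made up.

```javascript
// Run async checks one after another, recording outcome and duration for each.
async function runSuite(checks) {
  const results = [];
  for (const [name, fn] of Object.entries(checks)) {
    const start = Date.now();
    try {
      await fn();
      results.push({ name, passed: true, ms: Date.now() - start });
    } catch (err) {
      results.push({ name, passed: false, ms: Date.now() - start, error: err.message });
    }
  }
  const failed = results.filter((r) => !r.passed);
  return { passed: failed.length === 0, total: results.length, failed };
}

// Stand-in checks; real ones would exercise the code touched by the commit.
const checks = {
  'health check responds': async () => {},
  'checkout rejects empty cart': async () => {
    throw new Error('expected 400, got 500'); // simulated regression
  },
};

runSuite(checks).then((summary) => {
  // A CI job would mark the commit red (non-zero exit) when summary.passed is false.
  console.log(summary.passed, summary.failed.map((f) => f.name));
  // → false [ 'checkout rejects empty cart' ]
});
```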

3. Whole Team Ownership

Quality is everyone’s responsibility. Developers write unit tests, testers design integration scenarios, and product owners validate business acceptance.

4. Automation is Essential

Manual testing can’t keep pace with agile delivery. Automated regression suites free testers to focus on exploratory testing and new feature validation.

How Agile Testing is Performed: A Sprint-Based Workflow

Agile Testing unfolds across the sprint timeline with distinct activities at each stage:

| Sprint Phase | Testing Activities | Deliverables |
|---|---|---|
| Sprint Planning | Review user stories, define acceptance criteria, estimate testing effort | Test strategy for sprint |
| Development | Write unit tests, pair test with developers, automate API tests | Automated test scripts |
| Daily Standup | Share test progress, identify blockers, adjust test scope | Updated test backlog |
| Sprint Review | Demo tested features, gather feedback, plan regression | Accepted stories |
| Sprint Retrospective | Evaluate testing process, improve automation, share learnings | Process improvements |

Day-to-Day Execution

During a typical two-week sprint, Agile Testing looks like this:

Week 1:

- Days 1-2: Testers review sprint backlog, clarify acceptance criteria with product owners

- Days 3-5: As developers complete user stories, testers immediately validate them

- Days 3-5: Automate API tests for completed endpoints using tools like Apidog

Week 2:

- Days 6-8: Execute integration tests across completed features

- Days 9-10: Perform end-to-end scenario testing and exploratory testing

- Final day: Run full regression suite and prepare for release

This rhythm prevents the "testing crunch" at sprint end and maintains steady quality throughput.

Agile Testing Automation in Action

Here’s how Agile Testing automation works in practice:

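```javascript
// Jest test for a user story: "As a user, I can reset my password"
// `api` (an HTTP client bound to the app under test) and `mockEmailService`
// (a test double injected into the app) come from a hypothetical helper module.
const { api, mockEmailService } = require('./test-helpers');

describe('Password Reset Flow', () => {
  // Clear the email mock so one test's result cannot leak into the next
  beforeEach(() => {
    mockEmailService.sent = false;
  });

  // Test written during sprint development
  it('sends reset email for valid user', async () => {
    const response = await api.post('/auth/reset', {
      email: 'test@example.com'
    });

    // Oracle: status code and email queued
    expect(response.status).toBe(200);
    expect(mockEmailService.sent).toBe(true);
  });

  it('returns 200 but sends no email for non-existent user', async () => {
    const response = await api.post('/auth/reset', {
      email: 'nonexistent@example.com'
    });

    // Security oracle: don't reveal whether the user exists
    expect(response.status).toBe(200); // Same response as for a valid user
    expect(mockEmailService.sent).toBe(false); // But no email is sent
  });
});
```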

This test becomes part of the automated suite that runs on every commit, providing continuous feedback throughout the sprint.
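For context, here is one way the endpoint under test could behave. This is a hypothetical, framework-free handler, assuming an in-memory set of registered users and an injected email service; it shows why the second test expects a 200 even for an unknown address: the response is identical either way, so callers cannot probe which emails are registered.

```javascript
// Hypothetical reset handler: known and unknown emails get the same response.
function createResetHandler({ users, emailService }) {
  return async function handleReset(body) {
    const email = String(body?.email ?? '').trim().toLowerCase();
    if (users.has(email)) {
      // Only registered users actually receive a reset email.
      await emailService.sendReset(email);
    }
    // Identical status and message in both branches, so callers cannot enumerate accounts.
    return { status: 200, body: { message: 'If the account exists, a reset email has been sent.' } };
  };
}

// Minimal test double with the same `sent` flag the Jest tests inspect.
const mockEmailService = {
  sent: false,
  async sendReset() {
    this.sent = true;
  },
};

const handleReset = createResetHandler({
  users: new Set(['test@example.com']),
  emailService: mockEmailService,
});

handleReset({ email: 'nonexistent@example.com' }).then((res) => {
  console.log(res.status, mockEmailService.sent); // 200 false
});
```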

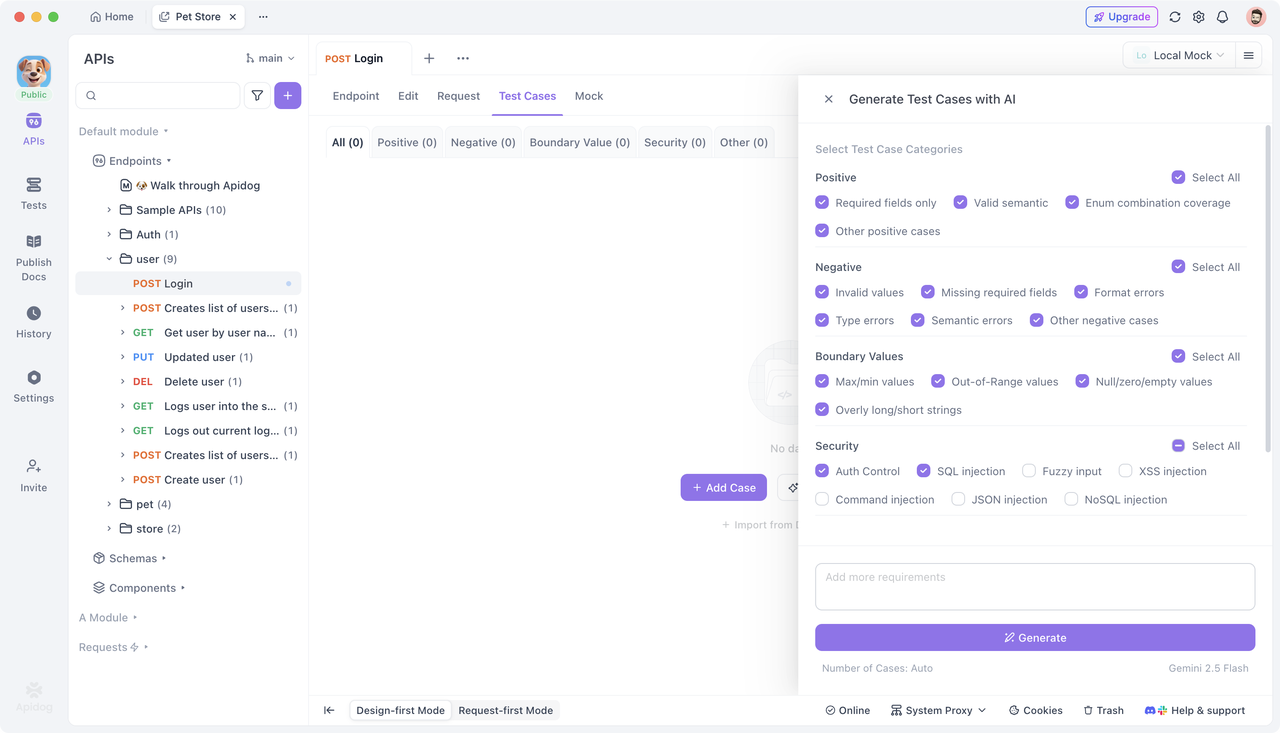

How Apidog Supports Agile API Testing

API testing is the backbone of modern agile testing because most applications communicate through APIs. Apidog reduces much of the manual effort that traditionally bogs down agile teams.

Sprint-Ready Test Creation

On day one of a sprint, import your API specification into Apidog. It automatically generates test cases for:

- Positive scenarios (happy paths)

- Negative scenarios (invalid inputs)

- Boundary values (edge cases)

- Security checks (injection attempts)
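The four categories can be illustrated with a small generator that derives positive, negative, boundary, and injection payloads from one field's constraints. This is a hypothetical sketch of the general technique, not Apidog's actual generation logic; the `username` field, its length limits, and the allowed-character rule are assumptions.

```javascript
// Derive test payloads for one string field from hypothetical constraints.
function generateStringCases(field, { minLength, maxLength }) {
  const str = (n) => ({ [field]: 'a'.repeat(n) });
  return [
    { category: 'positive', payload: str(minLength + 1), expectValid: true },
    { category: 'negative', payload: { [field]: 12345 }, expectValid: false }, // wrong type
    { category: 'negative', payload: {}, expectValid: false }, // missing field
    { category: 'boundary', payload: str(minLength), expectValid: true },
    { category: 'boundary', payload: str(maxLength), expectValid: true },
    { category: 'boundary', payload: str(minLength - 1), expectValid: false },
    { category: 'boundary', payload: str(maxLength + 1), expectValid: false },
    { category: 'security', payload: { [field]: "a' OR '1'='1" }, expectValid: false },
  ];
}

// Stand-in for the API's input rules (assumed: lowercase letters, digits, underscore).
function isValidString(payload, field, { minLength, maxLength }) {
  const v = payload[field];
  return typeof v === 'string' && v.length >= minLength && v.length <= maxLength
    && /^[a-z0-9_]+$/.test(v);
}

const rules = { minLength: 3, maxLength: 20 };
const cases = generateStringCases('username', rules);
const mismatches = cases.filter((c) => isValidString(c.payload, 'username', rules) !== c.expectValid);
console.log(cases.length, mismatches.length); // 8 0
```

Against a real API, `expectValid` would map to an expected status code (for example 201 versus 400) instead of a local validator call.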

A user story for "create user" instantly becomes executable tests without manual scripting:

```text
# Apidog auto-generates from OpenAPI spec
Test: POST /api/users - Valid Data
Given: Valid user payload
When: Send request
Oracle 1: Status 201
Oracle 2: User ID returned in response
Oracle 3: Database contains new record
Oracle 4: Response time < 500ms
```
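The four oracles in this test case translate directly into assertions. The sketch below checks them against a fake in-memory endpoint; `createUser`, the `db` Map, and applying the 500 ms budget to an in-process call are illustrative assumptions, and a real test would time an actual HTTP request and query the real database.

```javascript
// Fake in-memory "database" and handler standing in for POST /api/users (hypothetical).
const db = new Map();
let nextId = 1;

async function createUser(payload) {
  const id = nextId++;
  db.set(id, { id, ...payload });
  return { status: 201, body: { id } };
}

// Evaluate the four oracles and return a list of failure messages.
async function checkCreateUserOracles(payload, maxMs = 500) {
  const start = Date.now();
  const res = await createUser(payload);
  const elapsed = Date.now() - start;

  const failures = [];
  if (res.status !== 201) failures.push(`Oracle 1: expected 201, got ${res.status}`);
  // Oracle 3 needs the ID from Oracle 2, so it is only checked when an ID exists.
  if (res.body?.id === undefined) failures.push('Oracle 2: no user ID in response');
  else if (!db.has(res.body.id)) failures.push('Oracle 3: record not found in database');
  if (elapsed >= maxMs) failures.push(`Oracle 4: took ${elapsed} ms`);
  return failures;
}

checkCreateUserOracles({ name: 'Ada', email: 'ada@example.com' }).then((failures) => {
  console.log(failures); // []
});
```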

Continuous Test Execution

Configure Apidog to run tests automatically:

- On every pull request: Catch breaking changes before merge

- Nightly regression: Full suite runs against development branch

- Pre-deployment smoke tests: Quick validation of critical paths

Results appear in Slack or email within minutes, fitting neatly into Agile Testing’s rapid feedback cycles.
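One common way to serve these three triggers from a single suite is to tag tests and select them by tag. The sketch below assumes a hypothetical tagging scheme (`smoke`, `regression`, `slow`) and made-up trigger names; it is not Apidog's configuration format.

```javascript
// Hypothetical test catalogue: tags describe when each test should run.
const tests = [
  { name: 'login succeeds', tags: ['smoke', 'regression'] },
  { name: 'create user returns 201', tags: ['smoke', 'regression'] },
  { name: 'password reset edge cases', tags: ['regression'] },
  { name: 'bulk import 10k users', tags: ['regression', 'slow'] },
];

// Map each pipeline trigger to a selection rule.
const selectors = {
  pullRequest: (t) => t.tags.includes('regression') && !t.tags.includes('slow'), // fast check before merge
  nightly: (t) => t.tags.includes('regression'), // full suite, slow tests included
  preDeploy: (t) => t.tags.includes('smoke'), // critical paths only
};

function selectTests(trigger) {
  const rule = selectors[trigger];
  if (!rule) throw new Error(`Unknown trigger: ${trigger}`);
  return tests.filter(rule).map((t) => t.name);
}

console.log(selectTests('preDeploy')); // [ 'login succeeds', 'create user returns 201' ]
```

Keeping one catalogue with tags, rather than three separate suites, means a new test only needs the right tags to join every pipeline that should run it.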

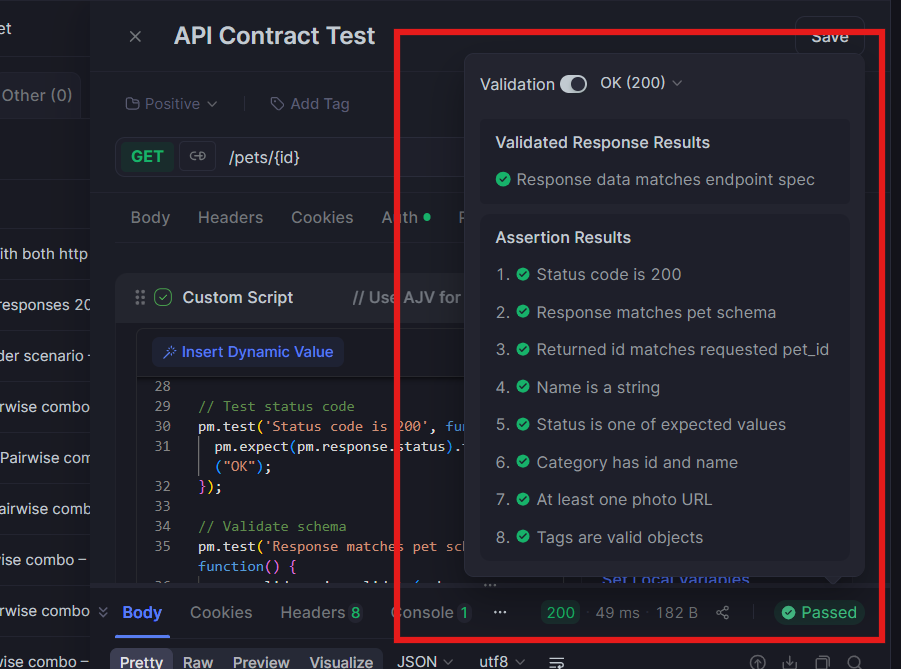

Test-Driven API Development

In true agile fashion, developers can use Apidog to define API contracts first, then write code to satisfy the tests. The API specification becomes the test oracle, ensuring implementation matches design from day one.
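A minimal illustration of the specification acting as the oracle: a response is checked against a declared contract of status, required fields, and types. The contract format here is a deliberately simplified, hypothetical stand-in for an OpenAPI schema; real projects would use a full schema validator.

```javascript
// Simplified contract for a "create user" response (hypothetical format, not OpenAPI).
const createUserContract = {
  status: 201,
  required: { id: 'number', email: 'string', createdAt: 'string' },
};

// Return every way the actual response deviates from the contract.
function contractViolations(contract, response) {
  const violations = [];
  if (response.status !== contract.status) {
    violations.push(`status ${response.status} != ${contract.status}`);
  }
  for (const [field, type] of Object.entries(contract.required)) {
    if (!(field in response.body)) {
      violations.push(`missing field "${field}"`);
    } else if (typeof response.body[field] !== type) {
      violations.push(`"${field}" is ${typeof response.body[field]}, expected ${type}`);
    }
  }
  return violations;
}

// Before the real implementation exists, a stub response fails the contract.
console.log(contractViolations(createUserContract, { status: 200, body: { id: '42' } }));
```

The stub response yields four violations (wrong status, wrong `id` type, and two missing fields); in a contract-first workflow the endpoint is done when this list is empty.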

Collaborative Quality

Product owners can review API test scenarios in Apidog’s visual interface without reading code. This transparency ensures agile testing truly reflects business acceptance criteria, not just technical correctness.

Frequently Asked Questions

Q1: Can Agile Testing work without automation?

Ans: It can, but it’s unsustainable. Manual testing creates bottlenecks that prevent rapid releases. Agile Testing relies on automation for regression, freeing testers for exploratory work that requires human judgment.

Q2: How do testers keep up with daily code changes in Agile Testing?

Ans: Testers work alongside developers, testing features as they’re built. Shift-left testing means testers clarify requirements early and validate incrementally, not in a big batch at sprint end.

Q3: What metrics should we track for Agile Testing success?

Ans: Focus on sprint metrics: defect escape rate, test automation coverage, story acceptance rate, and time-to-fix. Avoid vanity metrics like total test count. Quality over quantity defines Agile Testing.
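As a worked example, these metrics can be computed from simple sprint counts. The formulas use common definitions (for instance, defect escape rate as production defects divided by all defects found), which teams often adapt, and time-to-fix is taken here as a mean; the sprint numbers are invented purely for illustration.

```javascript
// Common definitions of four agile testing metrics; the input numbers are made up.
function sprintMetrics({ defectsInSprint, defectsInProduction, automatedTests, totalTests,
  storiesAccepted, storiesCompleted, fixHours }) {
  const totalDefects = defectsInSprint + defectsInProduction;
  // Percentage rounded to one decimal place; 0 when there is nothing to divide by.
  const pct = (num, den) => (den === 0 ? 0 : Math.round((num / den) * 1000) / 10);
  return {
    defectEscapeRate: pct(defectsInProduction, totalDefects),
    automationCoverage: pct(automatedTests, totalTests),
    storyAcceptanceRate: pct(storiesAccepted, storiesCompleted),
    meanTimeToFixHours: fixHours.length === 0
      ? 0
      : fixHours.reduce((sum, h) => sum + h, 0) / fixHours.length,
  };
}

console.log(sprintMetrics({
  defectsInSprint: 18, defectsInProduction: 2,
  automatedTests: 240, totalTests: 300,
  storiesAccepted: 9, storiesCompleted: 10,
  fixHours: [2, 4, 6, 12],
}));
```

With these inputs the sketch reports a 10% defect escape rate (2 of 20 defects), 80% automation coverage, 90% story acceptance, and a mean time-to-fix of 6 hours.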

Q4: How does Apidog integrate with our existing CI/CD pipeline?

Ans: Apidog provides CLI tools and webhook integrations for Jenkins, GitHub Actions, and GitLab CI. Add one line to your pipeline config to run API tests automatically on every commit, with results posted directly to your team’s communication channels.

Q5: Who owns test automation in Agile Testing?

Ans: The whole team. Developers write unit tests, testers design integration scenarios, and everyone contributes to the automation suite. Apidog’s visual interface makes API test automation accessible to all skill levels, breaking down traditional silos.

Conclusion

Agile testing transforms quality from a final gate into a continuous practice that accelerates delivery rather than slowing it down. By embedding testing into every sprint activity, automating repetitive checks, and making quality a team responsibility, organizations release faster with fewer defects.

The key is starting small. Choose one user story in your next sprint and apply Agile testing principles: define acceptance criteria as executable tests, automate them with Apidog, and run them continuously. Measure the reduction in escaped defects and time spent on regression. This data will build the case for expanding agile testing across your organization.

Quality isn’t achieved through massive test phases at project end—it’s built incrementally, day by day, through disciplined agile testing practices that treat every story as an opportunity to prevent bugs rather than find them.