AgenticSeek: A Complete Guide to Local, Private AI Productivity

AI assistants are more capable than ever, but privacy, data control, and cloud dependency remain major concerns for technical teams. AgenticSeek is a fully local AI assistant that brings voice interaction, web automation, and code generation to your own hardware, with no cloud service required for its core AI functions.

If you’re an API developer, backend engineer, or a product-minded tech lead, this guide will show you exactly how to install, configure, and leverage AgenticSeek for everything from coding support to workflow automation—while keeping your data 100% private.


Why AgenticSeek Stands Out for Developers

True Local Operation and Data Privacy

AgenticSeek’s core advantage is its 100% local architecture:

- No data leaves your device

- No internet required for core AI functions

- No subscription or API fees for local use

- Full control over your files, code, and conversations

This makes AgenticSeek ideal for teams handling sensitive code or proprietary data, where cloud-based AI tools simply aren’t an option.

Multi-Modal AI: Beyond Just Chat

AgenticSeek is far more than a chatbot. It offers:

- Autonomous web browsing: Automate research, extract data, fill forms

- Code generation & execution: Write runnable code in Python, Go, Java, C, and more

- Task planning: Break down complex projects with intelligent agent routing

- Voice interaction: Natural speech-to-text and text-to-speech

- Local file management: Read, write, organize, and analyze documents

Specialized Agent Routing

AgenticSeek automatically routes your requests to the right specialist agent—whether you need code written, web data scraped, or tasks scheduled.

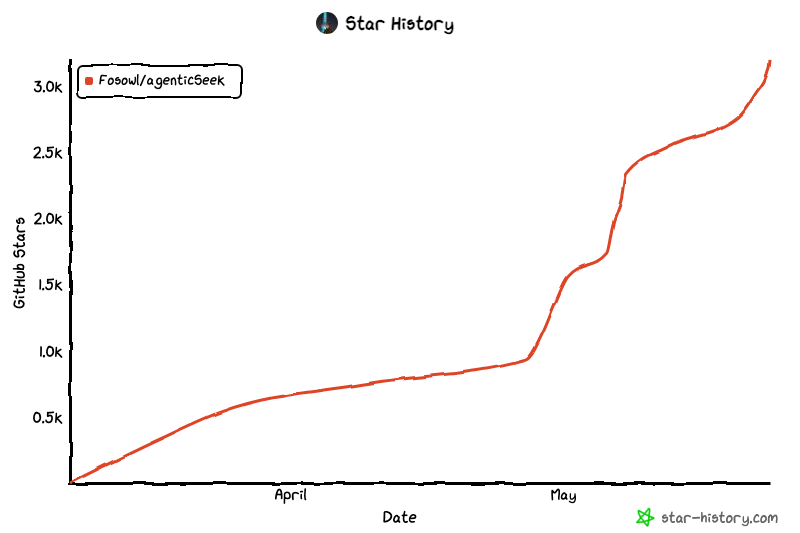

Open-Source and Actively Maintained

Explore its growth and source code:

- AgenticSeek on GitHub (https://github.com/Fosowl/agenticSeek): Fully local, no third-party APIs, no monthly fees.


System Requirements and Hardware Recommendations

Before you begin, ensure your environment meets these guidelines:

Minimum Requirements

- OS: Linux, macOS, Windows

- Python: 3.10 (needed for dependency compatibility)

- Chrome Browser: Latest version

- Docker: For supporting services

- RAM: 16GB+ recommended
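As a quick pre-flight check, the requirements above can be verified with a few lines of Python. This is only a sketch: the function name check_environment is made up for this guide, and Chrome is left out because its executable name differs between platforms.

```python
import shutil
import sys

REQUIRED_PYTHON = (3, 10)  # version named in this guide


def check_environment(tools=("docker", "git")):
    """Return a dict mapping each requirement to True/False."""
    results = {"python_3_10": sys.version_info[:2] == REQUIRED_PYTHON}
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH
        results[tool] = shutil.which(tool) is not None
    return results


for name, ok in check_environment().items():
    print(f"{name}: {'ok' if ok else 'missing'}")
```

Run it with the interpreter you plan to use for the virtual environment, since the Python check reports on whichever interpreter executes the script.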

LLM Hardware Needs

| Model Size | GPU VRAM | Performance Recommendation |
|---|---|---|
| 7B | 8GB | Not recommended (unstable performance) |
| 14B | 12GB (RTX 3060) | Usable for simple tasks |
| 32B | 24GB (RTX 4090) | Good all-round performance |
| 70B+ | 48GB+ (M2 Ultra) | Excellent, ideal for power users |
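To make the table easier to apply, here is a small lookup that returns the largest model tier a given amount of VRAM can hold. The thresholds are copied from the table; the names TIERS and largest_model_for are illustrative and not part of AgenticSeek.

```python
# (minimum VRAM in GB, model size, note) copied from the table above
TIERS = [
    (48, "70B+", "Excellent, ideal for power users"),
    (24, "32B", "Good all-round performance"),
    (12, "14B", "Usable for simple tasks"),
    (8, "7B", "Not recommended, unstable performance"),
]


def largest_model_for(vram_gb):
    """Return (size, note) for the largest tier that fits, or None."""
    for min_vram, size, note in TIERS:
        if vram_gb >= min_vram:
            return size, note
    return None
```

For example, largest_model_for(16) falls into the 14B tier, and anything below 8GB returns None.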

Recommended Models

- Deepseek R1: Top choice for reasoning and tool use

- Qwen, Llama: Strong alternatives for general use

Step-by-Step Installation Guide

1. Clone & Set Up the Project

git clone https://github.com/Fosowl/agenticSeek.git

cd agenticSeek

mv .env.example .env

2. Create a Python Virtual Environment

Python 3.10 is required for dependency compatibility.

python3 -m venv agentic_seek_env

source agentic_seek_env/bin/activate

3. Install Dependencies

Linux/macOS (Auto Install)

./install.sh

Windows

./install.bat

Manual Installation (if needed)

Linux:

sudo apt update

sudo apt install -y alsa-utils portaudio19-dev python3-pyaudio libgtk-3-dev libnotify-dev libgconf-2-4 libnss3 libxss1

sudo apt install -y chromium-chromedriver

pip3 install -r requirements.txt

macOS:

brew update

brew install --cask chromedriver

brew install portaudio

python3 -m pip install --upgrade pip

pip3 install --upgrade setuptools wheel

pip3 install -r requirements.txt

Windows:

pip install pyreadline3

pip install pyaudio

pip3 install -r requirements.txt

Note: On Windows, download ChromeDriver manually and add it to your PATH.

4. Start Your Local LLM Provider

Ollama is the simplest option. The serve command keeps running in the foreground, so run the pull command from a second terminal:

ollama serve

ollama pull deepseek-r1:14b # Adjust for your hardware
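Before moving on, it can help to confirm that the Ollama server is up and the model has been pulled. The sketch below queries Ollama's /api/tags endpoint, which lists locally available models. The helper names are made up for this guide, and the default address assumes Ollama's standard port 11434.

```python
import json
import urllib.request


def fetch_local_models(base_url="http://127.0.0.1:11434", timeout=5):
    """Ask a running Ollama server which models are pulled (GET /api/tags)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


def has_model(tags_payload, model):
    """True if `model` appears in an /api/tags response payload."""
    return any(m.get("name") == model for m in tags_payload.get("models", []))
```

With the server running, has_model(fetch_local_models(), "deepseek-r1:14b") should be true once the pull has finished. If the server is not reachable, fetch_local_models raises a URLError.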

Configuration: Fine-Tuning AgenticSeek

The config.ini File

Here’s what the core configuration might look like:

[MAIN]

is_local = True

provider_name = ollama

provider_model = deepseek-r1:14b

provider_server_address = 127.0.0.1:11434

agent_name = Jarvis

recover_last_session = True

save_session = True

speak = True

listen = False

work_dir = /Users/yourname/Documents/ai_workspace

jarvis_personality = False

languages = en zh

[BROWSER]

headless_browser = True

stealth_mode = True

Key Options Explained

- is_local: Run locally (True) or via API (False)

- provider_name / provider_model: Select your LLM provider and model

- work_dir: Directory for the AI to read/write files

- agent_name: Set your assistant’s wake word

- speak / listen: Enable text-to-speech (speak) and speech-to-text (listen) as desired

- headless_browser / stealth_mode: Run the browser without a visible window, and reduce the chance that sites detect automated browsing
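To sanity-check a config.ini before launching, the options above can be read with Python's standard configparser. This is a minimal sketch, not AgenticSeek's own loader. SAMPLE is a shortened copy of the example config, and load_settings is a name invented here.

```python
import configparser

SAMPLE = """
[MAIN]
is_local = True
provider_name = ollama
provider_model = deepseek-r1:14b
provider_server_address = 127.0.0.1:11434
speak = True
listen = False
work_dir = /Users/yourname/Documents/ai_workspace

[BROWSER]
headless_browser = True
stealth_mode = True
"""


def load_settings(text):
    """Parse config.ini text and return the options discussed above."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    main, browser = parser["MAIN"], parser["BROWSER"]
    return {
        "is_local": main.getboolean("is_local"),
        "provider": (main["provider_name"], main["provider_model"]),
        "work_dir": main["work_dir"],
        "speak": main.getboolean("speak"),
        "listen": main.getboolean("listen"),
        "headless_browser": browser.getboolean("headless_browser"),
        "stealth_mode": browser.getboolean("stealth_mode"),
    }


settings = load_settings(SAMPLE)
print(settings["provider"])
```

A missing key raises KeyError and a non-boolean value for a flag raises ValueError, so typos surface before the assistant starts.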

Workspace Setup

Choose a dedicated directory for AgenticSeek’s operations:

mkdir ~/Documents/agentic_workspace

Then set work_dir in your config.ini to the full path of this directory, in the same form as the sample config above.
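If you prefer to script this step, the sketch below creates the directory and writes its full path into work_dir. It assumes config.ini has a [MAIN] section as in the sample above. The function name prepare_workspace is hypothetical.

```python
import configparser
from pathlib import Path


def prepare_workspace(workspace, config_path):
    """Create the workspace directory and point work_dir at it."""
    workspace = Path(workspace).expanduser().resolve()
    workspace.mkdir(parents=True, exist_ok=True)

    parser = configparser.ConfigParser(interpolation=None)
    parser.read(config_path)
    if not parser.has_section("MAIN"):
        parser.add_section("MAIN")
    parser["MAIN"]["work_dir"] = str(workspace)
    # Note: configparser drops comments when it rewrites the file.
    with open(config_path, "w") as fh:
        parser.write(fh)
    return workspace
```

A typical call would be prepare_workspace("~/Documents/agentic_workspace", "config.ini"). Keep a backup of config.ini if it contains comments you want to preserve.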

Getting Started: First Run

1. Start Supporting Services

source agentic_seek_env/bin/activate

sudo ./start_services.sh # Linux/macOS

# or

start_services.cmd # Windows

2. Launch the Interface

Command Line:

python3 cli.py

Tip: Set headless_browser = False and speak = True for CLI voice responses.

Web Interface:

python3 api.py

Then open http://localhost:3000/.

Tip: Use headless_browser = True for better web performance.
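If the page does not load, a quick way to tell whether anything is listening on port 3000 is a plain TCP connection test. This sketch uses only the standard library, and port_is_open is an illustrative name.

```python
import socket


def port_is_open(host="localhost", port=3000, timeout=2.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

A False result suggests the web interface has not started yet, which usually points back to the supporting services or api.py.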

Core Capabilities for API-Focused Teams

Autonomous Web Browsing

AgenticSeek can research topics, extract data, and automate form submissions.

Best practice: be explicit when you want web research, and avoid vague queries. For example:

- “Search the web for the top 10 REST API frameworks and save a summary to frameworks.txt”

Code Generation & Execution

Generate, run, and debug code in multiple languages:

- “Write a Python script to test a REST API endpoint and log the response times.”

- “Create a Go program for a simple CRUD API.”

AgenticSeek writes, tests, and saves code directly in your workspace.
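For a sense of scale, a script answering the first prompt could be as small as the one below. This is a hand-written sketch of that kind of script, not actual AgenticSeek output. The function names are made up, and the URL you pass in is up to you.

```python
import time
import urllib.request


def time_request(url, timeout=10):
    """Return (HTTP status, elapsed seconds) for one GET request."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        resp.read()  # include the body download in the timing
        status = resp.status
    return status, time.perf_counter() - start


def log_response_times(url, attempts=3, fetch=time_request):
    """Call `fetch` repeatedly and return the list of timings in ms."""
    timings = []
    for i in range(attempts):
        status, elapsed = fetch(url)
        print(f"attempt {i + 1}: status={status} time={elapsed * 1000:.1f} ms")
        timings.append(elapsed * 1000)
    return timings
```

Note that urlopen raises HTTPError for 4xx and 5xx responses, so a real test script would likely catch that and log the failure instead of stopping.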

Complex Task Planning

Delegate end-to-end workflows:

- “Plan a developer onboarding checklist. Research best practices and save to onboarding.txt.”

AgenticSeek will research, compile, and organize the content.

File and Data Management

- Analyze logs, process CSVs, or organize project directories with simple commands.

- Example: “Read errors.log, summarize the most common issues, and save as summary.txt.”

Advanced Features for Power Users

Voice Interaction

To enable hands-free operation:

speak = True

listen = True

agent_name = Friday

- Say the wake word (e.g., “Friday”), speak your request, and end with a confirmation phrase such as “do it,” “run,” or “proceed.”

Multi-Language Support

Set multiple languages for text-to-speech:

languages = en zh fr es

The first language listed is the default.

Session Management

Keep your context across sessions:

recover_last_session = True

save_session = True

Best Practices for Effective Usage

- Be specific: “Search the web for GraphQL API security best practices and save to graphql_security.txt”

- Specify file operations: “Save the results to analysis.txt”

- Break down tasks: For multi-step requests, provide clear, sequential instructions.

Sample Workflow for API Teams:

- “Search the web for latest API authentication methods.”

- “Summarize and save to api_auth_methods.txt.”

- “Generate a Python script to test OAuth2 flows.”

- “Analyze logs in logs/ and report error trends.”

Troubleshooting Common Issues

- ChromeDriver version mismatch: Check your Chrome version, download the matching ChromeDriver, and replace your local driver.

- No connection adapters: Ensure your provider_server_address includes http://

- SearxNG base URL error: Rename .env.example to .env or set SEARXNG_BASE_URL

- Performance issues: Upgrade to a larger model (32B+), increase RAM/VRAM, and close other GPU-intensive apps.
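For the ChromeDriver mismatch, what matters is that Chrome and ChromeDriver share the same major version. The sketch below compares the text each tool prints for its version flag. The helper names are invented, and the sample strings in the usage note are hypothetical values, so paste in your own output.

```python
import re


def major_version(version_output):
    """Extract the major version from text like 'ChromeDriver 124.0.6367.91'."""
    match = re.search(r"(\d+)\.\d+\.\d+\.\d+", version_output)
    if match is None:
        raise ValueError(f"no version number in {version_output!r}")
    return int(match.group(1))


def versions_match(chrome_output, driver_output):
    """True when Chrome and ChromeDriver share a major version."""
    return major_version(chrome_output) == major_version(driver_output)
```

For instance, versions_match("Google Chrome 124.0.6367.91", "ChromeDriver 123.0.6312.86") is false, which would mean the driver needs replacing.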

Advanced Configuration

Switching LLM Providers

Ollama:

provider_name = ollama

provider_model = deepseek-r1:32b

provider_server_address = 127.0.0.1:11434

LM Studio:

provider_name = lm-studio

provider_model = your-model-name

provider_server_address = http://127.0.0.1:1234

Remote Server:

provider_name = server

provider_model = deepseek-r1:70b

provider_server_address = your-server-ip:3333

Using API Providers

If local hardware is insufficient:

is_local = False

provider_name = deepseek

provider_model = deepseek-chat

Set your API key:

export DEEPSEEK_API_KEY="your-api-key-here"
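A missing key is easy to overlook, so a launcher script might verify it before starting. A minimal sketch follows. The name require_api_key is hypothetical, and the variable name comes from the export line above.

```python
import os


def require_api_key(name="DEEPSEEK_API_KEY", environ=os.environ):
    """Return the key from the environment or raise a clear error."""
    key = environ.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it before starting")
    return key
```

Reading the key from the environment keeps it out of config.ini, so the config file can be shared or committed without exposing the secret.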

Maximizing Performance

- GPU/RAM: Close other applications to free resources.

- Model selection: Start with a smaller model; upgrade as needs grow.

- Workspace organization: Use a clear folder structure for work_dir.

- Session management: Enable save/recover for long projects.

- Voice commands: Use in a quiet environment for best accuracy.

Conclusion: Local AI That Puts You in Control

AgenticSeek offers developer teams a private, robust alternative to cloud-based AI assistants—empowering you to automate research, code, and workflow tasks with full data control.

As you integrate AgenticSeek into your daily workflows, you’ll discover new ways to boost productivity, automate routine tasks, and keep your sensitive data secure. And for API teams who want end-to-end productivity—consider combining AgenticSeek’s automation with Apidog’s all-in-one API platform for documentation, testing, and seamless team collaboration.

The future of AI assistance is local, private, and developer-driven. With AgenticSeek, you’re in charge.